
Numba

Numba is an open-source JIT compiler that translates a subset of Python and NumPy into fast machine code using LLVM, via the llvmlite Python package. It offers a range of options for parallelising Python code for CPUs and GPUs, often with only minor code changes. Numba was started by Travis Oliphant in 2012 and has since been under active development at its repository on GitHub, with frequent releases. The project is driven by developers at Anaconda, Inc., with support from DARPA, the Gordon and Betty Moore Foundation, Intel, Nvidia and AMD, and a community of contributors on GitHub.

Example: Numba can be used by simply applying the numba.jit decorator to a Python function that does numerical computations:

    import numba
    import random

    @numba.jit
    def monte_carlo_pi(n_samples: int) -> float:
        """Monte Carlo"""
        acc = 0  # number of sample points that fall inside the unit quarter-circle
        for i in range(n_samples):
            x = random.random()
            y = random.random()
            if (x**2 + y**2) < 1.0:
                acc += 1
        # the fraction of hits approximates pi / 4
        return 4.0 * acc / n_samples

    >>> monte_carlo_pi(1000000)
    3.14

Numba's webs ...

Python (programming language)

Python is a high-level, general-purpose programming language. Its design philosophy emphasizes code readability with the use of significant indentation. Python is dynamically type-checked and garbage-collected. It supports multiple programming paradigms, including structured (particularly procedural), object-oriented and functional programming. It is often described as a "batteries included" language due to its comprehensive standard library. Guido van Rossum began working on Python in the late 1980s as a successor to the ABC programming language, and he first released it in 1991 as Python 0.9.0. Python 2.0 was released in 2000. Python 3.0, released in 2008, was a major revision not completely backward-compatible with earlier versions. Python 2.7.18, released in 2020, was the last release of ...

NumPy

NumPy is a library for the Python programming language, adding support for large, multi-dimensional arrays and matrices, along with a large collection of high-level mathematical functions to operate on these arrays. The predecessor of NumPy, Numeric, was originally created by Jim Hugunin with contributions from several other developers. In 2005, Travis Oliphant created NumPy by incorporating features of the competing Numarray into Numeric, with extensive modifications. NumPy is open-source software and has many contributors. NumPy is fiscally sponsored by NumFOCUS.

History (matrix-sig): The Python programming language was not originally designed for numerical computing, but attracted the attention of the scientific and engineering community early on. In 1995 the special interest group (SIG) matrix-sig was founded with the aim of defining an array computing package; among its members was Python designer and maintainer Guido van Rossum, who extended Python ...
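As a brief illustration of the array support and mathematical functions mentioned in the NumPy entry, the following minimal sketch builds a small two-dimensional array and applies an element-wise function, an axis reduction, and a matrix product. The variable names are illustrative only, and the example assumes NumPy is installed.

```python
import numpy as np

# A 2-D array (matrix) with 2 rows and 3 columns: [[0, 1, 2], [3, 4, 5]].
a = np.arange(6, dtype=np.float64).reshape(2, 3)

# Element-wise mathematical functions operate on the whole array at once.
roots = np.sqrt(a)

# Reductions along an axis: summing over rows gives one value per column.
col_sums = a.sum(axis=0)

# Matrix product of a (2x3) with its transpose (3x2) yields a 2x2 matrix.
gram = a @ a.T

print(col_sums)
print(gram)
```

The same operations written as explicit Python loops would be considerably longer and usually slower, which is the main motivation for expressing numerical work in terms of whole arrays.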

Gordon and Betty Moore Foundation

The Gordon and Betty Moore Foundation is an American foundation established by Intel co-founder Gordon E. Moore and his wife Betty I. Moore in September 2000 to support scientific discovery, environmental conservation, patient care improvements and preservation of the character of the San Francisco Bay Area. As outlined in the Statement of Founder's Intent, the foundation's aim is to tackle large, important issues at a scale where it can achieve significant and measurable impacts. According to the OECD, the Gordon and Betty Moore Foundation provided US$60 million for development in 2020 by means of grants.

Funded projects:

Astronomy:
* All Sky Automated Survey for SuperNovae (ASAS-SN)
* Event Horizon Telescope (EHT)
* Hydrogen Epoch of Reionization Array (HERA)
* South Pole Telescope (SPT)
* Thirty Meter Telescope (TMT)
* W. M. Keck Observatory
* BICEP and Keck Array

Biology:
* Advanced Imaging Center (AIC), Janelia Research Campus
* Center for Ocean Solutions
* Community C ...

Cython

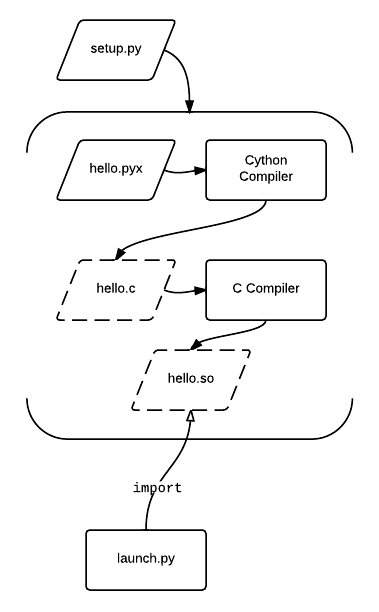

Cython is a superset of the programming language Python, which allows developers to write Python code (with optional, C-inspired syntax extensions) that yields performance comparable to that of C. Cython is a compiled language that is typically used to generate CPython extension modules. Annotated Python-like code is compiled to C and then automatically wrapped in interface code, producing extension modules that can be loaded and used by regular Python code using the import statement, but with significantly less computational overhead at run time. Cython also facilitates wrapping independent C or C++ code into Python-importable modules. Cython is written in Python and C and works on Windows, macOS, and Linux, producing C source files compatible with CPython 2.6, 2.7, and 3.3 and later versions. The Cython source code that Cython compiles (to C) can use both Python 2 and Python 3 syntax, defaulting to Python 2 syntax in Cython 0.x and Python 3 syntax in Cython 3.x. The d ...

Heterogeneous System Architecture

Heterogeneous System Architecture (HSA) is a cross-vendor set of specifications that allow for the integration of central processing units and graphics processors on the same bus, with shared memory and tasks. The HSA is being developed by the HSA Foundation, which includes (among many others) AMD and ARM. The platform's stated aim is to reduce communication latency between CPUs, GPUs and other compute devices, and make these various devices more compatible from a programmer's perspective, relieving the programmer of the task of planning the moving of data between devices' disjoint memories (as must currently be done with OpenCL or CUDA). CUDA and OpenCL as well as most other fairly advanced programming languages can use HSA to increase their execution performance. Heterogeneous computing is widely used in system-on-chip devices such as tablets, smartphones, other mobile devices, and video game consoles. HSA allows programs to use the graphics processor for floating point cal ...

ROCm

ROCm is an Advanced Micro Devices (AMD) software stack for graphics processing unit (GPU) programming. ROCm spans several domains, including general-purpose computing on graphics processing units (GPGPU), high performance computing (HPC), and heterogeneous computing. It offers several programming models: HIP (GPU-kernel-based programming), OpenMP (directive-based programming), and OpenCL. ROCm is free, libre and open-source software (except the GPU firmware blobs), and it is distributed under various licenses. ROCm initially stood for Radeon Open Compute platform; however, because Open Compute is a registered trademark, ROCm is no longer an acronym and is simply AMD's open-source stack designed for GPU compute.

Background: The first GPGPU software stack from ATI/AMD was Close to Metal, which became Stream. ROCm was launched around 2016 with the Boltzmann Initiative. The ROCm stack builds upon previous AMD GPU stacks; some tools trace back to GPUOpen and others ...

Advanced Micro Devices

Advanced Micro Devices, Inc. (AMD) is an American multinational corporation and technology company headquartered in Santa Clara, California, with significant operations in Austin, Texas. AMD is a hardware and fabless company that designs and develops central processing units (CPUs), graphics processing units (GPUs), field-programmable gate arrays (FPGAs), system-on-chip (SoC), and high-performance computer solutions. AMD serves a wide range of business and consumer markets, including gaming, data centers, artificial intelligence (AI), and embedded systems. AMD's main products include microprocessors, motherboard chipsets, embedded processors, and graphics processors for servers, workstations, personal computers, and embedded syst ...

CUDA

In computing, CUDA (Compute Unified Device Architecture) is a proprietary parallel computing platform and application programming interface (API) that allows software to use certain types of graphics processing units (GPUs) for accelerated general-purpose processing, an approach called general-purpose computing on GPUs. CUDA was created by Nvidia in 2006. When it was first introduced, the name was an acronym for Compute Unified Device Architecture, but Nvidia later dropped the common use of the acronym and now rarely expands it. CUDA is a software layer that gives direct access to the GPU's virtual instruction set and parallel computational elements for the execution of compute kernels. In addition to drivers and runtime kernels, the CUDA platform includes compilers, libraries and developer tools to help programmers accelerate their applications. CUDA is designed to work with programming languages such as C, C++, Fortran, Python and Julia. This accessibility makes ...

Just-in-time Compilation

In computing, just-in-time (JIT) compilation (also dynamic translation or run-time compilation) is compilation (of computer code) during execution of a program (at run time) rather than before execution. This may consist of source code translation but is more commonly bytecode translation to machine code, which is then executed directly. A system implementing a JIT compiler typically continuously analyses the code being executed and identifies parts of the code where the speedup gained from compilation or recompilation would outweigh the overhead of compiling that code. JIT compilation is a combination of the two traditional approaches to translation to machine code, ahead-of-time compilation (AOT) and interpretation, and combines some advantages and drawbacks of both. Roughly, JIT compilation combines the speed of compiled code with the flexibility of interpretation, with the overhead of an interpreter and the additional overhead of compiling and linking (not j ...
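The idea of compiling only code that has become "hot" can be sketched with a toy dispatcher. This is not how any real JIT compiler is implemented: it compiles Python source to Python bytecode rather than machine code, and the names `toy_jit` and `HOT_THRESHOLD` are hypothetical, chosen only for illustration. It shows the trade-off described above: the cold path pays a re-parse cost on every call, while the hot path pays a one-time compilation cost and then reuses the result.

```python
HOT_THRESHOLD = 3  # hypothetical: number of calls after which code counts as "hot"


def toy_jit(source: str, name: str):
    """Run the function `name` defined in `source`, compiling it once it becomes hot."""
    state = {"calls": 0, "compiled": None}

    def dispatcher(*args):
        # Hot path: reuse the function object produced by the one-time compilation.
        if state["compiled"] is not None:
            return state["compiled"](*args)

        state["calls"] += 1
        namespace = {}
        if state["calls"] >= HOT_THRESHOLD:
            # Pay the compilation cost once and cache the result.
            exec(compile(source, "<toy_jit>", "exec"), namespace)
            state["compiled"] = namespace[name]
            return state["compiled"](*args)

        # Cold path: re-process the source on every call (stands in for interpretation).
        exec(source, namespace)
        return namespace[name](*args)

    dispatcher.state = state  # exposed so the behaviour can be inspected
    return dispatcher


SQUARE_SRC = "def square(x):\n    return x * x\n"
square = toy_jit(SQUARE_SRC, "square")
results = [square(n) for n in range(5)]
print(results, square.state["calls"])
```

In this sketch the call counter stops increasing once the compiled version is cached, mirroring how a real system stops profiling a region after it has been compiled.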

GitHub

GitHub is a proprietary developer platform that allows developers to create, store, manage, and share their code. It uses Git to provide distributed version control, and GitHub itself provides access control, bug tracking, software feature requests, task management, continuous integration, and wikis for every project. Headquartered in California, GitHub, Inc. has been a subsidiary of Microsoft since 2018. It is commonly used to host open source software development projects. GitHub reported having over 100 million developers and more than 420 million repositories, including at least 28 million public repositories. It is the world's largest source code host. Over five billion developer contributions were made to more than 500 million open source projects in 2024.

About (founding): The development of the GitHub platform began on October 19, 2005. The site was launched in April 2008 by Tom ...

Nvidia

Nvidia Corporation is an American multinational corporation and technology company headquartered in Santa Clara, California, and incorporated in Delaware. Founded in 1993 by Jensen Huang (president and CEO), Chris Malachowsky, and Curtis Priem, it designs and supplies graphics processing units (GPUs), application programming interfaces (APIs) for data science and high-performance computing, and system on a chip units (SoCs) for mobile computing and the automotive market. Nvidia is also a leading supplier of artificial intelligence (AI) hardware and software. Nvidia outsources the manufacturing of the hardware it designs. Nvidia's professional line of GPUs are used for edge-to-cloud computing and in supercomputers and workstations for applications in fields such as architecture, engineering and construction, media and entertainment, automotive, scientific research, and manufacturing design. Its GeForce line of GPUs are aimed at the consumer market and are used in ap ...