
Image Rescaling

In computer graphics and digital imaging, image scaling refers to the resizing of a digital image. In video technology, the magnification of digital material is known as upscaling or resolution enhancement. When scaling a vector graphic image, the graphic primitives that make up the image can be scaled using geometric transformations with no loss of image quality. When scaling a raster graphics image, a new image with a higher or lower number of pixels must be generated. In the case of decreasing the pixel number (scaling down), this usually results in a visible quality loss. From the standpoint of digital signal processing, the scaling of raster graphics is a two-dimensional example of sample-rate conversion, the conversion of a discrete signal from one sampling rate (in this case, the local sampling rate) to another. Mathematically, image scaling can be interpreted as a form of image resampling or image reconstruction from the view of the Nyquis ...
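
As a rough illustration of generating a raster image with a different pixel count, the sketch below resizes a small grayscale image stored as a list of rows. It uses the simplest possible rule (each output pixel copies one source pixel, chosen by flooring the scaled index); the function name `resize_nearest` and the list-of-rows representation are assumptions made for this example, not part of the summary above.

```python
def resize_nearest(image, new_width, new_height):
    """Resize a raster image (list of equal-length rows) by copying source pixels."""
    old_height = len(image)
    old_width = len(image[0])
    resized = []
    for y in range(new_height):
        # Map the output row back to a source row (floor of the scaled index).
        src_y = min(old_height - 1, y * old_height // new_height)
        row = []
        for x in range(new_width):
            # Same mapping for columns.
            src_x = min(old_width - 1, x * old_width // new_width)
            row.append(image[src_y][src_x])
        resized.append(row)
    return resized


small = [[1, 2],
         [3, 4]]
big = resize_nearest(small, 4, 4)    # scaling up: pixels are duplicated
back = resize_nearest(big, 2, 2)     # scaling down: pixels are discarded
```

Scaling down with this rule simply drops pixels, which is one way the quality loss mentioned above can arise; smoother filters average neighbouring pixels instead.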

Regularization (mathematics)

In mathematics, statistics, finance, and computer science, particularly in machine learning and inverse problems, regularization is a process that converts the answer to a problem to a simpler one. It is often used in solving ill-posed problems or to prevent overfitting. Although regularization procedures can be divided in many ways, the following delineation is particularly helpful:

* Explicit regularization is regularization whenever one explicitly adds a term to the optimization problem. These terms could be priors, penalties, or constraints. Explicit regularization is commonly employed with ill-posed optimization problems. The regularization term, or penalty, imposes a cost on the optimization function to make the optimal solution unique.
* Implicit regularization is all other forms of regularization. This includes, for example, early stopping, using a robust loss function, and discarding outliers. Implicit regularizat ...
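
A minimal sketch of explicit regularization, assuming a one-parameter least-squares fit through the origin with an added L2 penalty on the slope. The function name `fit_slope` and the `penalty` parameter are hypothetical names chosen for this example. Minimizing `sum((y - w*x)**2) + penalty * w**2` gives the closed form `w = sum(x*y) / (sum(x*x) + penalty)`.

```python
def fit_slope(xs, ys, penalty=0.0):
    """Slope w minimizing sum((y - w*x)**2) + penalty * w**2."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    # The penalty term enlarges the denominator, shrinking w toward zero
    # and keeping the solution unique even when sxx is zero (if penalty > 0).
    return sxy / (sxx + penalty)


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]
plain = fit_slope(xs, ys)                  # unregularized slope
shrunk = fit_slope(xs, ys, penalty=14.0)   # penalized slope is smaller
```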

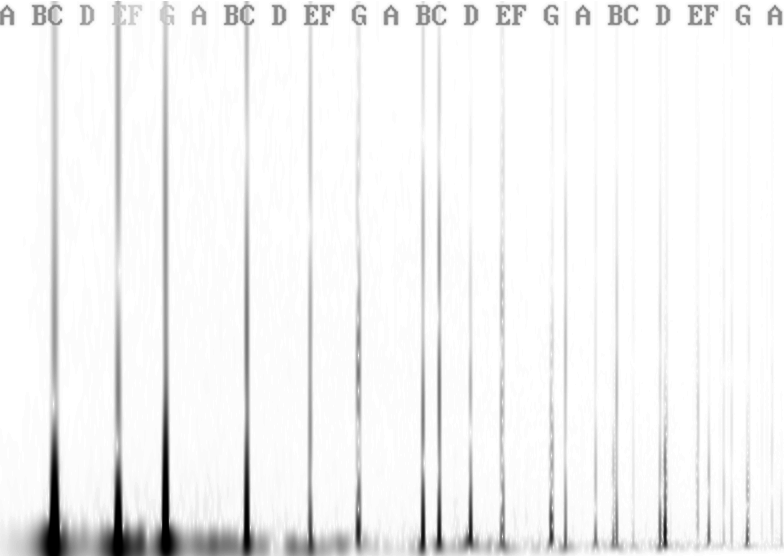

Fourier Transform

In mathematics, the Fourier transform (FT) is an integral transform that takes a function as input and outputs another function that describes the extent to which various frequencies are present in the original function. The output of the transform is a complex-valued function of frequency. The term "Fourier transform" refers to both this complex-valued function and the mathematical operation. When a distinction needs to be made, the output of the operation is sometimes called the frequency domain representation of the original function. The Fourier transform is analogous to decomposing the sound of a musical chord into the intensities of its constituent pitches. Functions that are localized in the time domain have Fourier transforms that are spread out across the frequency domain and vice versa, a phenomenon known as the uncertainty principle. The critical case for this principle is the Gaussian function, of substantial importance in probability theory and statist ...
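
The summary describes the continuous integral transform; the sketch below uses its discrete counterpart, the discrete Fourier transform (DFT), as a stand-in, since that is what can be computed directly on sampled data. The function name `dft` and the test signal are assumptions for illustration. It shows how the complex-valued output reveals which frequencies are present in the input.

```python
import cmath
import math


def dft(samples):
    """Naive O(n^2) discrete Fourier transform of a list of numbers."""
    n = len(samples)
    return [
        sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


# A cosine that completes 3 cycles over 16 samples.
n = 16
signal = [math.cos(2 * math.pi * 3 * t / n) for t in range(n)]
spectrum = dft(signal)
magnitudes = [abs(value) for value in spectrum]
# The energy concentrates in bins 3 and n - 3; other bins are close to zero.
```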

OpenGL

OpenGL (Open Graphics Library) is a cross-language, cross-platform application programming interface (API) for rendering 2D and 3D vector graphics. The API is typically used to interact with a graphics processing unit (GPU) to achieve hardware-accelerated rendering. Silicon Graphics, Inc. (SGI) began developing OpenGL in 1991 and released it on June 30, 1992. It is used for a variety of applications, including computer-aided design (CAD), video games, scientific visualization, virtual reality, and flight simulation. Since 2006, OpenGL has been managed by the non-profit technology consortium Khronos Group. Design: The OpenGL specification describes an abstract application programming interface (API) for drawing 2D and 3D graphics. It is designed to be implemented mostly ...

Mipmap

In computer graphics, a mipmap (mip being an acronym of the Latin phrase multum in parvo, meaning "much in little") is a pre-calculated, optimized sequence of images, each of which has an image resolution which is a factor of two smaller than the previous. Their use is known as mipmapping. They are intended to increase rendering speed and reduce aliasing artifacts. A high-resolution mipmap image is used for high-density samples, such as for objects close to the camera; lower-resolution images are used as the object appears farther away. This is a more efficient way of downscaling a texture than sampling all texels in the original texture that would contribute to a screen pixel; it is faster to take a constant number of samples from the appropriately downfiltered textures. Since mipmaps, by definition, are pre-allocated, additional storage space is required to take advantage of them. They are also related to wavelet compression. Mipmaps are widely used in 3D ...
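
The pre-calculated sequence can be sketched as follows, assuming a square grayscale image whose side is a power of two and a plain 2×2 box average as the downfilter. Both the function name `build_mipmaps` and the choice of filter are assumptions for this example; real texture pipelines may filter differently.

```python
def build_mipmaps(image):
    """Return [level 0, level 1, ...], halving the side length at each level.

    Assumes a square image (list of rows) whose side is a power of two.
    """
    levels = [image]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        half = len(prev) // 2
        # Each new pixel is the average of a 2x2 block of the previous level.
        nxt = [
            [
                (prev[2 * y][2 * x] + prev[2 * y][2 * x + 1]
                 + prev[2 * y + 1][2 * x] + prev[2 * y + 1][2 * x + 1]) / 4.0
                for x in range(half)
            ]
            for y in range(half)
        ]
        levels.append(nxt)
    return levels


texture = [[0, 0, 4, 4],
           [0, 0, 4, 4],
           [8, 8, 12, 12],
           [8, 8, 12, 12]]
chain = build_mipmaps(texture)
```

For this 4×4 input the chain holds 16 + 4 + 1 pixels, which illustrates the additional storage the summary mentions.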

Lanczos Resampling

Lanczos filtering and Lanczos resampling are two applications of a certain mathematical formula. It can be used as a low-pass filter or used to smoothly interpolate the value of a digital signal between its samples. In the latter case, it maps each sample of the given signal to a translated and scaled copy of the Lanczos kernel, which is a sinc function windowed by the central lobe of a second, longer, sinc function. The sum of these translated and scaled kernels is then evaluated at the desired points. Lanczos resampling is typically used to increase the sampling rate of a digital signal, or to shift it by a fraction of the sampling interval. It is often used also for multivariate interpolation, for example to resize or rotate a digital image. It has been considered the "best compromise" among several simple filters for this purpose. The filter was invented by Claude Duchon, who named it after Cornelius Lanczos due to Duchon's use of the sigma approximation in const ...
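
A minimal one-dimensional sketch of the idea, assuming the normalized sinc function, a kernel size parameter `a` (3 here), clamping at the signal edges, and division by the sum of weights. The last two are practical choices made for this example rather than anything stated above, and the function names are hypothetical.

```python
import math


def sinc(x):
    """Normalized sinc: sin(pi*x) / (pi*x), with sinc(0) = 1."""
    if x == 0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)


def lanczos_kernel(x, a=3):
    """Sinc windowed by the central lobe of a wider sinc; zero for |x| >= a."""
    if abs(x) >= a:
        return 0.0
    return sinc(x) * sinc(x / a)


def lanczos_resample(samples, position, a=3):
    """Estimate the signal value at a fractional sample position."""
    base = math.floor(position)
    total = 0.0
    weight_sum = 0.0
    for i in range(base - a + 1, base + a + 1):
        j = min(max(i, 0), len(samples) - 1)   # clamp indices at the edges
        w = lanczos_kernel(position - i, a)
        total += samples[j] * w
        weight_sum += w
    # Dividing by the weight sum keeps constant signals constant.
    return total / weight_sum


squares = [float(n * n) for n in range(8)]
at_sample = lanczos_resample(squares, 3.0)    # close to squares[3]
between = lanczos_resample(squares, 3.5)      # value between samples 3 and 4
```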

Sinc Resampling

In mathematics, physics and engineering, the sinc function, denoted by $\operatorname{sinc}(x)$, has two forms, normalized and unnormalized. In mathematics, the historical unnormalized sinc function is defined for $x \neq 0$ by $\operatorname{sinc}(x) = \frac{\sin x}{x}$. Alternatively, the unnormalized sinc function is often called the sampling function, indicated as Sa(x). In digital signal processing and information theory, the normalized sinc function is commonly defined for $x \neq 0$ by $\operatorname{sinc}(x) = \frac{\sin(\pi x)}{\pi x}$. In either case, the value at $x = 0$ is defined to be the limiting value $\operatorname{sinc}(0) := \lim_{x \to 0} \frac{\sin(a x)}{a x} = 1$ for all real $a \neq 0$ (the limit can be proven using the squeeze theorem). The normalization causes the definite integral of the function over the real numbers to equal 1 (whereas the same integral of the unnormalized sinc function has a value of $\pi$). As a further useful property, the zeros of the normalized sinc function are the nonzero integer values of ...
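
The two forms can be compared directly in a short sketch. The function names `sinc_unnormalized` and `sinc_normalized` are chosen for this example; the value at zero is set to the limiting value 1 in both cases.

```python
import math


def sinc_unnormalized(x):
    """sin(x) / x, with the value at 0 defined as the limit, 1."""
    return 1.0 if x == 0 else math.sin(x) / x


def sinc_normalized(x):
    """sin(pi*x) / (pi*x), with the value at 0 defined as the limit, 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


# The normalized form crosses zero at nonzero integers,
# the unnormalized form at nonzero multiples of pi.
zero_normalized = sinc_normalized(2.0)
zero_unnormalized = sinc_unnormalized(2.0 * math.pi)
```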

Bicubic Interpolation

In mathematics, bicubic interpolation is an extension of cubic spline interpolation (a method of applying cubic interpolation to a data set) for interpolating data points on a two-dimensional regular grid. The interpolated surface (meaning the kernel shape, not the image) is smoother than corresponding surfaces obtained by bilinear interpolation or nearest-neighbor interpolation. Bicubic interpolation can be accomplished using either Lagrange polynomials, cubic splines, or the cubic convolution algorithm. In image processing, bicubic interpolation is often chosen over bilinear or nearest-neighbor interpolation in image resampling, when speed is not an issue. In contrast to bilinear interpolation, which only takes 4 pixels (2×2) into account, bicubic interpolation considers 16 pixels (4×4). Images resampled with bicubic interpolation can have different interpolation artifacts, depending on the b and c values chosen. Computation: Suppose the function values f and the deriv ...
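
The sketch below uses the cubic convolution variant, one of the options named above, with the kernel parameter `a = -0.5` (the Catmull-Rom choice, which corresponds to b = 0 and c = 0.5 in the two-parameter family). The function names, the edge clamping, and the `grid[row][column]` layout are assumptions for this example. It shows the 4×4 neighbourhood that contributes to each interpolated value.

```python
import math


def cubic_kernel(x, a=-0.5):
    """Cubic convolution kernel; a = -0.5 is an assumed, common choice."""
    x = abs(x)
    if x <= 1:
        return (a + 2) * x**3 - (a + 3) * x**2 + 1
    if x < 2:
        return a * x**3 - 5 * a * x**2 + 8 * a * x - 4 * a
    return 0.0


def bicubic_sample(grid, x, y):
    """Interpolate grid (indexed grid[row][column]) at fractional (x, y)."""
    height = len(grid)
    width = len(grid[0])
    x0 = math.floor(x)
    y0 = math.floor(y)
    value = 0.0
    # Visit the 4x4 block of samples surrounding the query point.
    for j in range(y0 - 1, y0 + 3):
        for i in range(x0 - 1, x0 + 3):
            gy = min(max(j, 0), height - 1)   # clamp at the borders
            gx = min(max(i, 0), width - 1)
            value += grid[gy][gx] * cubic_kernel(x - i) * cubic_kernel(y - j)
    return value


ramp = [[float(col) for col in range(4)] for _ in range(4)]
mid = bicubic_sample(ramp, 1.5, 1.5)   # a linear ramp is reproduced here
```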

Display Contrast

In physics and digital imaging, contrast is a quantifiable property used to describe the difference in appearance between elements within a visual field. It is closely linked with the perceived brightness of objects and is typically defined by specific formulas that involve the luminances of the stimuli. For example, contrast can be quantified as ΔL/L near the luminance threshold, known as Weber contrast, or as L_H/L_L at much higher luminances. Further, contrast can result from differences in chromaticity, which are specified by colorimetric characteristics such as the color difference ΔE in the CIE 1976 UCS (Uniform Colour Space). Understanding contrast is crucial in fields such as imaging and display technologies, where it significantly affects the quality of visual content rendering. The contrast of electronic visual displays is influenced by the type of signal driving mechanism used, which can be either analog or digital. This mechanism directly influences how well th ...
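
The two luminance-based formulas mentioned above can be written out as a small sketch. The function names and the example luminance values are assumptions for illustration only, and ΔL is taken here as the target luminance minus the background luminance.

```python
def weber_contrast(target_luminance, background_luminance):
    """Delta-L over L: luminance difference relative to the background."""
    return (target_luminance - background_luminance) / background_luminance


def contrast_ratio(high_luminance, low_luminance):
    """L_H over L_L: ratio of the brighter to the darker luminance."""
    return high_luminance / low_luminance


# Hypothetical luminances in cd/m^2.
near_threshold = weber_contrast(110.0, 100.0)   # small difference on a background
full_range = contrast_ratio(500.0, 0.5)         # bright state versus dark state
```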

Interpolation

In the mathematical field of numerical analysis, interpolation is a type of estimation, a method of constructing (finding) new data points based on the range of a discrete set of known data points. In engineering and science, one often has a number of data points, obtained by sampling or experimentation, which represent the values of a function for a limited number of values of the independent variable. It is often required to interpolate; that is, estimate the value of that function for an intermediate value of the independent variable. A closely related problem is the approximation of a complicated function by a simple function. Suppose the formula for some given function is known, but too complicated to evaluate efficiently. A few data points from the original function can be interpolated to produce a simpler function which is still fairly close to the original. The resulting gai ...
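
As one simple instance of estimating a function at an intermediate value, the sketch below performs piecewise linear interpolation over a table of known points. Linear interpolation is only one of many possible methods; the function name `interpolate` and the sample data are assumptions for this example.

```python
def interpolate(xs, ys, x):
    """Piecewise linear estimate at x; xs must be sorted in ascending order."""
    if not xs[0] <= x <= xs[-1]:
        raise ValueError("x lies outside the range of the known data points")
    for i in range(len(xs) - 1):
        if xs[i] <= x <= xs[i + 1]:
            # Fraction of the way from the left point to the right point.
            t = (x - xs[i]) / (xs[i + 1] - xs[i])
            return ys[i] + t * (ys[i + 1] - ys[i])


known_x = [0.0, 1.0, 2.0]
known_y = [0.0, 10.0, 40.0]
estimate = interpolate(known_x, known_y, 1.5)   # halfway between 10 and 40
```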

Bilinear Interpolation

In mathematics, bilinear interpolation is a method for interpolating functions of two variables (e.g., x and y) using repeated linear interpolation. It is usually applied to functions sampled on a 2D rectilinear grid, though it can be generalized to functions defined on the vertices of (a mesh of) arbitrary convex quadrilaterals. Bilinear interpolation is performed using linear interpolation first in one direction, and then again in another direction. Although each step is linear in the sampled values and in the position, the interpolation as a whole is not linear but rather quadratic in the sample location. Bilinear interpolation is one of the basic resampling techniques in computer vision and image processing, where it is also called bilinear filtering or bilinear texture mapping. Computation: Suppose that we want to find the value of the unknown function f at the point (x, y). It is assumed that we know the value of f at the four points Q11 = ...
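
The two-pass procedure can be sketched as below. Because the summary is cut off before the four points are defined, the corner naming here is an assumption: `q11` is the value at (x1, y1), `q21` at (x2, y1), `q12` at (x1, y2), and `q22` at (x2, y2). The function name `bilinear` is likewise hypothetical.

```python
def bilinear(x, y, x1, x2, y1, y2, q11, q21, q12, q22):
    """Interpolate at (x, y) inside the rectangle [x1, x2] x [y1, y2].

    Assumed corner values: q11 at (x1, y1), q21 at (x2, y1),
    q12 at (x1, y2), q22 at (x2, y2).
    """
    # First pass: linear interpolation along x on the two horizontal edges.
    f_y1 = ((x2 - x) * q11 + (x - x1) * q21) / (x2 - x1)
    f_y2 = ((x2 - x) * q12 + (x - x1) * q22) / (x2 - x1)
    # Second pass: linear interpolation along y between those two results.
    return ((y2 - y) * f_y1 + (y - y1) * f_y2) / (y2 - y1)


# Corner values taken from f(x, y) = x * y on the unit square.
centre = bilinear(0.5, 0.5, 0.0, 1.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0)
```

With these corner values the result at the centre is 0.25 rather than the corner average scaled linearly along either axis alone, which reflects the product term that makes the interpolant quadratic in the sample location.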