
F-distribution

In probability theory and statistics, the ''F''-distribution or ''F''-ratio, also known as Snedecor's ''F'' distribution or the Fisher–Snedecor distribution (after Ronald Fisher and George W. Snedecor), is a continuous probability distribution that arises frequently as the null distribution of a test statistic, most notably in the analysis of variance (ANOVA) and other ''F''-tests.

Definition. The ''F''-distribution with ''d''1 and ''d''2 degrees of freedom is the distribution of
: X = \frac{S_1/d_1}{S_2/d_2}
where S_1 and S_2 are independent random variables with chi-square distributions with respective degrees of freedom d_1 and d_2. It follows that the probability density function (pdf) of ''X'' is
: \begin{align} f(x; d_1,d_2) &= \frac{\sqrt{\frac{(d_1 x)^{d_1}\, d_2^{d_2}}{(d_1 x+d_2)^{d_1+d_2}}}}{x\, \mathrm{B}\!\left(\frac{d_1}{2},\frac{d_2}{2}\right)} \\[5pt] &= \frac{1}{\mathrm{B}\!\left(\frac{d_1}{2},\frac{d_2}{2}\right)} \left(\frac{d_1}{d_2}\right)^{d_1/2} x^{d_1/2 - 1} \left(1+\frac{d_1}{d_2} \, x \right)^{-(d_1+d_2)/2} \end{align}
for real ''x'' > 0. Here \mathrm{B} is the beta function. In many applications, the parameters ''d''1 and ''d''2 are positive integers, but the distribution is well ...
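The definition can be checked numerically. The following is a minimal sketch, not a reference implementation: it evaluates the density in its second (product) form and compares the area under it on (0, 1] with the fraction of simulated chi-squared ratios that fall in the same interval. The function names `f_pdf` and `sample_f`, the choice d1 = 5, d2 = 10, and the sample size are assumptions made for illustration. The sampler relies on the standard fact that a chi-squared variable with k degrees of freedom is a gamma variable with shape k/2 and scale 2.

```python
import math
import random

def f_pdf(x, d1, d2):
    """Density of the F-distribution (product form), evaluated in log space."""
    if x <= 0:
        return 0.0
    # log of the beta function B(d1/2, d2/2)
    log_beta = (math.lgamma(d1 / 2) + math.lgamma(d2 / 2)
                - math.lgamma((d1 + d2) / 2))
    log_pdf = ((d1 / 2) * math.log(d1 / d2)
               + (d1 / 2 - 1) * math.log(x)
               - ((d1 + d2) / 2) * math.log1p(d1 * x / d2)
               - log_beta)
    return math.exp(log_pdf)

def sample_f(d1, d2, rng):
    """Draw X = (S1/d1) / (S2/d2) with S1, S2 independent chi-squared."""
    # chi-squared(k) is Gamma(shape=k/2, scale=2)
    s1 = rng.gammavariate(d1 / 2, 2.0)
    s2 = rng.gammavariate(d2 / 2, 2.0)
    return (s1 / d1) / (s2 / d2)

rng = random.Random(0)
d1, d2 = 5, 10  # illustrative degrees of freedom
draws = [sample_f(d1, d2, rng) for _ in range(100_000)]
empirical = sum(1 for x in draws if x <= 1.0) / len(draws)

# Midpoint-rule integral of the density over (0, 1]
n = 10_000
integral = sum(f_pdf((i + 0.5) / n, d1, d2) for i in range(n)) / n

print(f"simulated P(X <= 1) = {empirical:.4f}, integrated pdf = {integral:.4f}")
```

The two printed numbers should agree to within sampling error, which is what the definition predicts.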

F-test

An ''F''-test is any statistical test in which the test statistic has an ''F''-distribution under the null hypothesis. It is most often used when comparing statistical models that have been fitted to a data set, in order to identify the model that best fits the population from which the data were sampled. Exact "''F''-tests" mainly arise when the models have been fitted to the data using least squares. The name was coined by George W. Snedecor, in honour of Ronald Fisher. Fisher initially developed the statistic as the variance ratio in the 1920s.

Common examples. Uses of ''F''-tests include the study of the following cases:
* The hypothesis that the means of a given set of normally distributed populations, all having the same standard deviation, are equal. This is perhaps the best-known ''F''-test, and plays an important role in the analysis of variance (ANOVA).
* The hypothesis that a proposed regression model fits the data well. See Lack-of-f ...
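As an illustration of the first example (equality of several population means), the one-way ANOVA ''F'' statistic is the ratio of the between-group mean square to the within-group mean square. The sketch below is one common way to compute it. The function name `one_way_f` and the toy data are assumptions, and the step of converting the statistic to a p-value is omitted.

```python
def one_way_f(groups):
    """Return (F, df_between, df_within) for a one-way layout."""
    k = len(groups)                      # number of groups
    n = sum(len(g) for g in groups)      # total number of observations
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]

    # Variation of the group means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    # Variation of the observations around their own group mean
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, means) for x in g)

    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Toy data: three groups of three observations each (illustrative only)
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_stat, df1, df2 = one_way_f(groups)
print(f"F = {f_stat:.2f} on ({df1}, {df2}) degrees of freedom")
```

Under the null hypothesis and the usual normality and equal-variance assumptions, this statistic would be compared against an ''F''-distribution with (k − 1, n − k) degrees of freedom.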

Ronald Fisher

Sir Ronald Aylmer Fisher (17 February 1890 – 29 July 1962) was a British polymath who was active as a mathematician, statistician, biologist, geneticist, and academic. For his work in statistics, he has been described as "a genius who almost single-handedly created the foundations for modern statistical science" and "the single most important figure in 20th century statistics". In genetics, his work used mathematics to combine Mendelian genetics and natural selection; this contributed to the revival of Darwinism in the early 20th-century revision of the theory of evolution known as the modern synthesis. For his contributions to biology, Fisher has been called "the greatest of Darwin’s successors". Fisher held strong views on race and eugenics, insisting on racial differences. Although he was clearly a eugenist and advocated for the legalization of voluntary sterilization of those with heritable mental disabilities, there is some debate as to whether Fisher support ...

Chi-squared Distribution

In probability theory and statistics, the chi-squared distribution (also chi-square or \chi^2-distribution) with k degrees of freedom is the distribution of a sum of the squares of k independent standard normal random variables. The chi-squared distribution is a special case of the gamma distribution and is one of the most widely used probability distributions in inferential statistics, notably in hypothesis testing and in construction of confidence intervals. This distribution is sometimes called the central chi-squared distribution, a special case of the more general noncentral chi-squared distribution. The chi-squared distribution is used in the common chi-squared tests for goodness of fit of an observed distribution to a theoretical one, the independence of two criteria of classification of qualitative data, and in confidence interval estimation for a population standard deviation of a normal distribution from a sample standard deviation. Many other statistical tes ...
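The defining construction (a sum of k squared independent standard normals) is easy to simulate. The sketch below draws such sums and compares the sample mean and variance with the standard theoretical values k and 2k. The helper name `chi_squared_draw`, the seed, and the choice k = 4 are assumptions for illustration.

```python
import random

def chi_squared_draw(k, rng):
    """Sum of squares of k independent standard normal draws."""
    return sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(k))

rng = random.Random(42)
k = 4  # illustrative degrees of freedom
draws = [chi_squared_draw(k, rng) for _ in range(50_000)]

mean = sum(draws) / len(draws)
var = sum((x - mean) ** 2 for x in draws) / (len(draws) - 1)

print(f"mean = {mean:.3f} (theory {k}), variance = {var:.3f} (theory {2 * k})")
```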

Chi-square Distribution

In probability theory and statistics, the chi-squared distribution (also chi-square or \chi^2-distribution) with k degrees of freedom is the distribution of a sum of the squares of k independent standard normal random variables. The chi-squared distribution is a special case of the gamma distribution and is one of the most widely used probability distributions in inferential statistics, notably in hypothesis testing and in construction of confidence intervals. This distribution is sometimes called the central chi-squared distribution, a special case of the more general noncentral chi-squared distribution. The chi-squared distribution is used in the common chi-squared tests for goodness of fit of an observed distribution to a theoretical one, the independence of two criteria of classification of qualitative data, and in confidence interval estimation for a population standard deviation of a normal distribution from a sample standard deviation. Many other statistical tests also ...

Cochran's Theorem

In statistics, Cochran's theorem, devised by William G. Cochran, is a theorem used to justify results relating to the probability distributions of statistics that are used in the analysis of variance.

Statement. Let ''U''1, ..., ''U''''N'' be i.i.d. standard normally distributed random variables, and U = [U_1, ..., U_N]^T. Let B^{(1)}, B^{(2)}, \ldots, B^{(k)} be symmetric matrices. Define ''r''''i'' to be the rank of B^{(i)}. Define Q_i = U^T B^{(i)} U, so that the ''Q''''i'' are quadratic forms. Further assume \sum_i Q_i = U^T U. Cochran's theorem states that the following are equivalent:
* r_1+\cdots +r_k=N,
* the ''Q''''i'' are independent,
* each ''Q''''i'' has a chi-squared distribution with ''r''''i'' degrees of freedom.
Often it's stated as \sum_i A_i = A, where A is idempotent, and \sum_i r_i = N is replaced by \sum_i r_i = rank(A). But after an orthogonal transform, A = diag(I_M, 0), and so we reduce to the above theorem.

Proof. Claim: Let X be a standard Gaussian in \mathbb{R}^n, then for any symmetric matric ...
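A familiar instance of the setup in the statement is the split of a sum of squares into a "mean" part and a "residual" part. The sketch below assumes the pair B1 = J/N and B2 = I − J/N, where J is the all-ones matrix; this choice is an illustration and is not taken from the excerpt. It checks numerically that Q1 + Q2 = U^T U and that the ranks add up to N. Both matrices are symmetric projections, so their ranks equal their traces.

```python
import random

N = 5
rng = random.Random(1)
u = [rng.gauss(0.0, 1.0) for _ in range(N)]

# B1 projects onto the constant vector; B2 projects onto its complement.
b1 = [[1.0 / N for _ in range(N)] for _ in range(N)]
b2 = [[(1.0 if i == j else 0.0) - 1.0 / N for j in range(N)]
      for i in range(N)]

def quad_form(b, v):
    """Quadratic form v^T b v."""
    n = len(v)
    return sum(v[i] * b[i][j] * v[j] for i in range(n) for j in range(n))

def trace(b):
    """Trace of a square matrix; equals the rank for a projection."""
    return sum(b[i][i] for i in range(len(b)))

q1 = quad_form(b1, u)          # equals N * mean(u)^2
q2 = quad_form(b2, u)          # equals sum of squared deviations from the mean
total = sum(x * x for x in u)  # U^T U
mean = sum(u) / N

print(f"Q1 + Q2 = {q1 + q2:.6f}, U^T U = {total:.6f}")
print(f"rank(B1) + rank(B2) = {trace(b1) + trace(b2):.1f}")
```

With ranks 1 and N − 1 summing to N, the theorem gives that Q1 and Q2 are independent chi-squared variables with 1 and N − 1 degrees of freedom.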

Degrees Of Freedom (statistics)

In statistics, the number of degrees of freedom is the number of values in the final calculation of a statistic that are free to vary. Estimates of statistical parameters can be based upon different amounts of information or data. The number of independent pieces of information that go into the estimate of a parameter is called the degrees of freedom. In general, the degrees of freedom of an estimate of a parameter are equal to the number of independent scores that go into the estimate minus the number of parameters used as intermediate steps in the estimation of the parameter itself. For example, if the variance is to be estimated from a random sample of ''N'' independent scores, then the degrees of freedom is equal to the number of independent scores (''N'') minus the number of parameters estimated as intermediate steps (one, namely, the sample mean) and is therefore equal to ''N'' − 1. Mathematically, degrees of freedom is the number of dimensions of the domain ...
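The variance example can be made concrete. In the sketch below, the sum of squared deviations from the sample mean is divided by ''N'' − 1 rather than ''N'', because one degree of freedom is used up by estimating the mean. The data values are made up for illustration, and the result is compared with `statistics.variance` from the Python standard library, which uses the same ''N'' − 1 divisor.

```python
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]  # illustrative scores
n = len(data)

sample_mean = sum(data) / n                       # one estimated parameter
ss = sum((x - sample_mean) ** 2 for x in data)    # sum of squared deviations

dof = n - 1                                       # N minus one intermediate estimate
sample_var = ss / dof

print(f"N = {n}, degrees of freedom = {dof}, sample variance = {sample_var:.4f}")
print(f"statistics.variance agrees: {statistics.variance(data):.4f}")
```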

F-statistics

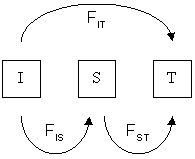

In population genetics, ''F''-statistics (also known as fixation indices) describe the statistically expected level of heterozygosity in a population; more specifically the expected degree of (usually) a reduction in heterozygosity when compared to Hardy–Weinberg expectation. ''F''-statistics can also be thought of as a measure of the correlation between genes drawn at different levels of a (hierarchically) subdivided population. This correlation is influenced by several evolutionary processes, such as genetic drift, founder effect, bottleneck, genetic hitchhiking, meiotic drive, mutation, gene flow, inbreeding, natural selection, or the Wahlund effect, but it was originally designed to measure the amount of allelic fixation owing to genetic drift. The concept of ''F''-statistics was developed during the 1920s by the American geneticist Sewall Wright, who was interested in inbreeding in cattle. However, because complete dominance causes the phenotypes of homozygote domi ...

Analysis Of Variance

Analysis of variance (ANOVA) is a collection of statistical models and their associated estimation procedures (such as the "variation" among and between groups) used to analyze the differences among means. ANOVA was developed by the statistician Ronald Fisher. ANOVA is based on the law of total variance, where the observed variance in a particular variable is partitioned into components attributable to different sources of variation. In its simplest form, ANOVA provides a statistical test of whether two or more population means are equal, and therefore generalizes the ''t''-test beyond two means. In other words, the ANOVA is used to test the difference between two or more means.

History. While the analysis of variance reached fruition in the 20th century, antecedents extend centuries into the past according to Stigler. These include hypothesis testing, the partitioning of sums of squares, experimental techniques and the additive model. Laplace was performing hypothesis testi ...

Beta Prime Distribution

In probability theory and statistics, the beta prime distribution (also known as the inverted beta distribution or beta distribution of the second kind; Johnson et al. (1995), p. 248) is an absolutely continuous probability distribution.

Definitions. The beta prime distribution is defined for x > 0 with two parameters ''α'' and ''β'', having the probability density function
: f(x) = \frac{x^{\alpha-1} (1+x)^{-\alpha-\beta}}{B(\alpha,\beta)}
where ''B'' is the beta function. The cumulative distribution function is
: F(x; \alpha,\beta) = I_{\frac{x}{1+x}}\left(\alpha, \beta \right),
where ''I'' is the regularized incomplete beta function. The expected value, variance, and other details of the distribution are given in the sidebox; for \beta>4, the excess kurtosis is
: \gamma_2 = 6\frac{\alpha(\alpha+\beta-1)(5\beta-11) + (\beta-1)^2(\beta-2)}{\alpha(\alpha+\beta-1)(\beta-3)(\beta-4)}.
While the related beta distribution is the conjugate prior distribution of the parameter of a Bernoulli distribution expressed as a probability, the beta prime distribution is the conjugate prior distribution of the parameter of a Bernoulli distribution expressed i ...
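The density and the cumulative distribution function can be cross-checked numerically. The sketch below relies on a standard fact that is not stated in the excerpt: if ''Y'' has a beta distribution with parameters ''α'' and ''β'', then ''Y''/(1 − ''Y'') has a beta prime distribution with the same parameters, which is what the argument x/(1 + x) in the CDF expresses. The function name `beta_prime_pdf`, the parameter values α = 2, β = 3, and the sample size are assumptions for illustration.

```python
import math
import random

def beta_prime_pdf(x, alpha, beta):
    """Density x^(alpha-1) (1+x)^(-alpha-beta) / B(alpha, beta) for x > 0."""
    if x <= 0:
        return 0.0
    log_b = (math.lgamma(alpha) + math.lgamma(beta)
             - math.lgamma(alpha + beta))
    return math.exp((alpha - 1) * math.log(x)
                    - (alpha + beta) * math.log1p(x)
                    - log_b)

alpha, beta = 2.0, 3.0  # illustrative parameters

# Midpoint-rule integral of the density over (0, 1], i.e. F(1; alpha, beta)
n = 10_000
cdf_at_1 = sum(beta_prime_pdf((i + 0.5) / n, alpha, beta) for i in range(n)) / n

# Simulate via the beta distribution: Y / (1 - Y) with Y ~ Beta(alpha, beta)
rng = random.Random(7)
ys = [rng.betavariate(alpha, beta) for _ in range(100_000)]
empirical = sum(1 for y in ys if y / (1.0 - y) <= 1.0) / len(ys)

print(f"integrated pdf on (0, 1] = {cdf_at_1:.4f}, simulated = {empirical:.4f}")
```

Both numbers estimate F(1; α, β) = I_{1/2}(α, β), so they should agree up to sampling error.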

Null Distribution

In statistical hypothesis testing, the null distribution is the probability distribution of the test statistic when the null hypothesis is true. For example, in an F-test, the null distribution is an F-distribution. Null distribution is a tool scientists often use when conducting experiments. The null distribution is the distribution of two sets of data under a null hypothesis. If the results of the two sets of data are not outside the parameters of the expected results, then the null hypothesis is said to be true.

Examples of application. The null hypothesis is often a part of an experiment. The null hypothesis tries to show that among two sets of data, there is no statistical difference between the results of doing one thing as opposed to doing a different thing. For an example of this, a scientist might be trying to prove that people who walk two miles a day have healthier hearts than people who walk less than two miles a day. The scientist would use the null hypothesis to test ...

Continuous Probability Distribution

In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of different possible outcomes for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if ''X'' is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of ''X'' would take the value 0.5 (1 in 2 or 1/2) for ''X'' = heads, and 0.5 for ''X'' = tails (assuming that the coin is fair). Examples of random phenomena include the weather conditions at some future date, the height of a randomly selected person, the fraction of male students in a school, the results of a survey to be conducted, etc.

Introduction. A probability distribution is a mathematical description of the probabilities of events, subsets of the sample space. The sample space, often denoted by \Omega, is the set of all possible outcomes of a random ...