
EfficientNet

EfficientNet is a family of convolutional neural networks (CNNs) for computer vision published by researchers at Google AI in 2019. Its key innovation is compound scaling, which uniformly scales all dimensions of depth, width, and resolution using a single parameter. EfficientNet models have been adopted in various computer vision tasks, including image classification, object detection, and segmentation.

Compound scaling

Instead of scaling one dimension of the network at a time, such as depth (number of layers), width (number of channels), or resolution (input image size), EfficientNet uses a compound coefficient $\phi$ to scale all three dimensions simultaneously. Specifically, given a baseline network, the depth, width, and resolution are scaled according to the following equations:

$$
\begin{aligned}
\text{depth: } d &= \alpha^{\phi} \\
\text{width: } w &= \beta^{\phi} \\
\text{resolution: } r &= \gamma^{\phi}
\end{aligned}
$$

subject to $\alpha \cdot \beta^2 \cdot \gamma^2 \approx 2$ and $\alpha \ge 1$, $\beta \ge$ ...
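The scaling rule above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the coefficient values `ALPHA`, `BETA`, and `GAMMA`, the baseline numbers in the usage note, and the function names are assumptions that do not appear in the excerpt. The values were chosen only so that the constraint $\alpha \cdot \beta^2 \cdot \gamma^2 \approx 2$ holds.

```python
# Assumed coefficients (not given in the excerpt); they are picked so that
# ALPHA * BETA**2 * GAMMA**2 is close to 2, as the constraint requires.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scale(phi, base_depth, base_width, base_resolution):
    """Scale a hypothetical baseline network by the compound coefficient phi.

    Each dimension is multiplied by its own constant raised to the power phi.
    """
    depth = round(base_depth * ALPHA ** phi)
    width = round(base_width * BETA ** phi)
    resolution = round(base_resolution * GAMMA ** phi)
    return depth, width, resolution


def cost_multiplier(phi):
    """Approximate growth in compute: (alpha * beta^2 * gamma^2) ** phi."""
    return (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
```

With a hypothetical baseline of 18 layers, 32 channels, and 224-pixel inputs, `compound_scale(0, 18, 32, 224)` returns the baseline unchanged, and under these assumed coefficients each unit increase of `phi` roughly doubles the value of `cost_multiplier`.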

You Only Look Once

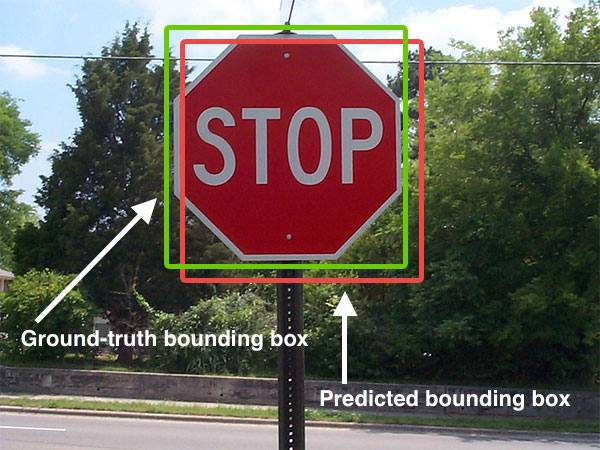

You Only Look Once (YOLO) is a series of real-time object detection systems based on convolutional neural networks. First introduced by Joseph Redmon et al. in 2015, YOLO has undergone several iterations and improvements, becoming one of the most popular object detection frameworks. The name "You Only Look Once" refers to the fact that the algorithm requires only one forward propagation pass through the neural network to make predictions, unlike previous region proposal-based techniques like R-CNN, which require thousands of passes for a single image.

Overview

Previous methods like R-CNN and OverFeat apply the model to an image at multiple locations and scales. YOLO instead applies a single neural network to the full image. This network divides the image into regions and predicts bounding boxes and probabilities for each region. These bounding boxes are weighted by the predicted probabilities.

OverFeat

OverFeat was an early influential model for simultaneous object ...
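The region-and-weighting idea in the overview can be sketched as follows. This is a simplified illustration under stated assumptions, not YOLO's actual code: the 7x7 grid default, the dictionary layout of a prediction, the 0.25 threshold, and both function names are hypothetical.

```python
def cell_for_point(x, y, image_w, image_h, grid=7):
    """Return the (row, col) of the grid cell that contains pixel (x, y).

    The grid size of 7 is an assumed default for illustration.
    """
    col = min(int(x / image_w * grid), grid - 1)
    row = min(int(y / image_h * grid), grid - 1)
    return row, col


def score_boxes(predictions, threshold=0.25):
    """Weight each predicted box by its most likely class probability.

    `predictions` is a hypothetical list of dicts with the keys
    'box', 'confidence', and 'class_probs' (label -> probability).
    Boxes whose weighted score falls below `threshold` are dropped.
    """
    kept = []
    for p in predictions:
        label, prob = max(p["class_probs"].items(), key=lambda kv: kv[1])
        score = p["confidence"] * prob
        if score >= threshold:
            kept.append((p["box"], label, score))
    # Highest-scoring boxes first.
    return sorted(kept, key=lambda item: item[2], reverse=True)
```

All boxes come from one pass over the image, so scoring is a cheap post-processing step rather than a repeated evaluation of the network.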

SqueezeNet

SqueezeNet is a deep neural network for image classification released in 2016. SqueezeNet was developed by researchers at DeepScale, University of California, Berkeley, and Stanford University. In designing SqueezeNet, the authors' goal was to create a smaller neural network with fewer parameters while achieving competitive accuracy. Their best-performing model achieved the same accuracy as AlexNet on ImageNet classification while being 510x smaller.

Version history

SqueezeNet was originally released on February 22, 2016. This original version of SqueezeNet was implemented on top of the Caffe deep learning software framework. Shortly thereafter, the open-source research community ported SqueezeNet to a number of other deep learning frameworks. On February 26, 2016, Eddie Bell released a port of SqueezeNet for the Chainer deep learning framework. On March 2, 2016, Guo Haria released a port of SqueezeNet for the Apache MXNet framework. On June 3, 2016, Tammy Yang releas ...

Google AI

Google AI is a division of Google dedicated to artificial intelligence. It was announced at Google I/O 2017 by CEO Sundar Pichai. This division has expanded its reach with research facilities in various parts of the world such as Zurich, Paris, Israel, and Beijing. In 2023, Google AI was part of the reorganization initiative that elevated its head, Jeff Dean, to the position of chief scientist at Google. This reorganization involved the merging of Google Brain and DeepMind, a UK-based company that Google acquired in 2014 that operated separately from the company's core research. In March 2019, Google announced the creation of an Advanced Technology External Advisory Council (ATEAC) comprising eight members: Alessandro Acquisti, Bubacarr Bah, De Kai, Dyan Gibbens, Joanna Bryson, Kay Coles James, Luciano Floridi and William Joseph Burns. Following objections from a large number of Google staff to the appointment of Kay Coles James, the Council was abandoned within one ...

Edge Computing

Edge computing is a distributed computing model that brings computation and data storage closer to the sources of data. More broadly, it refers to any design that pushes computation physically closer to a user, so as to reduce the latency compared to when an application runs on a centralized data centre. The term began being used in the 1990s to describe content delivery networks, which were used to deliver website and video content from servers located near users. In the early 2000s, these systems expanded their scope to hosting other applications, leading to early edge computing services. These services could do things like find dealers, manage shopping carts, gather real-time data, and place ads. The Internet of Things (IoT), where devices are connected to the internet, is often linked with edge computing.

Definition

Edge computing involves running computer programs that deliver quick responses close to ...

Computer Vision

Computer vision tasks include methods for acquiring, processing, analyzing, and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the form of decisions. "Understanding" in this context signifies the transformation of visual images (the input to the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory. The scientific discipline of computer vision is concerned with the theory behind artificial systems that extract information from images. Image data can take many forms, such as video sequences, views from multiple cameras, multi-dimensional data from a 3D scanner, 3D point clouds ...

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalise to unseen data, and thus perform tasks without explicit instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ...

Data Augmentation

Data augmentation is a statistical technique which allows maximum likelihood estimation from incomplete data. Data augmentation has important applications in Bayesian analysis, and the technique is widely used in machine learning to reduce overfitting when training machine learning models, achieved by training models on several slightly-modified copies of existing data.

Synthetic oversampling techniques for traditional machine learning

Synthetic Minority Over-sampling Technique (SMOTE) is a method used to address imbalanced datasets in machine learning. In such datasets, the number of samples in different classes varies significantly, leading to biased model performance. For example, in a medical diagnosis dataset with 90 samples representing healthy individuals and only 10 samples representing individuals with a particular disease, traditional algorithms may struggle to accurately classify the minority class. SMOTE rebalances the dataset by generating synthetic samples for the ...
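A minimal sketch of the SMOTE idea, assuming the usual formulation in which a synthetic point is drawn on the line segment between a minority sample and one of its nearest minority-class neighbours. The function name `smote_sample`, the neighbour count `k`, and the toy data in the usage note are illustrative assumptions, and a production system would normally rely on an established library instead.

```python
import random


def smote_sample(minority, k=2, rng=random):
    """Create one synthetic minority-class point.

    Picks a random minority sample, finds its k nearest minority
    neighbours (squared Euclidean distance), and interpolates a new
    point at a random position between the sample and one neighbour.
    """
    base = rng.choice(minority)
    neighbours = sorted(
        (p for p in minority if p is not base),
        key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)),
    )[:k]
    other = rng.choice(neighbours)
    t = rng.random()  # interpolation factor in [0, 1)
    return tuple(a + t * (b - a) for a, b in zip(base, other))
```

In the 90-versus-10 example above, calling `smote_sample` 80 times on the 10 minority samples would bring the minority class up to 90 points, each lying between two real minority samples rather than duplicating one.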

Dropout Training

Dropout and dilution (also called DropConnect) are regularization techniques for reducing overfitting in artificial neural networks by preventing complex co-adaptations on training data. They are an efficient way of performing model averaging with neural networks. *Dilution* refers to randomly decreasing weights towards zero, while *dropout* refers to randomly setting the outputs of hidden neurons to zero. Both are usually performed during the training process of a neural network, not during inference.

Types and uses

Dilution is usually split into *weak dilution* and *strong dilution*. Weak dilution describes the process in which the finite fraction of removed connections is small, and strong dilution refers to when this fraction is large. There is no clear boundary between strong and weak dilution; the distinction often depends on the precedent of a specific use case and has implications for how to solve for exact solutions. Sometimes di ...
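The dropout behaviour described above (outputs zeroed at random during training, left untouched at inference) can be sketched as follows. The rescaling of surviving activations by `1 / (1 - p)`, often called inverted dropout, is an assumption added here so that the expected activation stays the same. It is not stated in the excerpt, and the function name and parameters are illustrative.

```python
import random


def dropout(activations, p=0.5, training=True, rng=random):
    """Randomly zero each activation with probability p during training.

    Surviving values are divided by (1 - p) (assumed "inverted dropout"
    scaling). At inference time the input is returned unchanged.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]
```

Calling `dropout(values, training=False)` returns the values as they are, which mirrors the point that the technique is applied during training rather than inference.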

Computer Cluster

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The newest manifestation of cluster computing is cloud computing. The components of a cluster are usually connected to each other through fast local area networks, with each node (computer used as a server) running its own instance of an operating system. In most circumstances, all of the nodes use the same hardware and the same operating system, although in some setups (e.g. using Open Source Cluster Application Resources (OSCAR)), different operating systems can be used on each computer, or different hardware. Clusters are usually deployed to improve performance and availability over that of a single computer, while typically being much more cost-effective than single computers of comparable speed or availability. Computer clusters emerged as ...

Graphics Processing Unit

A graphics processing unit (GPU) is a specialized electronic circuit designed for digital image processing and to accelerate computer graphics, being present either as a discrete video card or embedded on motherboards, mobile phones, personal computers, workstations, and game consoles. GPUs were later found to be useful for non-graphic calculations involving embarrassingly parallel problems due to their parallel structure. The ability of GPUs to rapidly perform vast numbers of calculations has led to their adoption in diverse fields including artificial intelligence (AI) where they excel at handling data-intensive and computationally demanding tasks. Other non-graphical uses include the training of neural networks and cryptocurrency mining.

History

1970s

Arcade system boards have used specialized graphics circuits since the 1970s. In early video game hardware, RAM for frame buffers was expensive, so video chips composited data together as the display was being scann ...

MobileNet

MobileNet is a family of convolutional neural network (CNN) architectures designed for image classification, object detection, and other computer vision tasks. They are designed for small size, low latency, and low power consumption, making them suitable for on-device inference and edge computing on resource-constrained devices like mobile phones and embedded systems. They were originally designed to be run efficiently on mobile devices with TensorFlow Lite. The need for efficient deep learning models on mobile devices led researchers at Google to develop MobileNet. The family has four versions, each improving upon the previous one in terms of performance and efficiency.

Features

V1

MobileNetV1 was published in April 2017. Its main architectural innovation was the incorporation of depthwise separable convolutions. This type of convolution was first developed by Laurent Sifre during an internship at Google Brain in 2013 as an architectural variation on AlexNet to improve convergence speed and mod ...
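To give a rough sense of why depthwise separable convolutions suit small models, the sketch below compares weight counts for a standard convolution and a depthwise separable one. It assumes the usual definition (one k x k filter per input channel, followed by a 1x1 pointwise convolution) and ignores biases. The function names and the layer sizes in the usage note are illustrative, not taken from MobileNet.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights in a depthwise k x k step plus a 1x1 pointwise step."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1x1 convolution that mixes channels
    return depthwise + pointwise
```

For a hypothetical 3x3 layer mapping 32 channels to 64, the standard form needs 18,432 weights while the separable form needs 2,336, which is about 8 times fewer.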