MobileNet

MobileNet is a family of convolutional neural network (CNN) architectures designed for image classification, object detection, and other computer vision tasks. The models are designed for small size, low latency, and low power consumption, making them suitable for on-device inference and edge computing on resource-constrained devices such as mobile phones and embedded systems. They were originally designed to run efficiently on mobile devices with TensorFlow Lite. The need for efficient deep learning models on mobile devices led researchers at Google to develop MobileNet. The family has four versions, each improving on the previous one in performance and efficiency.

Features (V1): MobileNetV1 was published in April 2017. Its main architectural innovation was the incorporation of depthwise separable convolutions. This technique was first developed by Laurent Sifre during an internship at Google Brain in 2013 as an architectural variation on AlexNet to improve convergence speed and mod ...
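To make the appeal of depthwise separable convolutions concrete, the sketch below compares weight counts for a standard convolution and for a depthwise convolution followed by a 1x1 pointwise convolution. The layer sizes are illustrative assumptions, not values from the MobileNet paper, and bias terms are ignored.

```python
def standard_conv_params(kernel_size: int, in_channels: int, out_channels: int) -> int:
    """Weights in a standard convolution (bias terms ignored)."""
    return kernel_size * kernel_size * in_channels * out_channels


def depthwise_separable_params(kernel_size: int, in_channels: int, out_channels: int) -> int:
    """Weights in a depthwise convolution followed by a 1x1 pointwise convolution."""
    depthwise = kernel_size * kernel_size * in_channels  # one k x k filter per input channel
    pointwise = in_channels * out_channels               # 1x1 convolution mixes the channels
    return depthwise + pointwise


# Illustrative layer sizes (assumed for this example only).
k, c_in, c_out = 3, 64, 128
standard = standard_conv_params(k, c_in, c_out)
separable = depthwise_separable_params(k, c_in, c_out)
print(f"standard: {standard}, separable: {separable}, ratio: {standard / separable:.2f}")
```

For a fixed kernel size, the saving grows with the number of output channels, because the expensive spatial filtering is no longer repeated for every output channel.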

Neural Architecture Search

Neural architecture search (NAS) is a technique for automating the design of artificial neural networks (ANNs), a widely used model in the field of machine learning. NAS has been used to design networks that are on par with or outperform hand-designed architectures. Methods for NAS can be categorized according to the search space, search strategy, and performance estimation strategy used:

* The search space defines the type(s) of ANN that can be designed and optimized.
* The search strategy defines the approach used to explore the search space.
* The performance estimation strategy evaluates the performance of a possible ANN from its design (without constructing and training it).

NAS is closely related to hyperparameter optimization and meta-learning and is a subfield of automated machine learning (AutoML).

Reinforcement learning: Reinforcement learning (RL) can underpin a NAS search strategy. Barret Zoph and Quoc Viet Le applied NAS with RL targeting the CIFAR-10 datas ...
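The three components of a NAS method (search space, search strategy, performance estimation strategy) can be sketched in a few lines. The example below is a toy under stated assumptions: it uses plain random search rather than reinforcement learning, the search space entries are hypothetical, and `estimate_performance` is a made-up proxy score, not a real accuracy predictor.

```python
import random

# Search space: the architectures that may be proposed (hypothetical choices).
SEARCH_SPACE = {
    "num_layers": [2, 4, 8],
    "width": [16, 32, 64],
    "kernel_size": [3, 5],
}


def sample_architecture(rng: random.Random) -> dict:
    """Search strategy: plain random sampling from the search space."""
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}


def estimate_performance(arch: dict) -> float:
    """Performance estimation: a made-up proxy score computed without training.

    Rewards capacity (layers * width) and subtracts a rough cost term.
    """
    capacity = arch["num_layers"] * arch["width"]
    cost = capacity * arch["kernel_size"] ** 2
    return capacity - 0.01 * cost


def random_search(num_trials: int, seed: int = 0) -> tuple:
    """Sample num_trials architectures and return the best one with its score."""
    rng = random.Random(seed)
    candidates = [sample_architecture(rng) for _ in range(num_trials)]
    best = max(candidates, key=estimate_performance)
    return best, estimate_performance(best)


best_arch, best_score = random_search(num_trials=20, seed=1)
print(best_arch, best_score)
```

Swapping `sample_architecture` for a learned controller is where an RL-based strategy would plug in; the other two components would stay the same.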

Pixel 4

The Pixel 4 and Pixel 4 XL are a pair of Android smartphones designed, developed, and marketed by Google as part of the Google Pixel product line. They collectively serve as the successors to the Pixel 3 and Pixel 3 XL. They were officially announced on October 15, 2019, at the Made by Google event and released in the United States on October 24, 2019. On September 30, 2020, they were succeeded by the Pixel 5.

History: Google confirmed the device's design in June 2019 after renders of it were leaked online. In the United States, the Pixel 4 is the first Pixel phone to be offered for sale by all major wireless carriers at launch. Previous flagship Pixel models had launched as exclusives to Verizon and Google Fi; the midrange Pixel 3a was additionally available from Sprint and T-Mobile, but not AT&T, at its launch. As with all other Pixel releases, Google is offering unlocked U.S. versions through its website. The phones were officially announced on October 15, 2019 and ...

AlexNet

AlexNet is a convolutional neural network architecture developed for image classification tasks, notably achieving prominence through its performance in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). It classifies images into 1,000 distinct object categories and is regarded as the first widely recognized application of deep convolutional networks in large-scale visual recognition. Developed in 2012 by Alex Krizhevsky in collaboration with Ilya Sutskever and his Ph.D. advisor Geoffrey Hinton at the University of Toronto, the model contains 60 million parameters and 650,000 neurons. The original paper's primary result was that the depth of the model was essential for its high performance. That depth was computationally expensive, but training was made feasible by the use of graphics processing units (GPUs). The three formed team SuperVision and submitted AlexNet in the ImageNet Large Scale Visual Recognition Challenge on September 30, 2012. The network ...

Computer Vision

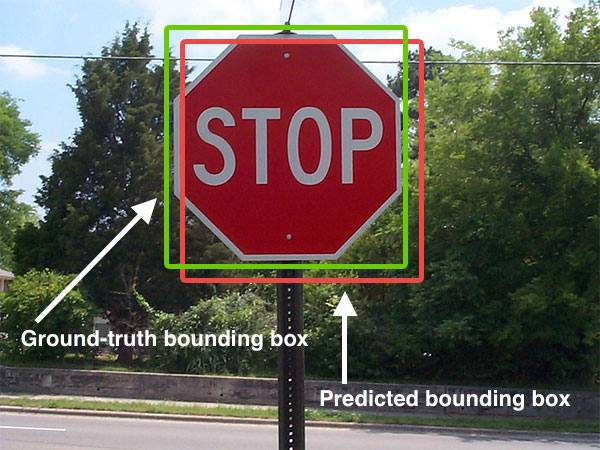

Computer vision tasks include methods for acquiring, processing, analyzing, and understanding digital images, and for extracting high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the form of decisions. "Understanding" in this context signifies the transformation of visual images (the input to the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory. The scientific discipline of computer vision is concerned with the theory behind artificial systems that extract information from images. Image data can take many forms, such as video sequences, views from multiple cameras, multi-dimensional data from a 3D scanner, 3D point clouds ...

Deep Learning

Deep learning is a subset of machine learning that focuses on utilizing multilayered neural networks to perform tasks such as classification, regression, and representation learning. The field takes inspiration from biological neuroscience and is centered around stacking artificial neurons into layers and "training" them to process data. The adjective "deep" refers to the use of multiple layers (ranging from three to several hundred or thousands) in the network. Methods used can be either supervised, semi-supervised or unsupervised. Some common deep learning network architectures include fully connected networks, deep belief networks, recurrent neural networks, convolutional neural networks, generative adversarial networks, transformers, and neural radiance fields. These architectures have been applied to fields including computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, c ...

Convolutional Neural Network

A convolutional neural network (CNN) is a type of feedforward neural network that learns features via filter (or kernel) optimization. This type of deep learning network has been applied to process and make predictions from many different types of data, including text, images, and audio. Convolution-based networks are the de facto standard in deep learning-based approaches to computer vision and image processing, and have only recently been replaced, in some cases, by newer deep learning architectures such as the transformer. Vanishing gradients and exploding gradients, seen during backpropagation in earlier neural networks, are prevented by the regularization that comes from using shared weights over fewer connections. For example, for each neuron in a fully connected layer, 10,000 weights would be required to process an image of 100 × 100 pixels. However, applying cascaded convolution (or cross-correlation) kernels, only 25 weights for each convolutio ...
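The weight-sharing arithmetic above can be checked with a small sketch. It assumes a single-channel 100 × 100 image and a 5 × 5 kernel (the kernel size is inferred from the figure of 25 weights), and the helper names are hypothetical. The same 25 weights are reused at every position of the image.

```python
def dense_weights_per_neuron(height: int, width: int) -> int:
    """A fully connected neuron needs one weight per input pixel."""
    return height * width


def cross_correlate2d(image, kernel):
    """Valid-mode 2D cross-correlation; the kernel weights are shared across positions."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


image = [[1] * 100 for _ in range(100)]   # toy 100 x 100 image of ones
kernel = [[1] * 5 for _ in range(5)]      # 5 x 5 kernel: 25 shared weights
feature_map = cross_correlate2d(image, kernel)

print("dense weights per neuron:", dense_weights_per_neuron(100, 100))
print("kernel weights:", sum(len(row) for row in kernel))
print("feature map size:", len(feature_map), "x", len(feature_map[0]))
```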

Transformer (deep Learning Architecture)

The transformer is a deep learning architecture based on the multi-head attention mechanism, in which text is converted to numerical representations called tokens, and each token is converted into a vector via lookup from a word embedding table. At each layer, each token is then contextualized within the scope of the context window with other (unmasked) tokens via a parallel multi-head attention mechanism, allowing the signal for key tokens to be amplified and less important tokens to be diminished. Transformers have no recurrent units and therefore require less training time than earlier recurrent neural architectures (RNNs) such as long short-term memory (LSTM). Later variations have been widely adopted for training large language models (LLMs) on large (language) datasets. The modern version of the transformer was proposed in the 2017 paper "Attention Is All You Need" by researchers at Google. Transformers were first developed as an improvement ov ...
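As a rough illustration of how attention contextualizes each token against the others, here is a minimal single-head scaled dot-product attention in plain Python. It is a simplification under stated assumptions: real transformers use several heads with learned query, key, and value projections, whereas this sketch feeds the raw token vectors in directly.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Single-head scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0]]  # two toy token embeddings
contextualized = attention(tokens, tokens, tokens)
print(contextualized)
```

Each output row is a mixture of all value vectors, with more weight on the tokens whose keys best match the query.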

Vision Transformer

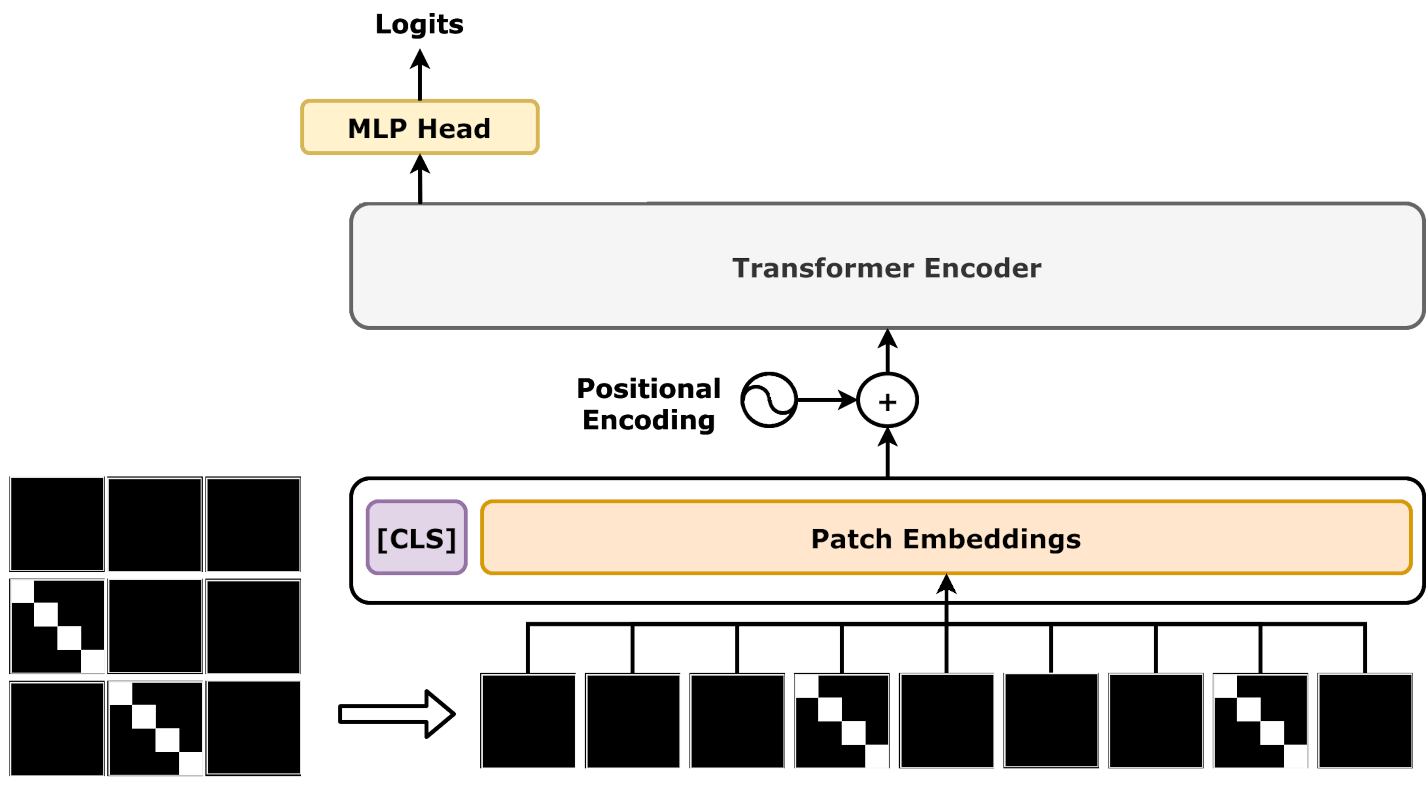

A vision transformer (ViT) is a transformer designed for computer vision. A ViT decomposes an input image into a series of patches (rather than text into tokens), serializes each patch into a vector, and maps it to a smaller dimension with a single matrix multiplication. These vector embeddings are then processed by a transformer encoder as if they were token embeddings. ViTs were designed as alternatives to convolutional neural networks (CNNs) in computer vision applications. They have different inductive biases, training stability, and data efficiency. Compared to CNNs, ViTs are less data efficient, but have higher capacity. Some of the largest modern computer vision models are ViTs, such as one with 22B parameters. Subsequent to its publication, many variants were proposed, including hybrid architectures with features of both ViTs and CNNs. ViTs have found application in image recognition, ...
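The patch step described above can be sketched as follows. This is a toy version under assumptions: the image is a single-channel grid stored as a list of rows, patches do not overlap, and the projection matrix is hand-written rather than learned. The function names are hypothetical.

```python
def image_to_patches(image, patch_size):
    """Split an H x W grid into flattened, non-overlapping patches in row-major order."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patches.append([
                image[top + dy][left + dx]
                for dy in range(patch_size)
                for dx in range(patch_size)
            ])
    return patches


def project(patch, weight):
    """Map a flattened patch to a smaller vector with one matrix multiplication.

    weight has shape (len(patch), embed_dim).
    """
    embed_dim = len(weight[0])
    return [sum(p * weight[i][j] for i, p in enumerate(patch)) for j in range(embed_dim)]


# 4 x 4 toy image with pixel values 0..15, split into four 2 x 2 patches.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
patches = image_to_patches(image, patch_size=2)

# Hand-written 4 -> 2 projection (a learned matrix in a real ViT).
weight = [[1, 0], [0, 1], [1, 0], [0, 1]]
embeddings = [project(patch, weight) for patch in patches]
print(patches)
print(embeddings)
```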

Squeeze-and-excitation Network

A residual neural network (also referred to as a residual network or ResNet) is a deep learning architecture in which the layers learn residual functions with reference to the layer inputs. It was developed in 2015 for image recognition, and won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) of that year. As a point of terminology, "residual connection" refers to the specific architectural motif of $x \mapsto f(x) + x$, where $f$ is an arbitrary neural network module. The motif had been used previously. However, the publication of ResNet made it widely popular for feedforward networks, appearing in neural networks that are seemingly unrelated to ResNet. The residual connection stabilizes the training and convergence of deep neural networks with hundreds of layers, and is a common motif in deep neural networks, such as transformer models (e.g., BERT, and GPT models such as ChatGPT), the AlphaGo Zero system, the AlphaStar system, and the AlphaFold system. ...
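A residual connection is commonly written as adding a module's output back onto its input, that is, computing f(x) + x. The sketch below wraps an arbitrary vector-to-vector function this way; the `residual` helper and the toy modules are hypothetical names chosen for illustration.

```python
from typing import Callable, List

Vector = List[float]


def residual(f: Callable[[Vector], Vector]) -> Callable[[Vector], Vector]:
    """Wrap module f so the block computes f(x) + x."""
    def block(x: Vector) -> Vector:
        fx = f(x)
        if len(fx) != len(x):
            raise ValueError("f must preserve the vector length for the skip connection")
        return [a + b for a, b in zip(fx, x)]
    return block


# If f outputs zeros, the block reduces to the identity function.
zero_module = residual(lambda x: [0.0 for _ in x])
print(zero_module([1.0, 2.0]))

# A toy module that doubles its input; the block then returns 3x.
doubling_block = residual(lambda x: [2.0 * v for v in x])
print(doubling_block([1.0, -3.0]))
```

Because a block with a near-zero module behaves like the identity, many such blocks can be stacked without the signal being lost, which is one intuition for why the motif helps very deep networks train.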

Activation Function

The activation function of a node in an artificial neural network is a function that calculates the output of the node based on its individual inputs and their weights. Nontrivial problems can be solved using only a few nodes if the activation function is nonlinear. Modern activation functions include the logistic (sigmoid) function used in the 2012 speech recognition model developed by Hinton et al.; the ReLU used in the 2012 AlexNet computer vision model and in the 2015 ResNet model; and the smooth version of the ReLU, the GELU, which was used in the 2018 BERT model.

Comparison of activation functions: Aside from their empirical performance, activation functions also have different mathematical properties:

* Nonlinear: When the activation function is nonlinear, a two-layer neural network can be proven to be a universal function approximator. This is known as the Universal Approximation Theorem. The identity activation function does not satisfy this property. W ...
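One way to see why nonlinearity matters is that stacked layers with the identity activation collapse into a single linear map. The sketch below uses scalar "layers" with arbitrarily chosen integer weights (assumptions for illustration) and contrasts the identity activation with ReLU.

```python
def relu(x):
    """Rectified linear unit."""
    return max(0, x)


def identity(x):
    return x


def two_layer(x, activation, w1=2, b1=1, w2=3, b2=-4):
    """Scalar two-layer network: w2 * activation(w1 * x + b1) + b2."""
    return w2 * activation(w1 * x + b1) + b2


def is_affine_on(points, f):
    """Check that f has a constant slope between consecutive, evenly spaced points."""
    values = [f(p) for p in points]
    steps = [b - a for a, b in zip(values, values[1:])]
    return all(step == steps[0] for step in steps)


points = [-2, -1, 0, 1, 2]

# With the identity activation the network equals the single line 6x - 1.
print([two_layer(x, identity) for x in points])
print(is_affine_on(points, lambda x: two_layer(x, identity)))

# With ReLU the output bends, so it is no longer a single line.
print([two_layer(x, relu) for x in points])
print(is_affine_on(points, lambda x: two_layer(x, relu)))
```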

Sigmoid Function

A sigmoid function is any mathematical function whose graph has a characteristic S-shaped or sigmoid curve. A common example of a sigmoid function is the logistic function, which is defined by the formula $\sigma(x) = \frac{1}{1 + e^{-x}} = \frac{e^x}{1 + e^x} = 1 - \sigma(-x)$. Other sigmoid functions are given in the Examples section. In some fields, most notably in the context of artificial neural networks, the term "sigmoid function" is used as a synonym for "logistic function". Special cases of the sigmoid function include the Gompertz curve (used in modeling systems that saturate at large values of $x$) and the ogee curve (used in the spillway of some dams). Sigmoid functions have a domain of all real numbers, with a return (response) value that is commonly monotonically increasing but may be decreasing. Sigmoid functions most often show a return value ($y$ axis) in the range 0 to 1. Another commonly used range is from −1 to 1. A wide variety of sigmoid functions ...
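The two equivalent forms of the logistic function are useful in practice: choosing between them by the sign of the input avoids overflow in the exponential. The following is a minimal sketch of that idea, offered as one common approach rather than a canonical implementation.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function, evaluated in whichever form keeps exp() from overflowing."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))   # 1 / (1 + e^-x)
    e = math.exp(x)
    return e / (1.0 + e)                     # e^x / (1 + e^x)


for x in (-1000.0, -2.0, 0.0, 2.0, 1000.0):
    # The identity sigma(x) = 1 - sigma(-x) should hold up to rounding.
    print(x, sigmoid(x), 1.0 - sigmoid(-x))
```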

Swish Function

The swish function is a family of mathematical functions defined as follows: $\operatorname{swish}_\beta(x) = x \operatorname{sigmoid}(\beta x) = \frac{x}{1 + e^{-\beta x}}$, where $\beta$ can be constant (usually set to 1) or trainable and "sigmoid" refers to the logistic function. The swish family was designed to smoothly interpolate between a linear function and the ReLU function. When considering positive values, swish is a particular case of the doubly parameterized sigmoid shrinkage function. Variants of the swish function include Mish.

Special values: For $\beta = 0$, the function is linear: $f(x) = x/2$. For $\beta = 1$, the function is the Sigmoid Linear Unit (SiLU). As $\beta \to \infty$, the function converges to ReLU. Thus, the swish family smoothly interpolates between a linear function and the ReLU function. Since $\operatorname{swish}_\beta(x) = \operatorname{swish}_1(\beta x) / \beta$, all instances of swish have the same shape as the default $\operatorname{swish}_1$, zoomed by $\beta$. ...
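The special values listed above can be checked numerically. The sketch below is a plain-Python illustration; the value β = 50 used to approximate the large-β limit is an arbitrary choice for the demonstration.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def swish(x: float, beta: float = 1.0) -> float:
    """swish_beta(x) = x * sigmoid(beta * x); beta = 1 gives SiLU."""
    return x * sigmoid(beta * x)


def relu(x: float) -> float:
    return max(0.0, x)


for x in (-2.0, -0.5, 0.5, 2.0):
    print(
        x,
        swish(x, beta=0.0),   # linear case: x / 2
        swish(x),             # SiLU
        swish(x, beta=50.0),  # close to ReLU for large beta
        relu(x),
    )
```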