A list is a set of discrete items of information collected and set forth in some format for utility, entertainment, or other purposes. A list may be memorialized in any number of ways, including existing only in the mind of the list-maker, but lists are frequently written down on paper, or maintained electronically. Lists are "most frequently a tool", and "one does not ''read'' but only ''uses'' a list: one looks up the relevant information in it, but usually does not need to deal with it as a whole" (Lucie Doležalová, "The Potential and Limitations of Studying Lists", in Lucie Doležalová, ed., ''The Charm of a List: From the Sumerians to Computerised Data Processing'', 2009).

Purpose

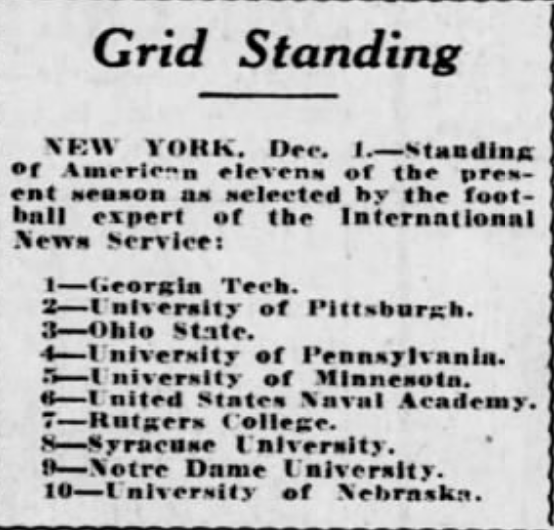

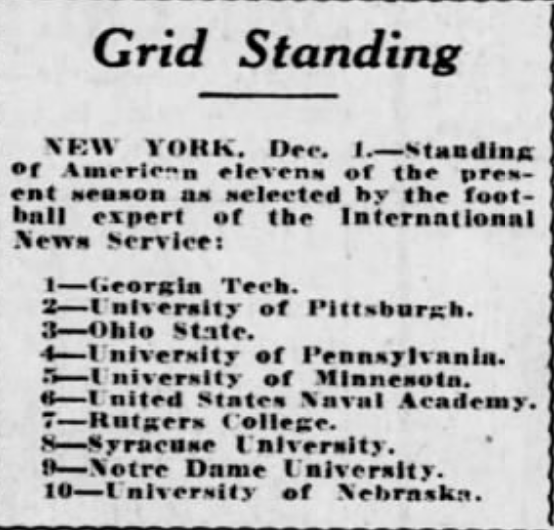

It has been observed that, with a few exceptions, "the scholarship on lists remains fragmented". David Wallechinsky, a co-author of ''The Book of Lists'', described the attraction of lists as being "because we live in an era of overstimulation, especially in terms of information, and lists help us in organizing what is otherwise overwhelming". While many lists have practical purposes, such as memorializing needed household items, lists are also created purely for entertainment, such as lists put out by various music venues of the "best bands" or "best songs" of a certain era. Such lists may be based on objective factors such as record sales and awards received, or may be generated entirely from the subjective opinion of the writer of the list, as musicologist David V. Moskowitz notes (David V. Moskowitz, ed., ''The 100 Greatest Bands of All Time: A Guide to the Legends Who Rocked the World'', 2015, p. vii).

The practice of ordering a list that evaluates things, so that better items are ahead of less good ones, is called ranking. Lists created for the purpose of ranking a subset of an indefinite population (such as the top 100 of the thousands of bands that have performed in a given genre) are almost always presented as round numbers. Studies have determined that a list of items falling within a round number has a substantial psychological impact, such that "the difference between items ranked No. 10 and No. 11 feels enormous and significant, even if it's actually quite minimal or unknown". The same list may serve different purposes for different people. A list of currently popular songs may provide the average person with suggestions for music that they may want to sample, but to a record company executive, the same list would indicate trends regarding the kinds of artists to sign to maximize future profits.

Organizing principles

Lists may be organized by a number of different principles. For example, a shopping list or a list of places to visit while vacationing might each be organized by priority (with the most important or most desired items at the top and the least important or least desired at the bottom), or by proximity, so that following the list will take the shopper or vacationer on the most efficient route.
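As a minimal sketch of these two organizing principles, the same shopping list can be sorted by priority or by proximity. The `Item` class, the priority scale, and the `aisle` field (standing in for position along the shopper's route) are illustrative assumptions, not part of any standard.

```python
from dataclasses import dataclass


@dataclass
class Item:
    name: str
    priority: int  # hypothetical scale: 1 = most important
    aisle: int     # hypothetical position along the shopper's route


shopping = [
    Item("milk", priority=2, aisle=7),
    Item("bread", priority=1, aisle=3),
    Item("batteries", priority=3, aisle=1),
]

# Same items, two organizing principles.
by_priority = sorted(shopping, key=lambda item: item.priority)
by_proximity = sorted(shopping, key=lambda item: item.aisle)

print([item.name for item in by_priority])
print([item.name for item in by_proximity])
```

Only the sort key changes between the two orderings; the items themselves are untouched.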

A list may also completely lack any principle of organization, if it does not serve a purpose for which such a principle is needed. An unsorted list is one "in which data items are placed in no particular order with respect to their content; the only relationships between data elements consist of the list predecessor and successor relationships". For example, in her book ''Seriously... I'm Kidding'', comedian Ellen DeGeneres provides a list of acknowledgements, notes her difficulty in determining how to order the list, and ultimately writes: "This list is in no particular order. Just because someone is first doesn't mean they're the most important. It doesn't mean they're not the most important either". A list that is sorted by some principle may be said to be following a ranking or sequence.
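The predecessor and successor relationships of an unsorted list can be illustrated with a short sketch. The helper name `neighbors` and the sample items are hypothetical; the point is only that position, not content, determines each item's neighbours.

```python
from typing import Optional, Sequence, Tuple


def neighbors(items: Sequence[str], index: int) -> Tuple[Optional[str], Optional[str]]:
    """Return the (predecessor, successor) of the item at `index`, or None at the ends."""
    predecessor = items[index - 1] if index > 0 else None
    successor = items[index + 1] if index + 1 < len(items) else None
    return predecessor, successor


# Items in no particular order with respect to their content.
items = ["pen", "apple", "map"]

print(neighbors(items, 0))
print(neighbors(items, 1))
print(neighbors(items, 2))
```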

Items on a list are often delineated by bullet points or a numbering scheme.

Kinds of lists

Kinds of lists used in everyday life include:

* Shopping list: a list of items needed to be purchased by a shopper, such as a list of groceries to be purchased on the next visit to the grocery store (a grocery list)

* To-do list or task list: a list or "backlog" of pending tasks

* Checklist: a type of job aid used in repetitive tasks to reduce failure by compensating for potential limits of human memory and attention

* Roster: a list of people scheduled to participate in a task, such as employees of a company, or, more specifically, professional athletes set to participate in a specific sporting event

* Wish list: an itemization of goods or services that a person or organization desires

Many highly specialized kinds of lists also exist. For example, a table of contents is a list of the chapters or other features of a written work, usually at the beginning of that work, and an index is a list of concepts or terms found in such a work, usually at the end of the work, and usually indicating where in the work the concepts or terms can be found. A track list is a list of songs on an album, and a set list is a list of songs that a band will regularly play in concerts during a tour. A word list is a list of the lexicon of a language (generally sorted by frequency of occurrence either by levels or as a ranked list) within some given text corpus, serving the purpose of vocabulary acquisition.
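A frequency-ranked word list of the kind described above can be sketched in a few lines. The function name `word_list`, the simple tokenizing pattern, and the sample corpus are assumptions made for illustration; real word lists typically rely on more careful tokenization and much larger corpora.

```python
import re
from collections import Counter
from typing import List, Tuple


def word_list(corpus: str) -> List[Tuple[str, int]]:
    """Return (word, count) pairs, most frequent first; ties are broken alphabetically."""
    words = re.findall(r"[a-z']+", corpus.lower())
    counts = Counter(words)
    return sorted(counts.items(), key=lambda pair: (-pair[1], pair[0]))


corpus = "the cat saw the dog and the dog saw the bird"

# Print each word with its rank and frequency.
for rank, (word, count) in enumerate(word_list(corpus), start=1):
    print(rank, word, count)
```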

Many connoisseurs or experts in particular areas will assemble "best of" lists containing things that are considered the best examples within that area. Where such lists are open to a wide array of subjective considerations, such as a list of best poems, best songs, or best athletes in a particular sport, experts with differing opinions may engage in lengthy debates over which items belong on the list, and in which order.

Task lists

A task list (also called a to-do list or "things-to-do") is a list of tasks to be completed, such as chores or steps toward completing a project. It is an inventory tool which serves as an alternative or supplement to memory. Writer Julie Morgenstern suggests "do's and don'ts" of time management that include mapping out everything that is important, by making a task list. Task lists are also used in business management, project management, and software development, and may involve more than one list.

When one of the items on a task list is accomplished, the task is checked or crossed off. The traditional method is to write these on a piece of paper with a pen or pencil, usually on a notepad or clipboard. Task lists can also have the form of paper or software checklists. Numerous digital equivalents are now available, including personal information management (PIM) applications and most PDAs. There are also several web-based task list applications, many of which are free.

Task list organization

Task lists are often diarized and tiered. The simplest tiered system includes a general to-do list (or task-holding file) to record all the tasks the person needs to accomplish and a daily to-do list which is created each day by transferring tasks from the general to-do list. An alternative is to create a "not-to-do list", to avoid unnecessary tasks.

Task lists are often prioritized in the following ways.

* A daily list of things to do, numbered in the order of their importance and done in that order one at a time as daily time allows, is attributed to consultant Ivy Lee (1877–1934) as the most profitable advice received by Charles M. Schwab (1862–1939), president of the Bethlehem Steel Corporation.

* An early advocate of "ABC" prioritization was Alan Lakein, in 1973. In his system "A" items were the most important ("A-1" the most important within that group), "B" next most important, "C" least important.

* A particular method of applying the ''ABC method'' assigns "A" to tasks to be done within a day, "B" a week, and "C" a month.

* To prioritize a daily task list, one either records the tasks in the order of highest priority, or assigns them a number after they are listed ("1" for highest priority, "2" for second highest priority, etc.) which indicates in which order to execute the tasks. The latter method is generally faster, allowing the tasks to be recorded more quickly.

* Another way of prioritizing compulsory tasks (group A) is to put the most unpleasant one first. When it is done, the rest of the list feels easier. Groups B and C can benefit from the same idea, but there, instead of prompting the person to do the first (most unpleasant) task right away, it provides motivation to do other tasks from the list in order to avoid the first one.
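The tiered system and the "ABC" prioritization described above can be combined in a small sketch. The `Task` class, the `daily_list` helper, and the `capacity` parameter (how many tasks fit in a day) are hypothetical names introduced for illustration, not part of any published method.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Task:
    title: str
    group: str  # "A", "B", or "C", with "A" the most important
    rank: int   # order within the group, so ("A", 1) corresponds to "A-1"


def daily_list(general: List[Task], capacity: int) -> List[Task]:
    """Build a daily list from the general list, taking the highest-priority tasks first."""
    ordered = sorted(general, key=lambda task: (task.group, task.rank))
    return ordered[:capacity]


general = [
    Task("file expenses", "B", 1),
    Task("call client", "A", 2),
    Task("tidy desk", "C", 1),
    Task("finish report", "A", 1),
]

today = daily_list(general, capacity=3)
print([f"{task.group}-{task.rank} {task.title}" for task in today])
```

Sorting on the pair `(group, rank)` places every "A" task ahead of every "B" task, and orders tasks within a group by their number.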

A completely different approach, which argues ''against'' prioritizing altogether, was put forward by British author Mark Forster in his book ''Do It Tomorrow and Other Secrets of Time Management''. It is based on the idea of operating "closed" to-do lists instead of the traditional "open" to-do list. He argues that traditional never-ending to-do lists virtually guarantee that some work will be left undone. His approach advocates getting all of one's work done every day; when that proves impossible, the shortfall helps to diagnose what is going wrong and what needs to change.

Various writers have stressed potential difficulties with to-do lists such as the following.

* Management of the list can take over from implementing it. This can be a form of procrastination, in which the planning activity is prolonged, and is akin to analysis paralysis. As with any activity, there is a point of diminishing returns.

* To remain flexible, a task system must allow for disaster. A company must be ready for a disaster: even a small one, if no one has made time for it, can metastasize, potentially causing damage to the company.

* To avoid getting stuck in a wasteful pattern, the task system should also include regular (monthly, semi-annual, and annual) planning and system-evaluation sessions, to weed out inefficiencies and ensure the user is headed in the direction he or she truly desires.

* If some time is not regularly spent on achieving long-range goals, the individual may get stuck in a perpetual holding pattern on short-term plans, like staying at a particular job much longer than originally planned.

See also

* A-list

* Blacklist/Whitelist

* ''The Book of Lists''

* Difference list

* ''The Infinity of Lists'' (2009) by Umberto Eco, on the topic of lists

* Life list

* Linked list

* List (abstract data type), in computer science

* List comprehension

* List of lists of lists

* Outline (list)

* Self-organizing list

* Short list

* Wait list

* Word list
