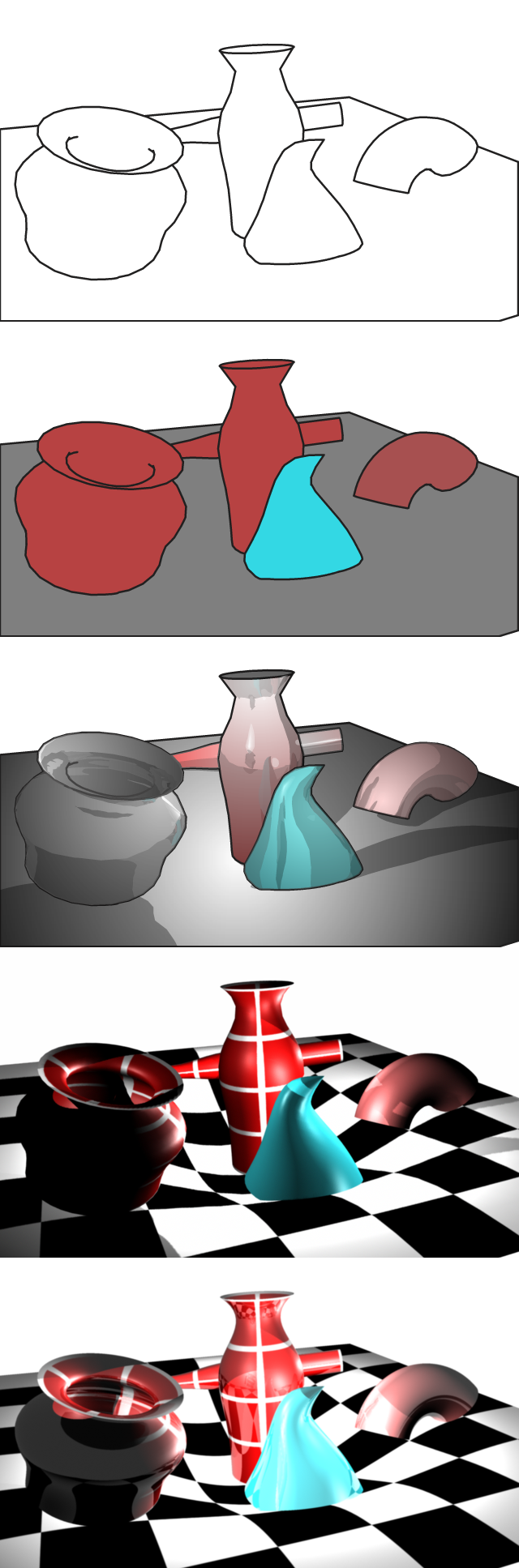

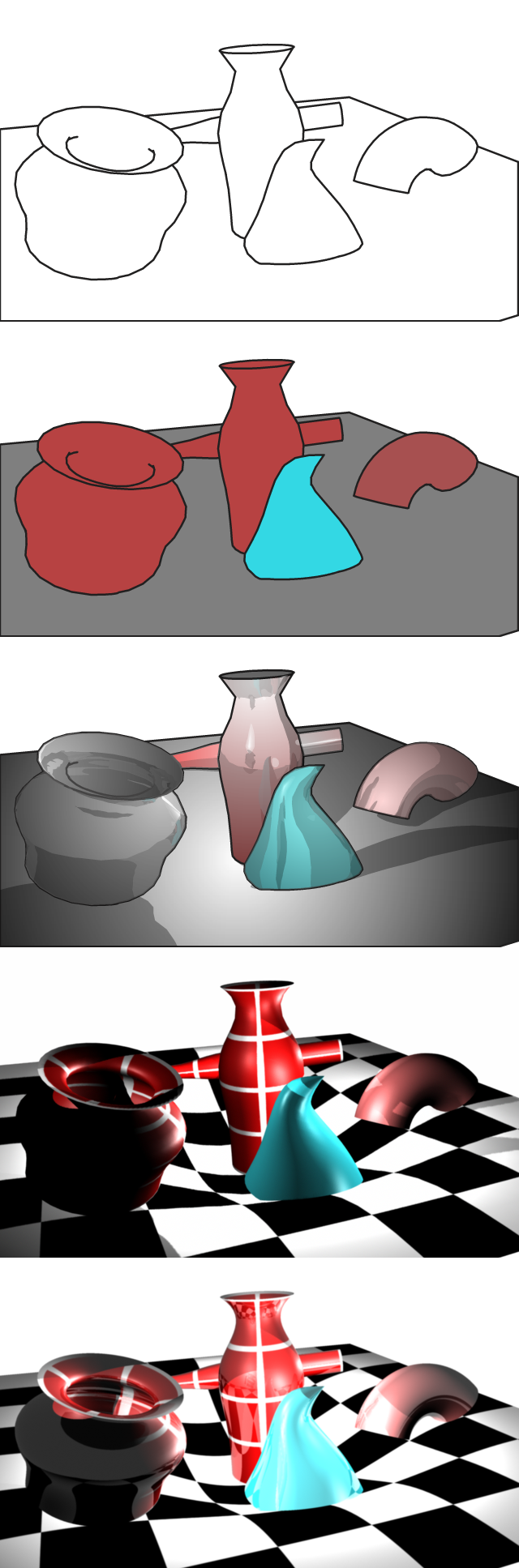

* 1968 Ray casting

* 1970 Scanline rendering

* 1971 Gouraud shading

* 1973 Phong shading

* 1973 Phong reflection

* 1973 Diffuse reflection

* 1973 Specular highlight

* 1973 Specular reflection

* 1974 Sprites

* 1974 Scrolling

* 1974 Texture mapping

* 1974 Z-buffering

* 1976 Environment mapping

* 1977 Blinn shading

* 1977 Side-scrolling

* 1977 Shadow volumes

* 1978 Shadow mapping

* 1978 Bump mapping

* 1979 ''

"Generalization of Lambert's Reflectance Model". SIGGRAPH. pp. 239-246, Jul 1994.

* 1993 Tone mapping

* 1993 Subsurface scattering

* 1994 Ambient occlusion

* 1995 ''

Rendering or image synthesis is the process of generating a photorealistic or non-photorealistic image from a 2D or 3D model by means of a computer program. The resulting image is referred to as the render. Multiple models can be defined in a scene file containing objects in a strictly defined language or data structure. The scene file contains geometry, viewpoint, texture, lighting, and shading information describing the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The term "rendering" is analogous to the concept of an artist's impression of a scene. The term "rendering" is also used to describe the process of calculating effects in a video editing program to produce the final video output.

Rendering is one of the major sub-topics of 3D computer graphics, and in practice it is always connected to the others. It is the last major step in the graphics pipeline, giving models and animation their final appearance. With the increasing sophistication of computer graphics since the 1970s, it has become a more distinct subject.

Rendering has uses in architecture, video games, simulators, movie and TV visual effects, and design visualization, each employing a different balance of features and techniques. A wide variety of renderers are available for use. Some are integrated into larger modeling and animation packages, some are stand-alone, and some are free open-source projects. On the inside, a renderer is a carefully engineered program based on multiple disciplines, including light physics, visual perception, mathematics, and software development.

Though the technical details of rendering methods vary, the general challenges to overcome in producing a 2D image on a screen from a 3D representation stored in a scene file are handled by the graphics pipeline in a rendering device such as a GPU. A GPU is a purpose-built device that assists a CPU in performing complex rendering calculations. If a scene is to look relatively realistic and predictable under virtual lighting, the rendering software must solve the rendering equation. The rendering equation does not account for all lighting phenomena, but instead acts as a general lighting model for computer-generated imagery.

In the case of 3D graphics, scenes can be pre-rendered or generated in real time. Pre-rendering is a slow, computationally intensive process that is typically used for movie creation, where scenes can be generated ahead of time, while real-time rendering is often done for 3D video games and other applications that must dynamically create scenes. 3D hardware accelerators can improve real-time rendering performance.

Usage

When the pre-image (usually a wireframe sketch) is complete, rendering is used, which adds in bitmap textures or procedural textures, lights, bump mapping and relative position to other objects. The result is a completed image the consumer or intended viewer sees.

For movie animations, several images (frames) must be rendered, and stitched together in a program capable of making an animation of this sort. Most 3D image editing programs can do this.

Features

A rendered image can be understood in terms of a number of visible features. Rendering research and development has been largely motivated by finding ways to simulate these efficiently. Some relate directly to particular algorithms and techniques, while others are produced together.

* Shading: how the color and brightness of a surface varies with lighting

* Texture-mapping: a method of applying detail to surfaces

* Bump-mapping: a method of simulating small-scale bumpiness on surfaces

* Fogging/participating medium: how light dims when passing through non-clear atmosphere or air

* Shadows: the effect of obstructing light

* Soft shadows: varying darkness caused by partially obscured light sources

* Reflection: mirror-like or highly glossy reflection

* Transparency or opacity: sharp transmission of light through solid objects

* Translucency: highly scattered transmission of light through solid objects

* Refraction: bending of light associated with transparency

* Diffraction: bending, spreading, and interference of light passing by an object or aperture that disrupts the ray

* Indirect illumination: surfaces illuminated by light reflected off other surfaces, rather than directly from a light source (also known as global illumination)

* Caustics (a form of indirect illumination): reflection of light off a shiny object, or focusing of light through a transparent object, to produce bright highlights on another object

* Depth of field: objects appear blurry or out of focus when too far in front of or behind the object in focus

* Motion blur: objects appear blurry due to high-speed motion, or the motion of the camera

* Non-photorealistic rendering: rendering of scenes in an artistic style, intended to look like a painting or drawing

Techniques

Many rendering algorithms have been researched, and software used for rendering may employ a number of different techniques to obtain a final image.

Tracing every particle of light in a scene is nearly always completely impractical and would take a stupendous amount of time. Even tracing a portion large enough to produce an image takes an inordinate amount of time if the sampling is not intelligently restricted.

Therefore, a few loose families of more-efficient light transport modeling techniques have emerged:

* rasterization, including scanline rendering, geometrically projects objects in the scene to an image plane, without advanced optical effects;

* ray casting considers the scene as observed from a specific point of view, calculating the observed image based only on geometry and very basic optical laws of reflection intensity, and perhaps using Monte Carlo techniques to reduce artifacts;

* ray tracing is similar to ray casting, but employs more advanced optical simulation, and usually uses Monte Carlo techniques to obtain more realistic results, at a speed that is often orders of magnitude slower.

The fourth type of light transport technique, radiosity, is not usually implemented as a rendering technique but instead calculates the passage of light as it leaves the light source and illuminates surfaces. These surfaces are usually rendered to the display using one of the other three techniques.

Most advanced software combines two or more of the techniques to obtain good-enough results at reasonable cost.

Another distinction is between image order algorithms, which iterate over pixels of the image plane, and object order algorithms, which iterate over objects in the scene. Generally object order is more efficient, as there are usually fewer objects in a scene than pixels.
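The two loop orders can be illustrated with a minimal sketch. The scene below (two axis-aligned rectangles on a tiny character grid) and all names in it are assumptions made for illustration only; both functions should produce the same image and differ only in which loop is outermost.

```python
# Hypothetical scene: half-open rectangles (x0, y0, x1, y1, color).
# Later rectangles are drawn on top of earlier ones.
WIDTH, HEIGHT = 8, 4
BACKGROUND = "."
RECTS = [
    (1, 0, 5, 3, "a"),
    (3, 1, 7, 4, "b"),
]


def covers(rect, x, y):
    """Return True if pixel (x, y) lies inside the half-open rectangle."""
    x0, y0, x1, y1, _ = rect
    return x0 <= x < x1 and y0 <= y < y1


def render_image_order(rects):
    """Image order: the outer loops visit pixels; each pixel searches the primitives."""
    image = []
    for y in range(HEIGHT):
        row = []
        for x in range(WIDTH):
            color = BACKGROUND
            for rect in rects:  # later rectangles overwrite earlier ones
                if covers(rect, x, y):
                    color = rect[4]
            row.append(color)
        image.append(row)
    return image


def render_object_order(rects):
    """Object order: the outer loop visits primitives; each writes only its own pixels."""
    image = [[BACKGROUND] * WIDTH for _ in range(HEIGHT)]
    for x0, y0, x1, y1, color in rects:
        for y in range(max(y0, 0), min(y1, HEIGHT)):
            for x in range(max(x0, 0), min(x1, WIDTH)):
                image[y][x] = color
    return image


if __name__ == "__main__":
    for row in render_object_order(RECTS):
        print("".join(row))
```

In this sketch the object order version never touches pixels that no rectangle covers, while the image order version tests every rectangle at every pixel.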

Scanline rendering and rasterization

A high-level representation of an image necessarily contains elements in a different domain from pixels. These elements are referred to as primitives. In a schematic drawing, for instance, line segments and curves might be primitives. In a graphical user interface, windows and buttons might be the primitives. In rendering of 3D models, triangles and polygons in space might be primitives.

If a pixel-by-pixel (image order) approach to rendering is impractical or too slow for some task, then a primitive-by-primitive (object order) approach to rendering may prove useful. Here, one loops through each of the primitives, determines which pixels in the image it affects, and modifies those pixels accordingly. This is called rasterization, and is the rendering method used by all current graphics cards.

Rasterization is frequently faster than pixel-by-pixel rendering. First, large areas of the image may be empty of primitives; rasterization will ignore these areas, but pixel-by-pixel rendering must pass through them. Second, rasterization can improve cache coherency and reduce redundant work by taking advantage of the fact that the pixels occupied by a single primitive tend to be contiguous in the image. For these reasons, rasterization is usually the approach of choice when interactive rendering is required; however, the pixel-by-pixel approach can often produce higher-quality images and is more versatile because it does not depend on as many assumptions about the image as rasterization.

The older form of rasterization is characterized by rendering an entire face (primitive) as a single color. Alternatively, rasterization can be done in a more complicated manner by first rendering the vertices of a face and then rendering the pixels of that face as a blending of the vertex colors. This version of rasterization has overtaken the old method as it allows the graphics to flow without complicated textures (a rasterized image when used face by face tends to have a very block-like effect if not covered in complex textures; the faces are not smooth because there is no gradual color change from one primitive to the next). This newer method of rasterization utilizes the graphics card's more taxing shading functions and still achieves better performance because the simpler textures stored in memory use less space. Sometimes designers will use one rasterization method on some faces and the other method on others based on the angle at which that face meets other joined faces, thus increasing speed and not hurting the overall effect.
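The difference between the two forms of rasterization can be sketched as follows. This is a minimal illustration, not how any particular graphics card works: it assumes a single 2D triangle in pixel coordinates, samples at pixel centers, and uses barycentric weights (one common way to blend vertex colors). The function and parameter names are hypothetical.

```python
import math


def edge(ax, ay, bx, by, px, py):
    """Twice the signed area of the triangle (a, b, p)."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize_triangle(verts, colors, width, height, flat=False):
    """Return {(x, y): (r, g, b)} for pixel centers covered by the triangle.

    verts  -- three (x, y) vertex positions in pixel coordinates
    colors -- three (r, g, b) vertex colors
    flat   -- if True, paint the whole face with the first vertex color
    """
    (x0, y0), (x1, y1), (x2, y2) = verts
    area = edge(x0, y0, x1, y1, x2, y2)
    if area == 0:
        return {}  # degenerate triangle covers nothing
    # Visit only the bounding box of the primitive, clipped to the image.
    min_x = max(math.floor(min(x0, x1, x2)), 0)
    max_x = min(math.floor(max(x0, x1, x2)) + 1, width)
    min_y = max(math.floor(min(y0, y1, y2)), 0)
    max_y = min(math.floor(max(y0, y1, y2)) + 1, height)
    pixels = {}
    for y in range(min_y, max_y):
        for x in range(min_x, max_x):
            px, py = x + 0.5, y + 0.5  # sample at the pixel center
            # Barycentric weights; dividing by area handles either winding.
            w0 = edge(x1, y1, x2, y2, px, py) / area
            w1 = edge(x2, y2, x0, y0, px, py) / area
            w2 = edge(x0, y0, x1, y1, px, py) / area
            if w0 < 0 or w1 < 0 or w2 < 0:
                continue  # pixel center lies outside the triangle
            if flat:
                pixels[(x, y)] = colors[0]
            else:
                pixels[(x, y)] = tuple(
                    w0 * c0 + w1 * c1 + w2 * c2 for c0, c1, c2 in zip(*colors)
                )
    return pixels
```

With `flat=True` every covered pixel receives the same color, which gives the block-like look described above; with `flat=False` the color changes gradually across the face.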

Ray casting

In ray casting the geometry which has been modeled is parsed pixel by pixel, line by line, from the point of view outward, as if casting rays out from the point of view. Where an object is intersected, the color value at the point may be evaluated using several methods. In the simplest, the color value of the object at the point of intersection becomes the value of that pixel. The color may be determined from a texture-map. A more sophisticated method is to modify the color value by an illumination factor, but without calculating the relationship to a simulated light source. To reduce artifacts, a number of rays in slightly different directions may be averaged.

Ray casting involves calculating the view direction (from the camera position), and incrementally following along that ray cast through solid 3D objects in the scene, while accumulating the resulting value from each point in 3D space. This is related and similar to ray tracing, except that the ray cast is usually not bounced off surfaces (whereas ray tracing traces out the light's path, including bounces). Ray casting implies that the light ray follows a straight path (which may include traveling through semi-transparent objects). The ray cast is a vector that can originate from the camera or from the scene endpoint ("back to front", or "front to back"). Sometimes the final light value is derived from a transfer function and sometimes it is used directly.

Rough simulations of optical properties may be additionally employed: a simple calculation of the ray from the object to the point of view is made. Another calculation is made of the angle of incidence of light rays from the light source(s), and from these as well as the specified intensities of the light sources, the value of the pixel is calculated. Another simulation uses illumination plotted from a radiosity algorithm, or a combination of these two.

Ray tracing

Ray tracing aims to simulate the natural flow of light, interpreted as particles. Often, ray tracing methods are utilized to approximate the solution to the rendering equation by applying Monte Carlo methods to it. Some of the most used methods are path tracing, bidirectional path tracing, and Metropolis light transport, but semi-realistic methods are also in use, such as Whitted-style ray tracing, or hybrids. While most implementations let light propagate on straight lines, applications exist to simulate relativistic spacetime effects.

In a final, production quality rendering of a ray traced work, multiple rays are generally shot for each pixel, and traced not just to the first object of intersection, but rather, through a number of sequential 'bounces', using the known laws of optics such as "angle of incidence equals angle of reflection" and more advanced laws that deal with refraction and surface roughness.

Once the ray either encounters a light source, or more probably once a set limiting number of bounces has been evaluated, then the surface illumination at that final point is evaluated using techniques described above, and the changes along the way through the various bounces evaluated to estimate a value observed at the point of view. This is all repeated for each sample, for each pixel.
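The bounce-and-limit procedure described above can be sketched in a few lines. The scene here is an assumption made purely for illustration (one mirror sphere and one glowing sphere, with brightness as a single number), and only perfect mirror reflection is modeled; refraction and surface roughness are left out.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)


def reflect(direction, normal):
    """Mirror reflection: angle of incidence equals angle of reflection."""
    d = dot(direction, normal)
    return tuple(di - 2.0 * d * ni for di, ni in zip(direction, normal))


def hit_sphere(origin, direction, center, radius):
    """Nearest positive distance along a unit-length ray to a sphere, or None."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:  # ignore hits behind or exactly at the ray origin
            return t
    return None


# Hypothetical scene: (center, radius, emitted brightness, mirror reflectivity)
SPHERES = [
    ((0.0, 0.0, -5.0), 1.0, 0.0, 0.8),  # mirror ball in front of the camera
    ((0.0, 0.0, 5.0), 1.0, 1.0, 0.0),   # glowing ball behind the camera
]


def trace(origin, direction, depth=0, max_depth=4):
    """Follow one ray through at most max_depth mirror bounces; return brightness."""
    if depth > max_depth:
        return 0.0  # bounce limit reached
    nearest = None
    for center, radius, emitted, reflectivity in SPHERES:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, center, emitted, reflectivity)
    if nearest is None:
        return 0.0  # ray left the scene
    t, center, emitted, reflectivity = nearest
    if reflectivity == 0.0:
        return emitted  # reached a light source
    point = tuple(o + t * d for o, d in zip(origin, direction))
    normal = normalize(tuple(p - c for p, c in zip(point, center)))
    bounced = reflect(direction, normal)
    return emitted + reflectivity * trace(point, bounced, depth + 1, max_depth)
```

A production renderer would repeat `trace` for several samples per pixel and average the results, as the text describes.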

In distribution ray tracing, at each point of intersection, multiple rays may be spawned. In path tracing, however, only a single ray or none is fired at each intersection, utilizing the statistical nature of Monte Carlo experiments.
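The statistical idea behind these methods can be shown with a small Monte Carlo estimator. This is a sketch under stated assumptions, not a path tracer: it estimates only the light reflected at a single surface point, assumes a diffuse (Lambertian) surface whose reflectance is `albedo / pi`, samples directions uniformly over the hemisphere, and takes the incoming light as a user-supplied function. All names are hypothetical.

```python
import math
import random


def sample_hemisphere(rng):
    """Uniformly sample a unit direction on the hemisphere around +z."""
    z = rng.random()  # cos(theta) is uniform under uniform solid-angle sampling
    phi = 2.0 * math.pi * rng.random()
    r = math.sqrt(max(0.0, 1.0 - z * z))
    return (r * math.cos(phi), r * math.sin(phi), z)


def estimate_reflected(incoming, albedo, samples, seed=0):
    """Monte Carlo estimate of light reflected by a diffuse surface.

    incoming -- function mapping a direction to the incoming light from it
    albedo   -- fraction of light the surface reflects (assumed Lambertian)
    samples  -- number of random directions to average
    """
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)  # density of uniform hemisphere sampling
    brdf = albedo / math.pi      # constant reflectance of a Lambertian surface
    total = 0.0
    for _ in range(samples):
        direction = sample_hemisphere(rng)
        cos_theta = direction[2]  # cosine of the angle to the surface normal
        total += incoming(direction) * brdf * cos_theta / pdf
    return total / samples


if __name__ == "__main__":
    # Uniform white surroundings: the exact answer equals the albedo.
    print(estimate_reflected(lambda d: 1.0, albedo=0.5, samples=20000))
```

In a path tracer the `incoming` function would itself be evaluated by following a single further ray, which is why one ray per intersection is enough when many samples are averaged.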

As a brute-force method, ray tracing has been too slow to consider for real-time, and until recently too slow even to consider for short films of any degree of quality, although it has been used for special effects sequences, and in advertising, where a short portion of high quality (perhaps even photorealistic) footage is required.

However, efforts at optimizing to reduce the number of calculations needed in portions of a work where detail is not high or does not depend on ray tracing features have led to a realistic possibility of wider use of ray tracing. There is now some hardware accelerated ray tracing equipment, at least in prototype phase, and some game demos which show use of real-time software or hardware ray tracing.

Neural rendering

Neural rendering is a rendering method using artificial neural networks. Neural rendering includes image-based rendering methods that are used to reconstruct 3D models from 2-dimensional images. One of these methods is photogrammetry, which is a method in which a collection of images from multiple angles of an object are turned into a 3D model. There have also been recent developments in generating and rendering 3D models from text and coarse paintings, notably by NVIDIA, Google and various other companies.

Radiosity

Radiosity is a method which attempts to simulate the way in which directly illuminated surfaces act as indirect light sources that illuminate other surfaces. This produces more realistic shading and seems to better capture the 'ambience' of an indoor scene. A classic example is the way that shadows 'hug' the corners of rooms.

The optical basis of the simulation is that some diffused light from a given point on a given surface is reflected in a large spectrum of directions and illuminates the area around it.

The simulation technique may vary in complexity. Many renderings have a very rough estimate of radiosity, simply illuminating an entire scene very slightly with a factor known as ambiance. However, when advanced radiosity estimation is coupled with a high quality ray tracing algorithm, images may exhibit convincing realism, particularly for indoor scenes.

In advanced radiosity simulation, recursive, finite-element algorithms 'bounce' light back and forth between surfaces in the model, until some recursion limit is reached. The colouring of one surface in this way influences the colouring of a neighbouring surface, and vice versa. The resulting values of illumination throughout the model (sometimes including for empty spaces) are stored and used as additional inputs when performing calculations in a ray-casting or ray-tracing model.

Due to the iterative/recursive nature of the technique, complex objects are particularly slow to emulate. Prior to the standardization of rapid radiosity calculation, some digital artists used a technique referred to loosely as false radiosity by darkening areas of texture maps corresponding to corners, joints and recesses, and applying them via self-illumination or diffuse mapping for scanline rendering. Even now, advanced radiosity calculations may be reserved for calculating the ambiance of the room, from the light reflecting off walls, floor and ceiling, without examining the contribution that complex objects make to the radiosity, or complex objects may be replaced in the radiosity calculation with simpler objects of similar size and texture.

Radiosity calculations are viewpoint independent which increases the computations involved, but makes them useful for all viewpoints. If there is little rearrangement of radiosity objects in the scene, the same radiosity data may be reused for a number of frames, making radiosity an effective way to improve on the flatness of ray casting, without seriously impacting the overall rendering time-per-frame.
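The back-and-forth bouncing of light between surfaces can be sketched as a simple fixed-point iteration. The example assumes the scene has already been divided into patches and that the form factors (the fraction of light leaving one patch that arrives at another) are given; the two-patch values in the usage note are made up for illustration, and the function name is hypothetical.

```python
def solve_radiosity(emission, reflectance, form_factors,
                    max_iterations=100, tolerance=1e-9):
    """Iterate B_i = E_i + rho_i * sum_j F_ij * B_j until the values settle.

    emission     -- light emitted by each patch (E)
    reflectance  -- fraction of incoming light each patch reflects (rho)
    form_factors -- form_factors[i][j] is the assumed coupling from patch j to patch i
    """
    n = len(emission)
    radiosity = list(emission)  # start with emitted light only
    for _ in range(max_iterations):  # the iteration limit
        updated = [
            emission[i]
            + reflectance[i]
            * sum(form_factors[i][j] * radiosity[j] for j in range(n))
            for i in range(n)
        ]
        change = max(abs(a - b) for a, b in zip(updated, radiosity))
        radiosity = updated
        if change < tolerance:
            break  # further bounces no longer change the result
    return radiosity


if __name__ == "__main__":
    # Two facing patches: the first emits light, the second only reflects it.
    print(solve_radiosity([1.0, 0.0], [0.5, 0.5], [[0.0, 0.5], [0.5, 0.0]]))
```

Nothing in the result depends on a camera position, which is why the same values can be reused across frames as long as the patches do not move.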

Because of this, radiosity is a prime component of leading real-time rendering methods, and has been used from beginning-to-end to create a large number of well-known recent feature-length animated 3D-cartoon films.

Sampling and filtering

One problem that any rendering system must deal with, no matter which approach it takes, is the sampling problem. Essentially, the rendering process tries to depict a continuous function from image space to colors by using a finite number of pixels. As a consequence of the Nyquist–Shannon sampling theorem (or Kotelnikov theorem), any spatial waveform that can be displayed must consist of at least two pixels, which is proportional to image resolution. In simpler terms, this expresses the idea that an image cannot display details, peaks or troughs in color or intensity, that are smaller than one pixel.

If a naive rendering algorithm is used without any filtering, high frequencies in the image function will cause ugly aliasing to be present in the final image. Aliasing typically manifests itself as jaggies, or jagged edges on objects where the pixel grid is visible. In order to remove aliasing, all rendering algorithms (if they are to produce good-looking images) must use some kind of low-pass filter on the image function to remove high frequencies, a process called antialiasing.
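One simple form of antialiasing is supersampling with a box filter: several samples are taken inside each pixel and averaged. The sketch below assumes a hypothetical image function (a single slanted edge) and a regular sample grid; real renderers often use other sample patterns and filters.

```python
def inside(x, y):
    """Hypothetical image function: 1.0 on one side of the edge y = 0.3 * x + 1."""
    return 1.0 if y < 0.3 * x + 1.0 else 0.0


def render(width, height, samples_per_axis=1):
    """Average samples_per_axis ** 2 regularly spaced samples per pixel (box filter)."""
    n = samples_per_axis
    image = []
    for py in range(height):
        row = []
        for px in range(width):
            total = 0.0
            for sy in range(n):
                for sx in range(n):
                    # Sample positions are spread evenly inside the pixel.
                    x = px + (sx + 0.5) / n
                    y = py + (sy + 0.5) / n
                    total += inside(x, y)
            row.append(total / (n * n))
        image.append(row)
    return image


if __name__ == "__main__":
    for row in render(8, 4, samples_per_axis=4):
        print(" ".join(f"{v:.2f}" for v in row))
```

With one sample per pixel every value is either 0 or 1, which produces the jagged edge; with more samples, pixels crossed by the edge take intermediate values.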

Optimization

Due to the large number of calculations, a work in progress is usually only rendered in detail appropriate to the portion of the work being developed at a given time, so in the initial stages of modeling, wireframe and ray casting may be used, even where the target output is ray tracing with radiosity. It is also common to render only parts of the scene at high detail, and to remove objects that are not important to what is currently being developed. For real-time, it is appropriate to simplify one or more common approximations, and tune to the exact parameters of the scenery in question, which is also tuned to the agreed parameters to get the most 'bang for the buck'.

Academic core

The implementation of a realistic renderer always has some basic element of physical simulation or emulation: some computation which resembles or abstracts a real physical process. The term "physically based" indicates the use of physical models and approximations that are more general and widely accepted outside rendering. A particular set of related techniques have gradually become established in the rendering community. The basic concepts are moderately straightforward, but intractable to calculate; and a single elegant algorithm or approach has been elusive for more general purpose renderers. In order to meet demands of robustness, accuracy and practicality, an implementation will be a complex combination of different techniques. Rendering research is concerned with both the adaptation of scientific models and their efficient application.

The rendering equation

This is the key academic/theoretical concept in rendering. It serves as the most abstract formal expression of the non-perceptual aspect of rendering. All more complete algorithms can be seen as solutions to particular formulations of this equation.

$$L_o(x, \omega) = L_e(x, \omega) + \int_\Omega L_i(x, \omega')\, f_r(x, \omega', \omega)\, (\omega' \cdot n)\, d\omega'$$

Meaning: at a particular position (x) and direction (ω), the outgoing light (L_o) is the sum of the emitted light (L_e) and the reflected light. The reflected light is the sum of the incoming light (L_i) from all directions (ω' over the hemisphere Ω), multiplied by the surface reflection (f_r) and the incoming angle (ω' · n, where n is the surface normal). By connecting outward light to inward light, via an interaction point, this equation stands for the whole 'light transport', that is, all the movement of light in a scene.

The bidirectional reflectance distribution function

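The bidirectional reflectance distribution function (BRDF) expresses a simple model of light interaction with a surface as follows:

: $f_r(x, \omega', \omega) = \frac{dL_r(x, \omega)}{L_i(x, \omega') \, (\omega' \cdot n) \, d\omega'}$

Here $L_r$ is the light reflected in direction $\omega$, $L_i$ is the light arriving from direction $\omega'$, and $n$ is the surface normal at $x$. Light interaction is often approximated by even simpler models, diffuse reflection and specular reflection, although both can also be BRDFs.

Geometric optics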

Rendering is almost exclusively concerned with the particle aspect of light physics, known as geometrical optics. Treating light, at its basic level, as particles bouncing around is a simplification, but an appropriate one: the wave aspects of light are negligible in most scenes, and are significantly more difficult to simulate. Notable wave aspect phenomena include diffraction (as seen in the colours of CDs and DVDs) and polarisation (as seen in LCDs). Both types of effect, if needed, are made by appearance-oriented adjustment of the reflection model.
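
As a minimal sketch of the "particles bouncing around" view, the snippet below mirror-reflects a ray direction about a surface normal, which is the idealised specular bounce of geometrical optics. The helper names (`normalize`, `dot`, `reflect`) and the example vectors are illustrative assumptions, not taken from any particular renderer.

```python
import math

def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))

def reflect(direction, normal):
    """Mirror-reflect an incoming ray direction about a surface normal.

    Uses r = d - 2 (d . n) n, where n is the unit normal.
    """
    n = normalize(normal)
    d_dot_n = dot(direction, n)
    return tuple(d - 2.0 * d_dot_n * c for d, c in zip(direction, n))

# A ray travelling down and to the right hits a floor whose normal points up.
incoming = normalize((1.0, -1.0, 0.0))
outgoing = reflect(incoming, (0.0, 1.0, 0.0))
print(outgoing)  # the vertical component flips sign; the others are unchanged
```

A real renderer would combine such a bounce with a reflection model that decides how much light follows the mirror direction and how much is scattered diffusely.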

Visual perception

Though it receives less attention, an understanding of human visual perception is valuable to rendering. This is mainly because image displays and human perception have restricted ranges. A renderer can simulate a wide range of light brightness and color, but current displays (movie screen, computer monitor, etc.) cannot handle so much, and something must be discarded or compressed. Human perception also has limits, and so does not need to be given large-range images to create realism. This can help solve the problem of fitting images into displays and, furthermore, suggest what shortcuts could be used in the rendering simulation, since certain subtleties won't be noticeable. This related subject is tone mapping.
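
To make the idea of compressing a wide brightness range concrete, here is a small sketch of one widely used global tone-mapping curve, the simple Reinhard operator L / (1 + L), followed by gamma encoding. The choice of operator, the gamma value of 2.2 and the function names are assumptions made for illustration; the text above does not prescribe a particular method.

```python
def reinhard(luminance):
    """Compress a non-negative scene luminance into the range [0, 1)."""
    return luminance / (1.0 + luminance)

def to_display(luminance, gamma=2.2):
    """Tone-map, gamma-encode and quantize a luminance to an 8-bit value."""
    compressed = reinhard(luminance)
    encoded = compressed ** (1.0 / gamma)
    return round(encoded * 255)

# Scene luminances spanning several orders of magnitude (arbitrary units).
scene = [0.0, 0.05, 1.0, 10.0, 1000.0]
display = [to_display(v) for v in scene]
print(display)
```

Every input, however bright, lands inside the 0 to 255 range of the display, and brighter inputs never map to darker outputs; very bright values are squeezed together, which is the detail that gets "discarded or compressed".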

Mathematics used in rendering includes: linear algebra, calculus, numerical mathematics, signal processing, and Monte Carlo methods.
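
As an illustration of how Monte Carlo methods enter rendering, the sketch below estimates the light reflected by a perfectly diffuse surface by averaging over random incoming directions on the hemisphere. The diffuse reflection model (reflectance divided by pi), the uniform sampling scheme, the constant incoming light and all function names are assumptions chosen to keep the example short; for this setup the exact answer equals the surface reflectance, which makes the estimate easy to check.

```python
import math
import random

def sample_hemisphere(rng):
    """Uniformly sample a unit direction on the hemisphere around +z."""
    z = rng.random()                      # cos(theta) is uniform on [0, 1)
    phi = 2.0 * math.pi * rng.random()
    r = math.sqrt(max(0.0, 1.0 - z * z))
    return (r * math.cos(phi), r * math.sin(phi), z)

def reflected_radiance(incoming, albedo, samples, rng):
    """Monte Carlo estimate of the light reflected by a diffuse surface.

    incoming: function mapping a direction to incoming radiance.
    albedo:   diffuse reflectance in [0, 1]; the reflection term is albedo / pi.
    """
    brdf = albedo / math.pi
    pdf = 1.0 / (2.0 * math.pi)           # density of uniform hemisphere samples
    total = 0.0
    for _ in range(samples):
        w = sample_hemisphere(rng)
        cos_theta = w[2]                  # angle to the normal, which is +z here
        total += incoming(w) * brdf * cos_theta / pdf
    return total / samples

rng = random.Random(0)                    # fixed seed for repeatable runs
estimate = reflected_radiance(lambda w: 1.0, albedo=0.8, samples=100_000, rng=rng)
print(estimate)                           # close to the exact value 0.8
```

The estimate is noisy, and the noise shrinks only with the square root of the sample count, which is one reason sampling strategy matters so much in practice.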

Rendering for movies often takes place on a network of tightly connected computers known as a render farm.

The current state of the art in 3-D image description for movie creation is the Mental Ray scene description language designed at Mental Images and the RenderMan Shading Language designed at Pixar (compare with simpler 3D file formats such as VRML, or APIs such as OpenGL and DirectX tailored for 3D hardware accelerators).

Other renderers (including proprietary ones) can be, and sometimes are, used, but most other renderers tend to miss one or more of the often-needed features such as good texture filtering, texture caching, programmable shaders, high-end geometry types like hair, subdivision or NURBS surfaces with tessellation on demand, geometry caching, ray tracing with geometry caching, high-quality shadow mapping, speed, or patent-free implementations. Other highly sought features these days may include interactive photorealistic rendering (IPR) and hardware rendering/shading.

Chronology of important published ideas

* 1968 '' Ray casting''

* 1970 '' Scanline rendering''

* 1971 '' Gouraud shading''

* 1973 '' Phong shading''

* 1973 '' Phong reflection''

* 1973 '' Diffuse reflection''

* 1973 '' Specular highlight''

* 1973 '' Specular reflection''

* 1974 '' Sprites''

* 1974 '' Scrolling''

* 1974 '' Texture mapping''

* 1974 '' Z-buffering''

* 1976 '' Environment mapping''

* 1977 '' Blinn shading''

* 1977 '' Side-scrolling''

* 1977 '' Shadow volumes''

* 1978 '' Shadow mapping''

* 1978 '' Bump mapping''

* 1979 ''Tile map''

* 1980 '' BSP trees''

* 1980 '' Ray tracing''

* 1981 '' Parallax scrolling''

* 1981 '' Sprite zooming''

* 1981 ''Cook shader''

* 1983 '' MIP maps''

* 1984 '' Octree ray tracing''

* 1984 '' Alpha compositing''

* 1984 '' Distributed ray tracing''

* 1984 '' Radiosity''

* 1985 '' Row/column scrolling''

* 1985 ''Hemicube radiosity''

* 1986 ''Light source tracing''

* 1986 '' Rendering equation''

* 1987 '' Reyes rendering''

* 1988 ''Depth cue''

* 1988 '' Distance fog''

* 1988 '' Tiled rendering''

* 1991 '' Xiaolin Wu line anti-aliasing''

* 1991 ''Hierarchical radiosity''

* 1993 '' Texture filtering''

* 1993 '' Perspective correction''

* 1993 '' Transform, clipping, and lighting''

* 1993 '' Directional lighting''

* 1993 '' Trilinear interpolation''

* 1993 ''Z-culling''

* 1993 '' Oren–Nayar reflectance'' (M. Oren and S.K. Nayar, "Generalization of Lambert's Reflectance Model". SIGGRAPH, pp. 239-246, Jul 1994)

* 1993 '' Tone mapping''

* 1993 '' Subsurface scattering''

* 1994 '' Ambient occlusion''

* 1995 ''Hidden-surface determination''

* 1995 '' Photon mapping''

* 1996 '' Multisample anti-aliasing''

* 1997 '' Metropolis light transport''

* 1997 ''Instant Radiosity''

* 1998 '' Hidden-surface removal''

* 2000 '' Pose space deformation''

* 2002 ''Precomputed Radiance Transfer''

See also

* Per-pixel lighting

External links

* GPU Rendering Magazine, online CGI magazine about advantages of GPU rendering

* SIGGRAPH, the ACM's special interest group in graphics; the largest academic and professional association and conference

* List of links to (recent, as of 2004) SIGGRAPH papers (and some others) on the web
