In mathematics, Hilbert spaces (named after David Hilbert) allow generalizing the methods of linear algebra and calculus from (finite-dimensional) Euclidean vector spaces to spaces that may be infinite-dimensional. Hilbert spaces arise naturally and frequently in mathematics and physics, typically as function spaces. Formally, a Hilbert space is a vector space equipped with an inner product that defines a distance function for which the space is a complete metric space.

The earliest Hilbert spaces were studied from this point of view in the first decade of the 20th century by David Hilbert, Erhard Schmidt, and Frigyes Riesz. They are indispensable tools in the theories of partial differential equations, quantum mechanics, Fourier analysis (which includes applications to signal processing and heat transfer), and ergodic theory (which forms the mathematical underpinning of thermodynamics). John von Neumann coined the term ''Hilbert space'' for the abstract concept that underlies many of these diverse applications. The success of Hilbert space methods ushered in a very fruitful era for functional analysis. Apart from the classical Euclidean vector spaces, examples of Hilbert spaces include spaces of square-integrable functions, spaces of sequences, Sobolev spaces consisting of generalized functions, and Hardy spaces of holomorphic functions.

Geometric intuition plays an important role in many aspects of Hilbert space theory. Exact analogs of the Pythagorean theorem and parallelogram law hold in a Hilbert space. At a deeper level, perpendicular projection onto a linear subspace (the analog of "dropping the altitude" of a triangle) plays a significant role in optimization problems and other aspects of the theory. An element of a Hilbert space can be uniquely specified by its coordinates with respect to an orthonormal basis, in analogy with Cartesian coordinates in classical geometry. When this basis is countably infinite, it allows identifying the Hilbert space with the space of the infinite sequences that are square-summable. In the older literature, the latter space is often referred to as ''the'' Hilbert space.

Definition and illustration

Motivating example: Euclidean vector space

One of the most familiar examples of a Hilbert space is the Euclidean vector space consisting of three-dimensional vectors, denoted by $\mathbb{R}^3$, and equipped with the dot product. The dot product takes two vectors $\mathbf{x}$ and $\mathbf{y}$, and produces a real number $\mathbf{x} \cdot \mathbf{y}$. If $\mathbf{x}$ and $\mathbf{y}$ are represented in Cartesian coordinates, then the dot product is defined by

$$(x_1, x_2, x_3) \cdot (y_1, y_2, y_3) = x_1 y_1 + x_2 y_2 + x_3 y_3.$$

The dot product satisfies the properties:

# It is symmetric in $\mathbf{x}$ and $\mathbf{y}$: $\mathbf{x} \cdot \mathbf{y} = \mathbf{y} \cdot \mathbf{x}$.
# It is linear in its first argument: $(a\mathbf{x}_1 + b\mathbf{x}_2) \cdot \mathbf{y} = a(\mathbf{x}_1 \cdot \mathbf{y}) + b(\mathbf{x}_2 \cdot \mathbf{y})$ for any scalars $a$, $b$, and vectors $\mathbf{x}_1$, $\mathbf{x}_2$, and $\mathbf{y}$.
# It is positive definite: for all vectors $\mathbf{x}$, $\mathbf{x} \cdot \mathbf{x} \ge 0$, with equality if and only if $\mathbf{x} = \mathbf{0}$.

An operation on pairs of vectors that, like the dot product, satisfies these three properties is known as a (real) inner product. A vector space equipped with such an inner product is known as a (real) inner product space. Every finite-dimensional inner product space is also a Hilbert space. The basic feature of the dot product that connects it with Euclidean geometry is that it is related to both the length (or norm) of a vector, denoted $\|\mathbf{x}\|$, and to the angle $\theta$ between two vectors $\mathbf{x}$ and $\mathbf{y}$ by means of the formula

$$\mathbf{x} \cdot \mathbf{y} = \|\mathbf{x}\| \, \|\mathbf{y}\| \cos\theta.$$
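
The relation between the dot product, the norm and the angle can be checked numerically. The following is a minimal Python sketch; the helper names `dot`, `norm` and `angle` are hypothetical and chosen only for this illustration.

```python
import math

def dot(x, y):
    """Euclidean dot product of two real vectors of equal length."""
    return sum(a * b for a, b in zip(x, y))

def norm(x):
    """Length of x, obtained from the dot product of x with itself."""
    return math.sqrt(dot(x, x))

def angle(x, y):
    """Angle between nonzero vectors, solved from x . y = |x| |y| cos(theta)."""
    c = dot(x, y) / (norm(x) * norm(y))
    # Clamp to [-1, 1] to guard against rounding just outside the domain of acos.
    return math.acos(max(-1.0, min(1.0, c)))

x = (1.0, 0.0, 0.0)
y = (1.0, 1.0, 0.0)
theta = angle(x, y)  # these two vectors meet at 45 degrees, i.e. pi / 4
```

Symmetry of the dot product shows up here as `dot(x, y) == dot(y, x)`, and positive definiteness as `dot(x, x) > 0` for the nonzero vector `x`.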

Multivariable calculus in Euclidean space relies on the ability to compute limits, and to have useful criteria for concluding that limits exist. A mathematical series

$$\sum_{n=0}^\infty \mathbf{x}_n$$

consisting of vectors in $\mathbb{R}^3$ is absolutely convergent provided that the sum of the lengths converges as an ordinary series of real numbers:

$$\sum_{k=0}^\infty \|\mathbf{x}_k\| < \infty.$$

Just as with a series of scalars, a series of vectors that converges absolutely also converges to some limit vector $\mathbf{L}$ in the Euclidean space, in the sense that

$$\left\| \mathbf{L} - \sum_{k=0}^N \mathbf{x}_k \right\| \to 0 \quad \text{as } N \to \infty.$$

This property expresses the ''completeness'' of Euclidean space: that a series that converges absolutely also converges in the ordinary sense.

Hilbert spaces are often taken over the complex numbers. The complex plane, denoted by $\mathbb{C}$, is equipped with a notion of magnitude, the complex modulus $|z|$, which is defined as the square root of the product of $z$ with its complex conjugate:

$$|z|^2 = z\overline{z}.$$

If $z = x + iy$ is a decomposition of $z$ into its real and imaginary parts, then the modulus is the usual Euclidean two-dimensional length:

$$|z| = \sqrt{x^2 + y^2}.$$

The inner product of a pair of complex numbers $z$ and $w$ is the product of $z$ with the complex conjugate of $w$:

$$\langle z, w \rangle = z\overline{w}.$$

This is complex-valued. The real part of $\langle z, w \rangle$ gives the usual two-dimensional Euclidean dot product.

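A second example is the space $\mathbb{C}^2$ whose elements are pairs of complex numbers $z = (z_1, z_2)$. Then the inner product of $z$ with another such vector $w = (w_1, w_2)$ is given by

$$\langle z, w \rangle = z_1\overline{w_1} + z_2\overline{w_2}.$$

The real part of $\langle z, w \rangle$ is then the four-dimensional Euclidean dot product of the real and imaginary parts of the coordinates. This inner product is ''Hermitian'' symmetric, which means that the result of interchanging $z$ and $w$ is the complex conjugate:

$$\langle w, z \rangle = \overline{\langle z, w \rangle}.$$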
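
Hermitian symmetry is easy to observe with Python's built-in complex numbers. The sketch below assumes the convention used in this section (linear in the first argument, conjugate-linear in the second); the function name `inner` and the sample vectors are hypothetical.

```python
def inner(z, w):
    """Inner product on C^n: linear in z, conjugate-linear in w."""
    return sum(a * b.conjugate() for a, b in zip(z, w))

z = (1 + 2j, 3 - 1j)
w = (2 - 1j, 1j)

zw = inner(z, w)   # -1 + 2j
wz = inner(w, z)   # -1 - 2j, the complex conjugate of zw
zz = inner(z, z)   # 15 + 0j, a real number as conjugate symmetry requires
```

The real part of `zw` is -1, which matches the dot product of the real 4-vectors (1, 2, 3, -1) and (2, -1, 0, 1) formed from the real and imaginary parts of the coordinates.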

Definition

A ''Hilbert space'' is a real or complex inner product space that is also a complete metric space with respect to the distance function induced by the inner product.

[The mathematical material in this section can be found in any good textbook on functional analysis.]

To say that $H$ is a ''complex Hilbert space'' means that $H$ is a complex vector space on which there is an inner product $\langle x, y \rangle$ associating a complex number to each pair of elements $x, y$ of $H$ that satisfies the following properties:

# The inner product is conjugate symmetric; that is, the inner product of a pair of elements is equal to the complex conjugate of the inner product of the swapped elements: $\langle y, x \rangle = \overline{\langle x, y \rangle}$. Importantly, this implies that $\langle x, x \rangle$ is a real number.
# The inner product is linear in its first argument. [In some conventions, inner products are linear in their second arguments instead.] For all complex numbers $a$ and $b$, $\langle a x_1 + b x_2, y \rangle = a \langle x_1, y \rangle + b \langle x_2, y \rangle$.
# The inner product of an element with itself is positive definite: $\langle x, x \rangle > 0$ if $x \neq 0$, and $\langle x, x \rangle = 0$ if $x = 0$.

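It follows from properties 1 and 2 that a complex inner product is ''antilinear'', also called ''conjugate linear'', in its second argument, meaning that

$$\langle x, a y_1 + b y_2 \rangle = \bar{a} \langle x, y_1 \rangle + \bar{b} \langle x, y_2 \rangle.$$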

A ''real Hilbert space'' is defined in the same way, except that $H$ is a real vector space and the inner product takes real values. Such an inner product will be a bilinear map and $(H, H, \langle \cdot, \cdot \rangle)$ will form a dual system.

The norm is the real-valued function

$$\|x\| = \sqrt{\langle x, x \rangle},$$

and the distance $d$ between two points $x, y$ in $H$ is defined in terms of the norm by

$$d(x, y) = \|x - y\| = \sqrt{\langle x - y, x - y \rangle}.$$

That this function is a distance function means firstly that it is symmetric in $x$ and $y$, secondly that the distance between $x$ and itself is zero, and otherwise the distance between $x$ and $y$ must be positive, and lastly that the triangle inequality holds, meaning that the length of one leg of a triangle $xyz$ cannot exceed the sum of the lengths of the other two legs:

$$d(x, z) \le d(x, y) + d(y, z).$$

This last property is ultimately a consequence of the more fundamental Cauchy–Schwarz inequality, which asserts

$$|\langle x, y \rangle| \le \|x\| \, \|y\|$$

with equality if and only if $x$ and $y$ are linearly dependent.
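
Both inequalities can be spot-checked on concrete vectors. The following Python sketch uses hypothetical helpers `inner`, `norm` and `dist` on a small complex coordinate space; it illustrates the statements and is not a proof.

```python
import math

def inner(x, y):
    """Inner product on C^n, conjugate-linear in the second argument."""
    return sum(a * b.conjugate() for a, b in zip(x, y))

def norm(x):
    """Norm induced by the inner product."""
    return math.sqrt(inner(x, x).real)

def dist(x, y):
    """Distance induced by the norm."""
    return norm([a - b for a, b in zip(x, y)])

x = [1 + 1j, 2 + 0j]
y = [0 + 1j, 1 - 1j]
z = [3 + 0j, 0 - 2j]

cauchy_schwarz_holds = abs(inner(x, y)) <= norm(x) * norm(y)
triangle_holds = dist(x, z) <= dist(x, y) + dist(y, z)

# Equality case of Cauchy-Schwarz: dep is a scalar multiple of x.
dep = [2j * a for a in x]
equality_case = math.isclose(abs(inner(x, dep)), norm(x) * norm(dep))
```

For these sample vectors all three flags evaluate to `True`; the last one uses a tolerance because the norms are computed in floating point.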

With a distance function defined in this way, any inner product space is a metric space, and sometimes is known as a ''pre-Hilbert space''. Any pre-Hilbert space that is additionally also a complete space is a Hilbert space.

The ''completeness'' of $H$ is expressed using a form of the Cauchy criterion for sequences in $H$: a pre-Hilbert space $H$ is complete if every Cauchy sequence converges with respect to this norm to an element in the space. Completeness can be characterized by the following equivalent condition: if a series of vectors

$$\sum_{k=0}^\infty u_k$$

converges absolutely in the sense that

$$\sum_{k=0}^\infty \|u_k\| < \infty,$$

then the series converges in $H$, in the sense that the partial sums converge to an element of $H$.

As complete normed spaces, Hilbert spaces are by definition also Banach spaces. As such they are topological vector spaces, in which topological notions like the openness and closedness of subsets are well defined. Of special importance is the notion of a closed linear subspace of a Hilbert space that, with the inner product induced by restriction, is also complete (being a closed set in a complete metric space) and therefore a Hilbert space in its own right.

Second example: sequence spaces

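The sequence space $\ell^2$ consists of all infinite sequences $z = (z_1, z_2, \ldots)$ of complex numbers such that the following series converges:

$$\sum_{n=1}^\infty |z_n|^2.$$

The inner product on $\ell^2$ is defined by:

$$\langle z, w \rangle = \sum_{n=1}^\infty z_n \overline{w_n}.$$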

This second series converges as a consequence of the Cauchy–Schwarz inequality and the convergence of the previous series.

Completeness of the space holds provided that whenever a series of elements from $\ell^2$ converges absolutely (in norm), then it converges to an element of $\ell^2$. The proof is basic in mathematical analysis, and permits mathematical series of elements of the space to be manipulated with the same ease as series of complex numbers (or vectors in a finite-dimensional Euclidean space).
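
As a concrete member of the sequence space, consider the sequence with terms 1/n: the sum of its terms diverges, yet the sum of the squared moduli converges (to π²/6), so it is square-summable. The Python sketch below, with the hypothetical helper `squared_norm_partial`, compares a partial sum against that limit.

```python
import math

def squared_norm_partial(term, n_terms):
    """Partial sum of |z_n|^2 for n = 1 .. n_terms, where term(n) returns z_n."""
    return sum(abs(term(n)) ** 2 for n in range(1, n_terms + 1))

def harmonic(n):
    """The n-th term of the sequence z_n = 1/n."""
    return 1.0 / n

partial = squared_norm_partial(harmonic, 100_000)
limit = math.pi ** 2 / 6   # known value of the full series

gap = limit - partial      # positive and roughly 1 / n_terms
```

The partial sums increase towards the limit, and the remaining gap after N terms is about 1/N, which is why a finite truncation already gives a good estimate of the squared norm.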

History

Prior to the development of Hilbert spaces, other generalizations of Euclidean spaces were known to mathematicians and physicists. In particular, the idea of an abstract linear space (vector space) had gained some traction towards the end of the 19th century: this is a space whose elements can be added together and multiplied by scalars (such as real or complex numbers) without necessarily identifying these elements with "geometric" vectors, such as position and momentum vectors in physical systems. Other objects studied by mathematicians at the turn of the 20th century, in particular spaces of sequences (including series) and spaces of functions, can naturally be thought of as linear spaces. Functions, for instance, can be added together or multiplied by constant scalars, and these operations obey the algebraic laws satisfied by addition and scalar multiplication of spatial vectors.

In the first decade of the 20th century, parallel developments led to the introduction of Hilbert spaces. The first of these was the observation, which arose during David Hilbert and Erhard Schmidt's study of integral equations, that two square-integrable real-valued functions $f$ and $g$ on an interval $[a, b]$ have an ''inner product''

$$\langle f, g \rangle = \int_a^b f(x) g(x) \, dx$$

which has many of the familiar properties of the Euclidean dot product. In particular, the idea of an orthogonal family of functions has meaning. Schmidt exploited the similarity of this inner product with the usual dot product to prove an analog of the spectral decomposition for an operator of the form

$$f(x) \mapsto \int_a^b K(x, y) f(y) \, dy$$

where $K$ is a continuous function symmetric in $x$ and $y$. The resulting eigenfunction expansion expresses the function $K$ as a series of the form

$$K(x, y) = \sum_n \lambda_n \varphi_n(x) \varphi_n(y)$$

where the functions $\varphi_n$ are orthogonal in the sense that $\langle \varphi_n, \varphi_m \rangle = 0$ for all $n \neq m$. The individual terms in this series are sometimes referred to as elementary product solutions. However, there are eigenfunction expansions that fail to converge in a suitable sense to a square-integrable function: the missing ingredient, which ensures convergence, is completeness.

The second development was the Lebesgue integral, an alternative to the Riemann integral introduced by Henri Lebesgue in 1904. The Lebesgue integral made it possible to integrate a much broader class of functions. In 1907, Frigyes Riesz and Ernst Sigismund Fischer independently proved that the space of square Lebesgue-integrable functions is a complete metric space. As a consequence of the interplay between geometry and completeness, the 19th-century results of Joseph Fourier, Friedrich Bessel and Marc-Antoine Parseval on trigonometric series easily carried over to these more general spaces, resulting in a geometrical and analytical apparatus now usually known as the Riesz–Fischer theorem.

Further basic results were proved in the early 20th century. For example, the Riesz representation theorem was independently established by Maurice Fréchet and Frigyes Riesz in 1907.

John von Neumann coined the term ''abstract Hilbert space'' in his work on unbounded Hermitian operators. Although other mathematicians such as Hermann Weyl and Norbert Wiener had already studied particular Hilbert spaces in great detail, often from a physically motivated point of view, von Neumann gave the first complete and axiomatic treatment of them. Von Neumann later used them in his seminal work on the foundations of quantum mechanics, and in his continued work with Eugene Wigner. The name "Hilbert space" was soon adopted by others, for example by Hermann Weyl in his book on quantum mechanics and the theory of groups.

The significance of the concept of a Hilbert space was underlined with the realization that it offers one of the best mathematical formulations of quantum mechanics. In short, the states of a quantum mechanical system are vectors in a certain Hilbert space, the observables are Hermitian operators on that space, the symmetries of the system are unitary operators, and measurements are orthogonal projections. The relation between quantum mechanical symmetries and unitary operators provided an impetus for the development of the unitary representation theory of groups, initiated in the 1928 work of Hermann Weyl.

On the other hand, in the early 1930s it became clear that classical mechanics can be described in terms of Hilbert space (Koopman–von Neumann classical mechanics) and that certain properties of classical dynamical systems can be analyzed using Hilbert space techniques in the framework of ergodic theory.

The algebra of observables in quantum mechanics is naturally an algebra of operators defined on a Hilbert space, according to Werner Heisenberg's matrix mechanics formulation of quantum theory. Von Neumann began investigating operator algebras in the 1930s, as rings of operators on a Hilbert space. The algebras studied by von Neumann and his contemporaries are now known as von Neumann algebras. In the 1940s, Israel Gelfand, Mark Naimark and Irving Segal gave a definition of a kind of operator algebra, called a C*-algebra, that on the one hand made no reference to an underlying Hilbert space, and on the other extrapolated many of the useful features of the operator algebras that had previously been studied. In particular, the spectral theorem for self-adjoint operators, which underlies much of the existing Hilbert space theory, was generalized to C*-algebras. These techniques are now basic in abstract harmonic analysis and representation theory.

Examples

Lebesgue spaces

Lebesgue spaces are function spaces associated to measure spaces $(X, M, \mu)$, where $X$ is a set, $M$ is a σ-algebra of subsets of $X$, and $\mu$ is a countably additive measure on $M$. Let $L^2(X, \mu)$ be the space of those complex-valued measurable functions on $X$ for which the Lebesgue integral of the square of the absolute value of the function is finite, i.e., for a function $f$ in $L^2(X, \mu)$,

$$\int_X |f|^2 \, d\mu < \infty,$$

and where functions are identified if and only if they differ only on a set of measure zero.

The inner product of functions $f$ and $g$ in $L^2(X, \mu)$ is then defined as

$$\langle f, g \rangle = \int_X f(t) \overline{g(t)} \, d\mu(t)$$

or

$$\langle f, g \rangle = \int_X \overline{f(t)} g(t) \, d\mu(t),$$

where the second form (conjugation of the first element) is commonly found in the theoretical physics literature. For $f$ and $g$ in $L^2$, the integral exists because of the Cauchy–Schwarz inequality, and defines an inner product on the space. Equipped with this inner product, $L^2$ is in fact complete. The Lebesgue integral is essential to ensure completeness: on domains of real numbers, for instance, not enough functions are Riemann integrable.

The Lebesgue spaces appear in many natural settings. The spaces $L^2(\mathbb{R})$ and $L^2([0,1])$ of square-integrable functions with respect to the Lebesgue measure on the real line and unit interval, respectively, are natural domains on which to define the Fourier transform and Fourier series. In other situations, the measure may be something other than the ordinary Lebesgue measure on the real line. For instance, if $w$ is any positive measurable function, the space of all measurable functions $f$ on the interval $[0, 1]$ satisfying

$$\int_0^1 |f(t)|^2 w(t) \, dt < \infty$$

is called the weighted $L^2$ space $L^2_w([0,1])$, and $w$ is called the weight function. The inner product is defined by

$$\langle f, g \rangle = \int_0^1 f(t) \overline{g(t)} w(t) \, dt.$$

The weighted space $L^2_w([0,1])$ is identical with the Hilbert space $L^2([0,1], \mu)$ where the measure $\mu$ of a Lebesgue-measurable set $A$ is defined by

$$\mu(A) = \int_A w(t) \, dt.$$

Weighted $L^2$ spaces like this are frequently used to study orthogonal polynomials, because different families of orthogonal polynomials are orthogonal with respect to different weighting functions.
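
The dependence of orthogonality on the weight can be seen in a small numerical experiment. The Python sketch below approximates the weighted inner product on the unit interval with a midpoint rule; it is restricted to real-valued functions (so conjugation is omitted), and the names `weighted_inner`, `one` and `shifted` are hypothetical.

```python
def weighted_inner(f, g, w, n=1000):
    """Midpoint-rule estimate of the integral of f(t) * g(t) * w(t) over [0, 1]."""
    h = 1.0 / n
    midpoints = ((k + 0.5) * h for k in range(n))
    return h * sum(f(t) * g(t) * w(t) for t in midpoints)

def one(t):
    """The constant function 1."""
    return 1.0

def shifted(t):
    """The polynomial t - 2/3."""
    return t - 2.0 / 3.0

# With the constant weight 1 the two functions are not orthogonal (exact value -1/6).
flat = weighted_inner(one, shifted, lambda t: 1.0)

# With the weight w(t) = t they are orthogonal (exact value 0).
ramp = weighted_inner(one, shifted, lambda t: t)
```

The same pair of polynomials is therefore orthogonal in one weighted space and not in the other, which is the sense in which each family of orthogonal polynomials belongs to its own weight function.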

Sobolev spaces

Sobolev spaces, denoted by $H^s$ or $W^{s,2}$, are Hilbert spaces. These are a special kind of function space in which differentiation may be performed, but that (unlike other Banach spaces such as the Hölder spaces) support the structure of an inner product. Because differentiation is permitted, Sobolev spaces are a convenient setting for the theory of partial differential equations. They also form the basis of the theory of direct methods in the calculus of variations.

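For $s$ a non-negative integer and $\Omega \subset \mathbb{R}^n$, the Sobolev space $H^s(\Omega)$ contains $L^2$ functions whose weak derivatives of order up to $s$ are also $L^2$. The inner product in $H^s(\Omega)$ is

$$\langle f, g \rangle = \int_\Omega f(x) \overline{g}(x) \, dx + \int_\Omega D f(x) \cdot D \overline{g}(x) \, dx + \cdots + \int_\Omega D^s f(x) \cdot D^s \overline{g}(x) \, dx$$

where the dot indicates the dot product in the Euclidean space of partial derivatives of each order. Sobolev spaces can also be defined when $s$ is not an integer.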

Sobolev spaces are also studied from the point of view of spectral theory, relying more specifically on the Hilbert space structure. If $\Omega$ is a suitable domain, then one can define the Sobolev space $H^s(\Omega)$ as the space of Bessel potentials; roughly,

$$H^s(\Omega) = \left\{ (1 - \Delta)^{-s/2} f \mid f \in L^2(\Omega) \right\}.$$

Here $\Delta$ is the Laplacian and $(1 - \Delta)^{-s/2}$ is understood in terms of the spectral mapping theorem. Apart from providing a workable definition of Sobolev spaces for non-integer $s$, this definition also has particularly desirable properties under the Fourier transform that make it ideal for the study of pseudodifferential operators. Using these methods on a compact Riemannian manifold, one can obtain for instance the Hodge decomposition, which is the basis of Hodge theory.

Spaces of holomorphic functions

Hardy spaces

The Hardy spaces are function spaces, arising in complex analysis and harmonic analysis, whose elements are certain holomorphic functions in a complex domain. Let $U$ denote the unit disc in the complex plane. Then the Hardy space $H^2(U)$ is defined as the space of holomorphic functions $f$ on $U$ such that the means

$$M_r(f) = \frac{1}{2\pi} \int_0^{2\pi} \left| f(re^{i\theta}) \right|^2 \, d\theta$$

remain bounded for $r < 1$. The norm on this Hardy space is defined by

$$\|f\|_2 = \lim_{r \to 1} \sqrt{M_r(f)}.$$

Hardy spaces in the disc are related to Fourier series. A function $f$ is in $H^2(U)$ if and only if

$$f(z) = \sum_{n=0}^\infty a_n z^n$$

where

$$\sum_{n=0}^\infty |a_n|^2 < \infty.$$

Thus $H^2(U)$ consists of those functions that are $L^2$ on the circle, and whose negative frequency Fourier coefficients vanish.
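
The link between the circle means and the Taylor coefficients can be illustrated numerically. The Python sketch below takes the sample function f(z) = 1/(1 − z/2), whose Taylor coefficients are 2^(−n), and approximates the mean of |f|² over circles of radius r by an equally spaced average; the names `mean_square`, `f`, `coeff_sum` and `means` are hypothetical.

```python
import cmath
import math

def mean_square(f, r, n=1024):
    """Equally spaced average of |f(r e^{i theta})|^2 over the circle of radius r."""
    points = (r * cmath.exp(2j * math.pi * k / n) for k in range(n))
    return sum(abs(f(p)) ** 2 for p in points) / n

def f(z):
    """Sample holomorphic function on the unit disc with coefficients a_n = 2**(-n)."""
    return 1 / (1 - z / 2)

# Sum of |a_n|^2 = 4**(-n), truncated; the full series equals 4/3.
coeff_sum = sum(4.0 ** (-n) for n in range(200))

# The means increase with r and stay below the coefficient sum.
means = [mean_square(f, r) for r in (0.5, 0.9, 0.99, 0.999)]
```

For this function the mean over the circle of radius r has the closed form 1/(1 − r²/4), so the values in `means` climb towards 4/3 as r approaches 1, in line with the coefficient characterization.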

Bergman spaces

The Bergman spaces are another family of Hilbert spaces of holomorphic functions. Let $D$ be a bounded open set in the complex plane (or a higher-dimensional complex space) and let $L^{2,h}(D)$ be the space of holomorphic functions $f$ in $D$ that are also in $L^2(D)$ in the sense that

$$\|f\|^2 = \int_D |f(z)|^2 \, d\mu(z) < \infty,$$

where the integral is taken with respect to the Lebesgue measure in $D$. Clearly $L^{2,h}(D)$ is a subspace of $L^2(D)$; in fact, it is a closed subspace, and so a Hilbert space in its own right. This is a consequence of the estimate, valid on compact subsets $K$ of $D$, that

$$\sup_{z \in K} |f(z)| \le C_K \|f\|_2,$$

which in turn follows from Cauchy's integral formula. Thus convergence of a sequence of holomorphic functions in $L^2(D)$ implies also compact convergence, and so the limit function is also holomorphic. Another consequence of this inequality is that the linear functional that evaluates a function at a point of $D$ is actually continuous on $L^{2,h}(D)$. The Riesz representation theorem implies that the evaluation functional can be represented as an element of $L^{2,h}(D)$. Thus, for every $z \in D$, there is a function $\eta_z \in L^{2,h}(D)$ such that

$$f(z) = \int_D f(\zeta) \overline{\eta_z(\zeta)} \, d\mu(\zeta)$$

for all $f \in L^{2,h}(D)$. The integrand

$$K(\zeta, z) = \overline{\eta_z(\zeta)}$$

is known as the Bergman kernel of $D$. This integral kernel satisfies a reproducing property

$$f(z) = \int_D f(\zeta) K(\zeta, z) \, d\mu(\zeta).$$

A Bergman space is an example of a

reproducing kernel Hilbert space, which is a Hilbert space of functions along with a kernel that verifies a reproducing property analogous to this one. The Hardy space also admits a reproducing kernel, known as the

Szegő kernel

. Reproducing kernels are common in other areas of mathematics as well. For instance, in

harmonic analysis


the

Poisson kernel is a reproducing kernel for the Hilbert space of square-integrable

harmonic functions in the

unit ball. That the latter is a Hilbert space at all is a consequence of the mean value theorem for harmonic functions.
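The reproducing property of a Bergman kernel can be checked numerically in a simple case. The sketch below assumes the unit disc as the domain, for which the Bergman kernel is known in closed form as $1/(\pi(1 - z\bar\zeta)^2)$, and approximates the area integral with a plain polar-coordinate quadrature. The function names, the test function $\zeta^2$, and the grid sizes are illustrative choices, not part of the text above.

```python
import cmath
import math

def bergman_kernel_disc(z, zeta):
    """Bergman kernel of the unit disc: 1 / (pi * (1 - z * conj(zeta))**2)."""
    return 1.0 / (math.pi * (1.0 - z * zeta.conjugate()) ** 2)

def reproduce(f, z, n_r=400, n_theta=64):
    """Approximate the area integral of f(zeta) * K(z, zeta) over the unit disc."""
    total = 0j
    dr = 1.0 / n_r
    dtheta = 2.0 * math.pi / n_theta
    for i in range(n_r):
        r = (i + 0.5) * dr  # midpoint rule in the radial variable
        for j in range(n_theta):
            zeta = cmath.rect(r, j * dtheta)
            # r * dr * dtheta is the area element in polar coordinates
            total += f(zeta) * bergman_kernel_disc(z, zeta) * r * dr * dtheta
    return total

z0 = 0.3 + 0.2j
approx = reproduce(lambda w: w * w, z0)  # f(zeta) = zeta**2 is holomorphic
error = abs(approx - z0 * z0)            # should be small: the integral returns f(z0)
print(error)
```

The quadrature is deliberately naive; its only purpose is to show that integrating a holomorphic function against the kernel returns the value of the function at the chosen point.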

Applications

Many of the applications of Hilbert spaces exploit the fact that Hilbert spaces support generalizations of simple geometric concepts like

projection


and

change of basis from their usual finite dimensional setting. In particular, the

spectral theory of

continuous self-adjoint linear operator


s on a Hilbert space generalizes the usual

spectral decomposition of a

matrix


, and this often plays a major role in applications of the theory to other areas of mathematics and physics.

Sturm–Liouville theory

In the theory of

ordinary differential equations, spectral methods on a suitable Hilbert space are used to study the behavior of eigenvalues and eigenfunctions of differential equations. For example, the

Sturm–Liouville problem arises in the study of the harmonics of waves in a violin string or a drum, and is a central problem in

ordinary differential equations


. The problem is a differential equation of the form

$$-\frac{\mathrm{d}}{\mathrm{d}x}\left[p(x)\frac{\mathrm{d}y}{\mathrm{d}x}\right] + q(x)\,y = \lambda\, w(x)\,y$$

for an unknown function $y$ on an interval $[a, b]$, satisfying general homogeneous Robin boundary conditions

$$\begin{cases} \alpha\, y(a) + \alpha'\, y'(a) = 0, \\ \beta\, y(b) + \beta'\, y'(b) = 0. \end{cases}$$

The functions $p$, $q$, and $w$ are given in advance, and the problem is to find the function $y$ and constants $\lambda$ for which the equation has a solution. The problem only has solutions for certain values of $\lambda$, called eigenvalues of the system, and this is a consequence of the spectral theorem for

compact operators applied to the

integral operator defined by the

Green's function for the system. Furthermore, another consequence of this general result is that the eigenvalues $\lambda$ of the system can be arranged in an increasing sequence tending to infinity.

[The eigenvalues of the Fredholm kernel are $1/\lambda$, which tend to zero.]
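As a concrete illustration, consider the simplest Sturm–Liouville problem, $-y'' = \lambda y$ on $[0, \pi]$ with $y(0) = y(\pi) = 0$ (that is, $p = w = 1$, $q = 0$ and Dirichlet conditions, all chosen here for the example). Its eigenvalues are $1, 4, 9, \dots$, an increasing sequence tending to infinity. The sketch below recovers the first three with a shooting method: integrate the equation from $x = 0$ with a classical Runge–Kutta scheme and bisect on $\lambda$ until $y(\pi) = 0$. The function names and bracketing intervals are assumptions made for the illustration.

```python
import math

def shoot(lam, steps=2000):
    """Integrate -y'' = lam * y on [0, pi] with y(0) = 0, y'(0) = 1 (RK4); return y(pi)."""
    h = math.pi / steps
    y, v = 0.0, 1.0
    for _ in range(steps):
        # first-order system: y' = v, v' = -lam * y
        k1y, k1v = v, -lam * y
        k2y, k2v = v + 0.5 * h * k1v, -lam * (y + 0.5 * h * k1y)
        k3y, k3v = v + 0.5 * h * k2v, -lam * (y + 0.5 * h * k2y)
        k4y, k4v = v + h * k3v, -lam * (y + h * k3y)
        y += h * (k1y + 2 * k2y + 2 * k3y + k4y) / 6.0
        v += h * (k1v + 2 * k2v + 2 * k3v + k4v) / 6.0
    return y

def eigenvalue_in(lo, hi, tol=1e-10):
    """Bisection on lam, assuming y(pi) changes sign exactly once in [lo, hi]."""
    f_lo = shoot(lo)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = shoot(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Bracket each eigenvalue n**2 by the interval [n**2 - 0.5, n**2 + 0.5].
eigs = [eigenvalue_in(n * n - 0.5, n * n + 0.5) for n in (1, 2, 3)]
print(eigs)
```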

Partial differential equations

Hilbert spaces form a basic tool in the study of

partial differential equations.

For many classes of partial differential equations, such as linear

elliptic equations, it is possible to consider a generalized solution (known as a

weak


solution) by enlarging the class of functions. Many weak formulations involve the class of

Sobolev functions, which is a Hilbert space. A suitable weak formulation reduces to a geometrical problem the analytic problem of finding a solution or, often what is more important, showing that a solution exists and is unique for given boundary data. For linear elliptic equations, one geometrical result that ensures unique solvability for a large class of problems is the

Lax–Milgram theorem. This strategy forms the rudiment of the

Galerkin method (a

finite element method) for numerical solution of partial differential equations.

A typical example is the Poisson equation $-\Delta u = g$ with Dirichlet boundary conditions in a bounded domain $\Omega$ in $\mathbb{R}^2$. The weak formulation consists of finding a function $u$ such that, for all continuously differentiable functions $v$ in $\Omega$ vanishing on the boundary:

$$\int_\Omega \nabla u \cdot \nabla v = \int_\Omega g\,v.$$

This can be recast in terms of the Hilbert space $H_0^1(\Omega)$ consisting of functions $u$ such that $u$, along with its weak partial derivatives, are square integrable on $\Omega$, and vanish on the boundary. The question then reduces to finding $u$ in this space such that for all $v$ in this space

$$a(u, v) = b(v),$$

where $a$ is a continuous bilinear form, and $b$ is a continuous linear functional, given respectively by

$$a(u, v) = \int_\Omega \nabla u \cdot \nabla v, \qquad b(v) = \int_\Omega g\,v.$$

Since the Poisson equation is elliptic, it follows from Poincaré's inequality that the bilinear form $a$ is coercive. The Lax–Milgram theorem then ensures the existence and uniqueness of solutions of this equation.
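The Galerkin idea can be sketched in one dimension. The example below assumes the model problem $-u'' = g$ on $(0, 1)$ with $u(0) = u(1) = 0$ and uses the trial functions $\varphi_k(x) = \sin(k\pi x)$, for which the matrix of the bilinear form $a(\varphi_j, \varphi_k) = \int_0^1 \varphi_j' \varphi_k'$ is diagonal. The basis, the right-hand side $g = 1$, and the helper names are illustrative assumptions; a finite element code would use piecewise polynomial trial functions instead.

```python
import math

def integrate(f, n=2000):
    """Midpoint rule on [0, 1]."""
    h = 1.0 / n
    return h * sum(f((i + 0.5) * h) for i in range(n))

def galerkin_poisson(g, n_basis=25):
    """Galerkin coefficients for -u'' = g, u(0) = u(1) = 0, in the basis sin(k pi x)."""
    coeffs = []
    for k in range(1, n_basis + 1):
        # a(phi_k, phi_k) = integral of (phi_k')**2 = (k pi)**2 / 2; off-diagonal entries vanish
        a_kk = (k * math.pi) ** 2 / 2.0
        b_k = integrate(lambda x, k=k: g(x) * math.sin(k * math.pi * x))
        coeffs.append(b_k / a_kk)
    return coeffs

def evaluate(coeffs, x):
    """Evaluate the Galerkin approximation sum_k c_k sin(k pi x)."""
    return sum(c * math.sin((k + 1) * math.pi * x) for k, c in enumerate(coeffs))

coeffs = galerkin_poisson(lambda x: 1.0)
u_mid = evaluate(coeffs, 0.5)  # the exact solution x (1 - x) / 2 equals 0.125 at x = 0.5
print(u_mid)
```

Because the chosen basis diagonalizes the bilinear form, no linear system has to be solved here; in general the Galerkin method leads to a linear system whose unique solvability is guaranteed by coercivity.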

Hilbert spaces allow for many elliptic partial differential equations to be formulated in a similar way, and the Lax–Milgram theorem is then a basic tool in their analysis. With suitable modifications, similar techniques can be applied to

parabolic partial differential equation


s and certain

hyperbolic partial differential equations.

Ergodic theory

The field of

ergodic theory


is the study of the long-term behavior of

chaotic


dynamical systems. The prototypical case of a field that ergodic theory applies to is

thermodynamics, in which—though the microscopic state of a system is extremely complicated (it is impossible to understand the ensemble of individual collisions between particles of matter)—the average behavior over sufficiently long time intervals is tractable. The

laws of thermodynamics are assertions about such average behavior. In particular, one formulation of the

zeroth law of thermodynamics asserts that over sufficiently long timescales, the only functionally independent measurement that one can make of a thermodynamic system in equilibrium is its total energy, in the form of

temperature.

An ergodic dynamical system is one for which, apart from the energy—measured by the

Hamiltonian—there are no other functionally independent

conserved quantities


on the

phase space


. More explicitly, suppose that the energy $E$ is fixed, and let $\Omega_E$ be the subset of the phase space consisting of all states of energy $E$ (an energy surface), and let $T_t$ denote the evolution operator on the phase space. The dynamical system is ergodic if there are no continuous non-constant functions $f$ on $\Omega_E$ such that

$$f(T_t w) = f(w)$$

for all $w$ on $\Omega_E$ and all time $t$.

Liouville's theorem implies that there exists a

measure


on the energy surface that is invariant under the

time translation. As a result, time translation is a

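unitary transformation of the Hilbert space $L^2(\Omega_E, \mu)$ consisting of square-integrable functions on the energy surface $\Omega_E$ with respect to the inner product

$$\langle f, g \rangle_{L^2(\Omega_E, \mu)} = \int_{\Omega_E} f\,\bar{g}\,\mathrm{d}\mu.$$

The von Neumann mean ergodic theorem states the following:

* If $U_t$ is a (strongly continuous) one-parameter semigroup of unitary operators on a Hilbert space $H$, and $P$ is the orthogonal projection onto the space of common fixed points of $U_t$, $\{x \in H \mid U_t x = x \text{ for all } t > 0\}$, then

$$Px = \lim_{T \to \infty} \frac{1}{T} \int_0^T U_t x \,\mathrm{d}t.$$

For an ergodic system, the fixed set of the time evolution consists only of the constant functions, so the ergodic theorem implies the following: for any function $f \in L^2(\Omega_E, \mu)$, with $\mu$ normalized so that $\mu(\Omega_E) = 1$,

$$\underset{T \to \infty}{L^2\text{-}\lim}\; \frac{1}{T} \int_0^T f(T_t w)\,\mathrm{d}t = \int_{\Omega_E} f(y)\,\mathrm{d}\mu(y).$$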

That is, the long time average of an observable is equal to its expectation value over an energy surface.
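A discrete-time analogue makes the statement easy to test. The sketch below assumes the rotation $x \mapsto x + \alpha \pmod 1$ of the circle with irrational $\alpha$, a standard example of an ergodic map preserving Lebesgue measure, and compares the time average of an observable along one orbit with its space average. The map, the observable, and the orbit length are assumptions of the illustration; the text above concerns continuous-time Hamiltonian flows.

```python
import math

def time_average(f, x0, alpha, n_steps):
    """Average f along the orbit x0, x0 + alpha, x0 + 2 alpha, ... (mod 1)."""
    return sum(f((x0 + n * alpha) % 1.0) for n in range(n_steps)) / n_steps

def observable(x):
    return math.cos(2.0 * math.pi * x) ** 2

alpha = math.sqrt(2.0) - 1.0  # irrational rotation angle
avg = time_average(observable, x0=0.1, alpha=alpha, n_steps=100_000)
space_average = 0.5           # integral of cos(2 pi x)**2 over [0, 1]
print(avg, space_average)
```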

Fourier analysis

One of the basic goals of

Fourier analysis


is to decompose a function into a (possibly infinite)

linear combination of given basis functions: the associated

Fourier series


. The classical Fourier series associated to a function $f$ defined on the interval $[0, 1]$ is a series of the form

$$\sum_{n=-\infty}^{\infty} a_n e^{2\pi i n \theta}$$

where

$$a_n = \int_0^1 f(\theta)\, e^{-2\pi i n \theta}\,\mathrm{d}\theta.$$

The example of adding up the first few terms in a Fourier series for a sawtooth function is shown in the figure. The basis functions are sine waves with wavelengths $\lambda/n$ (for integer $n$) shorter than the wavelength $\lambda$ of the sawtooth itself (except for $n = 1$, the ''fundamental'' wave). All basis functions have nodes at the nodes of the sawtooth, but all but the fundamental have additional nodes. The oscillation of the summed terms about the sawtooth is called the

Gibbs phenomenon.

A significant problem in classical Fourier series asks in what sense the Fourier series converges, if at all, to the function $f$. Hilbert space methods provide one possible answer to this question. The functions $e_n(\theta) = e^{2\pi i n \theta}$ form an orthogonal basis of the Hilbert space $L^2([0, 1])$. Consequently, any square-integrable function can be expressed as a series

$$f(\theta) = \sum_n a_n e_n(\theta), \qquad a_n = \langle f, e_n \rangle,$$

and, moreover, this series converges in the Hilbert space sense (that is, in the $L^2$ mean).

The problem can also be studied from the abstract point of view: every Hilbert space has an

orthonormal basis, and every element of the Hilbert space can be written in a unique way as a sum of multiples of these basis elements. The coefficients appearing on these basis elements are sometimes known abstractly as the Fourier coefficients of the element of the space. The abstraction is especially useful when it is more natural to use different basis functions for a space such as . In many circumstances, it is desirable not to decompose a function into trigonometric functions, but rather into

orthogonal polynomials or

wavelets for instance, and in higher dimensions into

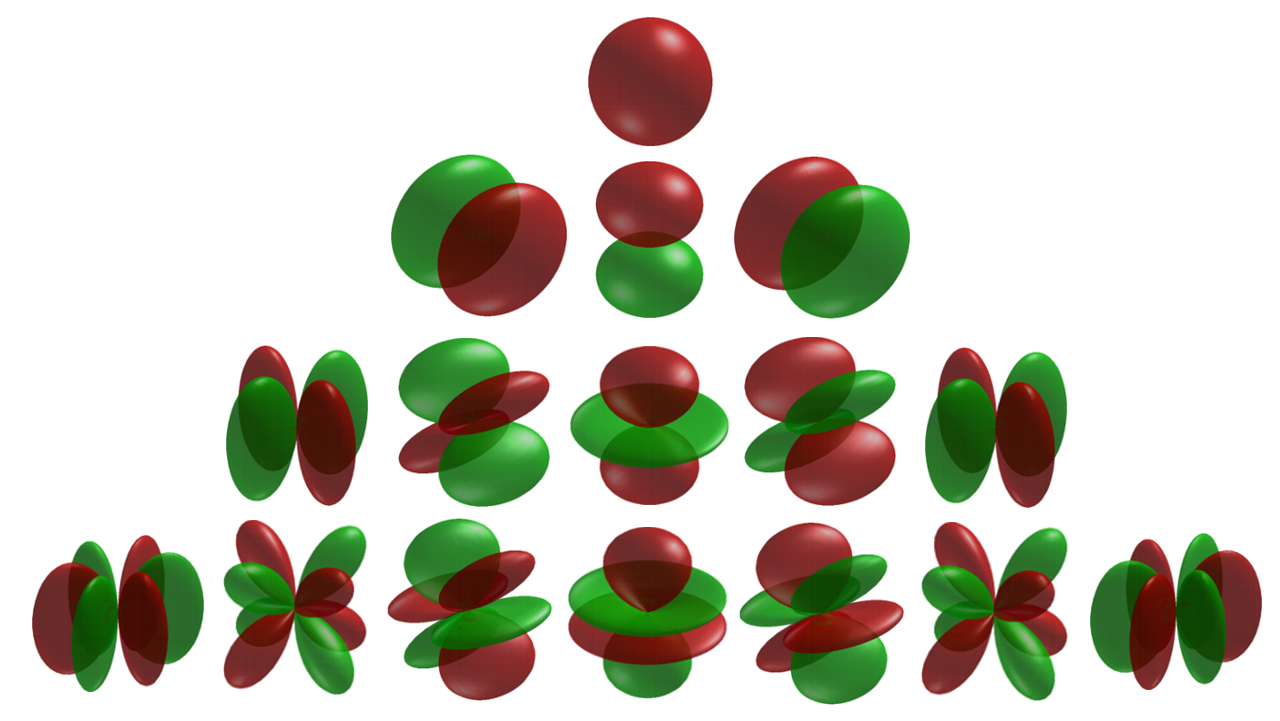

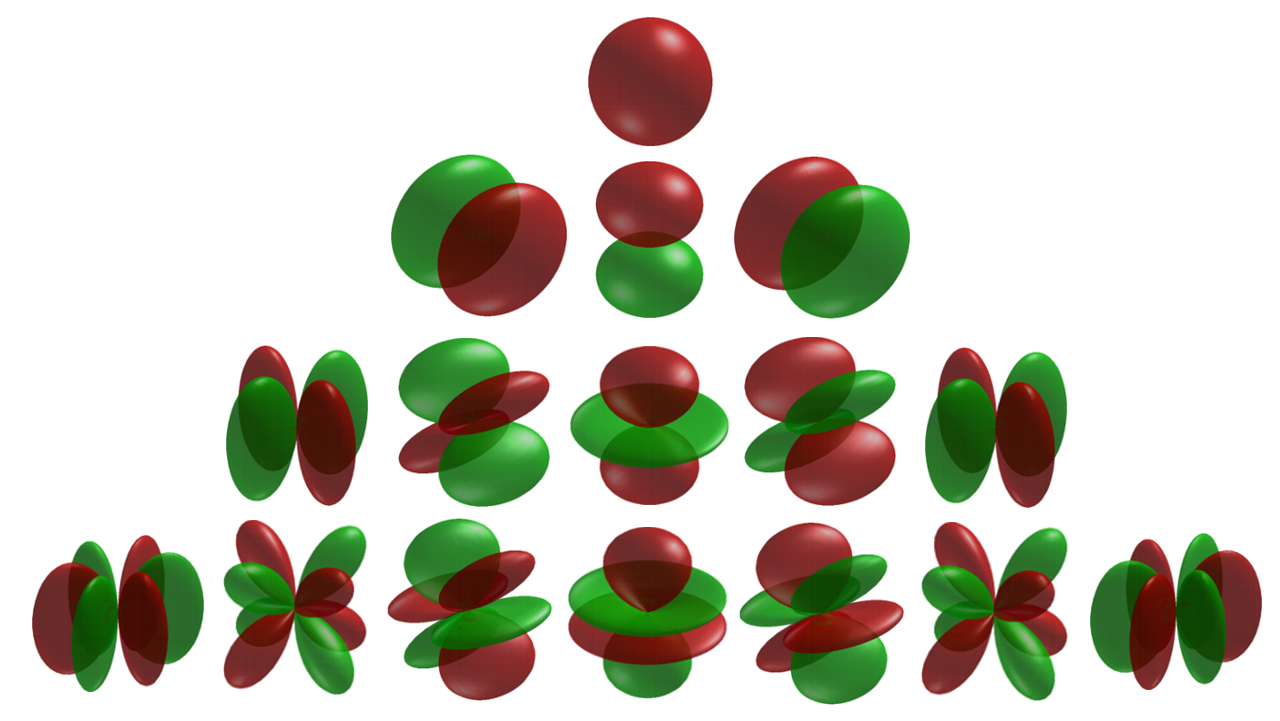

spherical harmonics.

For instance, if $e_n$ are any orthonormal basis functions of $L^2[0, 1]$, then a given function $f$ in $L^2[0, 1]$ can be approximated as a finite linear combination

$$f(x) \approx f_n(x) = a_1 e_1(x) + a_2 e_2(x) + \cdots + a_n e_n(x).$$

The coefficients $\{a_j\}$ are selected to make the magnitude of the difference $\|f - f_n\|^2$ as small as possible. Geometrically, the best approximation is the orthogonal projection of $f$ onto the subspace consisting of all linear combinations of the $\{e_j\}$, and can be calculated by

$$a_j = \int_0^1 \overline{e_j(x)}\, f(x)\,\mathrm{d}x.$$

That this formula minimizes the difference $\|f - f_n\|^2$ is a consequence of Bessel's inequality and Parseval's formula.
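The projection formula can be tried out numerically. The sketch below assumes the real orthonormal basis $e_j(x) = \sqrt{2}\sin(j\pi x)$ of $L^2[0, 1]$ and the function $f(x) = x$ (both chosen for illustration), computes the coefficients $a_j = \langle f, e_j \rangle$ by a midpoint rule, and uses orthonormality to obtain the squared error $\|f - f_n\|^2 = \|f\|^2 - \sum_{j \le n} a_j^2$, which Bessel's inequality guarantees is non-negative.

```python
import math

def inner(f, g, n=4000):
    """Real L^2([0, 1]) inner product, approximated by the midpoint rule."""
    h = 1.0 / n
    return h * sum(f((i + 0.5) * h) * g((i + 0.5) * h) for i in range(n))

def basis(j):
    """Orthonormal sine basis e_j(x) = sqrt(2) sin(j pi x), j = 1, 2, ..."""
    return lambda x: math.sqrt(2.0) * math.sin(j * math.pi * x)

def project(f, n_terms):
    """Coefficients a_j = <f, e_j> of the orthogonal projection onto span(e_1, ..., e_n)."""
    return [inner(f, basis(j)) for j in range(1, n_terms + 1)]

f = lambda x: x
norm_sq = inner(f, f)  # ||f||^2 = 1/3
a = project(f, 25)

# By orthonormality, ||f - f_n||^2 = ||f||^2 - (a_1^2 + ... + a_n^2).
errors = [norm_sq - sum(c * c for c in a[:n]) for n in (1, 5, 25)]
print(errors)
```

The errors shrink as more basis functions are included, in line with convergence of the expansion in the $L^2$ sense.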

In various applications to physical problems, a function can be decomposed into physically meaningful

eigenfunctions of a

differential operator


(typically the

Laplace operator


): this forms the foundation for the spectral study of functions, in reference to the

spectrum of the differential operator. A concrete physical application involves the problem of

hearing the shape of a drum: given the fundamental modes of vibration that a drumhead is capable of producing, can one infer the shape of the drum itself? The mathematical formulation of this question involves the

Dirichlet eigenvalue

s of the Laplace equation in the plane, that represent the fundamental modes of vibration in direct analogy with the integers that represent the fundamental modes of vibration of the violin string.

Spectral theory also underlies certain aspects of the

Fourier transform


of a function. Whereas Fourier analysis decomposes a function defined on a

compact set into the discrete spectrum of the Laplacian (which corresponds to the vibrations of a violin string or drum), the Fourier transform of a function is the decomposition of a function defined on all of Euclidean space into its components in the

continuous spectrum


of the Laplacian. The Fourier transformation is also geometrical, in a sense made precise by the

Plancherel theorem, that asserts that it is an

isometry of one Hilbert space (the "time domain") with another (the "frequency domain"). This isometry property of the Fourier transformation is a recurring theme in abstract

harmonic analysis


(since it reflects the conservation of energy for the continuous Fourier transform), as evidenced for instance by the

Plancherel theorem for spherical functions occurring in

noncommutative harmonic analysis


.

Quantum mechanics

In the mathematically rigorous formulation of

quantum mechanics, developed by

John von Neumann, the possible states (more precisely, the

pure states) of a quantum mechanical system are represented by

unit vectors (called ''state vectors'') residing in a complex separable Hilbert space, known as the

state space, well defined up to a complex number of norm 1 (the

phase factor). In other words, the possible states are points in the

projectivization of a Hilbert space, usually called the

complex projective space. The exact nature of this Hilbert space is dependent on the system; for example, the space of position and momentum states for a single non-relativistic spin-zero particle is the space of all

square-integrable functions, while the states for the spin of a single proton are unit elements of the two-dimensional complex Hilbert space of

spinors. Each observable is represented by a

self-adjoint linear operator


acting on the state space. Each eigenstate of an observable corresponds to an

eigenvector of the operator, and the associated

eigenvalue corresponds to the value of the observable in that eigenstate.

The inner product between two state vectors is a complex number known as a

probability amplitude. During an ideal measurement of a quantum mechanical system, the probability that a system collapses from a given initial state to a particular eigenstate is given by the square of the

absolute value


of the probability amplitude between the initial and final states. The possible results of a measurement are the eigenvalues of the operator—which explains the choice of self-adjoint operators, for all the eigenvalues must be real. The probability distribution of an observable in a given state can be found by computing the spectral decomposition of the corresponding operator.
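A two-dimensional example shows how these rules combine. The sketch below assumes a spin-like system with state space $\mathbb{C}^2$, takes the Pauli matrix $\sigma_x$ as the observable (eigenvalues $\pm 1$ with eigenvectors $(1, \pm 1)/\sqrt{2}$), and an arbitrarily chosen unit state vector. Outcome probabilities are the squared absolute values of the amplitudes, and the expectation value is the probability-weighted sum of eigenvalues. All names and the chosen state are illustrative.

```python
import math

def inner(u, v):
    """Inner product on C^2, linear in the first argument and antilinear in the second."""
    return sum(a * b.conjugate() for a, b in zip(u, v))

s = 1.0 / math.sqrt(2.0)
# Eigenvalues and normalized eigenvectors of the Pauli matrix sigma_x = [[0, 1], [1, 0]].
eigenpairs = [(+1.0, (s, s)), (-1.0, (s, -s))]

psi = (0.6, 0.8)  # a unit state vector, chosen for illustration

# Probability of each outcome: squared modulus of the amplitude <psi, e>.
probs = {lam: abs(inner(psi, e)) ** 2 for lam, e in eigenpairs}
expectation = sum(lam * p for lam, p in probs.items())
print(probs, expectation)
```

The probabilities sum to one because the eigenvectors form an orthonormal basis and the state has unit norm.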

For a general system, states are typically not pure, but instead are represented as statistical mixtures of pure states, or mixed states, given by

density matrices


: self-adjoint operators of

trace one on a Hilbert space. Moreover, for general quantum mechanical systems, the effects of a single measurement can influence other parts of a system in a manner that is described instead by a

positive operator valued measure


. Thus the structure both of the states and observables in the general theory is considerably more complicated than the idealization for pure states.

Color perception

Any true physical color can be represented by a combination of pure

spectral color


s. As physical colors can be composed of any number of spectral colors, the space of physical colors may aptly be represented by a Hilbert space over spectral colors. Humans have

three types of cone cells for color perception, so the perceivable colors can be represented by 3-dimensional Euclidean space. The many-to-one linear mapping from the Hilbert space of physical colors to the Euclidean space of human perceivable colors explains why many distinct physical colors may be perceived by humans to be identical (e.g., pure yellow light versus a mix of red and green light, see

metamerism).

Properties

Pythagorean identity

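Two vectors $u$ and $v$ in a Hilbert space $H$ are orthogonal when $\langle u, v \rangle = 0$. The notation for this is $u \perp v$. More generally, when $S$ is a subset in $H$, the notation $u \perp S$ means that $u$ is orthogonal to every element from $S$.

When $u$ and $v$ are orthogonal, one has

$$\|u + v\|^2 = \langle u + v, u + v \rangle = \langle u, u \rangle + 2\operatorname{Re}\langle u, v \rangle + \langle v, v \rangle = \|u\|^2 + \|v\|^2.$$

By induction on $n$, this is extended to any family $u_1, \ldots, u_n$ of $n$ orthogonal vectors,

$$\|u_1 + \cdots + u_n\|^2 = \|u_1\|^2 + \cdots + \|u_n\|^2.$$

Whereas the Pythagorean identity as stated is valid in any inner product space, completeness is required for the extension of the Pythagorean identity to series. A series $\sum u_k$ of ''orthogonal'' vectors converges in $H$ if and only if the series of squares of norms converges, and

$$\Bigl\|\sum_{k=0}^{\infty} u_k\Bigr\|^2 = \sum_{k=0}^{\infty} \|u_k\|^2.$$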

Furthermore, the sum of a series of orthogonal vectors is independent of the order in which it is taken.

Parallelogram identity and polarization

By definition, every Hilbert space is also a Banach space. Furthermore, in every Hilbert space the following parallelogram identity holds:

$$\|u + v\|^2 + \|u - v\|^2 = 2\bigl(\|u\|^2 + \|v\|^2\bigr).$$

Conversely, every Banach space in which the parallelogram identity holds is a Hilbert space, and the inner product is uniquely determined by the norm by the polarization identity. For real Hilbert spaces, the polarization identity is

$$\langle u, v \rangle = \tfrac{1}{4}\bigl(\|u + v\|^2 - \|u - v\|^2\bigr).$$

For complex Hilbert spaces, it is

$$\langle u, v \rangle = \tfrac{1}{4}\bigl(\|u + v\|^2 - \|u - v\|^2 + i\|u + iv\|^2 - i\|u - iv\|^2\bigr).$$

The parallelogram law implies that any Hilbert space is a uniformly convex Banach space.
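Both identities are easy to verify numerically in $\mathbb{C}^3$. The sketch below assumes the inner product $\langle u, v \rangle = \sum_i u_i \overline{v_i}$, linear in the first argument, and two arbitrarily chosen vectors; the helper names are illustrative.

```python
def inner(u, v):
    """Inner product on C^n, linear in the first argument and antilinear in the second."""
    return sum(a * b.conjugate() for a, b in zip(u, v))

def norm_sq(u):
    return inner(u, u).real

def add(u, v, c=1):
    """Return the vector u + c * v."""
    return [a + c * b for a, b in zip(u, v)]

u = [1 + 2j, -0.5j, 3.0]
v = [2 - 1j, 1.0, 1j]

# Parallelogram identity: ||u + v||^2 + ||u - v||^2 = 2 (||u||^2 + ||v||^2).
lhs = norm_sq(add(u, v)) + norm_sq(add(u, v, -1))
rhs = 2.0 * (norm_sq(u) + norm_sq(v))

# Complex polarization identity: recover <u, v> from norms alone.
polar = 0.25 * (
    norm_sq(add(u, v)) - norm_sq(add(u, v, -1))
    + 1j * norm_sq(add(u, v, 1j)) - 1j * norm_sq(add(u, v, -1j))
)
print(lhs - rhs, polar - inner(u, v))
```

With an inner product that is linear in the second argument instead, the signs of the two imaginary terms in the polarization identity would be swapped.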

Best approximation

This subsection employs the

Hilbert projection theorem. If $C$ is a non-empty closed convex subset of a Hilbert space $H$ and $x$ a point in $H$, there exists a unique point $y \in C$ that minimizes the distance between $x$ and points in $C$,

$$y \in C, \qquad \|x - y\| = \operatorname{dist}(x, C) = \min\{\|x - z\| : z \in C\}.$$

This is equivalent to saying that there is a point with minimal norm in the translated convex set $D = C - x$. The proof consists in showing that every minimizing sequence $(d_n) \subset D$ is Cauchy (using the parallelogram identity) hence converges (using completeness) to a point in $D$ that has minimal norm. More generally, this holds in any uniformly convex Banach space.

When this result is applied to a closed subspace $F$ of $H$, it can be shown that the point $y \in F$ closest to $x$ is characterized by

$$y \in F, \qquad x - y \perp F.$$

This point $y$ is the ''orthogonal projection'' of $x$ onto $F$, and the mapping $P_F : x \mapsto y$ is linear (see

Orthogonal complements and projections). This result is particularly significant in

applied mathematics, especially

numerical analysis, where it forms the basis of

least squares


methods.
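The link to least squares can be seen in a small example. The sketch below fits a straight line $c_0 + c_1 t$ to four made-up data points by projecting the data vector $y$ orthogonally onto the subspace spanned by the vectors $(1, 1, 1, 1)$ and $t$. The coefficients come from the normal equations, which state that the residual is orthogonal to both spanning vectors. The data and names are purely illustrative.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

t = [0.0, 1.0, 2.0, 3.0]
y = [1.0, 3.0, 4.0, 4.0]  # made-up data, for illustration only
ones = [1.0] * len(t)

# Normal equations: <y - c0*ones - c1*t, ones> = 0 and <y - c0*ones - c1*t, t> = 0.
g11, g12, g22 = dot(ones, ones), dot(ones, t), dot(t, t)
b1, b2 = dot(y, ones), dot(y, t)
det = g11 * g22 - g12 * g12
c0 = (b1 * g22 - g12 * b2) / det
c1 = (g11 * b2 - g12 * b1) / det

projection = [c0 + c1 * ti for ti in t]               # closest point in the subspace
residual = [yi - pi for yi, pi in zip(y, projection)]
print(c0, c1, dot(residual, ones), dot(residual, t))
```

The vanishing inner products of the residual with the spanning vectors are exactly the characterization of the closest point in a closed subspace.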

In particular, when $F$ is not equal to $H$, one can find a nonzero vector $v$ orthogonal to $F$ (select $x \notin F$ and $v = x - y$). A very useful criterion is obtained by applying this observation to the closed subspace $F$ generated by a subset $S$ of $H$.

: A subset $S$ of $H$ spans a dense vector subspace if (and only if) the vector 0 is the sole vector $v \in H$ orthogonal to $S$.

Duality

The dual space $H^*$ is the space of all continuous linear functions from the space $H$ into the base field. It carries a natural norm, defined by

$$\|\varphi\| = \sup_{\|x\| = 1,\; x \in H} |\varphi(x)|.$$

This norm satisfies the parallelogram law, and so the dual space is also an inner product space where this inner product can be defined in terms of this dual norm by using the polarization identity. The dual space is also complete so it is a Hilbert space in its own right.

If $e_\bullet = (e_i)_{i \in I}$ is a complete orthonormal basis for $H$ then the inner product on the dual space of any two $f, g \in H^*$ is

$$\langle f, g \rangle_{H^*} = \sum_{i \in I} f(e_i)\,\overline{g(e_i)}$$

where all but countably many of the terms in this series are zero.

The Riesz representation theorem affords a convenient description of the dual space. To every element $u$ of $H$, there is a unique element $\varphi_u$ of $H^*$, defined by

$$\varphi_u(x) = \langle x, u \rangle,$$

where moreover, $\|\varphi_u\| = \|u\|$.

The Riesz representation theorem states that the map from $H$ to $H^*$ defined by $u \mapsto \varphi_u$ is surjective, which makes this map an isometric antilinear isomorphism. So to every element $\varphi$ of the dual $H^*$ there exists one and only one $u_\varphi$ in $H$ such that

$$\langle x, u_\varphi \rangle = \varphi(x)$$

for all $x \in H$. The inner product on the dual space $H^*$ satisfies

$$\langle \varphi, \psi \rangle = \langle u_\psi, u_\varphi \rangle.$$

The reversal of order on the right-hand side restores linearity in $\varphi$ from the antilinearity of $u_\varphi$. In the real case, the antilinear isomorphism from $H$ to its dual is actually an isomorphism, and so real Hilbert spaces are naturally isomorphic to their own duals.

The representing vector $u_\varphi$ is obtained in the following way. When $\varphi \ne 0$, the kernel $F = \operatorname{Ker}(\varphi)$ is a closed vector subspace of $H$, not equal to $H$, hence there exists a nonzero vector $v$ orthogonal to $F$. The vector $u$ is a suitable scalar multiple $\lambda v$ of $v$. The requirement that $\varphi(v) = \langle v, u \rangle$ yields

$$u = \langle v, v \rangle^{-1}\,\overline{\varphi(v)}\,v.$$

This correspondence $\varphi \leftrightarrow u$ is exploited by the bra–ket notation popular in physics. It is common in physics to assume that the inner product, denoted by $\langle x | y \rangle$, is linear on the right,

$$\langle x | y \rangle = \langle y, x \rangle.$$

The result $\langle x | y \rangle$ can be seen as the action of the linear functional $\langle x |$ (the ''bra'') on the vector $| y \rangle$ (the ''ket'').

The Riesz representation theorem relies fundamentally not just on the presence of an inner product, but also on the completeness of the space. In fact, the theorem implies that the

topological dual of any inner product space can be identified with its completion. An immediate consequence of the Riesz representation theorem is also that a Hilbert space is

reflexive, meaning that the natural map from $H$ into its

double dual space is an isomorphism.
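In finite dimensions the Riesz representation can be written down explicitly. The sketch below assumes $H = \mathbb{C}^3$ with the inner product $\langle x, u \rangle = \sum_i x_i \overline{u_i}$ and a linear functional $\varphi(x) = \sum_i c_i x_i$ with hypothetical coefficients $c_i$. Its representing vector is $u = (\overline{c_1}, \overline{c_2}, \overline{c_3})$, and the norm of $\varphi$ is attained at $u / \|u\|$.

```python
import math

def inner(x, u):
    """<x, u> on C^n, linear in x and antilinear in u."""
    return sum(a * b.conjugate() for a, b in zip(x, u))

c = [2 - 1j, 0.5j, -3.0]  # hypothetical coefficients of phi(x) = sum_i c_i x_i

def phi(x):
    return sum(ci * xi for ci, xi in zip(c, x))

# Riesz representative: u_i = conj(c_i), so that <x, u> = sum_i x_i c_i = phi(x).
u = [ci.conjugate() for ci in c]

x = [1 + 1j, -2.0, 0.25 - 4j]  # an arbitrary test vector
gap = abs(phi(x) - inner(x, u))

# The functional norm equals ||u||: it is attained at the unit vector u / ||u||.
norm_u = math.sqrt(inner(u, u).real)
unit = [ui / norm_u for ui in u]
attained = abs(phi(unit))
print(gap, norm_u, attained)
```

The conjugation in the definition of $u$ is the finite-dimensional trace of the antilinearity of the map $\varphi \mapsto u_\varphi$.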

Weakly-convergent sequences

In a Hilbert space $H$, a sequence $\{x_n\}$ is weakly convergent to a vector $x \in H$ when

$$\lim_{n} \langle x_n, v \rangle = \langle x, v \rangle$$

for every $v \in H$.

For example, any orthonormal sequence converges weakly to 0, as a consequence of

Bessel's inequality. Every weakly convergent sequence is bounded, by the

uniform boundedness principle


.
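The difference between weak and norm convergence shows up already for the standard basis vectors of $\ell^2$. The sketch below works in a large finite truncation (the dimension `N` is an arbitrary stand-in for infinite dimension) and pairs the basis vectors $e_n$ with the fixed square-summable vector $v = (1, 1/2, 1/3, \dots)$, chosen for illustration. The pairings $\langle e_n, v \rangle$ tend to 0, while the distance between any two distinct basis vectors stays at $\sqrt{2}$, so the sequence has no norm-convergent subsequence.

```python
import math

N = 2000  # truncation dimension, standing in for the infinite-dimensional space l^2

def e(n):
    """n-th standard basis vector (1-based) of the truncated space."""
    return [1.0 if k == n else 0.0 for k in range(1, N + 1)]

def inner(x, y):
    """Real inner product on the truncated space."""
    return sum(a * b for a, b in zip(x, y))

v = [1.0 / k for k in range(1, N + 1)]  # a fixed square-summable vector

# <e_n, v> picks out the n-th coordinate of v, which tends to 0 as n grows.
pairings = [inner(e(n), v) for n in (1, 10, 100, 1000)]

# Distinct orthonormal vectors are always at distance sqrt(2) from each other.
diff = [a - b for a, b in zip(e(10), e(1000))]
distance = math.sqrt(inner(diff, diff))
print(pairings, distance)
```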

Conversely, every bounded sequence in a Hilbert space admits weakly convergent subsequences (

Alaoglu's theorem). This fact may be used to prove minimization results for continuous

convex function


als, in the same way that the

Bolzano–Weierstrass theorem is used for continuous functions on $\mathbb{R}^d$. Among several variants, one simple statement is as follows:

:If $f : H \to \mathbb{R}$ is a convex continuous function such that $f(x)$ tends to $+\infty$ when $\|x\|$ tends to $\infty$, then $f$ admits a minimum at some point $x_0 \in H$.

This fact (and its various generalizations) is fundamental for

direct methods in the

calculus of variations


. Minimization results for convex functionals are also a direct consequence of the slightly more abstract fact that closed bounded convex subsets in a Hilbert space are

weakly compact, since $H$ is reflexive. The existence of weakly convergent subsequences is a special case of the

Eberlein–Šmulian theorem.

Banach space properties

Any general property of

Banach space


s continues to hold for Hilbert spaces. The

open mapping theorem states that a

continuous surjective


linear transformation from one Banach space to another is an

open mapping meaning that it sends open sets to open sets. A corollary is the

bounded inverse theorem, that a continuous and

bijective linear function from one Banach space to another is an isomorphism (that is, a continuous linear map whose inverse is also continuous). This theorem is considerably simpler to prove in the case of Hilbert spaces than in general Banach spaces. The open mapping theorem is equivalent to the

closed graph theorem, which asserts that a linear function from one Banach space to another is continuous if and only if its graph is a

closed set


. In the case of Hilbert spaces, this is basic in the study of

unbounded operators (see

closed operator).

The (geometrical) Hahn–Banach theorem asserts that a closed convex set can be separated from any point outside it by means of a hyperplane of the Hilbert space. This is an immediate consequence of the best approximation property: if $y$ is the element of a closed convex set $F$ closest to $x$, then the separating hyperplane is the plane perpendicular to the segment $xy$ passing through its midpoint.

Operators on Hilbert spaces

Bounded operators

The continuous linear operators $A : H_1 \to H_2$ from a Hilbert space $H_1$ to a second Hilbert space $H_2$ are ''bounded'' in the sense that they map bounded sets to bounded sets. Conversely, if an operator is bounded, then it is continuous. The space of such bounded linear operators has a norm, the operator norm given by

$$\lVert A \rVert = \sup \{ \lVert Ax \rVert : \lVert x \rVert \le 1 \}.$$

The sum and the composite of two bounded linear operators are again bounded and linear. For $y$ in $H_2$, the map that sends $x \in H_1$ to $\langle Ax, y \rangle$ is linear and continuous, and according to the Riesz representation theorem can therefore be represented in the form

$$\langle x, A^* y \rangle = \langle Ax, y \rangle$$

for some vector $A^* y$ in $H_1$. This defines another bounded linear operator $A^* : H_2 \to H_1$, the adjoint of $A$. The adjoint satisfies $A^{**} = A$. When the Riesz representation theorem is used to identify each Hilbert space with its continuous dual space, the adjoint of $A$ can be shown to be identical to the transpose ${}^t\!A : H_2^* \to H_1^*$ of $A$, which by definition sends $\psi \in H_2^*$ to the functional $\psi \circ A \in H_1^*$.
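In finite dimensions the adjoint is the conjugate transpose, which gives a quick way to sanity-check the defining identity $\langle Ax, y \rangle = \langle x, A^* y \rangle$. The following Python sketch is illustrative only: the helper names `inner`, `apply` and `adjoint` are hypothetical, the matrix and vectors are arbitrary, and the inner product is assumed to be linear in its first argument.

```python
# Finite-dimensional sketch: an operator C^2 -> C^3 stored as a list of rows.

def inner(u, v):
    """Inner product on C^n, assumed linear in the first argument."""
    return sum(a * b.conjugate() for a, b in zip(u, v))

def apply(A, x):
    """Matrix-vector product A x."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]

def adjoint(A):
    """Conjugate transpose of A (the adjoint in finite dimensions)."""
    rows, cols = len(A), len(A[0])
    return [[A[i][j].conjugate() for i in range(rows)] for j in range(cols)]

A = [[1 + 2j, 0j], [3j, 1 - 1j], [2 + 0j, -1j]]   # maps C^2 -> C^3
x = [1 - 1j, 2 + 0j]                               # vector in C^2
y = [1j, 1 + 1j, -2 + 0j]                          # vector in C^3

lhs = inner(apply(A, x), y)            # <Ax, y>, computed in C^3
rhs = inner(x, apply(adjoint(A), y))   # <x, A*y>, computed in C^2
```

Here `lhs` and `rhs` agree, and applying `adjoint` twice returns the original matrix, mirroring $A^{**} = A$.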

The set $B(H)$ of all bounded linear operators on $H$ (meaning operators $H \to H$), together with the addition and composition operations, the norm and the adjoint operation, is a C*-algebra, which is a type of operator algebra.

An element $A$ of $B(H)$ is called 'self-adjoint' or 'Hermitian' if $A^* = A$. If $A$ is Hermitian and $\langle Ax, x \rangle \ge 0$ for every $x$, then $A$ is called 'nonnegative', written $A \ge 0$; if equality holds only when $x = 0$, then $A$ is called 'positive'. The set of self-adjoint operators admits a partial order, in which $A \ge B$ if $A - B \ge 0$. If $A$ has the form $B^* B$ for some $B$, then $A$ is nonnegative; if $B$ is invertible, then $A$ is positive. A converse is also true in the sense that, for a nonnegative operator $A$, there exists a unique nonnegative square root $B$ such that

$$A = B^2 = B^* B.$$

In a sense made precise by the spectral theorem, self-adjoint operators can usefully be thought of as operators that are "real". An element $A$ of $B(H)$ is called ''normal'' if $A^* A = A A^*$. Normal operators decompose into the sum of a self-adjoint operator and an imaginary multiple of a self-adjoint operator

$$A = \frac{A + A^*}{2} + i \, \frac{A - A^*}{2i}$$

that commute with each other. Normal operators can also usefully be thought of in terms of their real and imaginary parts.
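The split of an operator into "real" and "imaginary" self-adjoint parts can be tried out on small matrices. The sketch below is a minimal illustration, assuming $2 \times 2$ matrices stored as lists of rows; all helper names (`real_imag_parts`, `parts_commute`, and so on) and both example matrices are hypothetical choices, not taken from the text.

```python
def adjoint(A):
    """Conjugate transpose of a square matrix given as a list of rows."""
    n = len(A)
    return [[A[j][i].conjugate() for j in range(n)] for i in range(n)]

def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def scale(c, A):
    return [[c * a for a in row] for row in A]

def matmul(A, B):
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def close(A, B, tol=1e-12):
    """Entrywise comparison up to a small tolerance."""
    return all(abs(a - b) <= tol for ra, rb in zip(A, B) for a, b in zip(ra, rb))

def real_imag_parts(A):
    """Return (R, S) with A = R + i S, where R and S are self-adjoint."""
    A_star = adjoint(A)
    R = scale(0.5, add(A, A_star))                 # (A + A*) / 2
    S = scale(-0.5j, add(A, scale(-1, A_star)))    # (A - A*) / (2i)
    return R, S

def parts_commute(A):
    """True when the two self-adjoint parts of A commute."""
    R, S = real_imag_parts(A)
    return close(matmul(R, S), matmul(S, R))

normal = [[1, -1], [1, 1]]       # identity plus a rotation, so A*A = AA*
not_normal = [[0, 1], [0, 0]]    # nilpotent matrix, so A*A != AA*
```

For `normal` the two parts commute, while for `not_normal` they do not, even though both matrices still decompose as $R + iS$ with $R$ and $S$ self-adjoint.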

An element $U$ of $B(H)$ is called unitary if $U$ is invertible and its inverse is given by $U^*$. This can also be expressed by requiring that $U$ be onto and $\langle Ux, Uy \rangle = \langle x, y \rangle$ for all $x, y \in H$. The unitary operators form a group under composition, which is the isometry group of $H$.

An element of $B(H)$ is compact if it sends bounded sets to relatively compact sets. Equivalently, a bounded operator $T$ is compact if, for any bounded sequence $\{x_k\}$, the sequence $\{T x_k\}$ has a convergent subsequence. Many integral operators are compact, and in fact define a special class of operators known as Hilbert–Schmidt operators that are especially important in the study of integral equations.

Fredholm operators are the bounded operators that are invertible modulo compact operators; a nonzero multiple of the identity plus a compact operator is a basic example. They are equivalently characterized as operators with a finite-dimensional kernel and cokernel. The index of a Fredholm operator $T$ is defined by

$$\operatorname{index} T = \dim \ker T - \dim \operatorname{coker} T.$$

The index is homotopy invariant, and plays a deep role in differential geometry via the Atiyah–Singer index theorem.
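In finite dimensions every linear map is Fredholm, and the rank-nullity theorem shows that the index of a map from an $n$-dimensional space to an $m$-dimensional space is always $n - m$, whatever the map is. The sketch below checks this on small matrices; it is only an illustration, with hypothetical function names `rank` and `fredholm_index`, and it works over the rationals so that the elimination is exact.

```python
from fractions import Fraction

def rank(M):
    """Rank of a matrix (list of rows) by exact Gaussian elimination."""
    rows = [[Fraction(v) for v in row] for row in M]
    r = 0  # number of pivots found so far
    for c in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def fredholm_index(M):
    """dim ker M - dim coker M for an m x n matrix M acting on column vectors."""
    m, n = len(M), len(M[0])
    rk = rank(M)
    dim_kernel = n - rk      # rank-nullity theorem
    dim_cokernel = m - rk    # codimension of the range
    return dim_kernel - dim_cokernel

T = [[1, 2, 3], [2, 4, 6]]   # rank-one map from a 3-dimensional to a 2-dimensional space
```

For `T` the kernel has dimension 2 and the cokernel dimension 1, so the index is 1, the same value as for any other $2 \times 3$ matrix. This insensitivity to the particular map is the finite-dimensional shadow of homotopy invariance.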

Unbounded operators

Unbounded operators are also tractable in Hilbert spaces, and have important applications to quantum mechanics. An unbounded operator $T$ on a Hilbert space $H$ is defined as a linear operator whose domain $D(T)$ is a linear subspace of $H$. Often the domain $D(T)$ is a dense subspace of $H$, in which case $T$ is known as a densely defined operator.

The adjoint of a densely defined unbounded operator is defined in essentially the same manner as for bounded operators.

Self-adjoint unbounded operators play the role of the ''observables'' in the mathematical formulation of quantum mechanics. Examples of self-adjoint unbounded operators on the Hilbert space $L^2(\mathbb{R})$ are:

* A suitable extension of the differential operator $(Af)(x) = -i \frac{\mathrm{d}}{\mathrm{d}x} f(x)$, where $i$ is the imaginary unit and $f$ is a differentiable function of compact support.

* The multiplication-by-$x$ operator: $(Bf)(x) = x f(x)$.

These correspond to the momentum and position observables, respectively. Note that neither $A$ nor $B$ is defined on all of $L^2(\mathbb{R})$, since in the case of $A$ the derivative need not exist, and in the case of $B$ the product function need not be square integrable. In both cases, the set of possible arguments forms a dense subspace of $L^2(\mathbb{R})$.
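To see concretely why the multiplication-by-$x$ operator cannot be defined on every square-integrable function, consider the following worked example. The test function $f$ is chosen here purely for illustration and does not come from the text above.

```latex
f(x) = \frac{1}{\sqrt{1 + x^2}}, \qquad
\lVert f \rVert^2 = \int_{-\infty}^{\infty} \frac{\mathrm{d}x}{1 + x^2} = \pi < \infty,
\qquad
\lVert x f \rVert^2 = \int_{-\infty}^{\infty} \frac{x^2}{1 + x^2} \, \mathrm{d}x = \infty .
```

So $f$ is square integrable, while $x f(x)$ is not, because its squared modulus tends to 1 as $|x| \to \infty$.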

Constructions

Direct sums

Two Hilbert spaces $H_1$ and $H_2$ can be combined into another Hilbert space, called the (orthogonal) direct sum, and denoted

$$H_1 \oplus H_2,$$

consisting of the set of all ordered pairs $(x_1, x_2)$ where $x_i \in H_i$, $i = 1, 2$, and inner product defined by

$$\langle (x_1, x_2), (y_1, y_2) \rangle_{H_1 \oplus H_2} = \langle x_1, y_1 \rangle_{H_1} + \langle x_2, y_2 \rangle_{H_2}.$$

More generally, if $H_i$ is a family of Hilbert spaces indexed by $i \in I$, then the direct sum of the $H_i$, denoted

$$\bigoplus_{i \in I} H_i,$$

consists of the set of all indexed families

$$x = (x_i \in H_i \mid i \in I) \in \prod_{i \in I} H_i$$

in the Cartesian product of the $H_i$ such that

$$\sum_{i \in I} \lVert x_i \rVert^2 < \infty.$$

The inner product is defined by

$$\langle x, y \rangle = \sum_{i \in I} \langle x_i, y_i \rangle_{H_i}.$$

Each of the $H_i$ is included as a closed subspace in the direct sum of all of the $H_i$. Moreover, the $H_i$ are pairwise orthogonal. Conversely, if there is a system of closed subspaces, $V_i$, $i \in I$, in a Hilbert space $H$, that are pairwise orthogonal and whose union has dense linear span in $H$, then $H$ is canonically isomorphic to the direct sum of the $V_i$. In this case, $H$ is called the internal direct sum of the $V_i$. A direct sum (internal or external) is also equipped with a family of orthogonal projections $E_i$ onto the $i$th direct summand $H_i$. These projections are bounded, self-adjoint, idempotent operators that satisfy the orthogonality condition

$$E_i E_j = 0, \quad i \neq j.$$
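The direct sum of two finite-dimensional spaces makes these properties easy to check by hand or by machine. The following sketch models an element of the direct sum as a pair of coordinate lists; the names `ds_inner`, `E1` and `E2` are hypothetical, the sample vectors are arbitrary, and the inner product is assumed to be linear in its first argument.

```python
def inner(u, v):
    """Inner product on C^n, assumed linear in the first argument."""
    return sum(a * b.conjugate() for a, b in zip(u, v))

def ds_inner(x, y):
    """Inner product on the direct sum: add the inner products of the components."""
    (x1, x2), (y1, y2) = x, y
    return inner(x1, y1) + inner(x2, y2)

def E1(x):
    """Orthogonal projection onto the first summand."""
    x1, x2 = x
    return (list(x1), [0] * len(x2))

def E2(x):
    """Orthogonal projection onto the second summand."""
    x1, x2 = x
    return ([0] * len(x1), list(x2))

z = ([1 + 1j, 2], [3j])      # element of C^2 (+) C^1
w = ([1j, 1], [2 + 0j])      # another element of the same direct sum

zero = ([0, 0], [0])
```

With these definitions `E1(E2(z))` equals `zero`, each projection is idempotent, and `ds_inner(E1(z), w)` equals `ds_inner(z, E1(w))`, matching the orthogonality condition and self-adjointness stated above.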

The spectral theorem for compact self-adjoint operators on a Hilbert space $H$ states that $H$ splits into an orthogonal direct sum of the eigenspaces of an operator, and also gives an explicit decomposition of the operator as a sum of projections onto the eigenspaces. The direct sum of Hilbert spaces also appears in quantum mechanics as the Fock space of a system containing a variable number of particles, where each Hilbert space in the direct sum corresponds to an additional degree of freedom for the quantum mechanical system. In representation theory, the Peter–Weyl theorem guarantees that any unitary representation of a compact group on a Hilbert space splits as the direct sum of finite-dimensional representations.

Tensor products

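If $x_1, y_1 \in H_1$ and $x_2, y_2 \in H_2$, then one defines an inner product on the (ordinary) tensor product as follows. On simple tensors, let

$$\langle x_1 \otimes x_2, \, y_1 \otimes y_2 \rangle = \langle x_1, y_1 \rangle \, \langle x_2, y_2 \rangle.$$

This formula then extends by sesquilinearity to an inner product on $H_1 \otimes H_2$. The Hilbertian tensor product of $H_1$ and $H_2$, sometimes denoted by $H_1 \mathbin{\widehat{\otimes}} H_2$, is the Hilbert space obtained by completing $H_1 \otimes H_2$ for the metric associated to this inner product.

An example is provided by the Hilbert space $L^2([0, 1])$. The Hilbertian tensor product of two copies of $L^2([0, 1])$ is isometrically and linearly isomorphic to the space $L^2([0, 1]^2)$ of square-integrable functions on the square $[0, 1]^2$. This isomorphism sends a simple tensor $f_1 \otimes f_2$ to the function

$$(s, t) \mapsto f_1(s) \, f_2(t)$$

on the square.

This example is typical in the following sense. Associated to every simple tensor product $x_1 \otimes x_2$ is the rank one operator from $H_1^*$ to $H_2$ that maps a given $x^* \in H_1^*$ as

$$x^* \mapsto x^*(x_1) \, x_2.$$

This mapping defined on simple tensors extends to a linear identification between $H_1 \otimes H_2$ and the space of finite rank operators from $H_1^*$ to $H_2$. This extends to a linear isometry of the Hilbertian tensor product $H_1 \mathbin{\widehat{\otimes}} H_2$ with the Hilbert space $HS(H_1^*, H_2)$ of Hilbert–Schmidt operators from $H_1^*$ to $H_2$.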
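For finite-dimensional spaces the simple tensor of two coordinate vectors can be modelled by their Kronecker product, and the product rule for the inner product on simple tensors can then be verified directly. This is a minimal sketch under that assumption; `tensor` and `inner` are hypothetical helper names, the vectors are arbitrary, and the inner product is taken linear in its first argument.

```python
def inner(u, v):
    """Inner product on C^n, assumed linear in the first argument."""
    return sum(a * b.conjugate() for a, b in zip(u, v))

def tensor(u, v):
    """Coordinates of the simple tensor of u and v (Kronecker product)."""
    return [a * b for a in u for b in v]

x1, y1 = [1 + 1j, 2], [1j, 1]            # vectors in C^2
x2, y2 = [3j, 1, -1], [1, 1 - 1j, 2]     # vectors in C^3

lhs = inner(tensor(x1, x2), tensor(y1, y2))   # inner product in C^6
rhs = inner(x1, y1) * inner(x2, y2)           # product of the two factors
```

The two quantities `lhs` and `rhs` coincide. In finite dimensions no completion step is needed, since the algebraic tensor product is already complete.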

Orthonormal bases

The notion of an orthonormal basis from linear algebra generalizes over to the case of Hilbert spaces. In a Hilbert space $H$, an orthonormal basis is a family $\{e_k\}_{k \in B}$ of elements of $H$ satisfying the conditions:

# ''Orthogonality'': Every two different elements of the family are orthogonal: $\langle e_k, e_j \rangle = 0$ for all $k, j \in B$ with $k \neq j$.

# ''Normalization'': Every element of the family has norm 1: $\lVert e_k \rVert = 1$ for all $k \in B$.

# ''Completeness'': The linear span of the family $e_k$, $k \in B$, is dense in $H$.

A system of vectors satisfying the first two conditions is called an orthonormal system or an orthonormal set (or an orthonormal sequence if $B$ is countable). Such a system is always linearly independent.

Despite the name, an orthonormal basis is not, in general, a basis in the sense of linear algebra (Hamel basis). More precisely, an orthonormal basis is a Hamel basis if and only if the Hilbert space is a finite-dimensional vector space.

Completeness of an orthonormal system of vectors $\{e_k\}_{k \in B}$ of a Hilbert space $H$ can be equivalently restated as:

: for every $v \in H$, if $\langle v, e_k \rangle = 0$ for all $k \in B$, then $v = 0$.

This is related to the fact that the only vector orthogonal to a dense linear subspace is the zero vector, for if $S$ is any orthonormal set whose linear span is dense in $H$ and $v$ is orthogonal to $S$, then $v$ is orthogonal to the closure of the linear span of $S$, which is the whole space.

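Examples of orthonormal bases include:

* the set $\{(1, 0, 0), (0, 1, 0), (0, 0, 1)\}$ forms an orthonormal basis of $\mathbb{R}^3$ with the dot product;

* the sequence $\{f_n : n \in \mathbb{Z}\}$ with $f_n(x) = \exp(2\pi i n x)$ forms an orthonormal basis of the complex space $L^2([0, 1])$.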
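The orthogonality and normalization conditions for the complex exponentials on $[0, 1]$ can be checked numerically. The sketch below approximates the $L^2([0, 1])$ inner product by a Riemann sum; the function names `f` and `l2_inner`, the sample count, and the chosen indices are assumptions made for illustration, and the check says nothing about completeness.

```python
import cmath

def f(n):
    """Return the function x -> exp(2*pi*i*n*x)."""
    return lambda x: cmath.exp(2j * cmath.pi * n * x)

def l2_inner(g, h, samples=1024):
    """Riemann-sum approximation of the L^2([0, 1]) inner product of g and h."""
    pts = (k / samples for k in range(samples))
    return sum(g(x) * h(x).conjugate() for x in pts) / samples

norm_sq = l2_inner(f(2), f(2))     # normalization: should be close to 1
overlap = l2_inner(f(2), f(-3))    # orthogonality: should be close to 0
```

An equally spaced Riemann sum is a convenient choice here because, for these particular functions, it reproduces the exact integrals up to rounding error.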

In the infinite-dimensional case, an orthonormal basis will not be a basis in the sense of