
Definite Bilinear Form

In mathematics, a definite quadratic form is a quadratic form over some real vector space V that has the same sign (always positive or always negative) for every non-zero vector of V. According to that sign, the quadratic form is called positive-definite or negative-definite. A semidefinite (or semi-definite) quadratic form is defined in much the same way, except that "always positive" and "always negative" are replaced by "never negative" and "never positive", respectively. In other words, it may take on zero values for some non-zero vectors of V. An indefinite quadratic form takes on both positive and negative values and is called an isotropic quadratic form. More generally, these definitions apply to any vector space over an ordered field. Quadratic forms correspond one-to-one to their associated symmetric bilinear forms over the same space. This is true only over a field of characteristic other than 2, but here we consider only ordered fields, which necessarily ...
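The one-to-one correspondence with symmetric bilinear forms is given by the polarization identity B(x, y) = (q(x + y) - q(x) - q(y)) / 2; the division by 2 is exactly where the restriction to characteristic other than 2 enters. The following is a minimal Python sketch, assuming a quadratic form is represented by a symmetric matrix A with q(x) = x^T A x. The helper names `quadratic_form`, `polarize` and `bilinear` are illustrative, not taken from any library.

```python
from fractions import Fraction

def quadratic_form(A, x):
    """Evaluate q(x) = x^T A x for a square matrix A stored as a list of rows."""
    n = len(x)
    return sum(x[i] * A[i][j] * x[j] for i in range(n) for j in range(n))

def bilinear(A, x, y):
    """Evaluate the symmetric bilinear form B(x, y) = x^T A y."""
    n = len(x)
    return sum(x[i] * A[i][j] * y[j] for i in range(n) for j in range(n))

def polarize(q, x, y):
    """Recover B(x, y) from q alone: (q(x + y) - q(x) - q(y)) / 2.

    Dividing by 2 is only possible when the field has characteristic != 2.
    """
    s = [xi + yi for xi, yi in zip(x, y)]
    return Fraction(q(s) - q(x) - q(y), 2)

# Symmetric example: q(x) = 2*x1^2 + 2*x1*x2 + 3*x2^2
A = [[2, 1],
     [1, 3]]
q = lambda v: quadratic_form(A, v)

x, y = [1, -2], [3, 5]
print(polarize(q, x, y), bilinear(A, x, y))  # -25 -25
```

Because A is symmetric, the value recovered from q alone matches x^T A y, so the quadratic form and the bilinear form carry the same information.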

Mathematics

Mathematics is a field of study that discovers and organizes methods, theories and theorems that are developed and proved for the needs of empirical sciences and mathematics itself. There are many areas of mathematics, which include number theory (the study of numbers), algebra (the study of formulas and related structures), geometry (the study of shapes and spaces that contain them), analysis (the study of continuous changes), and set theory (presently used as a foundation for all mathematics). Mathematics involves the description and manipulation of abstract objects that consist of either abstractions from nature or, in modern mathematics, purely abstract entities that are stipulated to have certain properties, called axioms. Mathematics uses pure reason to prove properties of objects, a ''proof'' consisting of a succession of applications of in ...

Matrix Transpose

In linear algebra, the transpose of a matrix is an operator which flips a matrix over its diagonal; that is, it switches the row and column indices of the matrix A by producing another matrix, often denoted by A^T (among other notations). The transpose of a matrix was introduced in 1858 by the British mathematician Arthur Cayley. The transpose of a matrix A may be constructed by any one of the following methods:
1. Reflect A over its main diagonal (which runs from top-left to bottom-right) to obtain A^T.
2. Write the rows of A as the columns of A^T.
3. Write the columns of A as the rows of A^T.
Formally, the i-th row, j-th column element of A^T is the j-th row, i-th column element of A: [\mathbf{A}^\mathsf{T}]_{ij} = [\mathbf{A}]_{ji}. If A is an m × n matrix, then A^T is an n × m matrix. In the case of square matrices, A^T may also denote the T-th power of the matrix A. To avoid possible confusion, many authors use left superscripts, t ...
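The construction "write the rows of A as the columns of A^T" maps directly onto Python's built-in `zip`. A minimal sketch, assuming matrices are stored as lists of rows; the helper name `transpose` is illustrative:

```python
def transpose(A):
    """Return the transpose of a matrix stored as a list of rows.

    zip(*A) groups the j-th entries of every row together, so the
    rows of A become the columns of the result.
    """
    return [list(col) for col in zip(*A)]

A = [[1, 2, 3],
     [4, 5, 6]]        # a 2 x 3 matrix
At = transpose(A)      # a 3 x 2 matrix
print(At)              # [[1, 4], [2, 5], [3, 6]]

# Entry-wise check of the formal definition [A^T]_{ij} = [A]_{ji}
assert all(At[i][j] == A[j][i] for i in range(3) for j in range(2))
```

The assertion checks the entry-wise rule from the formal definition, and the shape of the result confirms that an m × n matrix becomes n × m.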

Positive-definite Matrix

In mathematics, a symmetric matrix M with real entries is positive-definite if the real number \mathbf{x}^\mathsf{T} M \mathbf{x} is positive for every nonzero real column vector \mathbf{x}, where \mathbf{x}^\mathsf{T} is the row vector transpose of \mathbf{x}. More generally, a Hermitian matrix (that is, a complex matrix equal to its conjugate transpose) is positive-definite if the real number \mathbf{z}^* M \mathbf{z} is positive for every nonzero complex column vector \mathbf{z}, where \mathbf{z}^* denotes the conjugate transpose of \mathbf{z}. Positive semi-definite matrices are defined similarly, except that the scalars \mathbf{x}^\mathsf{T} M \mathbf{x} and \mathbf{z}^* M \mathbf{z} are required to be positive ''or zero'' (that is, nonnegative). Negative-definite and negative semi-definite matrices are defined analogously. A matrix that is not positive semi-definite and not negative semi-definite is sometimes called ''indefinite''. Some authors use more general definitions of definiteness, permitting the matrices to be ...
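Checking x^T M x > 0 for every nonzero x directly is impossible, so in practice one uses an equivalent criterion. A standard one is that a real symmetric matrix is positive-definite exactly when its Cholesky factorization M = L L^T exists with a positive diagonal in L. The sketch below uses that criterion in pure Python; the function name `is_positive_definite` and the rounding tolerance `tol` are illustrative assumptions, not a library API.

```python
import math

def is_positive_definite(M, tol=1e-12):
    """Test a real symmetric matrix for positive-definiteness.

    Attempts a Cholesky factorization M = L L^T. Every pivot must be
    strictly positive; tol treats near-zero pivots as failures to
    guard against rounding (an illustrative choice).
    """
    n = len(M)
    if any(M[i][j] != M[j][i] for i in range(n) for j in range(n)):
        raise ValueError("matrix must be symmetric")
    L = [[0.0] * n for _ in range(n)]
    for j in range(n):
        # Pivot: what remains of the diagonal entry after earlier columns.
        d = M[j][j] - sum(L[j][k] ** 2 for k in range(j))
        if d <= tol:
            return False
        L[j][j] = math.sqrt(d)
        for i in range(j + 1, n):
            L[i][j] = (M[i][j] - sum(L[i][k] * L[j][k] for k in range(j))) / L[j][j]
    return True

T = [[2, -1, 0], [-1, 2, -1], [0, -1, 2]]
print(is_positive_definite(T))  # True
B = [[1, 2], [2, 1]]
print(is_positive_definite(B))  # False: x = (1, -1) gives x^T B x = -2
```

A positive semi-definite but singular matrix such as [[1, 0], [0, 0]] also returns False here, because one pivot is zero.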

Positive-definite Function

In mathematics, a positive-definite function is, depending on the context, either of two types of function. Definition 1: let \mathbb{R} be the set of real numbers and \mathbb{C} be the set of complex numbers. A function f: \mathbb{R} \to \mathbb{C} is called ''positive semi-definite'' if for all real numbers x_1, …, x_n the n × n matrix A = \left(a_{ij}\right)_{i,j=1}^n, with a_{ij} = f(x_i - x_j), is a positive ''semi-''definite matrix. By definition, a positive semi-definite matrix, such as A, is Hermitian; therefore f(-x) is the complex conjugate of f(x). In particular, it is necessary (but not sufficient) that f(0) \geq 0 and |f(x)| \leq f(0) for all x (these inequalities follow from the condition for n = 1, 2). A function is ''negative semi-definite'' if the inequality is reversed. A function is ''definite'' if the weak inequality is replaced with a strong one (> 0, or < 0 in the negative case). Examples: if (X, \langle \cdot, \cdot \rangle) is a real inner product ...
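The definition can be probed numerically by building the matrix a_ij = f(x_i - x_j) for sample points and testing it. This only checks finitely many point sets, so it can refute positive-definiteness but never prove it. The sketch below assumes real-valued f, uses the Gaussian exp(-x^2) (a well-known positive-definite function) as the positive example, and uses a hypothetical non-example 1 - x^2. All function names are illustrative.

```python
import math

def kernel_matrix(f, xs):
    """Build the n x n matrix A with entries a_ij = f(x_i - x_j)."""
    return [[f(xi - xj) for xj in xs] for xi in xs]

def is_positive_definite(M, tol=1e-12):
    """Cholesky test for a real symmetric matrix: every pivot must exceed tol."""
    n = len(M)
    L = [[0.0] * n for _ in range(n)]
    for j in range(n):
        d = M[j][j] - sum(L[j][k] ** 2 for k in range(j))
        if d <= tol:
            return False
        L[j][j] = math.sqrt(d)
        for i in range(j + 1, n):
            L[i][j] = (M[i][j] - sum(L[i][k] * L[j][k] for k in range(j))) / L[j][j]
    return True

def necessary_conditions_hold(f, samples):
    """Check f(0) >= 0 and |f(x)| <= f(0), but only at the sampled x values."""
    return f(0.0) >= 0 and all(abs(f(x)) <= f(0.0) for x in samples)

gaussian = lambda x: math.exp(-x * x)  # positive-definite function
bump = lambda x: 1.0 - x * x           # hypothetical non-example

xs = [0.0, 1.0, 2.5, 4.0]
print(is_positive_definite(kernel_matrix(gaussian, xs)))      # True
print(necessary_conditions_hold(bump, [2.0]))                 # False: |f(2)| = 3 > f(0) = 1
print(is_positive_definite(kernel_matrix(bump, [0.0, 2.0])))  # False
```

The failed necessary condition for 1 - x^2 corresponds to the n = 2 case: the matrix [[1, -3], [-3, 1]] has negative determinant.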

Isotropic Quadratic Form

In mathematics, a quadratic form over a field ''F'' is said to be isotropic if there is a non-zero vector on which the form evaluates to zero. Otherwise it is a definite quadratic form. More explicitly, if ''q'' is a quadratic form on a vector space ''V'' over ''F'', then a non-zero vector ''v'' in ''V'' is said to be isotropic if q(v) = 0. A quadratic form is isotropic if and only if there exists a non-zero isotropic vector (or null vector) for that quadratic form. Suppose that (V, q) is a quadratic space and ''W'' is a subspace of ''V''. Then ''W'' is called an isotropic subspace of ''V'' if ''some'' vector in it is isotropic, a totally isotropic subspace if ''all'' vectors in it are isotropic, and a definite subspace if it does not contain ''any'' (non-zero) isotropic vectors. The isotropy index (also called the Witt index) of a quadratic space is the maximum of the dimensions of the totally isotropic subspaces. Over the real numbers, and more generally when ''F'' is a real closed field (so that the signature is defined), ...
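Over the reals, every quadratic form can be diagonalized, and a diagonal form q(v) = c_1 v_1^2 + … + c_n v_n^2 is isotropic exactly when some coefficient is zero or two coefficients have opposite signs. For a non-degenerate real form with p positive and m negative coefficients, the isotropy index is min(p, m). The sketch below assumes the form is already given in diagonal form; the function names are illustrative.

```python
import math

def q_diag(coeffs, v):
    """Diagonal quadratic form q(v) = sum_i c_i * v_i^2."""
    return sum(c * x * x for c, x in zip(coeffs, v))

def isotropic_vector(coeffs):
    """Return a non-zero v with q(v) = 0, or None if the form is definite."""
    n = len(coeffs)
    for i, c in enumerate(coeffs):
        if c == 0:
            # A zero coefficient makes the basis vector e_i isotropic.
            v = [0.0] * n
            v[i] = 1.0
            return v
    pos = [i for i, c in enumerate(coeffs) if c > 0]
    neg = [i for i, c in enumerate(coeffs) if c < 0]
    if pos and neg:
        i, j = pos[0], neg[0]
        v = [0.0] * n
        # c_i * (-c_j) + c_j * c_i = 0
        v[i] = math.sqrt(-coeffs[j])
        v[j] = math.sqrt(coeffs[i])
        return v
    return None  # all coefficients share one strict sign: definite

def witt_index(coeffs):
    """Isotropy (Witt) index of a non-degenerate diagonal real form."""
    if any(c == 0 for c in coeffs):
        raise ValueError("form must be non-degenerate")
    n_pos = sum(1 for c in coeffs if c > 0)
    return min(n_pos, len(coeffs) - n_pos)

print(isotropic_vector([1, 1, -1]))  # [1.0, 0.0, 1.0], a null vector of x^2 + y^2 - z^2
print(isotropic_vector([1, 2, 3]))   # None
print(witt_index([1, 1, -1, -1]))    # 2
```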

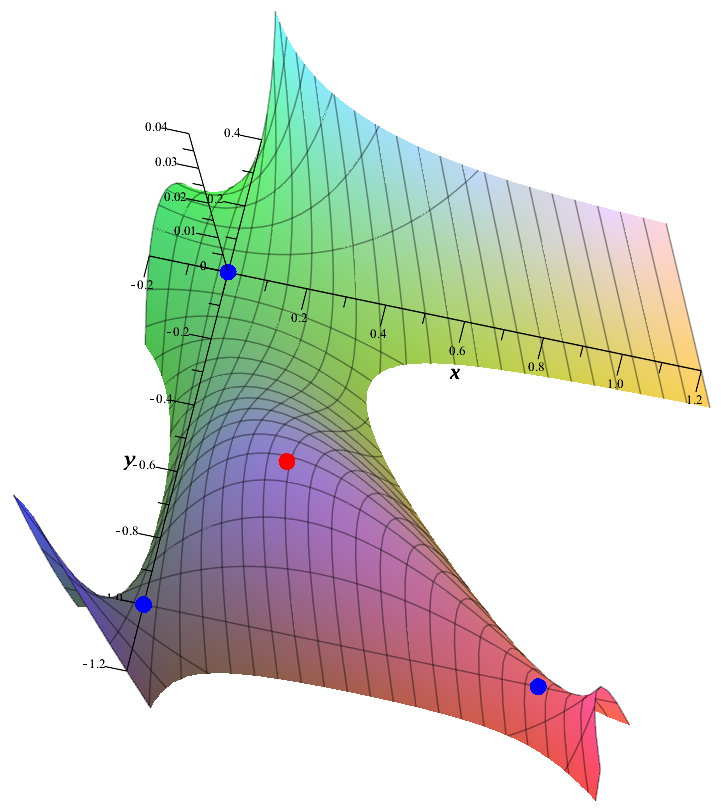

Second Partial Derivative Test

In mathematics, the second partial derivative test is a method in multivariable calculus used to determine whether a critical point of a function is a local minimum, a local maximum or a saddle point. For functions of two variables, suppose that f(x, y) is a differentiable real function of two variables whose second partial derivatives exist and are continuous. The Hessian matrix H of f is the 2 × 2 matrix of second partial derivatives of f:
H(x,y) = \begin{bmatrix} f_{xx}(x,y) & f_{xy}(x,y) \\ f_{yx}(x,y) & f_{yy}(x,y) \end{bmatrix}.
Define D(x, y) to be the determinant
D(x,y) = \det(H(x,y)) = f_{xx}(x,y) f_{yy}(x,y) - \left( f_{xy}(x,y) \right)^2
of H, where continuity of the second partial derivatives guarantees f_{xy} = f_{yx}. Finally, suppose that (a, b) is a critical point of f, that is, that f_x(a, b) = f_y(a, b) = 0. Then the second partial derivative test asserts the following:
1. If D(a, b) > 0 and f_{xx}(a, b) > 0, then (a, b) is a local minimum of f.
2. If D(a, b) > 0 and f_{xx}(a, b) < 0, then (a, b) is a local maximum of f.
3. If D(a, b) < 0, then (a, b) is a saddle point of f.
4. If D(a, b) = 0, then the point (a, b) could be any of a minimum, maximum, or saddle point (that is, the test is inconclusive).
...
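The four cases of the test translate into a short decision procedure. This sketch assumes the second partial derivatives have already been evaluated at the critical point (a, b); the function name `second_derivative_test` is illustrative. Each example below uses the critical point (0, 0).

```python
def second_derivative_test(fxx, fyy, fxy):
    """Classify a critical point (a, b) of f from its second partials at (a, b)."""
    D = fxx * fyy - fxy ** 2  # determinant of the Hessian
    if D > 0 and fxx > 0:
        return "local minimum"
    if D > 0 and fxx < 0:
        return "local maximum"
    if D < 0:
        return "saddle point"
    return "inconclusive"     # D == 0

print(second_derivative_test(2, 2, 0))    # f = x^2 + y^2:   local minimum
print(second_derivative_test(-2, -2, 0))  # f = -x^2 - y^2:  local maximum
print(second_derivative_test(2, -2, 0))   # f = x^2 - y^2:   saddle point
print(second_derivative_test(0, 0, 1))    # f = x*y:         saddle point
print(second_derivative_test(0, 0, 0))    # f = x^4 + y^4:   inconclusive
```

When D > 0, f_xx cannot be zero, so the first two branches cover every case with positive D. The last example is a true minimum that the test cannot detect, which illustrates case 4.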

Nonsingular Matrix

In linear algebra, an invertible matrix (''non-singular'', ''non-degenerate'' or ''regular'') is a square matrix that has an inverse. In other words, if some other matrix is multiplied by the invertible matrix, the result can be multiplied by an inverse to undo the operation. An invertible matrix multiplied by its inverse yields the identity matrix. Invertible matrices are the same size as their inverse. Formally, an n-by-n square matrix \mathbf{A} is called invertible if there exists an n-by-n square matrix \mathbf{B} such that \mathbf{AB} = \mathbf{BA} = \mathbf{I}_n, where \mathbf{I}_n denotes the n-by-n identity matrix and the multiplication used is ordinary matrix multiplication. If this is the case, then the matrix \mathbf{B} is uniquely determined by \mathbf{A}, and is called the (multiplicative) ''inverse'' of \mathbf{A}, denoted by \mathbf{A}^{-1}. Matrix inversion is the process of finding the matrix that, when multiplied by the original matrix, gives the identity matrix. Over a field, a square matrix that is ''not'' invertible is called singular or degenerate ...
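One common way to carry out matrix inversion is Gauss-Jordan elimination on the augmented matrix [A | I_n]: row operations turn the left half into I_n, and the right half then holds A^{-1}. If no nonzero pivot can be found in some column, A is singular. The sketch below works over the rationals with `fractions.Fraction` so the results are exact; the helper names `inverse` and `matmul` are illustrative.

```python
from fractions import Fraction

def inverse(A):
    """Invert a square matrix by Gauss-Jordan elimination over the rationals.

    Raises ValueError if A is singular (no inverse exists).
    """
    n = len(A)
    # Augment A with the identity: [A | I_n]
    M = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(A)]
    for col in range(n):
        # Find a row at or below `col` with a nonzero pivot.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [x / p for x in M[col]]  # scale the pivot row so the pivot is 1
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [a - factor * b for a, b in zip(M[r], M[col])]
    # The right half now holds A^{-1}.
    return [row[n:] for row in M]

def matmul(A, B):
    """Ordinary matrix product of two lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

A = [[2, 1],
     [1, 1]]
A_inv = inverse(A)
print(A_inv == [[1, -1], [-1, 2]])  # True
print(matmul(A, A_inv) == [[1, 0], [0, 1]] == matmul(A_inv, A))  # True
```

Calling `inverse([[1, 2], [2, 4]])` raises `ValueError`, since the second row is twice the first and the matrix is singular.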

Matrix Derivative

In mathematics, matrix calculus is a specialized notation for doing multivariable calculus, especially over spaces of matrices. It collects the various partial derivatives of a single function with respect to many variables, and/or of a multivariate function with respect to a single variable, into vectors and matrices that can be treated as single entities. This greatly simplifies operations such as finding the maximum or minimum of a multivariate function and solving systems of differential equations. The notation used here is commonly used in statistics and engineering, while the tensor index notation is preferred in physics. Two competing notational conventions split the field of matrix calculus into two separate groups. The two groups can be distinguished by whether they write the derivative of a scalar with respect to a vector as a column vector or a row vector. Both of these conventions are possible even when the common assumption is made that vectors should be treated ...
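As a worked illustration of collecting partial derivatives into a vector, take the scalar function f(x) = x^T A x. Its partial derivatives are the entries of (A + A^T) x, and for symmetric A this reduces to 2 A x. The sketch below compares that closed form against central differences and then stores the same numbers in both layouts. The names and the step size `h` are illustrative. Here "denominator layout" writes the gradient as a column and "numerator layout" writes the derivative as a row.

```python
def f(A, x):
    """Scalar function f(x) = x^T A x."""
    n = len(x)
    return sum(x[i] * A[i][j] * x[j] for i in range(n) for j in range(n))

def grad_formula(A, x):
    """Closed form: df/dx_k is the k-th entry of (A + A^T) x."""
    n = len(x)
    return [sum((A[k][j] + A[j][k]) * x[j] for j in range(n)) for k in range(n)]

def grad_numeric(A, x, h=1e-6):
    """Central-difference approximation of each partial derivative df/dx_k."""
    g = []
    for k in range(len(x)):
        xp = list(x)
        xp[k] += h
        xm = list(x)
        xm[k] -= h
        g.append((f(A, xp) - f(A, xm)) / (2 * h))
    return g

A = [[1.0, 2.0],
     [0.0, 3.0]]   # deliberately non-symmetric
x = [1.0, -1.0]
g = grad_formula(A, x)
print(g)           # [0.0, -4.0]

# The same partial derivatives, arranged in the two layouts:
as_column = [[gk] for gk in g]  # denominator layout: n x 1 column vector
as_row = [g]                    # numerator layout: 1 x n row vector
```

The two conventions differ only in shape, not in the values of the partial derivatives, which is why formulas written in one layout are the transposes of formulas in the other.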

First-order Condition

In calculus, a derivative test uses the derivatives of a function to locate the critical points of a function and determine whether each point is a local maximum, a local minimum, or a saddle point. Derivative tests can also give information about the concavity of a function. The usefulness of derivatives to find extrema is proved mathematically by Fermat's theorem of stationary points. First-derivative test: the first-derivative test examines a function's monotonic properties (where the function is increasing or decreasing), focusing on a particular point in its domain. If the function "switches" from increasing to decreasing at the point, then the function will achieve a locally highest value (a local maximum) at that point. Similarly, if the function "switches" from decreasing to increasing at the point, then it will achieve a locally least value (a local minimum) at that point. If the function fails to "switch" and remains increasing or remains decreasing, then no locally highest or least value is achieved at that point. One can examine a function ...
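The "switch" in the first-derivative test is a change in the sign of f' across the point. The sketch below probes f' once on each side of a critical point c. It assumes f' keeps a constant sign on (c - h, c) and on (c, c + h). The probe distance `h` and the function name are illustrative choices, and a single probe per side is a simplification of the test, not part of it.

```python
def first_derivative_test(fprime, c, h=1e-3):
    """Classify a critical point c by the sign of f' just left and right of c."""
    left, right = fprime(c - h), fprime(c + h)
    if left > 0 and right < 0:
        return "local maximum"      # increasing, then decreasing
    if left < 0 and right > 0:
        return "local minimum"      # decreasing, then increasing
    if left * right > 0:
        return "no local extremum"  # keeps increasing or keeps decreasing
    return "inconclusive"           # f' vanishes at a probe point

print(first_derivative_test(lambda x: 2 * x, 0.0))       # f = x^2:  local minimum
print(first_derivative_test(lambda x: -2 * x, 0.0))      # f = -x^2: local maximum
print(first_derivative_test(lambda x: 3 * x * x, 0.0))   # f = x^3:  no local extremum
```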

Optimization

Mathematical optimization (alternatively spelled ''optimisation'') or mathematical programming is the selection of a best element, with regard to some criteria, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries. In the more general approach, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values from within an allowed set and computing the value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics. Optimization problems ...
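"Systematically choosing input values" can be illustrated with fixed-step gradient descent, one of many continuous-optimization methods. It is chosen here only as an example. The objective is f(x) = (1/2) x^T A x - b^T x with a positive-definite A. Its gradient is A x - b, so the unique minimizer solves A x = b. The step size and iteration count are illustrative assumptions. For this kind of quadratic, a fixed step converges when it is below 2 divided by the largest eigenvalue of A (about 3.62 here).

```python
def gradient_descent(grad, x0, step=0.2, iters=500):
    """Minimize a differentiable function by repeatedly stepping against its gradient."""
    x = list(x0)
    for _ in range(iters):
        g = grad(x)
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x

# Objective f(x) = (1/2) x^T A x - b^T x, with A symmetric positive-definite.
A = [[3.0, 1.0],
     [1.0, 2.0]]
b = [1.0, 1.0]

def grad_f(x):
    """Gradient of the objective: A x - b."""
    return [sum(A[i][j] * x[j] for j in range(2)) - b[i] for i in range(2)]

x_star = gradient_descent(grad_f, [0.0, 0.0])
print(x_star)  # approximately [0.2, 0.4], the solution of A x = b
```

Positive-definiteness of A is what makes this objective have a single minimum, which ties the optimization view back to the definiteness conditions discussed above.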

Principal Minor

In linear algebra, a minor of a matrix A is the determinant of some smaller square matrix generated from A by removing one or more of its rows and columns. Minors obtained by removing just one row and one column from square matrices (first minors) are required for calculating matrix cofactors, which are useful for computing both the determinant and inverse of square matrices. The requirement that the square matrix be smaller than the original matrix is often omitted in the definition. First minors: if A is a square matrix, then the ''minor'' of the entry in the i-th row and j-th column (also called the (i, j) ''minor'', or a ''first minor'') is the determinant of the submatrix formed by deleting the i-th row and j-th column. This number is often denoted M_{i,j}. The (i, j) ''cofactor'' is obtained by multiplying the minor by (-1)^{i+j}. To illustrate these definitions, consider the following matrix,
\begin{bmatrix} 1 & 4 & 7 \\ 3 & 0 & 5 \\ -1 & 9 & 11 \end{bmatrix} ...
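The definitions of minors and cofactors can be computed directly, here applied to the 3 × 3 matrix above. A principal minor is one obtained by deleting the same set of rows and columns. The leading principal minors, taken from the top-left k × k blocks, appear in Sylvester's criterion: a real symmetric matrix is positive-definite if and only if all of its leading principal minors are positive. The sketch uses recursive Laplace expansion, which is fine for small matrices but exponential in general. The function names are illustrative.

```python
def submatrix(M, i, j):
    """Delete row i and column j (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(M) if r != i]

def det(M):
    """Determinant by Laplace (cofactor) expansion along the first row."""
    n = len(M)
    if n == 0:
        return 1
    if n == 1:
        return M[0][0]
    return sum((-1) ** j * M[0][j] * det(submatrix(M, 0, j)) for j in range(n))

def minor(M, i, j):
    """First minor M_{i,j}, using 1-based indices as in the text."""
    return det(submatrix(M, i - 1, j - 1))

def cofactor(M, i, j):
    """Cofactor: the minor multiplied by (-1)^(i+j)."""
    return (-1) ** (i + j) * minor(M, i, j)

def leading_principal_minors(M):
    """Determinants of the top-left k x k submatrices, k = 1..n."""
    return [det([row[:k] for row in M[:k]]) for k in range(1, len(M) + 1)]

def sylvester_positive_definite(M):
    """Sylvester's criterion for a real symmetric matrix M."""
    return all(m > 0 for m in leading_principal_minors(M))

A = [[1, 4, 7], [3, 0, 5], [-1, 9, 11]]
print(minor(A, 2, 3), cofactor(A, 2, 3))  # 13 -13

T = [[2, -1, 0], [-1, 2, -1], [0, -1, 2]]
print(leading_principal_minors(T))        # [2, 3, 4]
print(sylvester_positive_definite(T))     # True
```

Deleting row 2 and column 3 of A leaves [[1, 4], [-1, 9]], whose determinant is 1·9 - 4·(-1) = 13. The sign factor (-1)^(2+3) = -1 then gives the cofactor -13.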