Learning classifier system




Learning classifier systems, or LCS, are a paradigm of rule-based machine learning methods that combine a discovery component (typically a genetic algorithm in evolutionary computation) with a learning component (performing either supervised learning, reinforcement learning, or unsupervised learning). Learning classifier systems seek to identify a set of context-dependent rules that collectively store and apply knowledge in a piecewise manner in order to make predictions (e.g. behavior modeling, classification, data mining, regression, function approximation, or game strategy). This approach allows complex solution spaces to be broken up into smaller, simpler parts, which is useful for reinforcement learning within artificial intelligence research.

The founding concepts behind learning classifier systems came from attempts to model complex adaptive systems, using rule-based agents to form an artificial cognitive system (i.e. artificial intelligence).

Methodology

The architecture and components of a given learning classifier system can be quite variable. It is useful to think of an LCS as a machine consisting of several interacting components. Components may be added or removed, or existing components modified/exchanged to suit the demands of a given problem domain (like algorithmic building blocks) or to make the algorithm flexible enough to function in many different problem domains. As a result, the LCS paradigm can be flexibly applied to many problem domains that call for machine learning. The major divisions among LCS implementations are as follows: (1) Michigan-style architecture vs. Pittsburgh-style architecture, (2) reinforcement learning vs. supervised learning, (3) incremental learning vs. batch learning, (4) online learning vs. offline learning, (5) strength-based fitness vs. accuracy-based fitness, and (6) complete action mapping vs. best action mapping. These divisions are not necessarily mutually exclusive. For example, XCS, the best known and best studied LCS algorithm, is Michigan-style, was designed for reinforcement learning but can also perform supervised learning, applies incremental learning that can be either online or offline, applies accuracy-based fitness, and seeks to generate a complete action mapping.

Elements of a generic LCS algorithm

Keeping in mind that LCS is a paradigm for genetic-based machine learning rather than a specific method, the following outlines key elements of a generic, modern (i.e. post-XCS) LCS algorithm. For simplicity let us focus on Michigan-style architecture with supervised learning. See the illustrations on the right laying out the sequential steps involved in this type of generic LCS.


Environment

The environment is the source of data upon which an LCS learns. It can be an offline, finite training dataset (characteristic of a data mining, classification, or regression problem), or an online sequential stream of live training instances. Each training instance is assumed to include some number of ''features'' (also referred to as ''attributes'', or ''independent variables''), and a single ''endpoint'' of interest (also referred to as the class, ''action'', ''phenotype'', ''prediction'', or ''dependent variable''). Part of LCS learning can involve feature selection, therefore not all of the features in the training data need to be informative. The set of feature values of an instance is commonly referred to as the ''state''. For simplicity let's assume an example problem domain with Boolean/binary features and a Boolean/binary class. For Michigan-style systems, one instance from the environment is trained on each learning cycle (i.e. incremental learning). Pittsburgh-style systems perform batch learning, where rule sets are evaluated in each iteration over much or all of the training data.
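As a minimal sketch of the Michigan-style setting described above, the following shows one way an offline environment could hand out a single instance per learning cycle. The names `Instance`, `offline_environment`, and the toy `DATASET` are hypothetical and chosen only for illustration; wrapping around the dataset is an assumption about how a finite dataset is reused.

```python
from itertools import cycle, islice
from typing import Iterator, NamedTuple, Sequence


class Instance(NamedTuple):
    state: str      # feature values, e.g. "001001" (six binary features)
    endpoint: int   # the class/action to be predicted


def offline_environment(dataset: Sequence[Instance], iterations: int) -> Iterator[Instance]:
    """Yield one instance per learning cycle, wrapping around the finite dataset."""
    return islice(cycle(dataset), iterations)


# Hypothetical toy dataset with Boolean features and a Boolean class.
DATASET = [Instance("001001", 0), Instance("110100", 1), Instance("011000", 1)]

for instance in offline_environment(DATASET, 4):
    print(instance.state, "~", instance.endpoint)
```

An online environment could expose the same iterator interface while drawing each instance from a live stream instead of a stored list.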

Rule/classifier/population

A rule is a context-dependent relationship between state values and some prediction. Rules typically take the form of an IF:THEN expression (e.g. IF condition THEN action). A critical concept in LCS and rule-based machine learning alike is that an individual rule is not in itself a model, since the rule is only applicable when its condition is satisfied. Think of a rule as a "local model" of the solution space.

Rules can be represented in many different ways to handle different data types (e.g. binary, discrete-valued, ordinal, continuous-valued). Given binary data, LCS traditionally applies a ternary rule representation (i.e. rules can include either a 0, 1, or '#' for each feature in the data). The 'don't care' symbol (i.e. '#') serves as a wild card within a rule's condition, allowing rules, and the system as a whole, to generalize relationships between features and the target endpoint to be predicted. Consider the following rule: (#1###0 ~ 1) (i.e. condition ~ action). This rule can be interpreted as: IF the second feature = 1 AND the sixth feature = 0 THEN the class prediction = 1. We would say that the second and sixth features were specified in this rule, while the others were generalized. This rule, and the corresponding prediction, are only applicable to an instance when the condition of the rule is satisfied by the instance. This is more commonly referred to as matching.

In Michigan-style LCS, each rule has its own fitness, as well as a number of other rule parameters associated with it that can describe the number of copies of that rule that exist (i.e. the ''numerosity''), the age of the rule, its accuracy, or the accuracy of its reward predictions, and other descriptive or experiential statistics. A rule along with its parameters is often referred to as a ''classifier''. In Michigan-style systems, classifiers are contained within a ''population'' [P] that has a user-defined maximum number of classifiers. Unlike most stochastic search algorithms (e.g. evolutionary algorithms), LCS populations start out empty (i.e. there is no need to randomly initialize a rule population). Classifiers will instead be initially introduced to the population with a covering mechanism.

In any LCS, the trained model is a set of rules/classifiers, rather than any single rule/classifier. In Michigan-style LCS, the entire trained (and optionally, compacted) classifier population forms the prediction model.
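The ternary representation and the notion of a classifier can be illustrated with a short sketch. The `Classifier` class, its field names, and `MAX_POPULATION` are assumptions made for this example and do not correspond to any particular LCS implementation; conditions and states are assumed to have equal length.

```python
from dataclasses import dataclass


@dataclass
class Classifier:
    condition: str          # ternary string over {'0', '1', '#'}
    action: int             # class predicted by the rule
    numerosity: int = 1     # number of copies of this rule in the population
    fitness: float = 0.0

    def matches(self, state: str) -> bool:
        """A rule matches if every specified feature equals the state's value."""
        return all(c == "#" or c == s for c, s in zip(self.condition, state))

    def specified_features(self) -> list[int]:
        """1-based positions of features that are not wild cards."""
        return [i + 1 for i, c in enumerate(self.condition) if c != "#"]


# The rule (#1###0 ~ 1) from the text.
rule = Classifier(condition="#1###0", action=1)
print(rule.specified_features())   # [2, 6]
print(rule.matches("010110"))      # True: feature 2 is 1 and feature 6 is 0
print(rule.matches("000110"))      # False: feature 2 is 0

# The population starts out empty and is bounded by a user-defined maximum.
MAX_POPULATION = 1000              # hypothetical value
population: list[Classifier] = []
```

The two example states are made up for this sketch; only the rule itself comes from the text.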

Matching

One of the most critical and often time-consuming elements of an LCS is the matching process. The first step in an LCS learning cycle takes a single training instance from the environment and passes it to the population [P], where matching takes place. In step two, every rule in [P] is compared to the training instance to see which rules match (i.e. are contextually relevant to the current instance). In step three, any matching rules are moved to a ''match set'' [M]. A rule matches a training instance if all feature values specified in the rule condition are equivalent to the corresponding feature values in the training instance. For example, assuming the training instance is (001001 ~ 0), these rules would match: (###0## ~ 0), (00###1 ~ 0), (#01001 ~ 1), but these rules would not: (1##### ~ 0), (000##1 ~ 0), (#0#1#0 ~ 1). Notice that in matching, the endpoint/action specified by the rule is not taken into consideration. As a result, the match set may contain classifiers that propose conflicting actions. In the fourth step, since we are performing supervised learning, [M] is divided into a correct set [C] and an incorrect set [I]. A matching rule goes into the correct set if it proposes the correct action (based on the known action of the training instance), otherwise it goes into [I]. In reinforcement learning LCS, an action set [A] would be formed here instead, since the correct action is not known.

Covering

At this point in the learning cycle, if no classifiers made it into either the match set [M] or the correct set [C] (as would be the case when the population starts off empty), the covering mechanism is applied (fifth step). Covering is a form of ''online smart population initialization''. Covering randomly generates a rule that matches the current training instance (and in the case of supervised learning, that rule is also generated with the correct action). Assuming the training instance is (001001 ~ 0), covering might generate any of the following rules: (#0#0## ~ 0), (001001 ~ 0), (#010## ~ 0). Covering not only ensures that in each learning cycle there is at least one correct, matching rule in [C], but also that any rule initialized into the population will match at least one training instance. This prevents LCS from exploring the search space of rules that do not match any training instances.

Parameter updates/credit assignment/learning

In the sixth step, the rule parameters of any rule in the match set [M] are updated to reflect the new experience gained from the current training instance. Depending on the LCS algorithm, a number of updates can take place at this step. For supervised learning, we can simply update the accuracy/error of a rule. Rule accuracy/error is different from model accuracy/error, since it is not calculated over the entire training data, but only over the instances that the rule matched. Rule accuracy is calculated by dividing the number of times the rule was in a correct set [C] by the number of times it was in a match set [M]. Rule accuracy can be thought of as a 'local accuracy'. Rule fitness is also updated here, and is commonly calculated as a function of rule accuracy. The concept of fitness is taken directly from classic genetic algorithms. Be aware that there are many variations on how LCS updates parameters in order to perform credit assignment and learning.
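The first six steps of the supervised learning cycle (matching, forming the correct set, covering, and the accuracy update) can be sketched as follows. This is an illustrative sketch under stated assumptions, not a reference implementation: the function names, the wild-card probability `p_wildcard`, the choice to trigger covering whenever the correct set is empty, and the fitness function `accuracy ** nu` are all assumptions made for the example.

```python
import random
from dataclasses import dataclass


@dataclass
class Classifier:
    condition: str            # ternary string over {'0', '1', '#'}
    action: int               # class proposed by the rule
    match_count: int = 0      # times the rule was in a match set
    correct_count: int = 0    # times the rule was in a correct set
    accuracy: float = 0.0
    fitness: float = 0.0


def matches(condition: str, state: str) -> bool:
    return all(c == "#" or c == s for c, s in zip(condition, state))


def cover(state: str, action: int, rng: random.Random, p_wildcard: float = 0.5) -> Classifier:
    """Generate a rule that matches `state` and proposes the correct action."""
    condition = "".join("#" if rng.random() < p_wildcard else s for s in state)
    return Classifier(condition, action)


def learning_step(population, state, action, rng, nu=5):
    # Steps 2-3: compare every rule with the instance and build the match set.
    match_set = [cl for cl in population if matches(cl.condition, state)]
    # Step 4: the correct set holds matching rules that propose the known action.
    correct_set = [cl for cl in match_set if cl.action == action]
    # Step 5: covering (assumed here to fire whenever the correct set is empty).
    if not correct_set:
        new_rule = cover(state, action, rng)
        population.append(new_rule)
        match_set.append(new_rule)
        correct_set.append(new_rule)
    # Step 6: update the local accuracy and a fitness derived from it.
    for cl in match_set:
        cl.match_count += 1
        if cl.action == action:
            cl.correct_count += 1
        cl.accuracy = cl.correct_count / cl.match_count
        cl.fitness = cl.accuracy ** nu   # assumed fitness function
    return match_set, correct_set


rules = [("###0##", 0), ("00###1", 0), ("#01001", 1),
         ("1#####", 0), ("000##1", 0), ("#0#1#0", 1)]
population = [Classifier(c, a) for c, a in rules]
match_set, correct_set = learning_step(population, "001001", 0, random.Random(0))
print([cl.condition for cl in match_set])     # the three matching rules from the text
print([cl.condition for cl in correct_set])   # those that also propose action 0
```

The six rules and the training instance (001001 ~ 0) are the ones used in the matching example above; everything else is illustrative.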

Subsumption

In the seventh step, a ''subsumption'' mechanism is typically applied. Subsumption is an explicit generalization mechanism that merges classifiers that cover redundant parts of the problem space. The subsuming classifier effectively absorbs the subsumed classifier (and has its numerosity increased). This can only happen when the subsuming classifier is more general, just as accurate, and covers all of the problem space of the classifier it subsumes.

Rule discovery/genetic algorithm

In the eighth step, LCS adopts a highly elitist genetic algorithm (GA) which will select two parent classifiers based on fitness (survival of the fittest). Parents are selected from the correct set [C], typically using tournament selection. Some systems have applied roulette wheel selection or deterministic selection, and have selected parent rules differently, either from the whole population [P] (panmictic selection) or from the match set [M]. Crossover and mutation operators are now applied to generate two new offspring rules. At this point, both the parent and offspring rules are returned to the population [P]. The LCS genetic algorithm is highly elitist since, in each learning iteration, the vast majority of the population is preserved. Rule discovery may alternatively be performed by some other method, such as an estimation of distribution algorithm, but a GA is by far the most common approach. Evolutionary algorithms like the GA employ a stochastic search, which makes LCS a stochastic algorithm. LCS seeks to cleverly explore the search space, but does not perform an exhaustive search of rule combinations, and is not guaranteed to converge on an optimal solution.
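The subsumption test and one rule-discovery step described above can be sketched as follows. This is a simplified illustration: the `Rule` class, the tournament size, uniform crossover, and a mutation operator that only toggles between '#' and the current instance's value (so offspring keep matching that instance) are assumptions of this sketch, and real systems differ in these details.

```python
import random
from dataclasses import dataclass


@dataclass
class Rule:
    condition: str
    action: int
    accuracy: float = 0.0
    fitness: float = 0.0
    numerosity: int = 1


def is_more_general(general: str, specific: str) -> bool:
    """True if `general` has more wild cards and covers everything `specific` covers."""
    if general.count("#") <= specific.count("#"):
        return False
    return all(g == "#" or g == s for g, s in zip(general, specific))


def can_subsume(subsumer: Rule, subsumed: Rule) -> bool:
    return (subsumer.action == subsumed.action
            and subsumer.accuracy >= subsumed.accuracy
            and is_more_general(subsumer.condition, subsumed.condition))


def subsume(subsumer: Rule, subsumed: Rule) -> None:
    """Absorb the subsumed rule by adding its copies to the subsumer."""
    subsumer.numerosity += subsumed.numerosity


def tournament_select(candidates, rng, size=3):
    contestants = rng.sample(candidates, min(size, len(candidates)))
    return max(contestants, key=lambda r: r.fitness)


def uniform_crossover(c1: str, c2: str, rng):
    pairs = [(y, x) if rng.random() < 0.5 else (x, y) for x, y in zip(c1, c2)]
    return "".join(p[0] for p in pairs), "".join(p[1] for p in pairs)


def mutate(condition: str, state: str, rng, rate=0.1) -> str:
    # Toggle between '#' and the instance's value, so the rule still matches `state`.
    return "".join((s if c == "#" else "#") if rng.random() < rate else c
                   for c, s in zip(condition, state))


def discover(correct_set, state, rng):
    """Select two parents from the correct set and return two offspring rules."""
    p1 = tournament_select(correct_set, rng)
    p2 = tournament_select(correct_set, rng)
    c1, c2 = uniform_crossover(p1.condition, p2.condition, rng)
    return [Rule(mutate(c, state, rng), p1.action) for c in (c1, c2)]


correct_set = [Rule("###0##", 0, accuracy=1.0, fitness=0.9),
               Rule("00###1", 0, accuracy=0.8, fitness=0.5)]
for child in discover(correct_set, "001001", random.Random(1)):
    print(child.condition, "~", child.action)
```

Because both parents come from the correct set, they match the current instance and share its action, so every offspring produced this way also matches that instance.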

Deletion

The last step in a generic LCS learning cycle is to maintain the maximum population size. The deletion mechanism will select classifiers for deletion (commonly using roulette wheel selection). The probability of a classifier being selected for deletion is inversely proportional to its fitness. When a classifier is selected for deletion, its numerosity parameter is reduced by one. When the numerosity of a classifier is reduced to zero, it is removed entirely from the population.

Training

LCS will cycle through these steps repeatedly for some user-defined number of training iterations, or until some user-defined termination criteria have been met. For online learning, LCS will obtain a completely new training instance each iteration from the environment. For offline learning, LCS will iterate through a finite training dataset. Once it reaches the last instance in the dataset, it will go back to the first instance and cycle through the dataset again.

Rule compaction

Once training is complete, the rule population will inevitably contain some poor, redundant and inexperienced rules. It is common to apply a ''rule compaction'', or ''condensation'' heuristic as a post-processing step. This resulting compacted rule population is ready to be applied as a prediction model (e.g. make predictions on testing instances), and/or to be interpreted for knowledge discovery.

Prediction

Whether or not rule compaction has been applied, the output of an LCS algorithm is a population of classifiers which can be applied to making predictions on previously unseen instances. The prediction mechanism is not part of the supervised LCS learning cycle itself; however, it would play an important role in a reinforcement learning LCS learning cycle. For now we consider how the prediction mechanism can be applied to make predictions on test data. When making predictions, the LCS learning components are deactivated so that the population does not continue to learn from incoming testing data. A test instance is passed to the population [P], where a match set [M] is formed as usual. At this point the match set is handled differently: it is passed to a prediction array. Rules in the match set can predict different actions, therefore a voting scheme is applied. In a simple voting scheme, the action with the strongest supporting 'votes' from matching rules wins, and becomes the selected prediction. Not all rules get an equal vote. Rather, the strength of the vote for a single rule is commonly proportional to its numerosity and fitness. This voting scheme, and the nature of how LCSs store knowledge, suggest that LCS algorithms are implicitly ''ensemble learners''.

Interpretation

Individual LCS rules are typically human-readable IF:THEN expressions. Rules that constitute the LCS prediction model can be ranked by different rule parameters and manually inspected. Global strategies to guide knowledge discovery using statistical and graphical methods have also been proposed. Compared with other advanced machine learning approaches, such as artificial neural networks, random forests, or genetic programming, learning classifier systems are particularly well suited to problems that require interpretable solutions.
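The voting scheme used for prediction, and the readable IF:THEN form that makes rules inspectable, can be sketched as follows. The `Rule` class, the vote weight `fitness * numerosity`, the returned `None` when nothing matches, and the wording produced by `describe` are assumptions made for this illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Rule:
    condition: str      # ternary string over {'0', '1', '#'}
    action: int
    fitness: float
    numerosity: int = 1


def matches(condition: str, state: str) -> bool:
    return all(c == "#" or c == s for c, s in zip(condition, state))


def predict(population, state):
    """Return the action with the largest summed vote, or None if no rule matches."""
    votes = defaultdict(float)
    for rule in population:
        if matches(rule.condition, state):
            votes[rule.action] += rule.fitness * rule.numerosity
    return max(votes, key=votes.get) if votes else None


def describe(rule: Rule) -> str:
    """Render a rule as a human-readable IF:THEN expression."""
    terms = [f"feature {i + 1} = {c}" for i, c in enumerate(rule.condition) if c != "#"]
    condition = " AND ".join(terms) if terms else "any state"
    return f"IF {condition} THEN class = {rule.action}"


population = [Rule("###0##", 0, fitness=0.9, numerosity=2),
              Rule("#01001", 1, fitness=0.6, numerosity=1),
              Rule("1#####", 1, fitness=1.0, numerosity=5)]

print(predict(population, "001001"))   # votes: class 0 -> 1.8, class 1 -> 0.6
# Rank rules by their voting weight for manual inspection.
for rule in sorted(population, key=lambda r: r.fitness * r.numerosity, reverse=True):
    print(describe(rule))
```

Ranking by voting weight is only one possible ordering; rules could equally be sorted by accuracy, numerosity, or generality when inspecting the model.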

History

Early years

John Henry Holland was best known for his work popularizing genetic algorithms (GA), through his ground-breaking book "Adaptation in Natural and Artificial Systems" in 1975 and his formalization of Holland's schema theorem. In 1976, Holland conceptualized an extension of the GA concept to what he called a "cognitive system", and provided the first detailed description of what would become known as the first learning classifier system in the paper "Cognitive Systems based on Adaptive Algorithms" (Holland JH, Reitman JS (1978) Cognitive systems based on adaptive algorithms. Reprinted in: Evolutionary computation. The fossil record. In: David BF (ed) IEEE Press, New York 1998). This first system, named Cognitive System One (CS-1), was conceived as a modeling tool, designed to model a real system (i.e. ''environment'') with unknown underlying dynamics using a population of human-readable rules. The goal was for a set of rules to perform online machine learning to adapt to the environment based on infrequent payoff/reward (i.e. reinforcement learning) and apply these rules to generate a behavior that matched the real system. This early, ambitious implementation was later regarded as overly complex, yielding inconsistent results.

Beginning in 1980, Kenneth De Jong and his student Stephen Smith took a different approach to rule-based machine learning with LS-1, where learning was viewed as an offline optimization process rather than an online adaptation process. This new approach was more similar to a standard genetic algorithm but evolved independent sets of rules. Since that time, LCS methods inspired by the online learning framework introduced by Holland at the University of Michigan have been referred to as Michigan-style LCS, and those inspired by Smith and De Jong at the University of Pittsburgh have been referred to as Pittsburgh-style LCS. In 1986, Holland developed what would be considered the standard Michigan-style LCS for the next decade (Holland, John H. "Escaping brittleness: the possibilities of general purpose learning algorithms applied to parallel rule-based system." ''Machine learning'' (1986): 593-623).

Other important concepts that emerged in the early days of LCS research included (1) the formalization of a ''bucket brigade algorithm'' (BBA) for credit assignment/learning, (2) selection of parent rules from a common 'environmental niche' (i.e. the ''match set'') rather than from the whole ''population'', (3) ''covering'', first introduced as a ''create'' operator (Wilson, S. W. "Knowledge growth in an artificial animal." Proceedings of the First International Conference on Genetic Algorithms and their Applications (1985)), (4) the formalization of an ''action set'', (5) a simplified algorithm architecture, (6) ''strength-based fitness'', (7) consideration of single-step, or supervised learning problems and the introduction of the ''correct set'', (8) ''accuracy-based fitness'', (9) the combination of fuzzy logic with LCS (which later spawned a lineage of ''fuzzy LCS algorithms''), (10) encouraging ''long action chains'' and ''default hierarchies'' for improving performance on multi-step problems, (11) examining latent learning (which later inspired a new branch of ''anticipatory classifier systems'' (ACS); W. Stolzmann, "Anticipatory classifier systems," in Proceedings of the 3rd Annual Genetic Programming Conference, pp. 658–664, 1998), and (12) the introduction of the first Q-learning-like credit assignment technique. While not all of these concepts are applied in modern LCS algorithms, each was a landmark in the development of the LCS paradigm.

The revolution

Interest in learning classifier systems was reinvigorated in the mid 1990s largely due to two events: the development of the Q-Learning algorithm for reinforcement learning, and the introduction of significantly simplified Michigan-style LCS architectures by Stewart Wilson. Wilson's Zeroth-level Classifier System (ZCS) focused on increasing algorithmic understandability based on Holland's standard LCS implementation. This was done, in part, by removing rule-bidding and the internal message list, essential to the original BBA credit assignment, and replacing them with a hybrid BBA/Q-Learning strategy. ZCS demonstrated that a much simpler LCS architecture could perform as well as the original, more complex implementations. However, ZCS still suffered from performance drawbacks including the proliferation of over-general classifiers.

In 1995, Wilson published his landmark paper, "Classifier fitness based on accuracy", in which he introduced the classifier system XCS. XCS took the simplified architecture of ZCS and added an accuracy-based fitness, a niche GA (acting in the action set [A]), an explicit generalization mechanism called ''subsumption'', and an adaptation of the Q-Learning credit assignment. XCS was popularized by its ability to reach optimal performance while evolving accurate and maximally general classifiers, as well as its impressive problem flexibility (able to perform both reinforcement learning and supervised learning). XCS later became the best known and most studied LCS algorithm and defined a new family of ''accuracy-based LCS''. ZCS alternatively became synonymous with ''strength-based LCS''. XCS is also important because it successfully bridged the gap between LCS and the field of reinforcement learning. Following the success of XCS, LCS were later described as reinforcement learning systems endowed with a generalization capability. Reinforcement learning typically seeks to learn a value function that maps out a complete representation of the state/action space. Similarly, the design of XCS drives it to form an all-inclusive and accurate representation of the problem space (i.e. a ''complete map'') rather than focusing on high payoff niches in the environment (as was the case with strength-based LCS). Conceptually, complete maps capture not only what you should do, or what is correct, but also what you shouldn't do, or what is incorrect. In contrast, most strength-based LCSs, or exclusively supervised learning LCSs, seek a rule set of efficient generalizations in the form of a ''best action map'' (or a ''partial map''). Comparisons between strength vs. accuracy-based fitness and complete vs. best action maps have since been examined in greater detail.

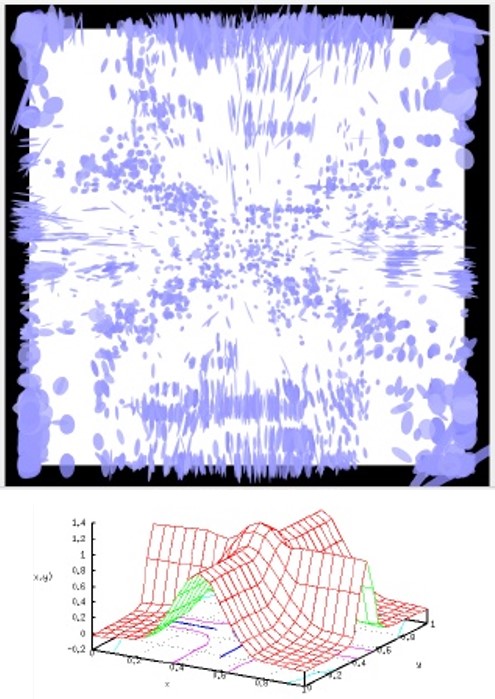

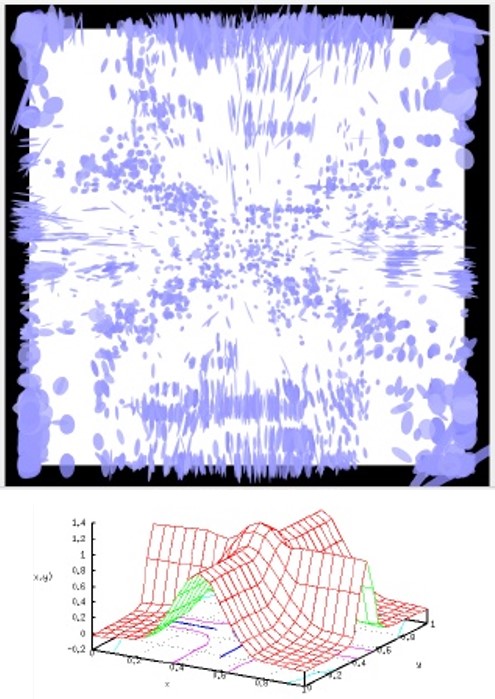

In the wake of XCS

XCS inspired the development of a whole new generation of LCS algorithms and applications. In 1995, Congdon was the first to apply LCS to real-world epidemiological investigations of disease, followed closely by Holmes, who developed BOOLE++, EpiCS, and later EpiXCS for epidemiological classification. These early works inspired later interest in applying LCS algorithms to complex and large-scale data mining tasks, epitomized by bioinformatics applications.

In 1998, Stolzmann introduced anticipatory classifier systems (ACS), which included rules in the form of 'condition-action-effect', rather than the classic 'condition-action' representation. ACS was designed to predict the perceptual consequences of an action in all possible situations in an environment. In other words, the system evolves a model that specifies not only what to do in a given situation, but also what will happen after a specific action is executed. This family of LCS algorithms is best suited to multi-step problems, planning, speeding up learning, or disambiguating perceptual aliasing (i.e. where the same observation is obtained in distinct states but requires different actions). Butz later pursued this anticipatory family of LCS, developing a number of improvements to the original method.

In 2002, Wilson introduced XCSF, adding a computed action in order to perform function approximation. In 2003, Bernado-Mansilla introduced a sUpervised Classifier System (UCS), which specialized the XCS algorithm to the task of supervised learning, single-step problems, and forming a best action set. UCS removed the reinforcement learning strategy, as well as the explore/exploit learning phases characteristic of many reinforcement learners, in favor of a simple, accuracy-based rule fitness. Bull introduced a simple accuracy-based LCS (YCS) and a simple strength-based LCS, the Minimal Classifier System (MCS), in order to develop a better theoretical understanding of the LCS framework. Bacardit introduced GAssist and BioHEL, Pittsburgh-style LCSs designed for data mining and scalability to large datasets in bioinformatics applications. In 2008, Drugowitsch published the book "Design and Analysis of Learning Classifier Systems", which includes some theoretical examination of LCS algorithms. Butz introduced the first online visualization of rule learning within a GUI for XCSF (see the image at the top of this page).

Urbanowicz extended the UCS framework and introduced ExSTraCS, explicitly designed for supervised learning in noisy problem domains (e.g. epidemiology and bioinformatics). ExSTraCS integrated (1) expert knowledge to drive covering and the genetic algorithm towards important features in the data, (2) a form of long-term memory referred to as attribute tracking, allowing for more efficient learning and the characterization of heterogeneous data patterns, and (3) a flexible rule representation similar to Bacardit's mixed discrete-continuous attribute list representation. Both Bacardit and Urbanowicz explored statistical and visualization strategies to interpret LCS rules and perform knowledge discovery for data mining. Browne and Iqbal explored the concept of reusing building blocks in the form of code fragments and were the first to solve the 135-bit multiplexer benchmark problem, by first learning useful building blocks from simpler multiplexer problems. ExSTraCS 2.0 was later introduced to improve Michigan-style LCS scalability, successfully solving the 135-bit multiplexer benchmark problem directly for the first time. The n-bit multiplexer problem is highly epistatic and heterogeneous, making it a very challenging machine learning task.
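To make the multiplexer benchmark mentioned above concrete, the following is a minimal sketch of the target function. It assumes the common convention that the first k bits form an address (most significant bit first) and the remaining 2^k bits are data, so n = k + 2^k (k = 2 gives the 6-bit problem, k = 7 the 135-bit problem). The function name `multiplexer` is illustrative and not taken from any particular LCS implementation.

```python
def multiplexer(bits, k):
    """Return the data bit selected by the first k address bits.

    Assumed layout: bits[:k] is the address (most significant bit first),
    bits[k:] holds the 2**k data bits.
    """
    if len(bits) != k + 2 ** k:
        raise ValueError("expected k + 2**k bits")
    address = 0
    for b in bits[:k]:
        address = (address << 1) | b
    return bits[k + address]

# 6-bit example: address bits 1,0 give address 2, so data bit 2 is the output.
six_bit = [1, 0, 0, 0, 1, 0]
print(multiplexer(six_bit, 2))

# 135-bit example: 7 address bits select one of 128 data bits.
big = [1] * 7 + [0] * 128   # address 127 selects the last data bit
big[7 + 127] = 1
print(multiplexer(big, 7))
```

The sketch also shows where the epistasis and heterogeneity come from: no data bit is predictive on its own, and which data bit matters changes with the value of the address bits.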

Variants

Michigan-Style Learning Classifier System

Michigan-style LCSs are characterized by a population of rules in which the genetic algorithm operates at the level of individual rules and the solution is represented by the entire rule population. Michigan-style systems also learn incrementally, which allows them to perform both reinforcement learning and supervised learning, as well as both online and offline learning. They have the advantage of being applicable to a greater number of problem domains, along with the unique benefits of incremental learning.

Pittsburgh-Style Learning Classifier System

Pittsburgh-style LCSs are characterized by a population of variable-length rule sets, where each rule set is a potential solution. The genetic algorithm typically operates at the level of an entire rule set. Pittsburgh-style systems can also uniquely evolve ordered rule lists, as well as employ a default rule. These systems have the natural advantage of identifying smaller rule sets, making them more interpretable with regard to manual rule inspection.

Hybrid systems

Systems that seek to combine key strengths of both the Michigan and Pittsburgh styles have also been proposed.

Advantages

* Adaptive: They can acclimate to a changing environment in the case of online learning.
* Model free: They make limited assumptions about the environment, or the patterns of association within the data.
** They can model complex, epistatic, heterogeneous, or distributed underlying patterns without relying on prior knowledge.
** They make no assumptions about the number of predictive vs. non-predictive features in the data.
* Ensemble Learner: No single model is applied to a given instance that universally provides a prediction. Instead, a relevant and often conflicting set of rules contribute a 'vote', which can be interpreted as a fuzzy prediction.
* Stochastic Learner: Non-deterministic learning is advantageous in large-scale or high-complexity problems where deterministic or exhaustive learning becomes intractable.
* Implicitly Multi-objective: Rules evolve towards accuracy, with implicit and explicit pressures encouraging maximal generality/simplicity. This implicit generalization pressure is unique to LCS. Effectively, more general rules will appear more often in match sets. In turn, they have a more frequent opportunity to be selected as parents and to pass on their more general genomes to offspring rules.
* Interpretable: In the interest of data mining and knowledge discovery, individual LCS rules are logical and can be made to be human-interpretable IF:THEN statements. Effective strategies have also been introduced to allow for global knowledge discovery, identifying significant features and patterns of association from the rule population as a whole.
* Flexible application
** Single or multi-step problems
** Supervised, Reinforcement or Unsupervised Learning
** Binary Class and Multi-Class Classification
** Regression
** Discrete or continuous features (or some mix of both types)
** Clean or noisy problem domains
** Balanced or imbalanced datasets
** Accommodates missing data (i.e. missing feature values in training instances)

Disadvantages

* Limited Software Availability: There are a limited number of open source, accessible LCS implementations, and even fewer that are designed to be user friendly or accessible to machine learning practitioners.
* Interpretation: While LCS algorithms are certainly more interpretable than some advanced machine learners, users must interpret a set of rules (sometimes a large set) to comprehend the LCS model. Methods for rule compaction and interpretation strategies remain an area of active research.
* Theory/Convergence Proofs: There is a relatively small body of theoretical work behind LCS algorithms. This is likely due to their relative algorithmic complexity (applying a number of interacting components) as well as their stochastic nature.
* Overfitting: Like any machine learner, LCS can suffer from overfitting despite implicit and explicit generalization pressures.
* Run Parameters: LCSs often have many run parameters to consider/optimize. Typically, most parameters can be left to the community-determined defaults, with the exception of two critical parameters: the maximum rule population size and the maximum number of learning iterations. Optimizing these parameters is likely to be very problem dependent.
* Notoriety: Despite their age, LCS algorithms are still not widely known, even in machine learning communities. As a result, LCS algorithms are rarely considered in comparison to other established machine learning approaches. This is likely due to the following factors: (1) LCS is a relatively complicated algorithmic approach, (2) LCS rule-based modeling is a different paradigm of modeling than almost all other machine learning approaches, and (3) LCS software implementations are not as common.
* Computationally Expensive: While certainly more feasible than some exhaustive approaches, LCS algorithms can be computationally expensive. For simple, linear learning problems there is no need to apply an LCS. LCS algorithms are best suited to complex problem spaces, or problem spaces in which little prior knowledge exists.

Problem domains

* Adaptive-control
* Data Mining
* Engineering Design
* Feature Selection
* Function Approximation
* Game-Play
* Image Classification
* Knowledge Handling
* Medical Diagnosis
* Modeling
* Navigation
* Optimization
* Prediction
* Querying
* Robotics
* Routing
* Rule-Induction
* Scheduling
* Strategy

Terminology

The name "Learning Classifier System (LCS)" is a bit misleading, since there are many machine learning algorithms that 'learn to classify' (e.g. decision trees, artificial neural networks) but are not LCSs. The term 'rule-based machine learning (RBML)' is useful, as it more clearly captures the essential 'rule-based' component of these systems, but it also generalizes to methods that are not considered to be LCSs (e.g. association rule learning, or artificial immune systems). More general terms such as 'genetics-based machine learning', and even 'genetic algorithm' (Congdon, Clare Bates. "A comparison of genetic algorithms and other machine learning systems on a complex classification task from common disease research." PhD diss., The University of Michigan, 1995), have also been applied to refer to what would be more characteristically defined as a learning classifier system. Due to their similarity to genetic algorithms, Pittsburgh-style learning classifier systems are sometimes generically referred to as 'genetic algorithms'. Beyond this, some LCS algorithms, or closely related methods, have been referred to as 'cognitive systems', 'adaptive agents', 'production systems', or generically as a 'classifier system' (Wilson, Stewart W., and David E. Goldberg. "A critical review of classifier systems." In ''Proceedings of the third international conference on Genetic algorithms'', pp. 244-255. Morgan Kaufmann Publishers Inc., 1989). This variation in terminology contributes to some confusion in the field.

Up until the 2000s, nearly all learning classifier system methods were developed with reinforcement learning problems in mind. As a result, the term ‘learning classifier system’ was commonly defined as the combination of ‘trial-and-error’ reinforcement learning with the global search of a genetic algorithm. Interest in supervised learning applications, and even unsupervised learning, has since broadened the use and definition of this term.

See also

* Rule-based machine learning
* Production system
* Expert system
* Genetic algorithm
* Association rule learning
* Artificial immune system
* Population-based Incremental Learning
* Machine learning

External links

Video tutorial

Learning Classifier Systems in a Nutshell (2016): Go inside a basic LCS algorithm to learn its components and how they work.

Webpages

LCS & GBML Central

UWE Learning Classifier Research Group

Prediction Dynamics
