
Tournament Selection

Tournament selection is a method of selecting an individual from a population of individuals in an evolutionary algorithm. Tournament selection involves running several "tournaments" among a few individuals (or "chromosomes") chosen at random from the population. The winner of each tournament (the one with the best fitness) is selected for crossover. Selection pressure, a probabilistic measure of a chromosome's likelihood of participation in the tournament based on the participant selection pool size, is easily adjusted by changing the tournament size. The reason is that with a larger tournament, weak individuals have a smaller chance of being selected: if a weak individual is drawn into a tournament, it is more likely that a stronger individual has also been drawn into the same tournament. Pseudo code: the tournament selection method may be described in pseudo code as follows: choose k (the tournament size) individuals from the population at random; choose the ...
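A minimal Python sketch of the deterministic variant described above, in which the fittest contestant always wins. It assumes that contestants are drawn without replacement, that higher fitness is better, and that a seeded `random.Random` is passed in; the function names `tournament_select` and `select_parents` are hypothetical.

```python
import random
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def tournament_select(
    population: Sequence[T],
    fitness: Callable[[T], float],
    k: int,
    rng: random.Random,
) -> T:
    """Run one tournament: draw k distinct individuals at random and
    return the one with the highest fitness (deterministic winner)."""
    if not 1 <= k <= len(population):
        raise ValueError("tournament size k must satisfy 1 <= k <= len(population)")
    contestants = rng.sample(population, k)  # sampling without replacement
    return max(contestants, key=fitness)


def select_parents(
    population: Sequence[T],
    fitness: Callable[[T], float],
    k: int,
    n: int,
    rng: random.Random,
) -> List[T]:
    """Run n independent tournaments and collect one winner from each."""
    return [tournament_select(population, fitness, k, rng) for _ in range(n)]
```

With `pop = list(range(10))` and the identity function as fitness, `k = 1` reduces to uniform random choice, while `k = 10` always returns 9. Intermediate tournament sizes shift the winners toward the fitter end of the population, which is the selection-pressure effect described above.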

Evolutionary Algorithm

Evolutionary algorithms (EA) reproduce essential elements of biological evolution in a computer algorithm in order to solve "difficult" problems, at least approximately, for which no exact or satisfactory solution methods are known. They belong to the class of metaheuristics and are a subset of population-based bio-inspired algorithms and of evolutionary computation, which itself is part of the field of computational intelligence. The mechanisms of biological evolution that an EA mainly imitates are reproduction, mutation, recombination and selection. Candidate solutions to the optimization problem play the role of individuals in a population, and the fitness function determines the quality of the solutions (see also loss function). Evolution of the population then takes place through the repeated application of the above operators. Evolutionary algorithms often perform ...
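To show how the operators listed above fit together, here is a minimal generational loop in Python. All concrete choices are assumptions made for illustration and are not taken from the text: a binary representation, the toy OneMax problem (fitness is the number of 1 bits), binary tournament selection, one-point crossover, bit-flip mutation with rate 1/n, and full replacement of the population each generation. The names `onemax` and `evolve` are hypothetical.

```python
import random
from typing import List

Bits = List[int]


def onemax(bits: Bits) -> int:
    """Toy fitness (assumed for illustration): the number of 1 bits."""
    return sum(bits)


def evolve(n_bits: int = 20, pop_size: int = 30, generations: int = 60,
           seed: int = 1) -> Bits:
    """Minimal generational EA on OneMax; returns the best individual seen."""
    rng = random.Random(seed)
    p_mut = 1.0 / n_bits  # common default: about one bit flip per child
    # Initial population of random candidate solutions (individuals).
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best = max(pop, key=onemax)
    for _ in range(generations):
        offspring: List[Bits] = []
        while len(offspring) < pop_size:
            # Selection: two binary tournaments choose the parents.
            p1 = max(rng.sample(pop, 2), key=onemax)
            p2 = max(rng.sample(pop, 2), key=onemax)
            # Recombination: one-point crossover at a random interior cut.
            cut = rng.randint(1, n_bits - 1)
            child = p1[:cut] + p2[cut:]
            # Mutation: flip each bit independently with probability p_mut.
            child = [b ^ 1 if rng.random() < p_mut else b for b in child]
            offspring.append(child)
        # Replacement: the offspring form the next generation.
        pop = offspring
        # Track the best individual found so far.
        best = max([best] + pop, key=onemax)
    return best
```

Because `best` is the best individual seen so far, running more generations with the same seed can never return a worse result.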

Chromosome (Genetic Algorithm)

A chromosome or genotype in evolutionary algorithms (EA) is a set of parameters which define a proposed solution of the problem that the evolutionary algorithm is trying to solve. The set of all solutions, also called individuals according to the biological model, is known as the population. The genome of an individual consists of one, more rarely of several, chromosomes and corresponds to the genetic representation of the task to be solved. A chromosome is composed of a set of genes, where a gene consists of one or more semantically connected parameters, which are often also called decision variables. They determine one or more phenotypic characteristics of the individual, or at least influence them. In the basic form of genetic algorithms, the chromosome is represented as a binary string, while in later variants and in EAs in general, a wide variety of other data structures ...
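As a sketch of how a binary-string chromosome can carry several genes, each mapped to a decision variable, the function below splits the bit string into fixed-width genes and maps each gene linearly onto a real interval. The fixed gene width, the most-significant-bit-first order, and the linear mapping are assumptions chosen for illustration; `decode` is a hypothetical name.

```python
from typing import List, Sequence, Tuple


def decode(chromosome: Sequence[int], bits_per_gene: int,
           bounds: Sequence[Tuple[float, float]]) -> List[float]:
    """Split a binary chromosome into genes and map each gene to a real
    decision variable in its [lo, hi] interval (hypothetical encoding)."""
    if len(chromosome) != bits_per_gene * len(bounds):
        raise ValueError("chromosome length does not match the gene layout")
    max_int = 2 ** bits_per_gene - 1
    values = []
    for g, (lo, hi) in enumerate(bounds):
        gene = chromosome[g * bits_per_gene:(g + 1) * bits_per_gene]
        as_int = int("".join(str(b) for b in gene), 2)  # most significant bit first
        values.append(lo + (hi - lo) * as_int / max_int)
    return values
```

For example, a 16-bit chromosome with two 8-bit genes and bounds `[(-5, 5), (0, 1)]` decodes `[0]*8 + [1]*8` to `[-5.0, 1.0]`.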

Crossover (Genetic Algorithm)

Crossover in evolutionary algorithms and evolutionary computation, also called recombination, is a genetic operator used to combine the genetic information of two parents to generate new offspring. It is one way to stochastically generate new solutions from an existing population, and is analogous to the crossover that happens during sexual reproduction in biology. New solutions can also be generated by cloning an existing solution, which is analogous to asexual reproduction. Newly generated solutions may be mutated before being added to the population. The aim of recombination is to transfer good characteristics from two different parents to one child. Different algorithms in evolutionary computation may use different data structures to store genetic information, and each genetic representation can be recombined with different crossover operators ...
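Two common crossover operators for list-shaped chromosomes can be sketched as follows. One-point crossover cuts both parents at the same random interior point and swaps the tails. Uniform crossover decides independently, for each gene position, whether to swap it between the children. The sketch assumes equal-length parents and a swap probability of 0.5 by default; the function names are illustrative.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

G = TypeVar("G")


def one_point_crossover(p1: Sequence[G], p2: Sequence[G],
                        rng: random.Random) -> Tuple[List[G], List[G]]:
    """Cut both parents at the same random interior point and swap tails."""
    if len(p1) != len(p2) or len(p1) < 2:
        raise ValueError("parents must have the same length (at least 2)")
    cut = rng.randint(1, len(p1) - 1)  # 1..len-1, so each child mixes both parents
    return list(p1[:cut]) + list(p2[cut:]), list(p2[:cut]) + list(p1[cut:])


def uniform_crossover(p1: Sequence[G], p2: Sequence[G], rng: random.Random,
                      swap_prob: float = 0.5) -> Tuple[List[G], List[G]]:
    """Swap each gene position between the two children with probability swap_prob."""
    if len(p1) != len(p2):
        raise ValueError("parents must have the same length")
    c1, c2 = list(p1), list(p2)
    for i in range(len(c1)):
        if rng.random() < swap_prob:
            c1[i], c2[i] = c2[i], c1[i]
    return c1, c2
```

Both operators only rearrange existing genes, so every position in the two children together still holds exactly the two parental values. New gene values come from mutation, not recombination.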

Selection Pressure

Evolutionary pressure, selective pressure or selection pressure is exerted by factors that reduce or increase reproductive success in a portion of a population, driving natural selection. It is a quantitative description of the amount of change occurring in processes investigated by evolutionary biology, but the formal concept is often extended to other areas of research. In population genetics, selective pressure is usually expressed as a selection coefficient. Amino acid selective pressure: it has been shown that putting an amino-acid-biosynthesizing gene such as HIS4 under amino acid selective pressure in yeast enhances the expression of adjacent genes, which is due to the transcriptional co-regulation of two adjacent genes in eukaryotes. Antibiotic resistance: drug resistance in bacteria is an example of an outcome of natural selection. When a drug is used on a species of bacteria, those that cannot resist die and do not produce offspring, while those that survive ...

Fitness Proportionate Selection

Fitness proportionate selection, also known as roulette wheel selection or spinning wheel selection, is a selection technique used in evolutionary algorithms for selecting potentially useful solutions for recombination. Method: in fitness proportionate selection, as in all selection methods, the fitness function assigns a fitness to possible solutions or chromosomes. This fitness level is used to associate a probability of selection with each individual chromosome. If $f_i$ is the fitness of individual $i$ in the population, its probability of being selected is $p_i = \frac{f_i}{\sum_{j=1}^{N} f_j}$, where $N$ is the number of individuals in the population. This can be pictured as a roulette wheel in a casino: a proportion of the wheel is assigned to each of the possible selections based on its fitness value. This can be achieved by dividing the fitness of a selection by the total fitness of all the selections, thereby normalizing them to 1. Then a random selection is made similar to ...
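The roulette wheel can be implemented by laying the fitness values end to end as slices of a line of length $\sum_j f_j$, drawing a uniform point on that line, and finding the slice it lands in. The sketch below assumes non-negative fitness values with a positive total. It uses the standard-library `itertools.accumulate` and `bisect.bisect_right`, and the helper names are hypothetical.

```python
import bisect
import itertools
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def selection_probabilities(fitnesses: Sequence[float]) -> List[float]:
    """p_i = f_i / sum_j f_j; assumes non-negative fitness with a positive total."""
    total = sum(fitnesses)
    if total <= 0 or any(f < 0 for f in fitnesses):
        raise ValueError("fitness values must be non-negative with a positive sum")
    return [f / total for f in fitnesses]


def roulette_select(population: Sequence[T], fitnesses: Sequence[float],
                    rng: random.Random) -> T:
    """Spin the wheel once: each individual owns a slice of width f_i."""
    if len(population) != len(fitnesses):
        raise ValueError("population and fitnesses must have the same length")
    if any(f < 0 for f in fitnesses):
        raise ValueError("fitness values must be non-negative")
    cumulative = list(itertools.accumulate(fitnesses))  # slice boundaries
    total = cumulative[-1]
    if total <= 0:
        raise ValueError("total fitness must be positive")
    r = rng.random() * total  # uniform point in [0, total)
    # The first boundary strictly greater than r identifies the slice hit;
    # min() guards against floating-point edge cases at the right end.
    idx = min(bisect.bisect_right(cumulative, r), len(population) - 1)
    return population[idx]
```

Individuals with zero fitness own an empty slice and are never chosen. With fitness values `[1, 3]`, the second individual should be drawn about 75% of the time.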

Reward-based Selection

Reward-based selection is a technique used in evolutionary algorithms for selecting potentially useful solutions for recombination. The probability of an individual being selected is proportional to the cumulative reward obtained by that individual. The cumulative reward can be computed as the sum of the individual reward and the reward inherited from its parents. Description: reward-based selection can be used within a multi-armed bandit framework for multi-objective optimization to obtain a better approximation of the Pareto front. The newborn $a'^{(g)}$ and its parents receive a reward $r^{(g)}$ if $a'^{(g)}$ was selected for the new population $Q^{(g+1)}$; otherwise the reward is zero. Several reward definitions are possible: (1) $r^{(g)} = 1$ if the newborn individual $a'^{(g)}$ was selected for the new population $Q^{(g+1)}$; (2) $r^{(g)} = 1 - \frac{\mathrm{rank}(a'^{(g)})}{\mu}$ if $a'^{(g)} \in Q^{(g+1)}$, where $\mathrm{rank}(a'^{(g)})$ is the rank of the newly inserted individual in the population of $\mu$ individuals (the rank can be computed using the well-known non-dominated sorting procedure); (3) ...
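A minimal bookkeeping sketch of reward-based selection, covering reward definitions (1) and (2) and selection proportional to cumulative reward. Several details are assumptions, not taken from the text: rank 0 denotes the best position among the $\mu$ individuals; a newborn inherits the mean cumulative reward of its parents; individuals are identified by string ids; and when every candidate has zero cumulative reward, selection falls back to a uniform choice. `RewardLedger` and the reward helpers are hypothetical names. The sketch uses the standard `random.Random.choices` with `weights`.

```python
import random
from typing import Dict, List, Sequence


def reward_selected(selected: bool) -> float:
    """Definition (1): reward 1 if the newborn entered the new population Q."""
    return 1.0 if selected else 0.0


def reward_rank(rank: int, mu: int, selected: bool) -> float:
    """Definition (2): r = 1 - rank/mu if the newborn entered Q, else 0.
    Assumes rank 0 is the best position among mu individuals."""
    return 1.0 - rank / mu if selected else 0.0


class RewardLedger:
    """Tracks the cumulative reward of each individual id (hypothetical helper)."""

    def __init__(self) -> None:
        self.cumulative: Dict[str, float] = {}

    def register_child(self, child: str, parents: Sequence[str]) -> None:
        # Assumption: the child inherits the mean cumulative reward of its parents.
        inherited = sum(self.cumulative.get(p, 0.0) for p in parents) / len(parents)
        self.cumulative[child] = inherited

    def credit(self, child: str, parents: Sequence[str], r: float) -> None:
        # The newborn and its parents all receive the reward r.
        for ind in [child, *parents]:
            self.cumulative[ind] = self.cumulative.get(ind, 0.0) + r

    def select(self, candidates: List[str], rng: random.Random) -> str:
        # Selection probability proportional to cumulative reward.
        weights = [self.cumulative.get(c, 0.0) for c in candidates]
        if sum(weights) <= 0:
            return rng.choice(candidates)  # assumption: uniform fallback
        return rng.choices(candidates, weights=weights, k=1)[0]
```

Typical use: after each generation, call `register_child` for every newborn, compute its reward with one of the definitions, pass that reward to `credit`, and draw parents for the next generation with `select`.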

Fitness Function

A fitness function is a particular type of objective or cost function that is used to summarize, as a single figure of merit, how close a given candidate solution is to achieving the set aims. It is an important component of evolutionary algorithms (EA), such as genetic programming, evolution strategies or genetic algorithms. An EA is a metaheuristic that reproduces the basic principles of biological evolution as a computer algorithm in order to solve challenging optimization or planning tasks, at least approximately. For this purpose, many candidate solutions are generated, which are evaluated using a fitness function in order to guide the evolutionary development towards the desired goal. Similar quality functions are also used in other metaheuristics, such as ant colony optimization or particle swarm optimization. In the field of EAs, each candidate solution, also called an individual, is commonly represented as a string of numbers (referred to as a chromosome). A ...
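As a toy illustration of a fitness function that condenses "how close is this candidate to the aim" into one number, the sketch below scores a candidate string by the fraction of positions that match a target string. The string-matching task, the equal-length requirement, and the names `make_target_fitness` and `rank_population` are assumptions chosen for illustration.

```python
from typing import Callable, List


def make_target_fitness(target: str) -> Callable[[str], float]:
    """Build a fitness function that scores a candidate string by the fraction
    of positions matching `target` (1.0 means the aim is fully achieved)."""
    def fitness(candidate: str) -> float:
        if len(candidate) != len(target):
            raise ValueError("candidate and target must have the same length")
        matches = sum(c == t for c, t in zip(candidate, target))
        return matches / len(target)
    return fitness


def rank_population(population: List[str], fitness: Callable[[str], float]) -> List[str]:
    """Order candidates from best to worst by their fitness score."""
    return sorted(population, key=fitness, reverse=True)
```

For example, with target `"hello"`, the candidate `"jello"` scores 0.8 and ranks above `"xxxxx"` (score 0.0). Selection operators such as tournament or roulette wheel selection consume scores like these.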

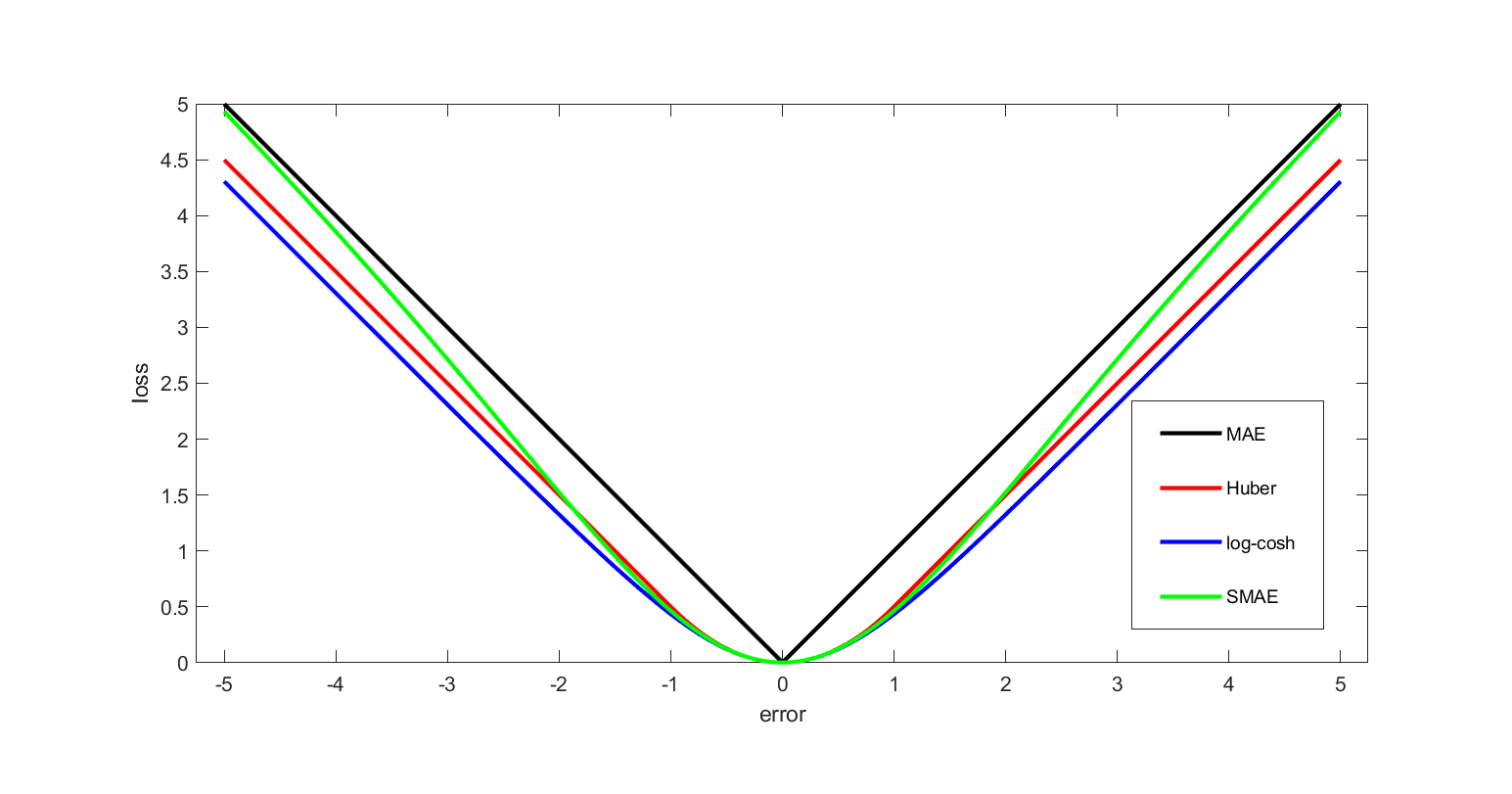

Loss Function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, a loss function is typically used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Pierre-Simon Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economics ...
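To make "a function of the difference between estimated and true values" concrete, the sketch below defines squared-error and absolute-error losses and estimates a single constant parameter by minimizing the average loss over a small grid of candidates. The grid search and the helper names are illustrative assumptions. The example shows that the choice of loss matters: on the data `[1, 2, 6]`, squared error favors the mean (3), while absolute error favors the median (2).

```python
from typing import Callable, Iterable, Sequence

Loss = Callable[[float, float], float]


def squared_error(estimate: float, true: float) -> float:
    """Quadratic loss: penalizes large deviations heavily."""
    return (estimate - true) ** 2


def absolute_error(estimate: float, true: float) -> float:
    """Absolute loss: penalizes deviations linearly."""
    return abs(estimate - true)


def empirical_risk(loss: Loss, estimate: float, data: Sequence[float]) -> float:
    """Average loss of one constant estimate against the observed values."""
    return sum(loss(estimate, x) for x in data) / len(data)


def best_constant(loss: Loss, data: Sequence[float], candidates: Iterable[float]) -> float:
    """Grid search: return the candidate estimate with the smallest average loss."""
    return min(candidates, key=lambda c: empirical_risk(loss, c, data))
```

Negating `empirical_risk` turns this loss into an objective to be maximized, which is how a loss relates to a reward or fitness function in the sense described above.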