In information theory and coding theory with applications in computer science and telecommunication, error detection and correction (EDAC) or error control are techniques that enable reliable delivery of digital data over unreliable communication channels. Many communication channels are subject to channel noise, and thus errors may be introduced during transmission from the source to a receiver. Error detection techniques allow detecting such errors, while error correction enables reconstruction of the original data in many cases.

Definitions

''Error detection'' is the detection of errors caused by noise or other impairments during transmission from the transmitter to the receiver. ''Error correction'' is the detection of errors and reconstruction of the original, error-free data.

History

In classical antiquity, copyists of the Hebrew Bible were paid for their work according to the number of stichs (lines of verse). As the prose books of the Bible were hardly ever written in stichs, the copyists, in order to estimate the amount of work, had to count the letters. This also helped ensure accuracy in the transmission of the text with the production of subsequent copies. Between the 7th and 10th centuries CE a group of Jewish scribes formalized and expanded this to create the Numerical Masorah to ensure accurate reproduction of the sacred text. It included counts of the number of words in a line, section, book and groups of books, noting the middle stich of a book, word use statistics, and commentary. Standards became such that a deviation in even a single letter in a Torah scroll was considered unacceptable. The effectiveness of their error correction method was verified by the accuracy of copying through the centuries, demonstrated by the discovery of the Dead Sea Scrolls in 1947–1956, dating from c. 150 BCE to 75 CE.

The modern development of error correction codes is credited to Richard Hamming in 1947. A description of Hamming's code appeared in Claude Shannon's ''A Mathematical Theory of Communication'' and was quickly generalized by Marcel J. E. Golay.

Introduction

All error-detection and correction schemes add some redundancy (i.e., some extra data) to a message, which receivers can use to check consistency of the delivered message, and to recover data that has been determined to be corrupted. Error-detection and correction schemes can be either systematic or non-systematic. In a systematic scheme, the transmitter sends the original data, and attaches a fixed number of ''check bits'' (or ''parity data''), which are derived from the data bits by some deterministic algorithm. If only error detection is required, a receiver can simply apply the same algorithm to the received data bits and compare its output with the received check bits; if the values do not match, an error has occurred at some point during the transmission. In a system that uses a non-systematic code, the original message is transformed into an encoded message that carries the same information and has at least as many bits as the original message.

Good error control performance requires the scheme to be selected based on the characteristics of the communication channel. Common channel models include memoryless models where errors occur randomly and with a certain probability, and dynamic models where errors occur primarily in bursts. Consequently, error-detecting and correcting codes can be generally distinguished between ''random-error-detecting/correcting'' and ''burst-error-detecting/correcting''. Some codes can also be suitable for a mixture of random errors and burst errors.

If the channel characteristics cannot be determined, or are highly variable, an error-detection scheme may be combined with a system for retransmissions of erroneous data. This is known as automatic repeat request (ARQ), and is most notably used in the Internet. An alternate approach for error control is hybrid automatic repeat request (HARQ), which is a combination of ARQ and error-correction coding.

Types of error correction

There are three major types of error correction.

Automatic repeat request

Automatic repeat request (ARQ) is an error control method for data transmission that makes use of error-detection codes, acknowledgment and/or negative acknowledgment messages, and timeouts to achieve reliable data transmission. An ''acknowledgment'' is a message sent by the receiver to indicate that it has correctly received a data frame.

Usually, when the transmitter does not receive the acknowledgment before the timeout occurs (i.e., within a reasonable amount of time after sending the data frame), it retransmits the frame until it is either correctly received or the error persists beyond a predetermined number of retransmissions.
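The retransmission loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a real protocol implementation: CRC-32 from the standard `zlib` module stands in for the error-detection code, acknowledgments are assumed never to be lost, and the names `make_frame`, `parse_frame`, `send_with_arq` and `scripted_channel` are hypothetical helpers introduced only for this example.

```python
import zlib
from typing import Callable, Iterable, List, Optional, Tuple


def make_frame(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 tag so the receiver can detect corruption."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def parse_frame(frame: bytes) -> Optional[bytes]:
    """Return the payload if the tag matches, otherwise None (frame rejected)."""
    if len(frame) < 4:
        return None
    payload, tag = frame[:-4], frame[-4:]
    if zlib.crc32(payload).to_bytes(4, "big") != tag:
        return None
    return payload


def send_with_arq(
    payloads: Iterable[bytes],
    channel: Callable[[bytes], Optional[bytes]],
    max_retries: int = 3,
) -> Tuple[List[bytes], int]:
    """Send each payload, retransmitting until it is accepted or retries run out.

    A missing or rejected frame produces no acknowledgment, which the sender
    treats as a timeout. Assumption: acknowledgments themselves are never lost.
    """
    delivered: List[bytes] = []
    transmissions = 0
    for payload in payloads:
        frame = make_frame(payload)
        for _ in range(max_retries + 1):
            transmissions += 1
            received = channel(frame)
            data = parse_frame(received) if received is not None else None
            if data is not None:  # receiver acknowledges the frame
                delivered.append(data)
                break
            # no acknowledgment: timeout, so loop around and retransmit
        else:
            raise TimeoutError("retry limit reached without acknowledgment")
    return delivered, transmissions


def scripted_channel(script: Iterable[str]) -> Callable[[bytes], Optional[bytes]]:
    """Hypothetical test channel: each call consumes one event from the script."""
    events = iter(script)

    def channel(frame: bytes) -> Optional[bytes]:
        event = next(events, "ok")
        if event == "lose":
            return None  # frame never arrives
        if event == "corrupt":
            return bytes([frame[0] ^ 0x01]) + frame[1:]  # flip one bit
        return frame

    return channel


if __name__ == "__main__":
    channel = scripted_channel(["corrupt", "ok", "lose", "corrupt", "ok"])
    print(send_with_arq([b"hello", b"world"], channel))
```

The sketch omits sequence numbers because acknowledgments are assumed reliable; a real stop-and-wait protocol needs them to discard duplicate frames when an acknowledgment is lost.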

Three types of ARQ protocols are Stop-and-wait ARQ, Go-Back-N ARQ, and Selective Repeat ARQ.

ARQ is appropriate if the communication channel has varying or unknown capacity, such as is the case on the Internet. However, ARQ requires the availability of a back channel, results in possibly increased latency due to retransmissions, and requires the maintenance of buffers and timers for retransmissions, which in the case of network congestion can put a strain on the server and overall network capacity (A. J. McAuley, ''Reliable Broadband Communication Using a Burst Erasure Correcting Code'', ACM SIGCOMM, 1990).

For example, ARQ is used on shortwave radio data links in the form of ARQ-E, or combined with multiplexing as ARQ-M.

Forward error correction

Forward error correction (FEC) is a process of adding redundant data such as an error-correcting code (ECC) to a message so that it can be recovered by a receiver even when a number of errors (up to the capability of the code being used) are introduced, either during the process of transmission or on storage. Since the receiver does not have to ask the sender for retransmission of the data, a backchannel is not required in forward error correction. Error-correcting codes are used in lower-layer communication such as cellular networks, high-speed fiber-optic communication and Wi-Fi, as well as for reliable storage in media such as flash memory, hard disks and RAM.

Error-correcting codes are usually divided into convolutional codes and block codes:

* ''Convolutional codes'' are processed on a bit-by-bit basis. They are particularly suitable for implementation in hardware, and the Viterbi decoder allows optimal decoding.

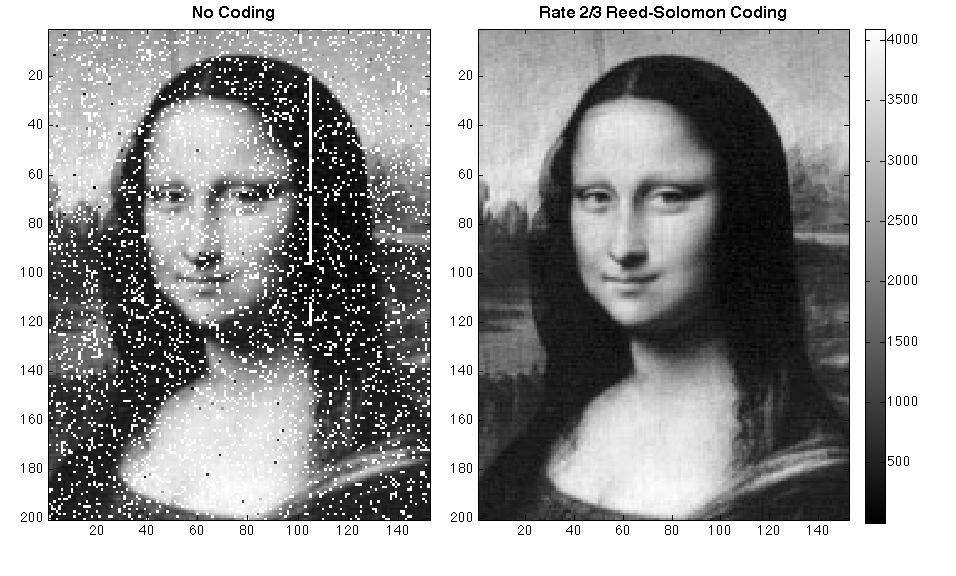

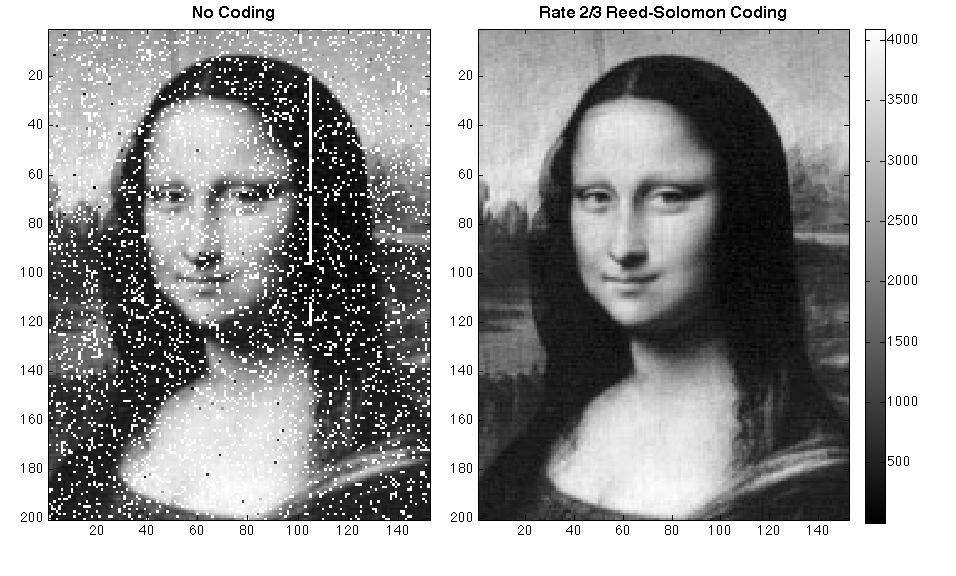

* ''Block codes'' are processed on a block-by-block basis. Early examples of block codes are repetition codes, Hamming codes and multidimensional parity-check codes. They were followed by a number of efficient codes, Reed–Solomon codes being the most notable due to their current widespread use. Turbo codes and low-density parity-check codes (LDPC) are relatively new constructions that can provide almost optimal efficiency.
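As a concrete instance of a block code, the following sketch implements the classic Hamming(7,4) code, which maps each block of 4 data bits to a 7-bit code word and corrects any single flipped bit. The bit layout (parity bits at positions 1, 2 and 4) is the common textbook convention and is assumed here; the function names are illustrative only.

```python
from typing import List, Tuple


def hamming74_encode(data: List[int]) -> List[int]:
    """Encode 4 data bits into a 7-bit code word (positions 1..7)."""
    code = [0] * 8  # index 0 is unused so indices match positions
    code[3], code[5], code[6], code[7] = data
    code[1] = code[3] ^ code[5] ^ code[7]  # covers positions 1, 3, 5, 7
    code[2] = code[3] ^ code[6] ^ code[7]  # covers positions 2, 3, 6, 7
    code[4] = code[5] ^ code[6] ^ code[7]  # covers positions 4, 5, 6, 7
    return code[1:]


def hamming74_decode(received: List[int]) -> Tuple[List[int], int]:
    """Correct at most one flipped bit; return (data bits, syndrome).

    The syndrome is the XOR of the positions of all set bits. It is 0 for a
    valid code word and equals the error position after a single bit flip.
    """
    code = [0] + list(received)
    syndrome = 0
    for position in range(1, 8):
        if code[position]:
            syndrome ^= position
    if syndrome:
        code[syndrome] ^= 1  # flip the bit the syndrome points at
    return [code[3], code[5], code[6], code[7]], syndrome


if __name__ == "__main__":
    word = hamming74_encode([1, 0, 1, 1])
    word[4] ^= 1  # corrupt position 5
    print(hamming74_decode(word))
```

Two or more flipped bits in one block exceed the capability of this code and would be miscorrected, which is why stronger codes are used on noisier channels.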

Shannon's theorem is an important theorem in forward error correction, and describes the maximum information rate at which reliable communication is possible over a channel that has a certain error probability or signal-to-noise ratio (SNR). This strict upper limit is expressed in terms of the channel capacity. More specifically, the theorem says that there exist codes such that with increasing encoding length the probability of error on a discrete memoryless channel can be made arbitrarily small, provided that the code rate is smaller than the channel capacity. The code rate is defined as the fraction ''k/n'' of ''k'' source symbols and ''n'' encoded symbols.

The actual maximum code rate allowed depends on the error-correcting code used, and may be lower. This is because Shannon's proof was only of existential nature, and did not show how to construct codes which are both optimal and have efficient encoding and decoding algorithms.
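To make the relationship between code rate and capacity concrete, the sketch below uses the binary symmetric channel as an example of a discrete memoryless channel. Its capacity is 1 − H(p) bits per channel use, where p is the bit-flip probability and H is the binary entropy function. The choice of channel model and the function names are assumptions made for illustration.

```python
import math


def binary_entropy(p: float) -> float:
    """Binary entropy H(p) in bits; defined as 0 at p = 0 and p = 1."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(p: float) -> float:
    """Capacity of a binary symmetric channel with crossover probability p."""
    return 1.0 - binary_entropy(p)


def code_rate(k: int, n: int) -> float:
    """Code rate k/n: k source symbols carried by n encoded symbols."""
    return k / n


def rate_below_capacity(k: int, n: int, p: float) -> bool:
    """True if the rate k/n satisfies the condition of Shannon's theorem."""
    return code_rate(k, n) < bsc_capacity(p)


if __name__ == "__main__":
    for p in (0.01, 0.05, 0.2):
        print(p, round(bsc_capacity(p), 3), rate_below_capacity(4, 7, p))
```

A rate below capacity only means that good codes of that rate exist for long block lengths; it says nothing about how a particular short code such as a rate-4/7 Hamming code performs on that channel.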

Hybrid schemes

Hybrid ARQ is a combination of ARQ and forward error correction. There are two basic approaches:

* Messages are always transmitted with FEC parity data (and error-detection redundancy). A receiver decodes a message using the parity information, and requests retransmission using ARQ only if the parity data was not sufficient for successful decoding (identified through a failed integrity check).

* Messages are transmitted without parity data (only with error-detection information). If a receiver detects an error, it requests FEC information from the transmitter using ARQ, and uses it to reconstruct the original message.

The latter approach is particularly attractive on an erasure channel when using a rateless erasure code.

Error detection schemes

Error detection is most commonly realized using a suitable hash function (or specifically, a checksum, cyclic redundancy check or other algorithm). A hash function adds a fixed-length ''tag'' to a message, which enables receivers to verify the delivered message by recomputing the tag and comparing it with the one provided.

There is a wide variety of hash function designs. However, some are in particularly widespread use because of either their simplicity or their suitability for detecting certain kinds of errors (e.g., the cyclic redundancy check's performance in detecting burst errors).

Minimum distance coding

A random-error-correcting code based on minimum distance coding can provide a strict guarantee on the number of detectable errors, but it may not protect against a preimage attack.

Repetition codes

A repetition code is a coding scheme that repeats the bits across a channel to achieve error-free communication. Given a stream of data to be transmitted, the data are divided into blocks of bits. Each block is transmitted some predetermined number of times. For example, to send the bit pattern "1011", the four-bit block can be repeated three times, thus producing "1011 1011 1011". If this twelve-bit pattern was received as "1010 1011 1011" – where the first block is unlike the other two – an error has occurred.

A repetition code is very inefficient, and can be susceptible to problems if the error occurs in exactly the same place for each group (e.g., "1010 1010 1010" in the previous example would be detected as correct). The advantage of repetition codes is that they are extremely simple, and are in fact used in some transmissions of numbers stations.
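The "1011" example above can be reproduced with a short sketch. Detection works by comparing the received copies; the bitwise majority vote in `majority_decode` is a common extension that turns detection into correction and is added here as an assumption, not something the text above specifies. All function names are illustrative.

```python
from typing import List


def repeat_encode(block: str, copies: int = 3) -> List[str]:
    """Transmit the same block a fixed number of times."""
    return [block] * copies


def detect_error(received: List[str]) -> bool:
    """An error is detected when the received copies are not all identical."""
    return len(set(received)) > 1


def majority_decode(received: List[str]) -> str:
    """Recover each bit by majority vote across the copies (odd copy count)."""
    decoded = []
    for bits in zip(*received):
        ones = sum(bit == "1" for bit in bits)
        decoded.append("1" if 2 * ones > len(bits) else "0")
    return "".join(decoded)


if __name__ == "__main__":
    received = ["1010", "1011", "1011"]
    print(detect_error(received), majority_decode(received))
    # The same error in every copy goes unnoticed:
    print(detect_error(["1010", "1010", "1010"]))
```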

Parity bit

A ''parity bit'' is a bit that is added to a group of source bits to ensure that the number of set bits (i.e., bits with value 1) in the outcome is even or odd. It is a very simple scheme that can be used to detect single or any other odd number (i.e., three, five, etc.) of errors in the output. An even number of flipped bits will make the parity bit appear correct even though the data is erroneous. Parity bits added to each "word" sent are called transverse redundancy checks, while those added at the end of a stream of "words" are called longitudinal redundancy checks. For example, if each of a series of m-bit "words" has a parity bit added, showing whether there were an odd or even number of ones in that word, any word with a single error in it will be detected. It will not be known where in the word the error is, however. If, in addition, after each stream of n words a parity sum is sent, each bit of which shows whether there were an odd or even number of ones at that bit-position sent in the most recent group, the exact position of the error can be determined and the error corrected. This method is only guaranteed to be effective, however, if there is no more than one error in every group of n words. With more error correction bits, more errors can be detected and in some cases corrected.

There are also other bit-grouping techniques.
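The combination of per-word parity and a parity sum over each group of words, as described above, can be sketched as follows. The sketch assumes even parity and represents words as lists of bits; the function names are hypothetical.

```python
from typing import List, Optional, Tuple

Block = List[List[int]]


def parity(bits) -> int:
    """Return 0 if the number of set bits is even, 1 if it is odd."""
    return sum(bits) % 2


def encode_block(words: Block) -> Block:
    """Append an even-parity bit to each word, then a final parity word."""
    rows = [word + [parity(word)] for word in words]
    column_parity = [parity(column) for column in zip(*rows)]
    return rows + [column_parity]


def correct_single_error(block: Block) -> Tuple[Block, Optional[Tuple[int, int]]]:
    """Locate and fix one flipped bit; return (block, position or None)."""
    fixed = [row[:] for row in block]
    bad_rows = [i for i, row in enumerate(fixed) if parity(row) != 0]
    bad_cols = [j for j, col in enumerate(zip(*fixed)) if parity(col) != 0]
    if not bad_rows and not bad_cols:
        return fixed, None
    if len(bad_rows) == 1 and len(bad_cols) == 1:
        i, j = bad_rows[0], bad_cols[0]
        fixed[i][j] ^= 1  # the failing row and column intersect at the error
        return fixed, (i, j)
    raise ValueError("more than one error in the group; cannot correct")


if __name__ == "__main__":
    sent = encode_block([[1, 0, 1, 1], [0, 1, 1, 0], [1, 1, 1, 1]])
    received = [row[:] for row in sent]
    received[1][2] ^= 1
    print(correct_single_error(received)[1])
```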

Checksum

A ''checksum'' of a message is a modular arithmetic sum of message code words of a fixed word length (e.g., byte values). The sum may be negated by means of a ones'-complement operation prior to transmission to detect unintentional all-zero messages.
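A minimal sketch of such a checksum, assuming byte-sized code words and a modulus of 256 (both chosen only for illustration), might look like this:

```python
def checksum8(message: bytes) -> int:
    """Sum the byte values modulo 256, then invert the bits of the result."""
    total = sum(message) % 256
    return total ^ 0xFF  # ones' complement of the 8-bit sum


def verify8(message: bytes, checksum: int) -> bool:
    """Recompute the checksum and compare it with the received value."""
    return checksum8(message) == checksum


if __name__ == "__main__":
    msg = b"\x01\x02\x03"
    tag = checksum8(msg)
    print(hex(tag), verify8(msg, tag))
    # Because of the inversion, an all-zero message with an all-zero
    # checksum field does not verify:
    print(verify8(b"\x00\x00\x00", 0x00))
```

A plain sum does not depend on word order, so this sketch cannot detect reordered bytes; that is one reason position-sensitive schemes such as CRCs are often preferred.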

Checksum schemes include parity bits, check digits, and longitudinal redundancy checks. Some checksum schemes, such as the Damm algorithm, the Luhn algorithm, and the Verhoeff algorithm, are specifically designed to detect errors commonly introduced by humans in writing down or remembering identification numbers.
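As an illustration of a check-digit scheme, here is a short sketch of the Luhn algorithm: starting from the rightmost digit of the payload, every second digit is doubled (subtracting 9 when the result exceeds 9), and the check digit is chosen so that the total is a multiple of 10. The function names are illustrative, and the sample number is a widely used test value rather than a real account number.

```python
def luhn_check_digit(partial: str) -> int:
    """Compute the check digit for a number that does not yet include one."""
    total = 0
    for index, char in enumerate(reversed(partial)):
        digit = int(char)
        if index % 2 == 0:  # rightmost payload digit and every second one
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return (10 - total % 10) % 10


def luhn_is_valid(number: str) -> bool:
    """Validate a full number whose last digit is the check digit."""
    total = 0
    for index, char in enumerate(reversed(number)):
        digit = int(char)
        if index % 2 == 1:  # the check digit itself is not doubled
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


if __name__ == "__main__":
    payload = "7992739871"
    full = payload + str(luhn_check_digit(payload))
    print(full, luhn_is_valid(full))
```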

Cyclic redundancy check

A ''cyclic redundancy check'' (CRC) is a non-secure hash function designed to detect accidental changes to digital data in computer networks. It is not suitable for detecting maliciously introduced errors. It is characterized by specification of a ''generator polynomial'', which is used as the divisor in a polynomial long division over a finite field, taking the input data as the dividend. The remainder becomes the result.
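The polynomial long division can be sketched directly on bit strings, where each bit is a coefficient over GF(2) and subtraction is XOR. The example below uses the small generator 1011 (x³ + x + 1) purely for illustration; practical CRCs use longer standardized polynomials and often add bit reflection or initial values, none of which is modelled here. The function names are hypothetical.

```python
def poly_mod(value: int, nbits: int, generator: int) -> int:
    """Remainder of value (nbits wide) divided by generator over GF(2)."""
    gen_len = generator.bit_length()
    for shift in range(nbits - gen_len, -1, -1):
        # If the current leading bit is set, subtract (XOR) the aligned divisor.
        if value & (1 << (shift + gen_len - 1)):
            value ^= generator << shift
    return value


def crc_remainder(data_bits: str, generator_bits: str) -> str:
    """Append len(generator) - 1 zero bits to the data and return the remainder."""
    n = len(generator_bits) - 1
    dividend = int(data_bits, 2) << n
    remainder = poly_mod(dividend, len(data_bits) + n, int(generator_bits, 2))
    return format(remainder, "0{}b".format(n))


def crc_check(codeword_bits: str, generator_bits: str) -> bool:
    """A code word (data followed by its CRC) leaves a zero remainder."""
    return poly_mod(int(codeword_bits, 2), len(codeword_bits),
                    int(generator_bits, 2)) == 0


if __name__ == "__main__":
    data, generator = "11010011101100", "1011"
    crc = crc_remainder(data, generator)
    print(crc, crc_check(data + crc, generator))
```

The receiver runs the same division over the data with the CRC appended; any non-zero remainder signals that the frame was altered in transit.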

A CRC has properties that make it well suited for detecting burst errors. CRCs are particularly easy to implement in hardware and are therefore commonly used in computer networks and storage devices such as hard disk drives.

The parity bit can be seen as a special-case 1-bit CRC.

Cryptographic hash function

The output of a ''cryptographic hash function'', also known as a ''message digest'', can provide strong assurances about data integrity, whether changes of the data are accidental (e.g., due to transmission errors) or maliciously introduced. Any modification to the data will likely be detected through a mismatching hash value. Furthermore, given some hash value, it is typically infeasible to find some input data (other than the one given) that will yield the same hash value. If an attacker can change not only the message but also the hash value, then a ''keyed hash'' or message authentication code (MAC) can be used for additional security. Without knowing the key, it is infeasible for the attacker to calculate the correct keyed hash value for a modified message.
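The difference between a plain digest and a keyed hash can be sketched with Python's standard `hashlib` and `hmac` modules. SHA-256 and HMAC are used here as assumptions for illustration; the text above does not prescribe a particular function, and the key and messages are made-up sample values.

```python
import hashlib
import hmac


def digest(message: bytes) -> str:
    """Unkeyed message digest: anyone can recompute it for any message."""
    return hashlib.sha256(message).hexdigest()


def make_tag(key: bytes, message: bytes) -> bytes:
    """Keyed hash (HMAC-SHA-256): requires the shared secret key."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_tag(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(make_tag(key, message), tag)


if __name__ == "__main__":
    key = b"shared-secret"          # hypothetical key
    message = b"pay 10 to alice"    # hypothetical message
    tag = make_tag(key, message)
    print(verify_tag(key, message, tag))             # unchanged message
    print(verify_tag(key, b"pay 99 to eve", tag))    # modified message
    print(digest(message) == digest(b"pay 99 to eve"))
```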

Error correction code

Any error-correcting code can be used for error detection. A code with ''minimum Hamming distance'', ''d'', can detect up to ''d'' − 1 errors in a code word. Using minimum-distance-based error-correcting codes for error detection can be suitable if a strict limit on the minimum number of errors to be detected is desired.

Codes with minimum Hamming distance ''d'' = 2 are degenerate cases of error-correcting codes, and can be used to detect single errors. The parity bit is an example of a single-error-detecting code.
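The relationship between minimum distance and detection capability can be checked by brute force on small codes. The sketch below computes the minimum Hamming distance of a list of code words and derives the number of detectable errors, ''d'' − 1. The correctable count ⌊(''d'' − 1)/2⌋ is a standard coding-theory result that is included as an addition, since the text above only states the detection bound.

```python
from itertools import combinations
from typing import Sequence


def hamming_distance(a: str, b: str) -> int:
    """Number of positions at which two equal-length words differ."""
    if len(a) != len(b):
        raise ValueError("words must have equal length")
    return sum(x != y for x, y in zip(a, b))


def minimum_distance(code: Sequence[str]) -> int:
    """Smallest Hamming distance between any two distinct code words."""
    return min(hamming_distance(a, b) for a, b in combinations(code, 2))


def detectable_errors(d: int) -> int:
    """A code with minimum distance d detects up to d - 1 errors."""
    return d - 1


def correctable_errors(d: int) -> int:
    """Standard result: up to floor((d - 1) / 2) errors can be corrected."""
    return (d - 1) // 2


if __name__ == "__main__":
    even_parity = ["000", "011", "101", "110"]  # 2 data bits + parity bit
    repetition = ["000", "111"]                 # 1 data bit sent three times
    for code in (even_parity, repetition):
        d = minimum_distance(code)
        print(d, detectable_errors(d), correctable_errors(d))
```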

Applications

Applications that require low latency (such as telephone conversations) cannot use automatic repeat request (ARQ); they must use forward error correction (FEC). By the time an ARQ system discovers an error and re-transmits it, the re-sent data will arrive too late to be usable.

Applications where the transmitter immediately forgets the information as soon as it is sent (such as most television cameras) cannot use ARQ; they must use FEC because when an error occurs, the original data is no longer available.

Applications that use ARQ must have a return channel; applications having no return channel cannot use ARQ.

Applications that require extremely low error rates (such as digital money transfers) must use ARQ due to the possibility of uncorrectable errors with FEC.

Reliability and inspection engineering also make use of the theory of error-correcting codes.

Internet

In a typical TCP/IP stack, error control is performed at multiple levels:

* Each Ethernet frame uses CRC-32 error detection. Frames with detected errors are discarded by the receiver hardware.

* The IPv4 header contains a checksum protecting the contents of the header. Packets with incorrect checksums are dropped within the network or at the receiver.

* The checksum was omitted from the IPv6 header in order to minimize processing costs in network routing and because current link layer technology is assumed to provide sufficient error detection (see also RFC 3819).

* UDP has an optional checksum covering the payload and addressing information in the UDP and IP headers. Packets with incorrect checksums are discarded by the network stack. The checksum is optional under IPv4, and required under IPv6. When omitted, it is assumed the data-link layer provides the desired level of error protection.

* TCP provides a checksum for protecting the payload and addressing information in the TCP and IP headers. Packets with incorrect checksums are discarded by the network stack, and eventually get retransmitted using ARQ, either explicitly (such as through three-way handshake) or implicitly due to a timeout.
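The checksum carried in the IPv4, UDP and TCP headers listed above is the 16-bit ones' complement sum described in RFC 1071. The following sketch computes it for an example 20-byte IPv4 header; the header bytes are illustrative sample values, and the function name `internet_checksum` is an assumption of this example. The last lines use Python's `zlib.crc32`, which implements the same CRC-32 polynomial as Ethernet, although the exact bit ordering of a frame check sequence on the wire is not modelled here.

```python
import zlib


def internet_checksum(data: bytes) -> int:
    """16-bit ones' complement of the ones' complement sum (RFC 1071)."""
    if len(data) % 2:
        data += b"\x00"                          # pad odd-length input
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]    # add big-endian 16-bit words
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold carries back in
    return ~total & 0xFFFF


# Example IPv4 header with the checksum field (bytes 10-11) set to zero.
header = bytes.fromhex("45000073000040004011" "0000" "c0a80001c0a800c7")
checksum = internet_checksum(header)

# The receiver recomputes the checksum over the whole header, including the
# checksum field; a result of zero means no error was detected.
filled = header[:10] + checksum.to_bytes(2, "big") + header[12:]
corrupted = bytes([filled[0] ^ 0x04]) + filled[1:]

print(hex(checksum), internet_checksum(filled), internet_checksum(corrupted))

# CRC-32 of the conventional test string "123456789".
crc = zlib.crc32(b"123456789")
print(hex(crc))
```

The checksum is cheap to compute but weaker than a CRC: for example, it cannot detect two 16-bit words being swapped, since addition is commutative.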

Deep-space telecommunications

The development of error-correction codes was tightly coupled with the history of deep-space missions due to the extreme dilution of signal power over interplanetary distances, and the limited power availability aboard space probes. Whereas early missions sent their data uncoded, starting in 1968, digital error correction was implemented in the form of (sub-optimally decoded) convolutional codes and Reed–Muller codes (K. Andrews et al., ''The Development of Turbo and LDPC Codes for Deep-Space Applications'', Proceedings of the IEEE, Vol. 95, No. 11, Nov. 2007). The Reed–Muller code was well suited to the noise the spacecraft was subject to (approximately matching a bell curve), and was implemented for the Mariner spacecraft and used on missions between 1969 and 1977.

The Voyager 1 and Voyager 2 missions, which started in 1977, were designed to deliver color imaging and scientific information from Jupiter and Saturn. This resulted in increased coding requirements, and thus, the spacecraft were supported by (optimally Viterbi-decoded) convolutional codes that could be concatenated with an outer Golay (24,12,8) code. The Voyager 2 craft additionally supported an implementation of a Reed–Solomon code. The concatenated Reed–Solomon–Viterbi (RSV) code allowed for very powerful error correction, and enabled the spacecraft's extended journey to Uranus and Neptune. After ECC system upgrades in 1989, both craft used V2 RSV coding.

The Consultative Committee for Space Data Systems currently recommends usage of error correction codes with performance similar to the Voyager 2 RSV code as a minimum. Concatenated codes are increasingly falling out of favor with space missions, and are replaced by more powerful codes such as turbo codes or LDPC codes.

The different kinds of deep space and orbital missions that are conducted suggest that trying to find a one-size-fits-all error correction system will be an ongoing problem. For missions close to Earth, the nature of the noise in the communication channel is different from that which a spacecraft on an interplanetary mission experiences. Additionally, as a spacecraft increases its distance from Earth, the problem of correcting for noise becomes more difficult.

Satellite broadcasting

The demand for satellite transponder bandwidth continues to grow, fueled by the desire to deliver television (including new channels and high-definition television) and IP data. Transponder availability and bandwidth constraints have limited this growth. Transponder capacity is determined by the selected modulation scheme and the proportion of capacity consumed by FEC.
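The dependence of capacity on modulation and FEC overhead can be made concrete with a short calculation: the useful bit rate is roughly the symbol rate times the bits carried per symbol times the code rate. The symbol rate and code rates below are illustrative values, not taken from any particular transponder, and further overheads such as framing or an outer code are ignored.

```python
from fractions import Fraction


def net_bit_rate(symbol_rate, bits_per_symbol, code_rate):
    """Useful bit rate in bit/s left after FEC redundancy is removed."""
    return symbol_rate * bits_per_symbol * code_rate


symbol_rate = 27_500_000          # symbols per second (assumed value)

# QPSK carries 2 bits per symbol; a rate-3/4 code spends 1/4 of them on FEC.
qpsk_3_4 = net_bit_rate(symbol_rate, 2, Fraction(3, 4))

# 8PSK carries 3 bits per symbol; a rate-2/3 code spends 1/3 of them on FEC.
psk8_2_3 = net_bit_rate(symbol_rate, 3, Fraction(2, 3))

print(float(qpsk_3_4) / 1e6, "Mbit/s")
print(float(psk8_2_3) / 1e6, "Mbit/s")
```

A denser modulation raises the raw rate but generally needs a stronger signal or a lower code rate to keep the error rate acceptable, so the two parameters are chosen together.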

Data storage

Error detection and correction codes are often used to improve the reliability of data storage media. A parity track capable of detecting single-bit errors was present on the first magnetic tape data storage in 1951. The optimal rectangular code used in group coded recording tapes not only detects but also corrects single-bit errors. Some file formats, particularly archive formats, include a checksum (most often CRC32) to detect corruption and truncation and can employ redundancy or parity files to recover portions of corrupted data. Reed–Solomon codes are used in compact discs to correct errors caused by scratches.
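The idea behind a rectangular code can be sketched with simple two-dimensional parity: one parity bit per row and one per column, so that a single flipped data bit is located at the intersection of the failing row and the failing column. This is a generic illustration, not the exact layout used on group coded recording tapes, and the function names are assumptions of this example. Errors in the parity bits themselves are not handled.

```python
def encode_rect(rows):
    """Return (row_parities, column_parities) for equal-length rows of bits."""
    row_par = [sum(r) % 2 for r in rows]
    col_par = [sum(col) % 2 for col in zip(*rows)]
    return row_par, col_par


def correct_single_error(rows, row_par, col_par):
    """Fix at most one flipped data bit in place; return its (row, col) or None."""
    new_row, new_col = encode_rect(rows)
    bad_rows = [i for i, (a, b) in enumerate(zip(row_par, new_row)) if a != b]
    bad_cols = [j for j, (a, b) in enumerate(zip(col_par, new_col)) if a != b]
    if len(bad_rows) == 1 and len(bad_cols) == 1:
        i, j = bad_rows[0], bad_cols[0]
        rows[i][j] ^= 1            # flip the located bit back
        return (i, j)
    return None


block = [[1, 0, 1, 1],
         [0, 1, 1, 0],
         [1, 1, 0, 0]]
row_par, col_par = encode_rect(block)

damaged = [row[:] for row in block]
damaged[1][2] ^= 1                 # simulate a single-bit read error

location = correct_single_error(damaged, row_par, col_par)
print(location, damaged == block)
```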

Modern hard drives use Reed–Solomon codes to detect and correct minor errors in sector reads, and to recover corrupted data from failing sectors and store that data in the spare sectors. RAID systems use a variety of error correction techniques to recover data when a hard drive completely fails. Filesystems such as ZFS or Btrfs, as well as some RAID implementations, support data scrubbing and resilvering, which allows bad blocks to be detected and (hopefully) recovered before they are used. The recovered data may be re-written to exactly the same physical location, to spare blocks elsewhere on the same piece of hardware, or the data may be rewritten onto replacement hardware.
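A scrubbing pass can be pictured with a toy model of two mirrored copies and a list of stored checksums. This is only a sketch under simplifying assumptions: real filesystems use their own checksum algorithms and on-disk layouts, CRC-32 is used here merely because it is available in the standard library, and the `scrub` function and its return format are invented for this example.

```python
import zlib


def scrub(primary, mirror, checksums):
    """Verify each block against its stored CRC-32 and repair from the other copy."""
    events = []
    for i, expected in enumerate(checksums):
        ok_primary = zlib.crc32(primary[i]) == expected
        ok_mirror = zlib.crc32(mirror[i]) == expected
        if ok_primary and not ok_mirror:
            mirror[i] = primary[i]           # rewrite the bad mirror block
            events.append(("mirror", i))
        elif ok_mirror and not ok_primary:
            primary[i] = mirror[i]           # rewrite the bad primary block
            events.append(("primary", i))
        elif not ok_primary and not ok_mirror:
            events.append(("unrecoverable", i))
    return events


blocks = [b"alpha", b"beta", b"gamma"]
checksums = [zlib.crc32(b) for b in blocks]

primary = list(blocks)
mirror = list(blocks)
primary[1] = b"bet4"                         # silent corruption on one copy

events = scrub(primary, mirror, checksums)
print(events, primary == blocks)
```

Running such a pass periodically catches a bad block while a good copy still exists, rather than when the data is next needed.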

Error-correcting memory

Dynamic random-access memory (DRAM) may provide stronger protection against soft errors by relying on error-correcting codes. Such error-correcting memory, known as ''ECC'' or ''EDAC-protected'' memory, is particularly desirable for mission-critical applications, such as scientific computing, finance, and medicine, as well as for extraterrestrial applications, owing to the increased radiation in space.

Error-correcting memory controllers traditionally use Hamming codes, although some use triple modular redundancy. Interleaving associates physically neighboring bits with different words, so that the effect of a single cosmic ray upsetting multiple neighboring bits is distributed across multiple words. As long as a single-event upset (SEU) does not exceed the error threshold (e.g., a single error) in any particular word between accesses, it can be corrected (e.g., by a single-bit error-correcting code), and the illusion of an error-free memory system may be maintained.
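Single-bit correction of the kind described above can be illustrated with the small Hamming(7,4) code. Actual memory controllers typically protect much wider words and often add an extra parity bit for double-error detection, so this is a minimal sketch of the principle rather than a model of any particular controller; the function names are assumptions of this example.

```python
def hamming74_encode(data):
    """Encode 4 data bits as [p1, p2, d1, p4, d2, d3, d4] (positions 1 to 7)."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4      # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4      # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4      # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct up to one flipped bit; return (data_bits, error_position or 0)."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s4    # syndrome gives the 1-based error position
    if position:
        c[position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]], position


stored = hamming74_encode([1, 0, 1, 1])

upset = stored.copy()
upset[4] ^= 1                          # simulate a single-event upset at position 5

recovered, position = hamming74_decode(upset)
print(stored, recovered, position)
```

Two upsets in the same word would produce a syndrome pointing at the wrong bit, which is why interleaving neighboring bits into different words matters.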

In addition to hardware providing features required for ECC memory to operate, operating systems usually contain related reporting facilities that are used to provide notifications when soft errors are transparently recovered. One example is the Linux kernel's ''EDAC'' subsystem (previously known as ''Bluesmoke''), which collects the data from error-checking-enabled components inside a computer system; besides collecting and reporting back the events related to ECC memory, it also supports other checksumming errors, including those detected on the PCI bus. A few systems also support memory scrubbing to catch and correct errors early before they become unrecoverable.
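On Linux systems where an EDAC driver is loaded, the counters it collects are usually exposed through sysfs. The sketch below reads the corrected (`ce_count`) and uncorrected (`ue_count`) error counters of each memory controller. The default path is the commonly documented location, but whether it exists depends on the kernel, the hardware and the loaded driver, so the function simply returns an empty result when nothing is found; the function name is an assumption of this example.

```python
from pathlib import Path


def read_edac_counts(root="/sys/devices/system/edac/mc"):
    """Return {controller: {"ce_count": int, "ue_count": int}} from an EDAC sysfs tree."""
    counts = {}
    base = Path(root)
    if not base.is_dir():
        return counts                  # no EDAC support visible on this system
    for controller in sorted(base.glob("mc[0-9]*")):
        entry = {}
        for name in ("ce_count", "ue_count"):
            try:
                entry[name] = int((controller / name).read_text().strip())
            except (OSError, ValueError):
                entry[name] = None     # counter missing or unreadable
        counts[controller.name] = entry
    return counts


for controller, entry in read_edac_counts().items():
    print(controller, "corrected:", entry["ce_count"],
          "uncorrected:", entry["ue_count"])
```

A steadily rising corrected-error count is the kind of notification such reporting facilities are meant to surface before errors become unrecoverable.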

See also

* Berger code

* Burst error-correcting code

* ECC memory, a type of computer data storage

* Link adaptation

* List of hash functions

Further reading

* Shu Lin; Daniel J. Costello, Jr. (1983). ''Error Control Coding: Fundamentals and Applications''. Prentice Hall. ISBN 0-13-283796-X.

SoftECC: A System for Software Memory Integrity Checking

A Tunable, Software-based DRAM Error Detection and Correction Library for HPC

Detection and Correction of Silent Data Corruption for Large-Scale High-Performance Computing

External links

The on-line textbook: Information Theory, Inference, and Learning Algorithms, by David J.C. MacKay, contains chapters on elementary error-correcting codes; on the theoretical limits of error-correction; and on the latest state-of-the-art error-correcting codes, including low-density parity-check codes, turbo codes, and fountain codes.

ECC Page - implementations of popular ECC encoding and decoding routines