A decision tree is a decision support tool that uses a tree-like model of decisions and their possible consequences, including chance event outcomes, resource costs, and utility. It is one way to display an algorithm that only contains conditional control statements.

Decision trees are commonly used in operations research, specifically in decision analysis, to help identify a strategy most likely to reach a goal, but are also a popular tool in machine learning.

Overview

A decision tree is a flowchart-like structure in which each internal node represents a "test" on an attribute (e.g. whether a coin flip comes up heads or tails), each branch represents the outcome of the test, and each leaf node represents a class label (decision taken after computing all attributes). The paths from root to leaf represent classification rules.

In decision analysis, a decision tree and the closely related influence diagram are used as a visual and analytical decision support tool, where the expected values (or expected utility) of competing alternatives are calculated.

A decision tree consists of three types of nodes:

# Decision nodes – typically represented by squares

# Chance nodes – typically represented by circles

# End nodes – typically represented by triangles
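As a rough illustration of how the three node types combine when expected values are calculated, the sketch below "rolls back" a small tree: end nodes return their payoff, chance nodes return the probability-weighted average of their branches, and decision nodes return the value of their best branch. The class names, option names, payoffs, and probabilities are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class End:
    payoff: float


@dataclass
class Chance:
    branches: list  # list of (probability, child node) pairs


@dataclass
class Decision:
    options: dict  # option name -> child node


def expected_value(node):
    """Roll the tree back from the leaves to the given node."""
    if isinstance(node, End):
        return node.payoff
    if isinstance(node, Chance):
        return sum(p * expected_value(child) for p, child in node.branches)
    # Decision node: assume the decision maker picks the highest-valued branch.
    return max(expected_value(child) for child in node.options.values())


def best_option(node):
    """Name of the option with the highest expected value at a decision node."""
    return max(node.options, key=lambda name: expected_value(node.options[name]))


# Hypothetical tree: option "A" is a gamble, option "B" is a sure payoff.
tree = Decision({
    "A": Chance([(0.5, End(100.0)), (0.5, End(0.0))]),
    "B": End(40.0),
})
print(best_option(tree), expected_value(tree))
```

With these made-up numbers the gamble has an expected value of 50, which is higher than the sure payoff of 40. A risk-averse decision maker could be modelled by replacing the payoffs with utilities.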

Decision trees are commonly used in operations research and operations management. If, in practice, decisions have to be taken online with no recall under incomplete knowledge, a decision tree should be paralleled by a probability model as a best choice model or online selection model algorithm. Another use of decision trees is as a descriptive means for calculating conditional probabilities.

Decision trees, influence diagrams, utility functions, and other decision analysis tools and methods are taught to undergraduate students in schools of business, health economics, and public health, and are examples of operations research or management science methods.

Decision-tree building blocks

Decision-tree elements

Drawn from left to right, a decision tree has only burst nodes (splitting paths) but no sink nodes (converging paths). Used manually, they can therefore grow very big and are then often hard to draw fully by hand. Traditionally, decision trees have been created manually, as the aside example shows, although increasingly, specialized software is employed.

Decision rules

The decision tree can be linearized into decision rules, where the outcome is the contents of the leaf node, and the conditions along the path form a conjunction in the if clause. In general, the rules have the form:

: ''if'' condition1 ''and'' condition2 ''and'' condition3 ''then'' outcome.

Decision rules can be generated by constructing association rules with the target variable on the right. They can also denote temporal or causal relations.
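The linearization described above can be sketched in a few lines. The nested-dictionary tree layout and the attribute names (`outlook`, `windy`) are assumptions made for this example, not a standard representation; each root-to-leaf path becomes one rule whose conditions are joined by "and".

```python
def tree_to_rules(node, conditions=()):
    """Yield one 'if ... then ...' rule per root-to-leaf path."""
    if not isinstance(node, dict):  # a leaf holds the outcome
        clause = " and ".join(conditions) if conditions else "true"
        yield f"if {clause} then {node}"
        return
    for answer, child in node["branches"].items():
        condition = f"{node['test']} = {answer}"
        yield from tree_to_rules(child, conditions + (condition,))


# Hypothetical tree: internal nodes are dicts, leaves are plain strings.
tree = {
    "test": "outlook",
    "branches": {
        "sunny": {"test": "windy", "branches": {"yes": "stay in", "no": "go out"}},
        "rainy": "stay in",
    },
}

rules = list(tree_to_rules(tree))
for rule in rules:
    print(rule)
```

Each leaf produces exactly one rule, so the number of rules equals the number of leaves.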

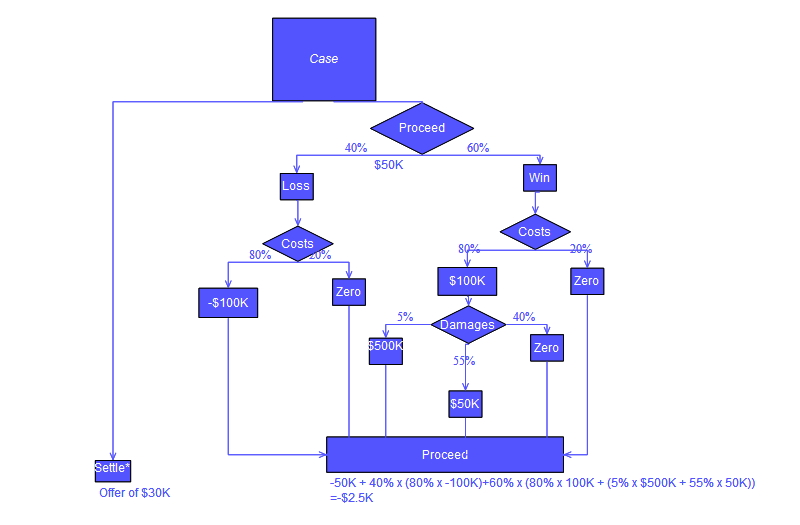

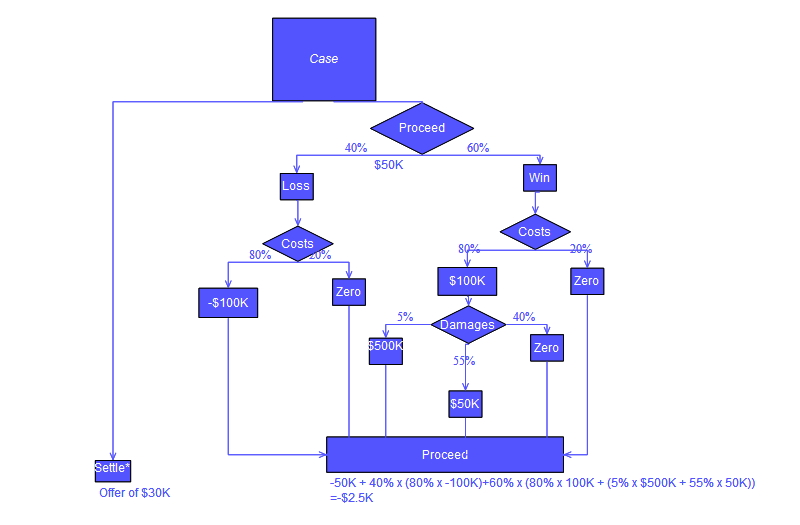

Decision tree using flowchart symbols

Commonly a decision tree is drawn using flowchart symbols as it is easier for many to read and understand. Note there is a conceptual error in the "Proceed" calculation of the tree shown below; the error relates to the calculation of "costs" awarded in a legal action.

Analysis example

Analysis can take into account the decision maker's (e.g., the company's) preference or utility function, for example:

The basic interpretation in this situation is that the company prefers B's risk and payoffs under realistic risk preference coefficients (greater than $400K—in that range of risk aversion, the company would need to model a third strategy, "Neither A nor B").

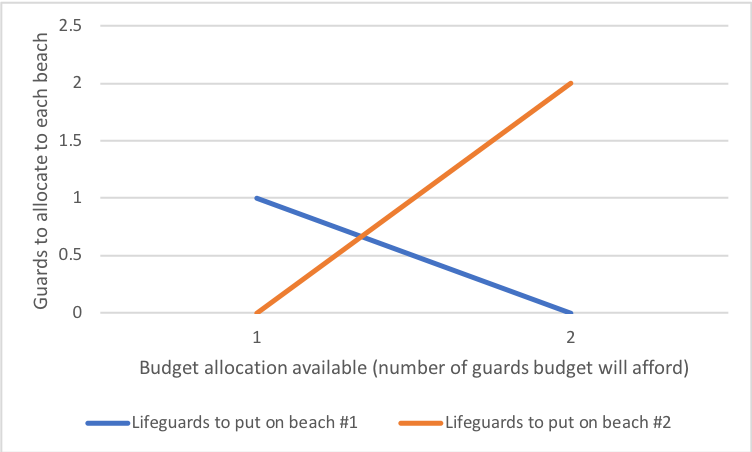

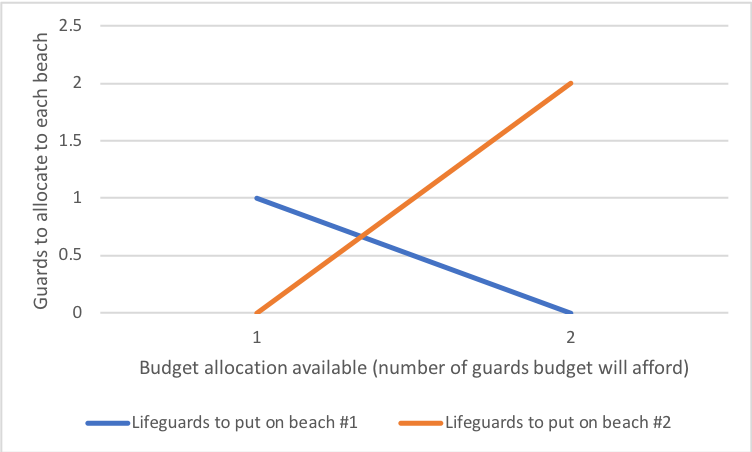

Another example, commonly used in operations research courses, is the distribution of lifeguards on beaches (a.k.a. the "Life's a Beach" example). The example describes two beaches with lifeguards to be distributed on each beach. There is a maximum budget ''B'' that can be distributed among the two beaches (in total), and using a marginal returns table, analysts can decide how many lifeguards to allocate to each beach.

In this example, a decision tree can be drawn to illustrate the principles of diminishing returns on beach #1.

The decision tree illustrates that when sequentially distributing lifeguards, placing a first lifeguard on beach #1 would be optimal if there is only the budget for 1 lifeguard. But if there is a budget for two guards, then placing both on beach #2 would prevent more overall drownings.
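The allocation logic can be sketched by enumerating every way of spending the budget. The returns table below is hypothetical: its numbers are invented so that the outcome matches the behaviour described in the text, and they are not the figures from the original example. The sketch also assumes the whole budget is spent and that the budget is at most two lifeguards.

```python
# Hypothetical cumulative drownings prevented by 0, 1 or 2 lifeguards on each beach.
PREVENTED = {
    "beach #1": [0, 3, 4],  # diminishing returns: the second guard adds only 1
    "beach #2": [0, 1, 5],
}


def best_allocation(budget):
    """Return (guards on beach #1, guards on beach #2, total drownings prevented)."""
    options = []
    for on_beach_1 in range(budget + 1):
        on_beach_2 = budget - on_beach_1
        total = PREVENTED["beach #1"][on_beach_1] + PREVENTED["beach #2"][on_beach_2]
        options.append((on_beach_1, on_beach_2, total))
    return max(options, key=lambda option: option[2])


print(best_allocation(1))
print(best_allocation(2))
```

With this table, a budget of one puts the single guard on beach #1, while a budget of two puts both guards on beach #2. Extending the first choice greedily would miss the second result, which is why the whole tree has to be considered.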

Influence diagram

Much of the information in a decision tree can be represented more compactly as an influence diagram, focusing attention on the issues and relationships between events.

Association rule induction

Decision trees can also be seen as generative models of induction rules from empirical data. An optimal decision tree is then defined as a tree that accounts for most of the data, while minimizing the number of levels (or "questions"). Several algorithms to generate such optimal trees have been devised, such as ID3/4/5, CLS, ASSISTANT, and CART.

Advantages and disadvantages

Among decision support tools, decision trees (and influence diagrams) have several advantages. Decision trees:

* Are simple to understand and interpret. People are able to understand decision tree models after a brief explanation.

* Have value even with little hard data. Important insights can be generated based on experts describing a situation (its alternatives, probabilities, and costs) and their preferences for outcomes.

* Help determine worst, best, and expected values for different scenarios.

* Use a white box model. If a given result is provided by a model, it can be traced back through the conditions along the path that produced it.

* Can be combined with other decision techniques.

* Can take the actions of more than one decision-maker into account.

Disadvantages of decision trees:

* They are unstable, meaning that a small change in the data can lead to a large change in the structure of the optimal decision tree.

* They are often relatively inaccurate. Many other predictors perform better with similar data. This can be remedied by replacing a single decision tree with a random forest of decision trees, but a random forest is not as easy to interpret as a single decision tree.

* For data including categorical variables with different numbers of levels, information gain in decision trees is biased in favor of those attributes with more levels.

* Calculations can get very complex, particularly if many values are uncertain and/or if many outcomes are linked.

Optimizing a decision tree

A few things should be considered when improving the accuracy of the decision tree classifier. The following are some possible optimizations to consider when looking to make sure the decision tree model produced makes the correct decision or classification. Note that these are only some of the things to consider, not all of them.

Increasing the number of levels of the tree

The accuracy of the decision tree can change based on the depth of the decision tree. In many cases, the tree's leaves are pure nodes. When a node is pure, it means that all the data in that node belongs to a single class. For example, if the classes in the data set are Cancer and Non-Cancer, a leaf node would be considered pure when all the sample data in the leaf node is part of only one class, either cancer or non-cancer. It is important to note that a deeper tree is not always better when optimizing the decision tree. A deeper tree can influence the runtime in a negative way: classifying a sample with the tree can become significantly slower, and the algorithm building the decision tree can also get significantly slower as the tree gets deeper. If the tree-building algorithm being used splits pure nodes, then a decrease in the overall accuracy of the tree classifier could be experienced. Occasionally, going deeper in the tree can cause an accuracy decrease in general, so it is very important to test modifying the depth of the decision tree and to select the depth that produces the best results. To summarize, observe the points below, where D is the depth of the tree.

Possible advantages of increasing the number D:

* Accuracy of the decision-tree classification model increases.

Possible disadvantages of increasing D:

* Runtime issues

* Decrease in accuracy in general

* Pure node splits while going deeper can cause issues.

The ability to test the differences in classification results when changing D is imperative. We must be able to easily change and test the variables that could affect the accuracy and reliability of the decision tree-model.
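One way to make the depth easy to change and test is to pass it as a parameter to the tree builder and loop over candidate values. The sketch below is a minimal, assumption-laden illustration: it builds trees on binary features with information gain, the data set is hypothetical (a sample is labelled "C" only when both M1 and M2 are present), and accuracy is measured on the training rows themselves, so it shows the mechanics rather than a sound evaluation. Here `levels` counts the levels of test nodes only, which is not the same convention as D in the text.

```python
from collections import Counter
from itertools import product
from math import log2


def entropy(labels):
    n = len(labels)
    return -sum(c / n * log2(c / n) for c in Counter(labels).values())


def build(rows, labels, features, levels):
    """Return a leaf label or a (feature, subtree_if_0, subtree_if_1) tuple."""
    majority = Counter(labels).most_common(1)[0][0]
    # Stop at the level limit, at pure nodes, or when no feature is left.
    if levels == 0 or len(set(labels)) == 1 or not features:
        return majority

    def gain(feature):
        parts = [[lab for row, lab in zip(rows, labels) if row[feature] == v]
                 for v in (0, 1)]
        remaining = sum(len(p) / len(labels) * entropy(p) for p in parts if p)
        return entropy(labels) - remaining

    best = max(features, key=gain)
    rest = [f for f in features if f != best]
    children = []
    for v in (0, 1):
        subset = [(row, lab) for row, lab in zip(rows, labels) if row[best] == v]
        if not subset:
            children.append(majority)
            continue
        sub_rows, sub_labels = zip(*subset)
        children.append(build(list(sub_rows), list(sub_labels), rest, levels - 1))
    return (best, children[0], children[1])


def predict(tree, row):
    while isinstance(tree, tuple):
        feature, if_zero, if_one = tree
        tree = if_one if row[feature] else if_zero
    return tree


# Hypothetical data: every combination of three binary mutations.
FEATURES = ["M1", "M2", "M3"]
rows = [dict(zip(FEATURES, bits)) for bits in product((0, 1), repeat=3)]
labels = ["C" if row["M1"] and row["M2"] else "NC" for row in rows]


def accuracy_at(levels):
    tree = build(rows, labels, FEATURES, levels)
    hits = sum(predict(tree, row) == lab for row, lab in zip(rows, labels))
    return hits / len(rows)


accuracies = [accuracy_at(levels) for levels in range(4)]
print(accuracies)
```

On this toy data the accuracy stops improving once two levels of tests are allowed, because every node is pure by then. On real data the comparison should be made on held-out samples rather than on the training rows.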

The choice of node-splitting functions

The node splitting function used can have an impact on improving the accuracy of the decision tree. For example, using the information-gain function may yield better results than using the phi function. The phi function is known as a measure of "goodness" of a candidate split at a node in the decision tree. The information gain function is known as a measure of the "reduction in entropy". In the following, we will build two decision trees. One decision tree will be built using the phi function to split the nodes and one decision tree will be built using the information gain function to split the nodes.

The main advantages and disadvantages of information gain and phi function

* One major drawback of information gain is that the feature that is chosen as the next node in the tree tends to have more unique values.

* An advantage of information gain is that it tends to choose the most impactful features that are close to the root of the tree. It is a very good measure for deciding the relevance of some features.

* The phi function is also a good measure for deciding the relevance of some features based on "goodness".

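The information gain of a candidate split s at node t of a decision tree is the entropy of the node minus the entropy of the candidate split:

$$\text{Gain}(s, t) = H(t) - H(s, t)$$

Here H(t) is the entropy of the samples at node t, and H(s, t) is the entropy that remains after the candidate split s, commonly computed as the average of the child-node entropies weighted by the share of samples in each child.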

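The phi function of a candidate split s at node t is commonly written as:

$$\Phi(s, t) = 2 P_L P_R \sum_{j} \left| P(j \mid t_L) - P(j \mid t_R) \right|$$

where P_L and P_R are the proportions of the samples at node t that go to the left and right child nodes, and P(j | t_L) and P(j | t_R) are the proportions of class j in each child. The phi function is maximized when the chosen feature splits the samples in a way that produces homogeneous splits that have around the same number of samples in each split.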

We will set D, which is the depth of the decision tree we are building, to three (D = 3). We also have the following data set of cancer and non-cancer samples and the mutation features that the samples either have or do not have. If a sample has a feature mutation then the sample is positive for that mutation, and it will be represented by one. If a sample does not have a feature mutation then the sample is negative for that mutation, and it will be represented by zero.

To summarize, C stands for cancer and NC stands for non-cancer. The letter M stands for mutation, and if a sample has a particular mutation it will show up in the table as a one and otherwise zero.

Now, we can use the formulas to calculate the phi function values and information gain values for each M in the dataset. Once all the values are calculated the tree can be produced. The first thing to be done is to select the root node. In information gain and the phi function we consider the optimal split to be the mutation that produces the highest value for information gain or the phi function. Now assume that M1 has the highest phi function value and M4 has the highest information gain value. The M1 mutation will be the root of our phi function tree and M4 will be the root of our information gain tree. You can observe the root nodes below

Now, once we have chosen the root node we can split the samples into two groups based on whether a sample is positive or negative for the root node mutation. The groups will be called group A and group B. For example, if we use M1 to split the samples in the root node we get NC2 and C2 samples in group A and the rest of the samples NC4, NC3, NC1, C1 in group B.
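Both split measures can be checked by hand on the M1 split just described. The sketch below assumes that the six samples named above (C1, C2, NC1, NC2, NC3, NC4) make up the whole data set, and it uses the usual textbook definitions: information gain as parent entropy minus the weighted child entropies, and phi as 2 · P_L · P_R times the summed absolute differences in class proportions between the two groups. The function names are chosen for this example.

```python
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((labels.count(c) / n) * log2(labels.count(c) / n)
                for c in set(labels))


def information_gain(left, right):
    parent = left + right
    weighted = sum(len(g) / len(parent) * entropy(g) for g in (left, right))
    return entropy(parent) - weighted


def phi(left, right):
    n = len(left) + len(right)
    p_left, p_right = len(left) / n, len(right) / n
    q = sum(abs(left.count(c) / len(left) - right.count(c) / len(right))
            for c in set(left + right))
    return 2 * p_left * p_right * q


group_a = ["NC", "C"]              # NC2, C2
group_b = ["NC", "NC", "NC", "C"]  # NC4, NC3, NC1, C1

ig_m1 = information_gain(group_a, group_b)
phi_m1 = phi(group_a, group_b)
print(round(ig_m1, 4), round(phi_m1, 4))
```

Under these assumptions the M1 split has an information gain of about 0.044 bits and a phi value of about 0.222. Repeating the calculation for every mutation and taking the maximum of each measure gives the root node of each tree.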

Disregarding the mutation chosen for the root node, proceed to place the next best features that have the highest values for information gain or the phi function in the left or right child nodes of the decision tree. Once we choose the root node and the two child nodes for the tree of depth = 3 we can just add the leaves. The leaves will represent the final classification decision the model has produced based on the mutations a sample either has or does not have. The left tree is the decision tree we obtain from using information gain to split the nodes and the right tree is what we obtain from using the phi function to split the nodes.

Now assume the classification results from both trees are given using a confusion matrix.

Information gain confusion matrix:

Phi function confusion matrix:

The tree built using information gain reaches the same accuracy as the tree built using the phi function. When we classify the samples based on the model using information gain we get one true positive, one false positive, zero false negatives, and four true negatives. For the model using the phi function we get two true positives, zero false positives, one false negative, and three true negatives. The next step is to evaluate the effectiveness of the decision tree using some key metrics that will be discussed in the "Evaluating a decision tree" section below. These metrics can help determine the next steps to be taken when optimizing the decision tree.
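The accuracy comparison can be verified directly from the four counts quoted for each tree. This is a small sketch; the function and variable names are chosen for this example.

```python
def accuracy(tp, fp, fn, tn):
    """Share of all samples that were classified correctly."""
    return (tp + tn) / (tp + fp + fn + tn)


def sensitivity(tp, fn):
    """Share of actual positives that were classified as positive."""
    return tp / (tp + fn)


info_gain_accuracy = accuracy(tp=1, fp=1, fn=0, tn=4)
phi_accuracy = accuracy(tp=2, fp=0, fn=1, tn=3)
print(info_gain_accuracy, phi_accuracy)

# Equal accuracy does not mean the two trees make the same kinds of errors.
print(sensitivity(tp=1, fn=0), sensitivity(tp=2, fn=1))
```

Both trees classify five of six samples correctly, but the counts differ in which errors are made, which is why accuracy alone is not enough to compare them.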

Other techniques

The above information is not where it ends for building and optimizing a decision tree. There are many techniques for improving the decision tree classification models we build. One of the techniques is making our decision tree model from a bootstrapped dataset. The bootstrapped dataset helps remove the bias that occurs when building a decision tree model with the same data the model is tested with. The ability to leverage the power of random forests can also help significantly improve the overall accuracy of the model being built. This method generates many decisions from many decision trees and tallies up the votes from each decision tree to make the final classification. There are many techniques, but the main objective is to test building your decision tree model in different ways to make sure it reaches the highest performance level possible.
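The two ideas above, drawing a bootstrapped dataset and tallying votes across trees, can be sketched as follows. This is only an outline of the mechanics: the helper names are hypothetical, the sample identifiers reuse the names from the worked example, and the tree-building step itself is left out.

```python
import random
from collections import Counter


def bootstrap_sample(rows, rng):
    """Draw len(rows) rows with replacement from the original data set."""
    return [rng.choice(rows) for _ in rows]


def out_of_bag(rows, sample):
    """Rows that were never drawn; they can serve as held-out test data."""
    return [row for row in rows if row not in sample]


def majority_vote(predictions):
    """Tally one predicted label per tree and return the most common one."""
    return Counter(predictions).most_common(1)[0][0]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
rows = ["C1", "C2", "NC1", "NC2", "NC3", "NC4"]
sample = bootstrap_sample(rows, rng)
held_out = out_of_bag(rows, sample)
print(sample, held_out)

# Three hypothetical trees vote on one sample.
print(majority_vote(["C", "NC", "C"]))
```

A tree trained on `sample` can then be tested on `held_out`, so that the model is not evaluated on the rows it was built from.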

Evaluating a decision tree

It is important to know the measurements used to evaluate decision trees. The main metrics used are accuracy, sensitivity, specificity, precision, miss rate, false discovery rate, and false omission rate. All these measurements are derived from the number of true positives, false positives, true negatives, and false negatives obtained when running a set of samples through the decision tree classification model. Also, a confusion matrix can be made to display these results. All these main metrics tell something different about the strengths and weaknesses of the classification model built based on your decision tree. For example, a low sensitivity with high specificity could indicate the classification model built from the decision tree does not do well identifying cancer samples over non-cancer samples.

Let us take the confusion matrix below. The confusion matrix shows us the decision tree model classifier built gave 11 true positives, 1 false positive, 45 false negatives, and 105 true negatives.

We will now calculate the values accuracy, sensitivity, specificity, precision, miss rate, false discovery rate, and false omission rate.

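Accuracy:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} = \frac{11 + 105}{11 + 105 + 1 + 45} \approx 71.60\%$$

Sensitivity (TPR – true positive rate):

$$TPR = \frac{TP}{TP + FN} = \frac{11}{11 + 45} \approx 19.64\%$$

Specificity (TNR – true negative rate):

$$TNR = \frac{TN}{TN + FP} = \frac{105}{105 + 1} \approx 99.06\%$$

Precision (PPV – positive predictive value):

$$PPV = \frac{TP}{TP + FP} = \frac{11}{11 + 1} \approx 91.67\%$$

Miss Rate (FNR – false negative rate):

$$FNR = \frac{FN}{FN + TP} = \frac{45}{45 + 11} \approx 80.36\%$$

False discovery rate (FDR):

$$FDR = \frac{FP}{FP + TP} = \frac{1}{1 + 11} \approx 8.33\%$$

False omission rate (FOR):

$$FOR = \frac{FN}{FN + TN} = \frac{45}{45 + 105} = 30.00\%$$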
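The seven metrics listed above can be computed from the four confusion-matrix counts with a few lines of code. The sketch below uses the counts from the example (11 true positives, 1 false positive, 45 false negatives, 105 true negatives); the function name and dictionary keys are chosen for this example.

```python
def metrics(tp, fp, fn, tn):
    """Standard confusion-matrix metrics, returned as fractions between 0 and 1."""
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "precision": tp / (tp + fp),
        "miss_rate": fn / (fn + tp),
        "false_discovery_rate": fp / (fp + tp),
        "false_omission_rate": fn / (fn + tn),
    }


result = metrics(tp=11, fp=1, fn=45, tn=105)
for name, value in result.items():
    print(f"{name}: {value:.2%}")
```

Sensitivity and miss rate always add up to one, as do precision and false discovery rate, which gives a quick sanity check on any hand calculation.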

Once we have calculated the key metrics we can make some initial conclusions on the performance of the decision tree model built. The accuracy that we calculated was 71.60%. The accuracy value is a good start, but we would like to get our models as accurate as possible while maintaining the overall performance. The sensitivity value of 19.64% means that, out of all the samples that were actually positive for cancer, only 19.64% tested positive. The specificity value of 99.06% means that, out of all the samples that were actually negative for cancer, 99.06% tested negative. When it comes to sensitivity and specificity it is important to have a balance between the two values, so if we can decrease our specificity to increase the sensitivity that would prove to be beneficial. These are just a few examples of how to use these values and the meanings behind them to evaluate the decision tree model and improve upon the next iteration.

See also

* Behavior tree (artificial intelligence, robotics and control)

* Boosting (machine learning)

* Decision cycle

* Decision list

* Decision matrix

* Decision table

* Decision tree model of computation

* Design rationale

* DRAKON

* Markov chain

* Random forest

* Ordinal priority approach

* Odds algorithm

* Topological combinatorics

* Truth table

External links

Extensive Decision Tree tutorials and examples
