
Decision Tree Model

In computational complexity theory, the decision tree model is the model of computation in which an algorithm can be considered to be a decision tree, i.e. a sequence of *queries* or *tests* that are done adaptively, so the outcome of previous tests can influence the tests performed next. Typically, these tests have a small number of outcomes (such as a yes–no question) and can be performed quickly (say, with unit computational cost), so the worst-case time complexity of an algorithm in the decision tree model corresponds to the depth of the corresponding tree. This notion of computational complexity of a problem or an algorithm in the decision tree model is called its decision tree complexity or query complexity. Decision tree models are instrumental in establishing lower bounds for the complexity of certain classes of computational problems and algorithms. Several variants of decision tree models have been introduced, depending on the computational model and type of quer ...
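
For small Boolean functions, the deterministic query complexity (the depth of an optimal decision tree) can be computed by brute force. A partial assignment needs no further queries once the function is constant on all of its completions. Otherwise the best tree queries some free variable and pays for the worse of its two answers. The sketch below assumes inputs in $\{0,1\}^n$ given as tuples. `query_complexity` and the example functions are hypothetical names introduced here, and the search is exponential, so it is only meant for a handful of variables.

```python
from functools import lru_cache
from itertools import product
from typing import Callable, Optional, Tuple

BoolFn = Callable[[Tuple[int, ...]], int]


def query_complexity(f: BoolFn, n: int) -> int:
    """Depth of an optimal deterministic decision tree for f on {0,1}^n (brute force)."""

    @lru_cache(maxsize=None)
    def depth(partial: Tuple[Optional[int], ...]) -> int:
        free = [i for i, v in enumerate(partial) if v is None]
        # Collect the values f takes on every completion of the partial assignment.
        outcomes = set()
        for bits in product((0, 1), repeat=len(free)):
            x = list(partial)
            for i, b in zip(free, bits):
                x[i] = b
            outcomes.add(f(tuple(x)))
        if len(outcomes) == 1:
            return 0  # f is already determined, so this node can be a leaf
        # Otherwise query the free variable whose worst-case answer is cheapest.
        best = n
        for i in free:
            worst = 0
            for b in (0, 1):
                child = list(partial)
                child[i] = b
                worst = max(worst, depth(tuple(child)))
            best = min(best, 1 + worst)
        return best

    return depth((None,) * n)


# Hypothetical example functions on 3 bits.
def or3(x):
    return int(any(x))


def maj3(x):
    return int(sum(x) >= 2)


def dictator(x):
    return x[0]


print(query_complexity(or3, 3), query_complexity(maj3, 3), query_complexity(dictator, 3))  # 3 3 1
```

OR and majority on three bits need all three queries in the worst case, while a function that depends on one variable needs only that one query.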

Aanderaa–Karp–Rosenberg Conjecture

In theoretical computer science, the Aanderaa–Karp–Rosenberg conjecture (also known as the Aanderaa–Rosenberg conjecture or the evasiveness conjecture) is a group of related conjectures about the number of questions of the form "Is there an edge between vertex u and vertex v?" that have to be answered to determine whether or not an undirected graph has a particular property such as planarity or bipartiteness. They are named after Stål Aanderaa, Richard M. Karp, and Arnold L. Rosenberg. According to the conjecture, for a wide class of properties, no algorithm can guarantee that it will be able to skip any questions: any algorithm for determining whether the graph has the property, no matter how clever, might need to examine every pair of vertices before it can give its answer. A property satisfying this conjecture is called evasive. More precisely, the Aanderaa–Rosenberg conjecture states that any deterministic algorithm must test at least a constant fraction of all p ...
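
The adversary argument behind evasiveness can be sketched for a small case. The property "the graph has at least one edge" is evasive: an adversary that answers "no edge" to every query keeps both outcomes possible until the last unqueried pair is examined. The sketch below brute-forces all graphs consistent with the answers so far, so it only handles a few vertices. `adversary_query_count` and its greedy answering rule (answer so that the property stays undetermined whenever possible) are illustrative assumptions. The greedy rule is not in general an optimal adversary for arbitrary properties, and the algorithm is modelled as a fixed query order.

```python
from itertools import combinations, product
from typing import Callable, Dict, FrozenSet, List, Optional, Tuple

Pair = Tuple[int, int]
GraphProperty = Callable[[FrozenSet[Pair]], bool]


def all_pairs(n: int) -> List[Pair]:
    return list(combinations(range(n), 2))


def is_determined(prop: GraphProperty, pairs: List[Pair], known: Dict[Pair, bool]) -> bool:
    """True if prop has the same value on every graph consistent with the answers in `known`."""
    unknown = [p for p in pairs if p not in known]
    present = {p for p, is_edge in known.items() if is_edge}
    seen = set()
    for bits in product((False, True), repeat=len(unknown)):
        edges = present | {p for p, b in zip(unknown, bits) if b}
        seen.add(prop(frozenset(edges)))
        if len(seen) > 1:
            return False
    return True


def adversary_query_count(prop: GraphProperty, n: int, order: Optional[List[Pair]] = None) -> int:
    """Queries a fixed-order algorithm makes against a greedy adversary (order should list every pair)."""
    pairs = all_pairs(n)
    order = pairs if order is None else order
    known: Dict[Pair, bool] = {}
    if is_determined(prop, pairs, known):
        return 0
    for count, p in enumerate(order, start=1):
        answer = False
        for candidate in (False, True):
            if not is_determined(prop, pairs, {**known, p: candidate}):
                answer = candidate  # this answer keeps the property undetermined
                break
        known[p] = answer
        if is_determined(prop, pairs, known):
            return count
    return len(order)


def has_edge(edges: FrozenSet[Pair]) -> bool:
    return len(edges) > 0


print(adversary_query_count(has_edge, 4))  # 6: every pair of a 4-vertex graph is examined
```

Because this adversary always answers "no edge", the query order makes no difference for this property: any order ends up asking about all $\binom{n}{2}$ pairs.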

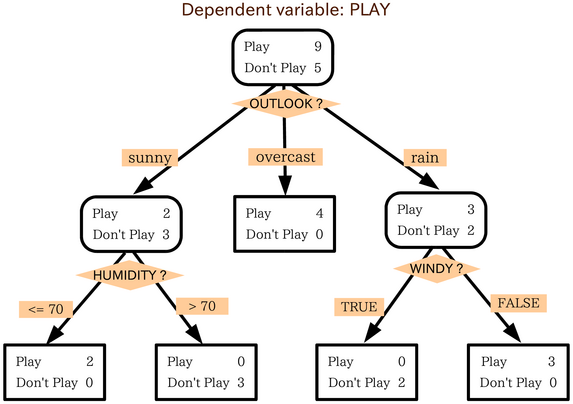

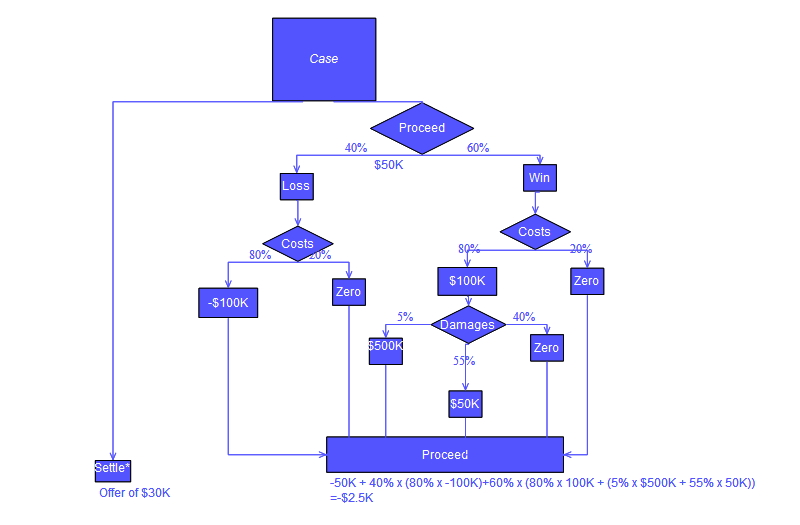

Decision Tree

A decision tree is a decision support recursive partitioning structure that uses a tree-like model of decisions and their possible consequences, including chance event outcomes, resource costs, and utility. It is one way to display an algorithm that only contains conditional control statements. Decision trees are commonly used in operations research, specifically in decision analysis, to help identify a strategy most likely to reach a goal, but are also a popular tool in machine learning. A decision tree is a flowchart-like structure in which each internal node represents a test on an attribute (e.g. whether a coin flip comes up heads or tails), each branch represents the outcome of the test, and each leaf node represents a class label (decision taken after computing all attributes). The paths from root to leaf represent classification rules. In decision analysis, a de ...
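
The flowchart reading above (internal nodes test an attribute, branches are outcomes, leaves are labels, and root-to-leaf paths are rules) maps onto a small recursive data structure. This is a minimal sketch assuming yes/no tests on a dictionary record. `Leaf`, `Node`, `classify`, `rules`, and the umbrella example are hypothetical names chosen for illustration, not taken from any library.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple, Union


@dataclass
class Leaf:
    label: str  # the decision taken at the end of a path


@dataclass
class Node:
    name: str                     # human-readable description of the test
    test: Callable[[Dict], bool]  # the test on an attribute of the record
    if_yes: "Tree"
    if_no: "Tree"


Tree = Union[Leaf, Node]


def classify(tree: Tree, record: Dict) -> str:
    """Follow one root-to-leaf path, applying the test at each internal node."""
    while isinstance(tree, Node):
        tree = tree.if_yes if tree.test(record) else tree.if_no
    return tree.label


def rules(tree: Tree, path: Tuple[str, ...] = ()) -> List[Tuple[Tuple[str, ...], str]]:
    """Read each root-to-leaf path as a classification rule."""
    if isinstance(tree, Leaf):
        return [(path, tree.label)]
    return (rules(tree.if_yes, path + (f"{tree.name}: yes",))
            + rules(tree.if_no, path + (f"{tree.name}: no",)))


# Hypothetical example tree: whether to take an umbrella.
umbrella = Node(
    "raining now", lambda r: r["raining"],
    Leaf("take umbrella"),
    Node("rain forecast", lambda r: r["forecast_rain"],
         Leaf("take umbrella"), Leaf("leave umbrella")),
)

print(classify(umbrella, {"raining": False, "forecast_rain": True}))  # take umbrella
for conditions, label in rules(umbrella):
    print(" AND ".join(conditions), "->", label)
```

Three leaves give three rules. Classifying a record walks exactly one of those paths, which is why the depth of the tree bounds the number of tests performed.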

Comparison Sort

A comparison sort is a type of sorting algorithm that only reads the list elements through a single abstract comparison operation (often a "less than or equal to" operator or a three-way comparison) that determines which of two elements should occur first in the final sorted list. The only requirement is that the operator forms a total preorder over the data, with: (1) if *a* ≤ *b* and *b* ≤ *c* then *a* ≤ *c* (transitivity); (2) for all *a* and *b*, *a* ≤ *b* or *b* ≤ *a* (connexity). It is possible that both *a* ≤ *b* and *b* ≤ *a*; in this case either may come first in the sorted list. In a stable sort, the input order determines the sorted order in this case. Comparison sorts studied in the literature are "comparison-based". Elements *a* and *b* can be swapped or otherwise re-arranged by the algorithm only when the order between these elements has been established based on the outcomes of prior comparisons. This is the case when ...
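
Because a comparison sort learns about its input only through the comparison operation, it can be viewed as a binary decision tree whose leaves must distinguish all $n!$ orderings of distinct items. Its worst case therefore needs at least $\lceil \log_2 n! \rceil$ comparisons. The sketch below counts the calls that Python's built-in `sorted` makes to a three-way comparison (wrapped with `functools.cmp_to_key`) and checks the worst case over all permutations against that bound. The helper names are hypothetical, and the exhaustive search is only practical for very small $n$.

```python
from functools import cmp_to_key
from itertools import permutations
from math import factorial
from typing import List, Sequence, Tuple


def sort_counting_comparisons(items: Sequence[int]) -> Tuple[List[int], int]:
    """Sort using only a three-way comparison, and count how often it is called."""
    calls = 0

    def compare(a: int, b: int) -> int:
        nonlocal calls
        calls += 1
        return (a > b) - (a < b)  # negative, zero, or positive

    result = sorted(items, key=cmp_to_key(compare))
    return result, calls


def comparison_lower_bound(n: int) -> int:
    """ceil(log2(n!)), computed exactly with integer arithmetic."""
    return (factorial(n) - 1).bit_length()


def worst_case_comparisons(n: int) -> int:
    return max(sort_counting_comparisons(p)[1] for p in permutations(range(n)))


for n in range(1, 7):
    print(n, comparison_lower_bound(n), worst_case_comparisons(n))
```

A single favourable input can need fewer comparisons than the bound; the guarantee is about the worst case, which is why the check takes the maximum over all permutations.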

Hao Huang (mathematician)

Hao Huang is a mathematician known for solving the sensitivity conjecture. Huang is currently an associate professor in the mathematics department at National University of Singapore. Huang received a B.S. degree in mathematics from Peking University in 2007. He obtained his Ph.D. in mathematics from the University of California, Los Angeles (UCLA) in 2012 with a dissertation titled *Various Problems in Extremal Combinatorics*, advised by Benny Sudakov. He did postdoctoral research at the Institute for Advanced Study in Princeton, New Jersey, and at DIMACS at Rutgers University from 2012 to 2014, followed by a year at the Institute for Mathematics and its Applications at the University of Minnesota. Huang was then an assistant professor in the Department of Mathematics at Emory University from 2015 to 2021. In July 2019, Huang announced a breakthrough: a proof of the sensitivity conjecture. At that point the conjecture had been open for nearly 30 years, having been ...

Analysis Of Boolean Functions

In mathematics and theoretical computer science, analysis of Boolean functions is the study of real-valued functions on $\{0,1\}^n$ or $\{-1,1\}^n$ (such functions are sometimes known as pseudo-Boolean functions) from a spectral perspective. The functions studied are often, but not always, Boolean-valued, making them Boolean functions. The area has found many applications in combinatorics, social choice theory, random graphs, and theoretical computer science, especially in hardness of approximation, property testing, and probably approximately correct (PAC) learning. We will mostly consider functions defined on the domain $\{-1,1\}^n$. Sometimes it is more convenient to work with the domain $\{0,1\}^n$ instead. If $f$ is defined on $\{-1,1\}^n$, then the corresponding function defined on $\{0,1\}^n$ is $f_{01}(x_1,\ldots,x_n) = f((-1)^{x_1},\ldots,(-1)^{x_n})$. Similarly, for us a Boolean function is a $\{-1,1\}$-valued function, though often it is more convenient to consider $\{0,1\}$-valued functions instead. Every real- ...
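
The summary breaks off at the Fourier expansion. The standard statement is that every $f:\{-1,1\}^n \to \mathbb{R}$ can be written uniquely as $f(x) = \sum_{S \subseteq [n]} \hat f(S)\,\chi_S(x)$ with $\chi_S(x) = \prod_{i \in S} x_i$ and $\hat f(S) = \mathbb{E}_x[f(x)\chi_S(x)]$ for uniform $x$. The sketch below computes these coefficients exactly by enumerating the whole cube, and also implements the $f_{01}$ conversion defined above. The function names are hypothetical, and the enumeration is exponential in $n$. For majority on three bits it recovers $\mathrm{Maj}_3(x) = \tfrac12 x_1 + \tfrac12 x_2 + \tfrac12 x_3 - \tfrac12 x_1x_2x_3$.

```python
from fractions import Fraction
from itertools import combinations, product
from math import prod
from typing import Callable, Dict, Tuple

Point = Tuple[int, ...]


def fourier_coefficients(f: Callable[[Point], int], n: int) -> Dict[Tuple[int, ...], Fraction]:
    """hat f(S) = E_x[f(x) * chi_S(x)] over uniform x in {-1,1}^n, chi_S(x) = prod of x_i for i in S."""
    points = list(product((1, -1), repeat=n))
    coeffs = {}
    for k in range(n + 1):
        for S in combinations(range(n), k):
            total = sum(f(x) * prod(x[i] for i in S) for x in points)
            coeffs[S] = Fraction(total, 2 ** n)
    return coeffs


def to_01(f: Callable[[Point], int]) -> Callable[[Point], int]:
    """f_01(x_1, ..., x_n) = f((-1)^{x_1}, ..., (-1)^{x_n}) for x in {0,1}^n."""
    return lambda x: f(tuple((-1) ** xi for xi in x))


def maj3(x: Point) -> int:
    """Majority of three {-1,1}-valued bits."""
    return 1 if sum(x) > 0 else -1


coeffs = fourier_coefficients(maj3, 3)
print({S: str(c) for S, c in coeffs.items() if c != 0})
# {(0,): '1/2', (1,): '1/2', (2,): '1/2', (0, 1, 2): '-1/2'}
print(sum(c * c for c in coeffs.values()))  # 1 (Parseval, since maj3 is {-1,1}-valued)
```

Exact `Fraction` arithmetic avoids rounding, so identities such as Parseval's (the squared coefficients of a $\{-1,1\}$-valued function sum to 1) can be checked with equality rather than a tolerance.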

Deutsch–Jozsa Algorithm

The Deutsch–Jozsa algorithm is a deterministic quantum algorithm proposed by David Deutsch and Richard Jozsa in 1992 with improvements by Richard Cleve, Artur Ekert, Chiara Macchiavello, and Michele Mosca in 1998. Although of little practical use, it is one of the first examples of a quantum algorithm that is exponentially faster than any possible deterministic classical algorithm. The Deutsch–Jozsa problem is specifically designed to be easy for a quantum algorithm and hard for any deterministic classical algorithm. It is a black box problem that can be solved efficiently by a quantum computer with no error, whereas a deterministic classical computer would need an exponential number of queries to the black box to solve the problem. More formally, it yields an oracle relative to which EQP, the class of problems that can be solved exactly in polynomial time on a quantum computer, and P are different. Since the problem is easy to solve on a probabilistic classical computer, ...
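
The measurement statistics of the algorithm can be checked with a small classical simulation, assuming the usual phase-oracle form of the circuit (Hadamard gates on the query register, one oracle call, Hadamard gates again). The amplitude of outcome $y$ is then $\frac{1}{2^n}\sum_x (-1)^{f(x) + x\cdot y}$, so the all-zeros outcome has amplitude $\pm 1$ when $f$ is constant and $0$ when $f$ is balanced. The simulation evaluates $f$ on all $2^n$ inputs, so it illustrates what the circuit outputs, not the speedup itself. For comparison, a deterministic classical algorithm may need $2^{n-1}+1$ queries in the worst case. The function names are hypothetical.

```python
from itertools import product
from typing import Callable, Dict, Tuple

Bits = Tuple[int, ...]


def dj_amplitudes(f: Callable[[Bits], int], n: int) -> Dict[Bits, float]:
    """Final amplitudes of the n query qubits: (1/2^n) * sum over x of (-1)^(f(x) + x.y)."""
    xs = list(product((0, 1), repeat=n))
    amplitudes = {}
    for y in xs:
        total = sum((-1) ** (f(x) + sum(a * b for a, b in zip(x, y))) for x in xs)
        amplitudes[y] = total / 2 ** n
    return amplitudes


def deutsch_jozsa(f: Callable[[Bits], int], n: int) -> str:
    """Measure the query register; all zeros means constant (promise: f is constant or balanced)."""
    p_all_zeros = dj_amplitudes(f, n)[(0,) * n] ** 2
    return "constant" if p_all_zeros > 0.5 else "balanced"


print(deutsch_jozsa(lambda x: 1, 3))           # constant
print(deutsch_jozsa(lambda x: x[0], 3))        # balanced
print(deutsch_jozsa(lambda x: sum(x) % 2, 3))  # balanced
```

Under the promise, the probability of the all-zeros outcome is exactly 1 or exactly 0, which is why the quantum algorithm answers with no error after a single oracle call.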

Total Function

In mathematics, a partial function $f$ from a set $X$ to a set $Y$ is a function from a subset $S$ of $X$ (possibly the whole $X$ itself) to $Y$. The subset $S$, that is, the *domain* of $f$ viewed as a function, is called the domain of definition or natural domain of $f$. If $S$ equals $X$, that is, if $f$ is defined on every element in $X$, then $f$ is said to be a total function. In other words, a partial function is a binary relation over two sets that associates to every element of the first set *at most* one element of the second set; it is thus a univalent relation. This generalizes the concept of a (total) function by not requiring *every* element of the first set to be associated to an element of the second set. A partial function is often used when its exact domain of definition is not known, or is difficult to specify. However, even when the exact domain of definition is known, partial functions are often used for simplicity or brevity. This is the case in calculus, where, for example, the quotient ...
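
On finite sets, the relational reading of the definition can be checked directly. A partial function is a univalent relation whose domain of definition is a subset of $X$, and it is total when that subset is all of $X$. This sketch models relations as Python sets of pairs. All names are hypothetical, and the reciprocal is used only as an illustrative relation that is undefined at 0.

```python
from fractions import Fraction
from typing import Any, Hashable, Iterable, Set, Tuple

Relation = Set[Tuple[Hashable, Any]]


def is_univalent(relation: Relation) -> bool:
    """Each element of the first set is related to at most one element of the second."""
    image = {}
    for x, y in relation:
        if x in image and image[x] != y:
            return False
        image[x] = y
    return True


def domain_of_definition(relation: Relation) -> Set[Hashable]:
    return {x for x, _ in relation}


def is_partial_function(relation: Relation, X: Iterable) -> bool:
    return is_univalent(relation) and domain_of_definition(relation) <= set(X)


def is_total_function(relation: Relation, X: Iterable) -> bool:
    X = set(X)
    return is_partial_function(relation, X) and domain_of_definition(relation) == X


X = {-2, -1, 0, 1, 2}
reciprocal = {(x, Fraction(1, x)) for x in X if x != 0}  # undefined at 0
square = {(x, x * x) for x in X}
plus_minus_one = {(1, 1), (1, -1)}                        # relates 1 to two values

print(is_partial_function(reciprocal, X), is_total_function(reciprocal, X))  # True False
print(is_total_function(square, X))                                          # True
print(is_univalent(plus_minus_one))                                          # False
```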

Noam Nisan

Noam Nisan (born June 20, 1961) is an Israeli computer scientist and professor of computer science at the Hebrew University of Jerusalem. He is known for his research in computational complexity theory and algorithmic game theory. Nisan did his undergraduate studies at the Hebrew University, graduating in 1984. He went to the University of California, Berkeley, for graduate school, and received a Ph.D. in 1988 under the supervision of Richard Karp. After postdoctoral studies at the Massachusetts Institute of Technology, he joined the Hebrew University faculty in 1990 (curriculum vitae, retrieved 2012-03-01). Nisan is the author of ...

Nondeterministic Algorithm

In computer science and computer programming, a nondeterministic algorithm is an algorithm that, even for the same input, can exhibit different behaviors on different runs, as opposed to a deterministic algorithm. Different models of computation give rise to different reasons that an algorithm may be non-deterministic, and different ways to evaluate its performance or correctness:

- A concurrent algorithm can perform differently on different runs due to a race condition. This can happen even with a single-threaded algorithm when it interacts with resources external to it. In general, such an algorithm is considered to perform correctly only when *all* possible runs produce the desired results.
- A probabilistic algorithm's behavior ...
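
The "all possible runs" criterion for concurrent algorithms can be illustrated deterministically by enumerating schedules instead of running real threads. In this sketch, two hypothetical threads each read a shared counter into a local variable and then write back that value plus one. The intended result is 2, but some interleavings lose an update. The step encoding and helper names are assumptions made for illustration.

```python
from itertools import combinations
from typing import Iterator, List, Set, Tuple

Step = Tuple[str, str]  # (thread name, operation)


def interleavings(a: List[Step], b: List[Step]) -> Iterator[List[Step]]:
    """All merges of a and b that keep each thread's own steps in order."""
    n = len(a) + len(b)
    for slots in combinations(range(n), len(a)):
        chosen = set(slots)
        ia = ib = 0
        schedule = []
        for i in range(n):
            if i in chosen:
                schedule.append(a[ia])
                ia += 1
            else:
                schedule.append(b[ib])
                ib += 1
        yield schedule


def run(schedule: List[Step]) -> int:
    """Execute 'read' (copy the shared counter into a local) and 'write' (store local + 1)."""
    shared = 0
    local = {}
    for thread, op in schedule:
        if op == "read":
            local[thread] = shared
        else:
            shared = local[thread] + 1
    return shared


def possible_results() -> Set[int]:
    t1 = [("T1", "read"), ("T1", "write")]
    t2 = [("T2", "read"), ("T2", "write")]
    return {run(s) for s in interleavings(t1, t2)}


print(possible_results())  # {1, 2}: some schedules lose an update
```

Only the two fully sequential schedules out of six produce 2. By the criterion above, the unsynchronized algorithm is therefore incorrect, even though a particular run may happen to give the right answer.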

Certificate (complexity)

In computational complexity theory, a certificate (also called a witness) is a string that certifies the answer to a computation, or certifies the membership of some string in a language. A certificate is often thought of as a solution path within a verification process, which is used to check whether a problem gives the answer "Yes" or "No". In the decision tree model of computation, certificate complexity is the minimum number of the $n$ input variables of a decision tree that need to be assigned a value in order to definitely establish the value of the Boolean function $f$ on a given input; the certificate complexity of $f$ is the largest such number over all inputs. The notion of certificate is used to define semi-decidability: a formal language $L$ is semi-decidable if there is a two-place predicate relation $R \subseteq \Sigma^* \times \Sigma^*$ such that $R$ is computable, and such that for all $x \in \Sigma^*$: $x \in L \iff$ there exists $y$ such that $R(x, y)$. Certificates also give definitions for some complexity classes which can alternatively be chara ...
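
Certificate complexity in the decision tree model can be computed by brute force for small $n$. For an input $x$, search for the smallest set of coordinates whose values in $x$ already force $f$ to equal $f(x)$, then take the maximum over all inputs. This sketch assumes inputs in $\{0,1\}^n$ as tuples. The helper names and example functions are hypothetical, and the search is exponential.

```python
from itertools import combinations, product
from typing import Callable, Tuple

Bits = Tuple[int, ...]


def certificate_size(f: Callable[[Bits], int], x: Bits) -> int:
    """Smallest number of coordinates of x that, once fixed, force f to equal f(x)."""
    n = len(x)
    points = list(product((0, 1), repeat=n))
    for k in range(n + 1):
        for S in combinations(range(n), k):
            consistent = (y for y in points if all(y[i] == x[i] for i in S))
            if all(f(y) == f(x) for y in consistent):
                return k
    return n  # not reached: fixing all n coordinates always works


def certificate_complexity(f: Callable[[Bits], int], n: int) -> int:
    """The largest certificate size over all inputs x in {0,1}^n."""
    return max(certificate_size(f, x) for x in product((0, 1), repeat=n))


def or3(x: Bits) -> int:
    return int(any(x))


def maj3(x: Bits) -> int:
    return int(sum(x) >= 2)


print(certificate_size(or3, (0, 1, 0)))  # 1: the single 1 already forces OR = 1
print(certificate_complexity(or3, 3))    # 3: on 000 every bit must be revealed
print(certificate_complexity(maj3, 3))   # 2: two agreeing bits fix the majority
```

A certificate only has to convince a verifier who already sees the chosen bits, so it can be much smaller than a decision tree's depth on the same input: OR has 1-inputs with certificates of size 1, yet its query complexity on three bits is 3.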