Data Warehousing

In computing, a data warehouse (DW or DWH), also known as an enterprise data warehouse (EDW), is a system used for reporting and data analysis and is considered a core component of business intelligence. DWs are central repositories of integrated data from one or more disparate sources. They store current and historical data in a single place, where it is used to create analytical reports for workers throughout the enterprise.

The data stored in the warehouse is uploaded from the operational systems (such as marketing or sales). The data may pass through an operational data store and may require data cleansing for additional operations to ensure data quality before it is used in the DW for reporting.

Extract, transform, load (ETL) and extract, load, transform (ELT) are the two main approaches used to build a data warehouse system.

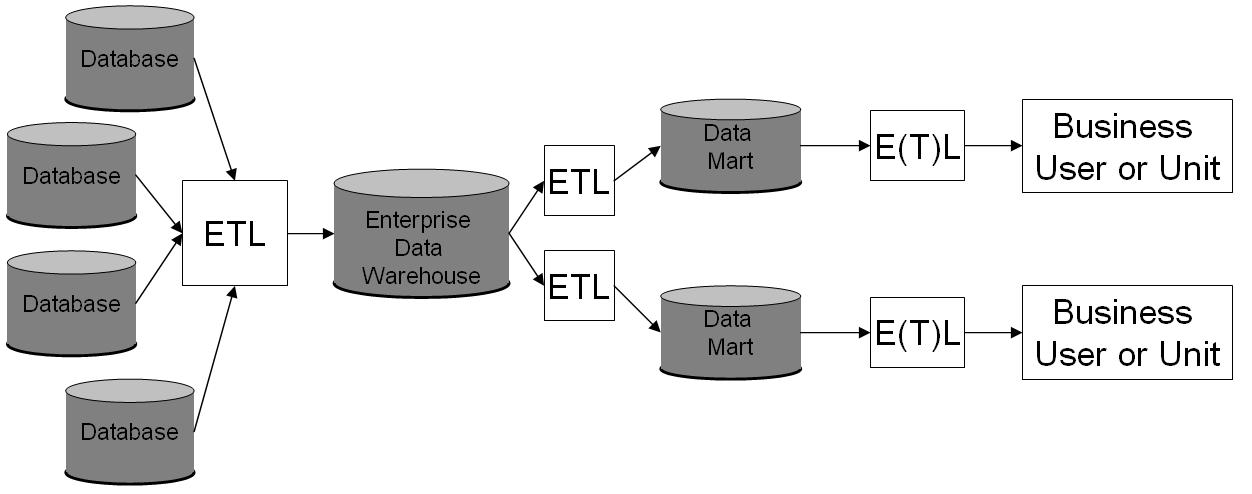

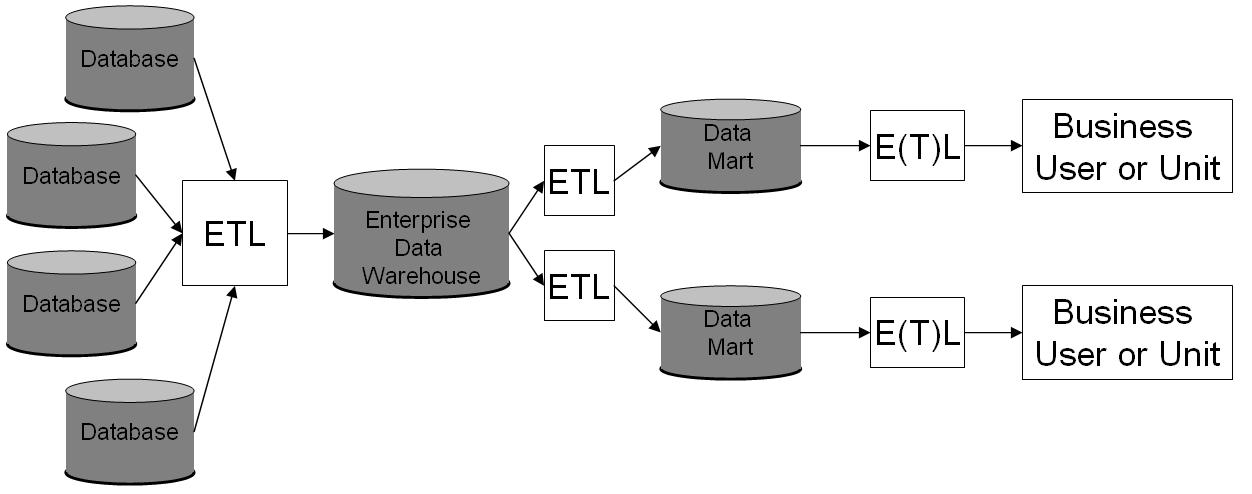

ETL-based data warehousing

The typical extract, transform, load (ETL)-based data warehouse uses staging, data integration, and access layers to house its key functions. The staging layer or staging database stores raw data extracted from each of the disparate source data systems. The integration layer integrates the disparate data sets by transforming the data from the staging layer, often storing this transformed data in an operational data store (ODS) database. The integrated data are then moved to yet another database, often called the data warehouse database, where the data is arranged into hierarchical groups, often called dimensions, and into facts and aggregate facts. The combination of facts and dimensions is sometimes called a star schema. The access layer helps users retrieve data.
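The layering described above can be sketched in a few lines. The following is a minimal illustration only, using Python's built-in `sqlite3` module as a stand-in for a warehouse database; the sample rows and every table and column name (`stg_sales`, `ods_sales`, `dim_customer`, `fact_sales`) are hypothetical and not taken from any particular product.

```python
import sqlite3

# Hypothetical source rows, as they might arrive from operational systems.
SOURCE_ROWS = [
    ("2024-01-05", " alice ", "widget", "3", "29.97"),
    ("2024-01-05", "Bob", "gadget", "1", "15.00"),
    ("2024-01-06", "ALICE", "gadget", "2", "30.00"),
]

con = sqlite3.connect(":memory:")

# Staging layer: raw data stored as extracted (all text, uncleaned).
con.execute("CREATE TABLE stg_sales (order_date, customer, product, qty, amount)")
con.executemany("INSERT INTO stg_sales VALUES (?, ?, ?, ?, ?)", SOURCE_ROWS)

# Integration layer: cleanse and conform the staged rows (an ODS-like table).
con.execute("""
    CREATE TABLE ods_sales AS
    SELECT order_date,
           lower(trim(customer)) AS customer,
           product,
           CAST(qty AS INTEGER)  AS qty,
           CAST(amount AS REAL)  AS amount
    FROM stg_sales
""")

# Warehouse layer: a dimension table plus a fact table (a very small star schema).
con.execute("CREATE TABLE dim_customer (customer_id INTEGER PRIMARY KEY, name TEXT UNIQUE)")
con.execute("INSERT INTO dim_customer (name) SELECT DISTINCT customer FROM ods_sales")
con.execute("""
    CREATE TABLE fact_sales AS
    SELECT o.order_date, c.customer_id, o.product, o.qty, o.amount
    FROM ods_sales AS o JOIN dim_customer AS c ON c.name = o.customer
""")

# Access layer: a report query a user might run against the star schema.
report = con.execute("""
    SELECT c.name, SUM(f.qty), ROUND(SUM(f.amount), 2)
    FROM fact_sales AS f JOIN dim_customer AS c ON c.customer_id = f.customer_id
    GROUP BY c.name ORDER BY c.name
""").fetchall()
print(report)
```

In a real deployment each layer would usually be a separate database or schema; a single in-memory database is used here only to keep the sketch self-contained.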

The main source of the data is cleansed, transformed, catalogued, and made available for use by managers and other business professionals for data mining, online analytical processing, market research and decision support. However, the means to retrieve and analyze data, to extract, transform, and load data, and to manage the data dictionary are also considered essential components of a data warehousing system. Many references to data warehousing use this broader context. Thus, an expanded definition for data warehousing includes business intelligence tools, tools to extract, transform, and load data into the repository, and tools to manage and retrieve metadata.

ELT-based data warehousing

ELT-based data warehousing gets rid of a separate ETL tool for data transformation. Instead, it maintains a staging area inside the data warehouse itself. In this approach, data is extracted from heterogeneous source systems and then loaded directly into the data warehouse, before any transformation occurs. All necessary transformations are then handled inside the data warehouse itself. Finally, the transformed data is loaded into target tables in the same data warehouse.
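As a rough sketch of the ELT flow just described, the example below loads raw rows unchanged into a staging table inside the warehouse and only afterwards transforms them with the warehouse's own SQL engine. It uses Python's built-in `sqlite3` module as a stand-in warehouse; the sample data and the table names `staging_orders` and `orders` are assumptions made for illustration.

```python
import sqlite3

# Hypothetical raw extract; in ELT it is loaded untouched into the warehouse.
RAW_ORDERS = [
    ("1001", "2024-01-05", "EUR", "20.00"),
    ("1002", "2024-01-05", "usd", "15.50"),
    ("1003", "2024-01-06", "Usd", "4.50"),
]

warehouse = sqlite3.connect(":memory:")

# Extract + load: the staging area lives inside the warehouse itself.
warehouse.execute("CREATE TABLE staging_orders (order_id, order_date, currency, amount)")
warehouse.executemany("INSERT INTO staging_orders VALUES (?, ?, ?, ?)", RAW_ORDERS)

# Transform: performed afterwards, inside the warehouse, into a target table.
warehouse.execute("""
    CREATE TABLE orders (
        order_id   INTEGER PRIMARY KEY,
        order_date TEXT,
        currency   TEXT,
        amount     REAL
    )
""")
warehouse.execute("""
    INSERT INTO orders
    SELECT CAST(order_id AS INTEGER), order_date, upper(currency), CAST(amount AS REAL)
    FROM staging_orders
""")

totals = dict(warehouse.execute(
    "SELECT currency, SUM(amount) FROM orders GROUP BY currency"
).fetchall())
print(totals)
```

The raw rows remain available in `staging_orders`, so a transformation can be revised and rerun without extracting from the source systems again.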

Benefits

A data warehouse maintains a copy of information from the source transaction systems. This architectural complexity provides the opportunity to:

* Integrate data from multiple sources into a single database and data model. Consolidating more data in a single database allows a single query engine to present data in an ODS.

* Mitigate the problem of database isolation level lock contention in transaction processing systems caused by attempts to run large, long-running analysis queries in transaction processing databases.

* Maintain data history, even if the source transaction systems do not.

* Integrate data from multiple source systems, enabling a central view across the enterprise. This benefit is always valuable, but particularly so when the organization has grown by merger.

* Improve data quality, by providing consistent codes and descriptions, flagging or even fixing bad data.

* Present the organization's information consistently.

* Provide a single common data model for all data of interest regardless of the data's source.

* Restructure the data so that it makes sense to the business users.

* Restructure the data so that it delivers excellent query performance, even for complex analytic queries, without impacting the operational systems.

* Add value to operational business applications, notably customer relationship management (CRM) systems.

* Make decision-support queries easier to write.

* Organize and disambiguate repetitive data.

Generic

The environment for data warehouses and marts includes the following:

* Source systems that provide data to the warehouse or mart;

* Data integration technology and processes that are needed to prepare the data for use;

* Different architectures for storing data in an organization's data warehouse or data marts;

* Different tools and applications for a variety of users;

* Metadata, data quality, and governance processes that must be in place to ensure that the warehouse or mart meets its purposes.

With regard to the source systems listed above, R. Kelly Rainer states, "A common source for the data in data warehouses is the company's operational databases, which can be relational databases". Regarding data integration, Rainer states, "It is necessary to extract data from source systems, transform them, and load them into a data mart or warehouse". Rainer discusses storing data in an organization's data warehouse or data marts. Metadata is data about data. "IT personnel need information about data sources; database, table, and column names; refresh schedules; and data usage measures".

Today, the most successful companies are those that can respond quickly and flexibly to market changes and opportunities. A key to this response is the effective and efficient use of data and information by analysts and managers. A "data warehouse" is a repository of historical data that is organized by subject to support decision-makers in the organization. Once data is stored in a data mart or warehouse, it can be accessed.

Related systems (data mart, OLAP, OLTP, predictive analytics)

A data mart is a simple form of a data warehouse that is focused on a single subject (or functional area). Data marts therefore draw data from a limited number of sources, such as sales, finance or marketing. They are often built and controlled by a single department within an organization. The sources could be internal operational systems, a central data warehouse, or external data. Denormalization is the norm for data modeling techniques in this system. Given that data marts generally cover only a subset of the data contained in a data warehouse, they are often easier and faster to implement.

Types of data marts include dependent, independent, and hybrid data marts.
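One way to picture a data mart that draws its data from a central data warehouse is as a subject-specific subset of the warehouse tables. The sketch below models that with a SQL view, using Python's built-in `sqlite3` module; the table `warehouse_facts`, the view `sales_mart`, and the sample rows are all hypothetical, and a real mart is often a separate physical database rather than a view.

```python
import sqlite3

con = sqlite3.connect(":memory:")

# A tiny stand-in for the central warehouse (names and data are hypothetical).
con.execute("CREATE TABLE warehouse_facts (department TEXT, metric TEXT, value REAL)")
con.executemany(
    "INSERT INTO warehouse_facts VALUES (?, ?, ?)",
    [
        ("sales", "revenue", 120.0),
        ("sales", "units", 8.0),
        ("finance", "costs", 75.0),
        ("marketing", "leads", 40.0),
    ],
)

# Data mart: a single-subject subset drawn from the warehouse.
con.execute("""
    CREATE VIEW sales_mart AS
    SELECT metric, value FROM warehouse_facts WHERE department = 'sales'
""")

sales_rows = con.execute(
    "SELECT metric, value FROM sales_mart ORDER BY metric"
).fetchall()
print(sales_rows)
```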

Online analytical processing (OLAP) is characterized by a relatively low volume of transactions. Queries are often very complex and involve aggregations. For OLAP systems, response time is an effective measure. OLAP applications are widely used in data mining. OLAP databases store aggregated, historical data in multi-dimensional schemas (usually star schemas). OLAP systems typically have a data latency of a few hours, as opposed to data marts, where latency is expected to be closer to one day. The OLAP approach is used to analyze multidimensional data from multiple sources and perspectives. The three basic operations in OLAP are roll-up (consolidation), drill-down, and slicing and dicing.
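The basic OLAP operations named above can be illustrated with plain SQL aggregations. This is a simplified sketch using Python's built-in `sqlite3` module rather than a dedicated OLAP engine; the `sales` table, its columns, and its rows are invented for the example.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE sales (year INTEGER, quarter TEXT, region TEXT, amount REAL)")
con.executemany(
    "INSERT INTO sales VALUES (?, ?, ?, ?)",
    [
        (2023, "Q1", "north", 10.0),
        (2023, "Q2", "north", 20.0),
        (2023, "Q1", "south", 5.0),
        (2024, "Q1", "north", 30.0),
        (2024, "Q1", "south", 15.0),
    ],
)

def query(sql, params=()):
    """Run a query and return all rows as a list of tuples."""
    return con.execute(sql, params).fetchall()

# Roll-up (consolidation): aggregate quarters up to the year level.
roll_up = query("SELECT year, SUM(amount) FROM sales GROUP BY year ORDER BY year")

# Drill-down: break one year back down into its quarters.
drill_down = query(
    "SELECT quarter, SUM(amount) FROM sales WHERE year = ? "
    "GROUP BY quarter ORDER BY quarter",
    (2023,),
)

# Slice: fix a single dimension (region) to one value.
slice_north = query(
    "SELECT year, SUM(amount) FROM sales WHERE region = ? GROUP BY year ORDER BY year",
    ("north",),
)

# Dice: restrict two or more dimensions at once.
dice = query(
    "SELECT SUM(amount) FROM sales WHERE region = ? AND quarter = ?",
    ("north", "Q1"),
)
```

Each operation changes only the grouping or filtering of the same underlying facts, which is why OLAP stores tend to precompute such aggregates.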

Online transaction processing (OLTP) is characterized by a large number of short on-line transactions (INSERT, UPDATE, DELETE). OLTP systems emphasize very fast query processing and maintaining data integrity in multi-access environments. For OLTP systems, effectiveness is measured by the number of transactions per second. OLTP databases contain detailed and current data. The schema used to store transactional databases is the entity model (usually 3NF). Normalization is the norm for data modeling techniques in this system.

Predictive analytics is about finding and quantifying hidden patterns in the data using complex mathematical models that can be used to predict future outcomes. Predictive analysis is different from OLAP in that OLAP focuses on historical data analysis and is reactive in nature, while predictive analysis focuses on the future. These systems are also used for customer relationship management (CRM).

History

The concept of data warehousing dates back to the late 1980s when IBM researchers Barry Devlin and Paul Murphy developed the "business data warehouse". In essence, the data warehousing concept was intended to provide an architectural model for the flow of data from operational systems to decision support environments. The concept attempted to address the various problems associated with this flow, mainly the high costs associated with it. In the absence of a data warehousing architecture, an enormous amount of redundancy was required to support multiple decision support environments. In larger corporations, it was typical for multiple decision support environments to operate independently. Though each environment served different users, they often required much of the same stored data. The process of gathering, cleaning and integrating data from various sources, usually from long-term existing operational systems (usually referred to as legacy systems), was typically in part replicated for each environment. Moreover, the operational systems were frequently reexamined as new decision support requirements emerged. Often new requirements necessitated gathering, cleaning and integrating new data from "data marts" that were tailored for ready access by users.

Additionally, with the publication of The IRM Imperative (Wiley & Sons, 1991) by James M. Kerr, the idea of managing and putting a dollar value on an organization's data resources and then reporting that value as an asset on a balance sheet became popular. In the book, Kerr described a way to populate subject-area databases from data derived from transaction-driven systems to create a storage area where summary data could be further leveraged to inform executive decision-making. This concept served to promote further thinking of how a data warehouse could be developed and managed in a practical way within any enterprise.

Key developments in early years of data warehousing:

* 1960s – General Mills and Dartmouth College, in a joint research project, develop the terms ''dimensions'' and ''facts'' (Kimball 2013, p. 15).

* 1970s – ACNielsen and IRI provide dimensional data marts for retail sales.

* 1970s – Bill Inmon begins to define and discuss the term Data Warehouse.

* 1975 – Sperry Univac introduces MAPPER (MAintain, Prepare, and Produce Executive Reports), a database management and reporting system that includes the world's first 4GL. It is the first platform designed for building Information Centers (a forerunner of contemporary data warehouse technology).

* 1983 – Teradata introduces the DBC/1012 database computer specifically designed for decision support.

* 1984 – Metaphor Computer Systems, founded by David Liddle and Don Massaro, releases a hardware/software package and GUI for business users to create a database management and analytic system.

* 1988 – Barry Devlin and Paul Murphy publish the article "An architecture for a business and information system" where they introduce the term "business data warehouse".

* 1990 – Red Brick Systems, founded by Ralph Kimball, introduces Red Brick Warehouse, a database management system specifically for data warehousing.

* 1991 – James M. Kerr authors The IRM Imperative, which suggests data resources could be reported as an asset on a balance sheet, furthering commercial interest in the establishment of data warehouses.

* 1991 – Prism Solutions, founded by Bill Inmon, introduces Prism Warehouse Manager, software for developing a data warehouse.

* 1992 – Bill Inmon publishes the book ''Building the Data Warehouse''.

* 1995 – The Data Warehousing Institute, a for-profit organization that promotes data warehousing, is founded.

* 1996 – Ralph Kimball publishes the book ''The Data Warehouse Toolkit''.

* 2000 – Dan Linstedt releases data vault modeling into the public domain. Conceived in 1990 as an alternative to the Inmon and Kimball approaches, it provides long-term historical storage of data coming in from multiple operational systems, with emphasis on tracing, auditing and resilience to change of the source data model.

* 2008 – Bill Inmon, along with Derek Strauss and Genia Neushloss, publishes "DW 2.0: The Architecture for the Next Generation of Data Warehousing", explaining his top-down approach to data warehousing and coining the term data-warehousing 2.0.

* 2012 – Bill Inmon develops and makes public technology known as "textual disambiguation". Textual disambiguation applies context to raw text and reformats the raw text and context into a standard database format. Once raw text is passed through textual disambiguation, it can easily and efficiently be accessed and analyzed by standard business intelligence technology. Textual disambiguation is accomplished through the execution of textual ETL. Textual disambiguation is useful wherever raw text is found, such as in documents, Hadoop, email, and so forth.

Information storage

Facts

A fact is a value, or measurement, which represents a fact about the managed entity or system. Facts, as reported by the reporting entity, are said to be at raw level; e.g., in a mobile telephone system, if a BTS (base transceiver station) receives 1,000 requests for traffic channel allocation, allocates for 820, and rejects the remaining, it would report three facts or measurements to a management system:

* total requests received: 1,000

* requests allocated: 820

* requests rejected: 180

Facts at the raw level are further aggregated to higher levels in various dimensions to extract more service or business-relevant information from them. These are called aggregates or summaries or aggregated facts.

For instance, if there are three BTS in a city, then the facts above can be aggregated from the BTS to the city level in the network dimension. For example:

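* the total number of successful allocations in the city is the sum of the successful allocations reported by the three BTSs

* the average number of successful allocations per BTS in the city is that sum divided by three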
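The aggregation from BTS level to city level can be sketched as follows. Only the first station's figures (1,000 requests, 820 allocated) come from the text; the other two stations, the city name, and all field names are hypothetical values chosen for illustration.

```python
from collections import defaultdict

# Raw-level facts per BTS. Only bts_1 uses figures from the text;
# the other rows and all names are hypothetical.
raw_facts = [
    {"city": "city_a", "bts": "bts_1", "requests": 1000, "allocated": 820},
    {"city": "city_a", "bts": "bts_2", "requests": 500, "allocated": 450},
    {"city": "city_a", "bts": "bts_3", "requests": 700, "allocated": 680},
]

def aggregate_by_city(facts):
    """Aggregate raw BTS facts up to the city level of the network dimension."""
    totals = defaultdict(lambda: {"requests": 0, "allocated": 0, "stations": 0})
    for fact in facts:
        city = totals[fact["city"]]
        city["requests"] += fact["requests"]
        city["allocated"] += fact["allocated"]
        city["stations"] += 1

    summary = {}
    for name, city in totals.items():
        summary[name] = {
            "requests": city["requests"],
            "allocated": city["allocated"],
            "rejected": city["requests"] - city["allocated"],
            "avg_allocated_per_bts": city["allocated"] / city["stations"],
        }
    return summary

city_facts = aggregate_by_city(raw_facts)
print(city_facts["city_a"])
```

The same function would roll facts up to any other level (region, country) if each raw fact carried that attribute instead of `city`.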

Dimensional versus normalized approach for storage of data

There are three or more leading approaches to storing data in a data warehouse; the most important are the dimensional approach and the normalized approach. The dimensional approach refers to Ralph Kimball's approach, in which it is stated that the data warehouse should be modeled using a dimensional model/star schema. The normalized approach, also called the 3NF model (third normal form), refers to Bill Inmon's approach, in which it is stated that the data warehouse should be modeled using an E-R model/normalized model.

Dimensional approach

In a dimensional approach, transaction data is partitioned into "facts", which are generally numeric transaction data, and "dimensions", which are the reference information that gives context to the facts. For example, a sales transaction can be broken up into facts such as the number of products ordered and the total price paid for the products, and into dimensions such as order date, customer name, product number, order ship-to and bill-to locations, and salesperson responsible for receiving the order.

A key advantage of a dimensional approach is that the data warehouse is easier for the user to understand and to use. Also, the retrieval of data from the data warehouse tends to operate very quickly. Dimensional structures are easy for business users to understand because the structure is divided into measurements/facts and context/dimensions. Facts are related to the organization's business processes and operational system, whereas the dimensions surrounding them contain context about the measurement (Kimball, Ralph 2008). Another advantage of the dimensional model is that it does not always require a relational database. Thus, this type of modeling technique is very useful for end-user queries in a data warehouse.

The model of facts and dimensions can also be understood as a data cube, in which the dimensions are the categorical coordinates in a multi-dimensional cube and the fact is a value corresponding to those coordinates.
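The data cube view can be made concrete with a small sketch in which each cell is addressed by its dimension coordinates and holds a fact. This is only an illustration, assuming three dimensions taken from the sales example above and invented sample values; the helper `total` is hypothetical.

```python
# Each key is a coordinate (order_date, customer, product); each value is the
# fact stored in that cell (here, number of products ordered). Data are hypothetical.
cube = {
    ("2024-01-05", "alice", "widget"): 3,
    ("2024-01-05", "bob", "gadget"): 1,
    ("2024-01-06", "alice", "gadget"): 2,
}

DIMENSIONS = ("order_date", "customer", "product")

def total(cube, **fixed):
    """Sum the facts in every cell whose coordinates match the fixed dimensions."""
    positions = {DIMENSIONS.index(name): value for name, value in fixed.items()}
    return sum(
        fact
        for coords, fact in cube.items()
        if all(coords[i] == value for i, value in positions.items())
    )

print(total(cube, customer="alice"))
print(total(cube, product="gadget"))
```

Fixing fewer dimensions sums over more of the cube, so `total(cube)` with no arguments returns the grand total.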

The main disadvantages of the dimensional approach are the following:

# To maintain the integrity of facts and dimensions, loading the data warehouse with data from different operational systems is complicated.

# It is difficult to modify the data warehouse structure if the organization adopting the dimensional approach changes the way in which it does business.

Normalized approach

In the normalized approach, the data in the data warehouse are stored following, to a degree, database normalization rules. Tables are grouped together by ''subject areas'' that reflect general data categories (e.g., data on customers, products, finance, etc.). The normalized structure divides data into entities, which creates several tables in a relational database. When applied in large enterprises the result is dozens of tables that are linked together by a web of joins. Furthermore, each of the created entities is converted into separate physical tables when the database is implemented (Kimball, Ralph 2008). The main advantage of this approach is that it is straightforward to add information into the database. Some disadvantages of this approach are that, because of the number of tables involved, it can be difficult for users to join data from different sources into meaningful information and to access the information without a precise understanding of the sources of data and of the data structure of the data warehouse.
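The "web of joins" can be seen even in a very small normalized model. The sketch below, using Python's built-in `sqlite3` module, stores a hypothetical sales subject area in four tables; the schema and data are invented for illustration and are far smaller than an enterprise model.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.executescript("""
    CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE product  (product_id  INTEGER PRIMARY KEY, name TEXT, unit_price REAL);
    CREATE TABLE orders   (order_id    INTEGER PRIMARY KEY,
                           customer_id INTEGER REFERENCES customer(customer_id),
                           order_date  TEXT);
    CREATE TABLE order_line (order_id   INTEGER REFERENCES orders(order_id),
                             product_id INTEGER REFERENCES product(product_id),
                             quantity   INTEGER);

    INSERT INTO customer VALUES (1, 'alice'), (2, 'bob');
    INSERT INTO product  VALUES (10, 'widget', 10.0), (11, 'gadget', 15.0);
    INSERT INTO orders   VALUES (100, 1, '2024-01-05'), (101, 2, '2024-01-06');
    INSERT INTO order_line VALUES (100, 10, 3), (100, 11, 1), (101, 11, 2);
""")

# Answering "revenue per customer" already requires joining all four tables.
revenue = con.execute("""
    SELECT c.name, SUM(l.quantity * p.unit_price)
    FROM order_line AS l
    JOIN orders   AS o ON o.order_id = l.order_id
    JOIN customer AS c ON c.customer_id = o.customer_id
    JOIN product  AS p ON p.product_id = l.product_id
    GROUP BY c.name ORDER BY c.name
""").fetchall()
print(revenue)
```

Adding a new attribute or entity touches only one table, which reflects the ease of adding information; the cost is that users must know how the tables relate before they can write such a query.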

Both normalized and dimensional models can be represented in entity-relationship diagrams as both contain joined relational tables. The difference between the two models is the degree of normalization (also known as Normal Forms). These approaches are not mutually exclusive, and there are other approaches. Dimensional approaches can involve normalizing data to a degree (Kimball, Ralph 2008).

In ''Information-Driven Business'', Robert Hillard proposes an approach to comparing the two approaches based on the information needs of the business problem. The technique shows that normalized models hold far more information than their dimensional equivalents (even when the same fields are used in both models) but this extra information comes at the cost of usability. The technique measures information quantity in terms of information entropy and usability in terms of the Small Worlds data transformation measure.

Design methods

Bottom-up design

In the ''bottom-up'' approach, data marts are first created to provide reporting and analytical capabilities for specific business processes. These data marts can then be integrated to create a comprehensive data warehouse. The data warehouse bus architecture is primarily an implementation of "the bus", a collection of conformed dimensions and conformed facts, which are dimensions that are shared (in a specific way) between facts in two or more data marts.
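A conformed dimension is what lets separately built data marts be combined later. The following sketch, using Python's built-in `sqlite3` module, assumes two hypothetical marts (sales and inventory) that share one product dimension; all table names and figures are invented for illustration.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.executescript("""
    -- Conformed dimension shared by both marts (all names are hypothetical).
    CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT);
    INSERT INTO dim_product VALUES (1, 'widget'), (2, 'gadget');

    -- Fact table of the sales mart.
    CREATE TABLE fact_sales (product_id INTEGER, units_sold INTEGER);
    INSERT INTO fact_sales VALUES (1, 3), (2, 1), (2, 2);

    -- Fact table of the inventory mart.
    CREATE TABLE fact_inventory (product_id INTEGER, units_on_hand INTEGER);
    INSERT INTO fact_inventory VALUES (1, 40), (2, 7);
""")

# Aggregate each mart separately, then combine on the shared dimension key.
combined = con.execute("""
    SELECT d.name, s.sold, i.on_hand
    FROM dim_product AS d
    JOIN (SELECT product_id, SUM(units_sold) AS sold
          FROM fact_sales GROUP BY product_id) AS s
      ON s.product_id = d.product_id
    JOIN (SELECT product_id, SUM(units_on_hand) AS on_hand
          FROM fact_inventory GROUP BY product_id) AS i
      ON i.product_id = d.product_id
    ORDER BY d.name
""").fetchall()
print(combined)
```

Because both fact tables use the same `product_id` values with the same meaning, results from the two marts line up row by row; without that shared dimension the marts could not be integrated this simply.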

Top-down design

The ''top-down'' approach is designed using a normalized enterprise data model. "Atomic" data, that is, data at the greatest level of detail, are stored in the data warehouse. Dimensional data marts containing data needed for specific business processes or specific departments are created from the data warehouse (Gartner, ''Of Data Warehouses, Operational Data Stores, Data Marts and Data Outhouses'', Dec 2005).

Hybrid design

Data warehouses (DW) often resemble the hub and spokes architecture. Legacy systems feeding the warehouse often include customer relationship management and enterprise resource planning, generating large amounts of data. To consolidate these various data models, and facilitate the extract transform load process, data warehouses often make use of an operational data store, the information from which is parsed into the actual DW. To reduce data redundancy, larger systems often store the data in a normalized way. Data marts for specific reports can then be built on top of the data warehouse.
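The consolidation of differing source data models can be sketched as follows. The record layouts for the CRM and ERP systems, the `OdsCustomer` type, and the mapping functions are all hypothetical; the sketch only shows the idea of mapping each source's own field names and conventions onto one common shape before the data moves on to the warehouse.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OdsCustomer:
    """Common record shape used in the (hypothetical) operational data store."""
    customer_id: str
    full_name: str
    country_code: str
    source: str


def from_crm(record):
    """Map a CRM-style record (assumed field names) to the common shape."""
    return OdsCustomer(
        customer_id=str(record["CustomerId"]),
        full_name=f'{record["FirstName"]} {record["LastName"]}'.strip(),
        country_code=record["Country"].upper(),
        source="crm",
    )


def from_erp(record):
    """Map an ERP-style record (assumed field names) to the common shape."""
    return OdsCustomer(
        customer_id=str(record["cust_no"]),
        full_name=record["name"].strip(),
        country_code=record["ctry"].upper(),
        source="erp",
    )


crm_rows = [{"CustomerId": 17, "FirstName": "Ada", "LastName": "Lovelace", "Country": "gb"}]
erp_rows = [{"cust_no": "0042", "name": " Grace Hopper ", "ctry": "us"}]

# Both sources end up in one list with identical fields and conventions.
ods = [from_crm(r) for r in crm_rows] + [from_erp(r) for r in erp_rows]
```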

A hybrid DW database is kept in third normal form to eliminate data redundancy. A normal relational database, however, is not efficient for business intelligence reports where dimensional modelling is prevalent. Small data marts can shop for data from the consolidated warehouse and use the filtered, specific data for the fact tables and dimensions required. The DW provides a single source of information from which the data marts can read, providing a wide range of business information. The hybrid architecture allows a DW to be replaced with a master data management repository where operational (not static) information could reside.
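A minimal sketch of a data mart "shopping" from a normalized warehouse is shown below, again using an in-memory SQLite database. The table and column names are illustrative assumptions. The normalized tables are flattened into one dimension table and one fact table, which a report can then query with a single join.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Normalized warehouse tables (hypothetical names).
CREATE TABLE region   (region_id INTEGER PRIMARY KEY, region_name TEXT);
CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT, region_id INTEGER);
CREATE TABLE orders   (order_id INTEGER PRIMARY KEY, customer_id INTEGER,
                       order_date TEXT, amount REAL);

INSERT INTO region VALUES (1, 'North'), (2, 'South');
INSERT INTO customer VALUES (10, 'Acme', 1), (11, 'Globex', 2);
INSERT INTO orders VALUES
    (100, 10, '2024-02-01', 50.0),
    (101, 10, '2024-02-03', 25.0),
    (102, 11, '2024-02-03', 80.0);

-- Data mart: a flattened customer dimension and a filtered fact table.
CREATE TABLE dim_customer AS
SELECT c.customer_id, c.name, r.region_name
FROM customer c JOIN region r ON r.region_id = c.region_id;

CREATE TABLE fact_orders AS
SELECT order_id, customer_id, order_date, amount
FROM orders
WHERE order_date >= '2024-02-01';
""")

# Report query against the mart: one join instead of a chain of joins.
revenue_by_region = conn.execute("""
    SELECT d.region_name, SUM(f.amount)
    FROM fact_orders f JOIN dim_customer d ON d.customer_id = f.customer_id
    GROUP BY d.region_name
    ORDER BY d.region_name
""").fetchall()
```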

The data vault modeling components follow hub and spokes architecture. This modeling style is a hybrid design, consisting of the best practices from both third normal form and star schema. The data vault model is not a true third normal form, and breaks some of its rules, but it is a top-down architecture with a bottom-up design. The data vault model is geared to be strictly a data warehouse. It is not geared to be end-user accessible, so, once built, it still requires a data mart or star schema-based release area for business purposes.

Data warehouse characteristics

The data in a data warehouse is defined by a set of basic features: subject orientation, data integration, time variance, nonvolatility, and data granularity.

Subject-oriented

Unlike in operational systems, the data in the data warehouse revolves around the subjects of the enterprise. Subject orientation is not database normalization. Gathering the data required for a given subject in one place is what makes the warehouse subject-oriented, and it is useful for decision-making.

Integrated

The data found within the data warehouse is integrated. Since it comes from several operational systems, all inconsistencies must be removed. Consistency must be established in naming conventions, measurement of variables, encoding structures, physical attributes of data, and so forth.

Time-variant

While operational systems reflect current values as they support day-to-day operations, data warehouse data represents a long time horizon (up to 10 years), which means it stores mostly historical data. It is mainly meant for data mining and forecasting. For example, a user searching for the buying pattern of a specific customer needs to look at data on both current and past purchases.

Nonvolatile

The data in the data warehouse is read-only, which means it cannot be updated, created, or deleted (unless there is a regulatory or statutory obligation to do so).

Data warehouse options

Aggregation

In the data warehouse process, data can be aggregated in data marts at different levels of abstraction. The user may start by looking at the total sale units of a product in an entire region. Then the user looks at the states in that region. Finally, they may examine the individual stores in a certain state. The analysis therefore typically starts at a higher level and drills down to lower levels of detail.

Virtualization

With data virtualization, the data used remains in its original locations and real-time access is established to allow analytics across multiple sources, creating a virtual data warehouse. This can aid in resolving some technical difficulties such as compatibility problems when combining data from various platforms, lowering the risk of error caused by faulty data, and guaranteeing that the newest data is used. Furthermore, avoiding the creation of a new database containing personal information can make it easier to comply with privacy regulations. However, with data virtualization, the connection to all necessary data sources must be operational as there is no local copy of the data, which is one of the main drawbacks of the approach.

Data warehouse architecture

Organizations use many different methods to construct and organize a data warehouse. The hardware used, the software created, and the data resources required for the data warehouse to function correctly are the main components of the data warehouse architecture. All data warehouses go through multiple phases in which the requirements of the organization are modified and fine-tuned.

Versus operational system

Operational systems are optimized for the preservation of data integrity and speed of recording of business transactions through use of database normalization and an entity-relationship model. Operational system designers generally follow Codd's rules of database normalization to ensure data integrity. Fully normalized database designs (that is, those satisfying all of these normalization rules) often result in information from a business transaction being stored in dozens to hundreds of tables. Relational databases are efficient at managing the relationships between these tables. The databases have very fast insert/update performance because only a small amount of data in those tables is affected each time a transaction is processed. To improve performance, older data are usually periodically purged from operational systems.

Data warehouses are optimized for analytic access patterns. Analytic access patterns generally involve selecting specific fields and rarely if ever SELECT *, which selects all fields/columns, as is more common in operational databases. Because of these differences in access patterns, operational databases (loosely, OLTP) benefit from the use of a row-oriented DBMS whereas analytics databases (loosely, OLAP) benefit from the use of a column-oriented DBMS. Unlike operational systems, which maintain a snapshot of the business, data warehouses generally maintain an infinite history, which is implemented through ETL processes that periodically migrate data from the operational systems over to the data warehouse.
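The difference between the two access patterns can be seen in a small sketch that stores the same records in a row-oriented and a column-oriented layout. The field names and values are made up for illustration, and plain Python lists and dictionaries stand in for the storage engine; real column-oriented systems use far more elaborate on-disk formats.

```python
# Row-oriented layout: each record keeps all of its fields together.
rows = [
    {"order_id": 1, "customer": "Acme", "amount": 50.0, "status": "paid"},
    {"order_id": 2, "customer": "Globex", "amount": 80.0, "status": "open"},
    {"order_id": 3, "customer": "Acme", "amount": 25.0, "status": "paid"},
]


def to_columns(records):
    """Pivot row-oriented records into one list of values per field."""
    columns = {}
    for record in records:
        for field, value in record.items():
            columns.setdefault(field, []).append(value)
    return columns


# Column-oriented layout: all values of one field are stored together.
columns = to_columns(rows)

# Transactional access: fetch every field of a single record.
order_2 = rows[1]

# Analytic access: aggregate one field; only that column's list is read.
total_amount = sum(columns["amount"])
```

In the row layout, reading one order touches a single record, while summing `amount` has to visit every record and skip the other fields. In the column layout the sum reads one contiguous list, at the cost of having to touch several lists to reassemble a full record.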

Evolution in organization use

These terms refer to the level of sophistication of a data warehouse:

; Offline operational data warehouse: Data warehouses in this stage of evolution are updated on a regular time cycle (usually daily, weekly or monthly) from the operational systems and the data is stored in an integrated reporting-oriented database.
; Offline data warehouse: Data warehouses at this stage are updated from data in the operational systems on a regular basis and the data warehouse data are stored in a data structure designed to facilitate reporting.
; On-time data warehouse: Online integrated data warehousing represents the real-time stage, in which data in the warehouse is updated for every transaction performed on the source data.
; Integrated data warehouse: These data warehouses assemble data from different areas of business, so users can look up the information they need across other systems.

See also

* List of business intelligence software
* Data mesh, a domain-oriented data architecture paradigm for managing big data
* Virtual Database Manager, represents non-relational data in a virtual data warehouse

References

Further reading

* Davenport, Thomas H. and Harris, Jeanne G. ''Competing on Analytics: The New Science of Winning'' (2007) Harvard Business School Press.
* Ganczarski, Joe. ''Data Warehouse Implementations: Critical Implementation Factors Study'' (2009) VDM Verlag.
* Kimball, Ralph and Ross, Margy. ''The Data Warehouse Toolkit'' Third Edition (2013) Wiley.
* Linstedt, Graziano, Hultgren. ''The Business of Data Vault Modeling'' Second Edition (2010) Dan Linstedt.
* Inmon, William. ''Building the Data Warehouse'' (2005) John Wiley and Sons.