Dimension (Data Warehouse)

A dimension is a structure that categorizes facts and measures in order to enable users to answer business questions. Commonly used dimensions are people, products, place and time. (Note: People and time sometimes are not modeled as dimensions.) In a data warehouse, dimensions provide structured labeling information to otherwise unordered numeric measures. The dimension is a data set composed of individual, non-overlapping data elements. The primary functions of dimensions are threefold: to provide filtering, grouping and labeling. These functions are often described as "slice and dice". A common data warehouse example involves sales as the measure, with customer and product as dimensions. In each sale a customer buys a product. The data can be sliced by removing all customers except for a group under study, and then diced by grouping by product. A dimensional data element is similar to a categorical variable in statistics. Typically dimensions in a data warehouse are organiz ...
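The slice-and-dice example in this entry can be sketched in a few lines of Python. The sample rows, the column names and the `slice_and_dice` helper are illustrative assumptions, not part of the source article.

```python
from collections import defaultdict

# Hypothetical sales facts: each row is one sale with two dimension
# values (customer, product) and one measure (amount).
sales = [
    {"customer": "alice", "product": "widget", "amount": 10.0},
    {"customer": "bob", "product": "widget", "amount": 5.0},
    {"customer": "alice", "product": "gadget", "amount": 7.5},
    {"customer": "carol", "product": "gadget", "amount": 2.5},
]


def slice_and_dice(rows, customers_under_study):
    """Keep only the customers under study (slice), then total the
    sales measure per product (dice)."""
    totals = defaultdict(float)
    for row in rows:
        if row["customer"] in customers_under_study:  # slice: filter on a dimension
            totals[row["product"]] += row["amount"]   # dice: group by a dimension
    return dict(totals)


result = slice_and_dice(sales, {"alice", "bob"})
print(result)
```

Here the customer dimension does the filtering and the product dimension does the grouping and labeling; the amount is the only numeric measure.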

Data Warehouse

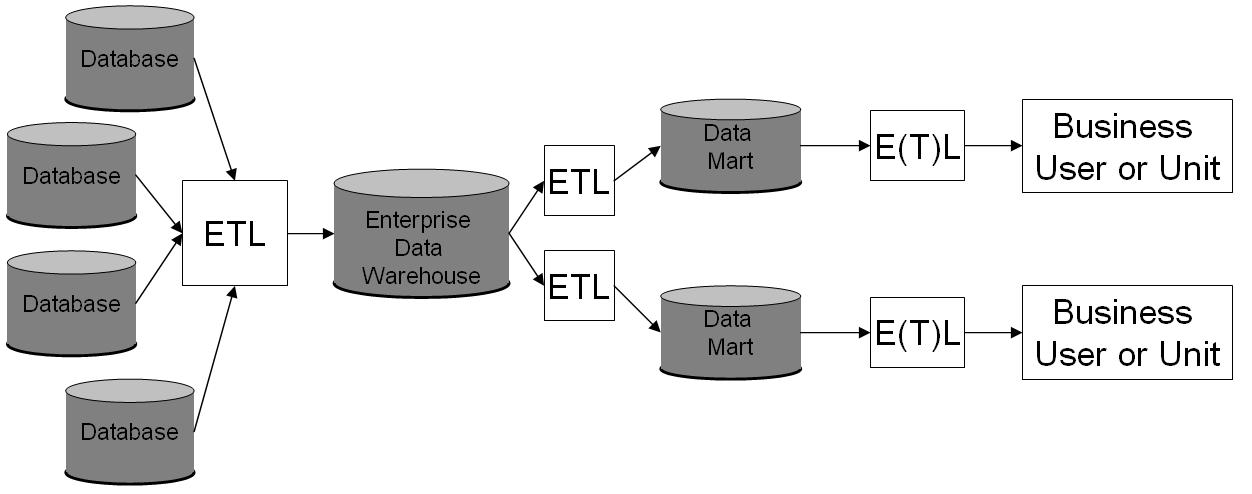

In computing, a data warehouse (DW or DWH), also known as an enterprise data warehouse (EDW), is a system used for reporting and data analysis and is considered a core component of business intelligence. DWs are central repositories of integrated data from one or more disparate sources. They store current and historical data in a single place, where it is used for creating analytical reports for workers throughout the enterprise. The data stored in the warehouse is uploaded from the operational systems (such as marketing or sales). The data may pass through an operational data store and may require data cleansing for additional operations to ensure data quality before it is used in the DW for reporting. Extract, transform, load (ETL) and extract, load, transform (ELT) are the two main approaches used to build a data warehouse system.

ETL-based data warehousing: The typical extract, transform, load (ETL)-based data warehouse uses ...

Candidate Key

A candidate key, or simply a key, of a relational database is a minimal superkey. In other words, it is any set of columns that have a unique combination of values in each row (which makes it a superkey), with the additional constraint that removing any column could produce duplicate rows (which makes it a minimal superkey). Specific candidate keys are sometimes called primary keys, secondary keys or alternate keys. The columns in a candidate key are called prime attributes, and a column that does not occur in any candidate key is called a non-prime attribute. Every relation without NULL values will have at least one candidate key: since there cannot be duplicate rows, the set of all columns is a superkey, and if that isn't minimal, some subset of that will be minimal. There is a functional dependency from the candidate key to all the attributes in the relation. The candidate keys of a relation are all the possible ways we can identify a row. As such, they are an impo ...
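As a rough illustration of "minimal superkey", the sketch below enumerates column subsets from smallest to largest and keeps those that are unique across the sample rows and contain no smaller key. The table contents and function names are assumptions made for the example. Strictly, a candidate key is a property of the schema (all valid data), so a check against sample rows can only suggest keys, not prove them.

```python
from itertools import combinations


def is_superkey(rows, columns):
    """True if the chosen columns take a unique combination of values in every row."""
    seen = {tuple(row[c] for c in columns) for row in rows}
    return len(seen) == len(rows)


def candidate_keys(rows, all_columns):
    """Return every minimal superkey, judged against the given rows only."""
    keys = []
    for size in range(1, len(all_columns) + 1):
        for combo in combinations(all_columns, size):
            # A superset of a key already found cannot be minimal.
            if any(set(k) <= set(combo) for k in keys):
                continue
            if is_superkey(rows, combo):
                keys.append(combo)
    return keys


# Hypothetical relation used only for illustration.
employees = [
    {"emp_id": 1, "email": "a@x", "dept": "eng", "desk": 1},
    {"emp_id": 2, "email": "b@x", "dept": "eng", "desk": 2},
    {"emp_id": 3, "email": "c@x", "dept": "ops", "desk": 1},
]

keys = candidate_keys(employees, ["emp_id", "email", "dept", "desk"])
print(keys)
```

In this sample, `emp_id` and `email` are each keys on their own, and `dept` with `desk` forms a composite key; neither `dept` nor `desk` is unique alone, which is what makes the pair minimal.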

Fact Table

In data warehousing, a fact table consists of the measurements, metrics or facts of a business process. It is located at the center of a star schema or a snowflake schema surrounded by dimension tables. Where multiple fact tables are used, these are arranged as a fact constellation schema. A fact table typically has two types of columns: those that contain facts and those that are foreign keys to dimension tables. The primary key of a fact table is usually a composite key that is made up of all of its foreign keys. Fact tables contain the content of the data warehouse and store different types of measures like additive, non-additive, and semi-additive measures. Fact tables provide the (usually) additive values that act as independent variables by which dimensional attributes are analyzed. Fact tables are often defined by their ''grain''. The grain of a fact table represents the most atomic level by which the facts may be defined. The grain of a sales fact table might be stated as ...
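A minimal star-schema sketch using Python's built-in `sqlite3` module may make the column roles concrete. The table names (`fact_sales`, `dim_product`, `dim_date`), their columns and the sample rows are hypothetical; the fact table holds one additive measure and a composite primary key built from its foreign keys, as the entry describes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, year INTEGER);

-- Fact table: foreign keys to the dimensions plus one additive measure.
-- The grain assumed here is one row per product per date.
CREATE TABLE fact_sales (
    product_id INTEGER REFERENCES dim_product(product_id),
    date_id INTEGER REFERENCES dim_date(date_id),
    amount REAL,
    PRIMARY KEY (product_id, date_id)
);

INSERT INTO dim_product VALUES (1, 'toys'), (2, 'toys'), (3, 'books');
INSERT INTO dim_date VALUES (10, 2023), (11, 2024);
INSERT INTO fact_sales VALUES (1, 10, 4.0), (2, 10, 6.0), (3, 10, 5.0), (1, 11, 3.0);
""")

# Sum the additive measure, grouped by a dimension attribute.
totals = conn.execute("""
    SELECT p.category, SUM(f.amount)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_id = f.product_id
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
print(totals)
```

Because `amount` is additive, it can be summed across any combination of dimension values; a semi-additive measure such as an account balance could not safely be summed across dates in the same way.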

Slowly Changing Dimension

A slowly changing dimension (SCD) in data management and data warehousing is a dimension that contains relatively static data that can change slowly but unpredictably, rather than according to a regular schedule. Some examples of typical slowly changing dimensions are entities such as names of geographical locations, customers, or products. Some scenarios can cause referential integrity problems. For example, a database may contain a fact table that stores sales records. This fact table would be linked to dimensions by means of foreign keys. One of these dimensions may contain data about the company's salespeople: e.g., the regional offices in which they work. However, the salespeople are sometimes transferred from one regional office to another. For historical sales reporting purposes it may be necessary to keep a record of the fact that a particular salesperson had been assigned to a particular regional office at an earlier date, whereas that salesperson is now assigned to a ...

Degenerate Dimension

According to Ralph Kimball, in a data warehouse, a degenerate dimension is a dimension key in the fact table that does not have its own dimension table, because all the interesting attributes have been placed in analytic dimensions. The term "degenerate dimension" was originated by Ralph Kimball. As Bob Becker says:

Other uses of the term: Although most writers and practitioners use the term degenerate dimension correctly, it is very easy to find misleading definitions in online and printed sources. For example, the Oracle FAQ defines a degenerate dimension as a "data dimension that is stored in the fact table rather than a separate dimension table. This eliminates the need to join to a dimension table. You can use the data in the degenerate dimension to limit or 'slice and dice' your fact table measures." This common interpretation implies that it is good dimensional modeling practice to place dimension attributes in the fact table, as long as you call them a degenerate dimension. ...

Categorical Variable

In statistics, a categorical variable (also called qualitative variable) is a variable that can take on one of a limited, and usually fixed, number of possible values, assigning each individual or other unit of observation to a particular group or nominal category on the basis of some qualitative property. In computer science and some branches of mathematics, categorical variables are referred to as enumerations or enumerated types. Commonly (though not in this article), each of the possible values of a categorical variable is referred to as a level. The probability distribution associated with a random categorical variable is called a categorical distribution. Categorical data is the statistical data type consisting of categorical variables or of data that has been converted into that form, for example as grouped data. More specifically, categorical data may derive from observations made of qualitative data that are summarised as counts or cross tabulations, or from observations o ...
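The link between categorical variables and enumerated types can be shown with Python's standard `enum` module. The `Color` type and the observations below are made up for illustration.

```python
from collections import Counter
from enum import Enum


class Color(Enum):
    """A categorical variable with a fixed set of three levels."""
    RED = "red"
    GREEN = "green"
    BLUE = "blue"


# Hypothetical observations, one categorical value per unit observed.
observations = [Color.RED, Color.BLUE, Color.RED, Color.GREEN, Color.RED]

# Summarise the qualitative observations as counts per category.
counts = Counter(observations)
print(counts[Color.RED], counts[Color.GREEN], counts[Color.BLUE])
```

The enumeration fixes the set of possible values in advance, and the counts are the kind of summary the entry refers to as grouped data.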

Coordinated Universal Time

Coordinated Universal Time or UTC is the primary time standard by which the world regulates clocks and time. It is within about one second of mean solar time (such as UT1) at 0° longitude (at the IERS Reference Meridian as the currently used prime meridian) and is not adjusted for daylight saving time. It is effectively a successor to Greenwich Mean Time (GMT). The coordination of time and frequency transmissions around the world began on 1 January 1960. UTC was first officially adopted as CCIR Recommendation 374, ''Standard-Frequency and Time-Signal Emissions'', in 1963, but the official abbreviation of UTC and the official English name of Coordinated Universal Time (along with the French equivalent) were not adopted until 1967. The system has been adjusted several times, including a brief period during which the time-coordination radio signals broadcast both UTC and "Stepped Atomic Time (SAT)" before a new UTC was adopted in 1970 and implemented in 1972. This change also a ...

Tuple-versioning

Tuple-versioning (also called point-in-time) is a mechanism used in a relational database management system to store past states of a relation. Normally, only the current state is captured. Using tuple-versioning techniques, typically two values for time are stored along with each tuple: a start time and an end time. These two values indicate the validity of the rest of the values in the tuple. Typically when tuple-versioning techniques are used, the current tuple has a valid start time, but a null value for end time. Therefore, it is easy and efficient to obtain the current values for all tuples by querying for the null end time. A single query that searches for tuples with start time less than, and end time greater than, a given time (where null end time is treated as a value greater than the given time) will give as a result the valid tuples at the given time. For example, if a person's job changes from Engineer to Manager, there would be two tuples in an Employee table, ...
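The two queries described in this entry, "current tuples" and "tuples valid at a given time", can be sketched in plain Python. The employee name, the dates and the helper names are invented for the example. The sketch also assumes a half-open validity interval (start inclusive, end exclusive), so that a query made exactly at the changeover time returns a single tuple; the entry itself only says "less than" and "greater than".

```python
from datetime import date

# Hypothetical Employee relation: (name, job, start, end).
# end=None plays the role of the null end time on the current tuple.
employees = [
    ("Pat", "Engineer", date(2020, 1, 1), date(2022, 6, 1)),
    ("Pat", "Manager", date(2022, 6, 1), None),
]


def current(rows):
    """Current state: the tuples whose end time is null."""
    return [r for r in rows if r[3] is None]


def as_of(rows, when):
    """Tuples valid at `when`; a null end time counts as later than any date.
    Assumes start is inclusive and end is exclusive."""
    return [r for r in rows if r[2] <= when and (r[3] is None or when < r[3])]


print(current(employees))
print(as_of(employees, date(2021, 3, 1)))
```

With these rows, the current state shows Pat as Manager, while the point-in-time query for March 2021 returns the Engineer tuple.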

Ralph Kimball

Ralph Kimball (born July 18, 1944) is an author on the subject of data warehousing and business intelligence. He is one of the original architects of data warehousing and is known for long-term convictions that data warehouses must be designed to be understandable and fast. His bottom-up methodology, also known as dimensional modeling or the Kimball methodology, is one of the two main data warehousing methodologies alongside that of Bill Inmon. He is the principal author of the best-selling books ''The Data Warehouse Toolkit'', ''The Data Warehouse Lifecycle Toolkit'', ''The Data Warehouse ETL Toolkit'' and ''The Kimball Group Reader'', published by Wiley and Sons.

Career: After receiving a Ph.D. in 1973 from Stanford University in electrical engineering (specializing in man-machine systems), Ralph joined the Xerox Palo Alto Research Center (PARC). At PARC Ralph was a principal designer of the Xerox Star Workstation, the first commercial product to use mice, icons and windows. Kimball the ...

Extract, Transform, Load

In computing, extract, transform, load (ETL) is a three-phase process where data is extracted, transformed (cleaned, sanitized, scrubbed) and loaded into an output data container. The data can be collated from one or more sources and it can also be output to one or more destinations. ETL processing is typically executed using software applications but it can also be done manually by system operators. ETL software typically automates the entire process and can be run manually or on recurring schedules either as single jobs or aggregated into a batch of jobs. A properly designed ETL system extracts data from source systems, enforces data type and data validity standards, and ensures the data conforms structurally to the requirements of the output. Some ETL systems can also deliver data in a presentation-ready format so that application developers can build applications and end users can make decisions. The ETL process became a popular concept in the 1970s and is often used in d ...
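The three phases can be sketched with the Python standard library alone. Everything specific here is an assumption for illustration: the inline CSV source, the `payments` table and the rule that rows failing type checks are dropped. Real ETL tools typically log or quarantine rejected rows rather than discarding them silently.

```python
import csv
import io
import sqlite3

# Hypothetical source data; the second row carries an invalid amount.
RAW = "id,name,amount\n1, Alice ,10.5\n2,Bob,not-a-number\n3,carol,4\n"


def extract(text):
    """Extract: read the raw rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: enforce data types, tidy names, drop rows that fail validation."""
    clean = []
    for row in rows:
        try:
            clean.append((int(row["id"]), row["name"].strip().title(), float(row["amount"])))
        except ValueError:
            continue  # skipped in this sketch; see the note above
    return clean


def load(rows, conn):
    """Load: write the cleaned rows into the output container."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS payments (id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO payments VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW)), conn)
loaded = conn.execute("SELECT id, name, amount FROM payments ORDER BY id").fetchall()
print(loaded)
```

Keeping each phase as a separate function mirrors the extract, transform and load stages, and lets each one be tested or replaced independently.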

Data Integration

Data integration involves combining data residing in different sources and providing users with a unified view of them. This process becomes significant in a variety of situations, which include both commercial (such as when two similar companies need to merge their databases) and scientific (combining research results from different bioinformatics repositories, for example) domains. Data integration appears with increasing frequency as the volume of data (that is, big data) and the need to share existing data explode. It has become the focus of extensive theoretical work, and numerous open problems remain unsolved. Data integration encourages collaboration between internal as well as external users. The data being integrated must be received from a heterogeneous database system and transformed to a single coherent data store that provides synchronous data across a network of files for clients. A common use of data integration is in data mining when analyzing and extracting informati ...