
Quasi-opportunistic Supercomputing

Quasi-opportunistic supercomputing is a computational paradigm for supercomputing on a large number of geographically dispersed computers. It aims to provide a higher quality of service than opportunistic resource sharing. The quasi-opportunistic approach coordinates computers, which are often under different ownership, to achieve reliable and fault-tolerant high performance with more control than opportunistic computer grids, in which computational resources are used whenever they become available (Valentin Kravtsov, David Carmeli, Werner Dubitzky, Ariel Orda, Assaf Schuster, Benny Yoshpa, "Quasi-opportunistic supercomputing in grids", IEEE International Symposium on High Performance Distributed Computing, 2007, pages 233-24). While the "opportunistic match-making" approach to task scheduling on computer grids is simpler in that it merely matches tasks to whatever resources may be available at a given time, demanding supercompute ...
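The opportunistic match-making idea described above can be illustrated with a minimal sketch. It is not taken from the cited paper: the `Resource` and `Task` types, the CPU-count model, and the first-fit rule are all assumptions chosen for illustration. The scheduler simply gives each task to the first resource that happens to have enough free capacity at that moment, with no reservation or co-allocation guarantees.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    # Hypothetical model: a machine is described only by its free CPU count.
    name: str
    free_cpus: int


@dataclass
class Task:
    name: str
    cpus: int


def opportunistic_match(tasks, resources):
    """Assign each task to the first resource with enough free CPUs right now.

    Tasks that fit nowhere are mapped to None; nothing is reserved for them.
    """
    placement = {}
    for task in tasks:
        for res in resources:
            if res.free_cpus >= task.cpus:
                res.free_cpus -= task.cpus
                placement[task.name] = res.name
                break
        else:
            # No resource was available at this moment.
            placement[task.name] = None
    return placement


if __name__ == "__main__":
    pool = [Resource("a", 4), Resource("b", 2)]
    jobs = [Task("t1", 3), Task("t2", 2), Task("t3", 2)]
    print(opportunistic_match(jobs, pool))
```

In this toy run the last task is left unplaced even though three CPUs were free in total at the start, which hints at why applications that need many resources at once may want more coordination than pure match-making provides.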

Pleiades Supercomputer

Pleiades is a petascale supercomputer housed at the NASA Advanced Supercomputing (NAS) facility at NASA's Ames Research Center, located at Moffett Field near Mountain View, California. It is maintained by NASA and partners Hewlett Packard Enterprise (formerly Silicon Graphics International) and Intel. As of November 2019, it was ranked the 32nd most powerful computer on the TOP500 list, with a LINPACK rating of 5.95 petaflops (5.95 quadrillion floating point operations per second) and a peak performance of 7.09 petaflops from its most recent hardware upgrade. The system serves as NASA's largest supercomputing resource, supporting missions in aeronautics, human spaceflight, astrophysics, and Earth science.

History: Built in 2008 and named for the Pleiades open star cluster, the supercomputer debuted as the third most powerful supercomputer in the world ...

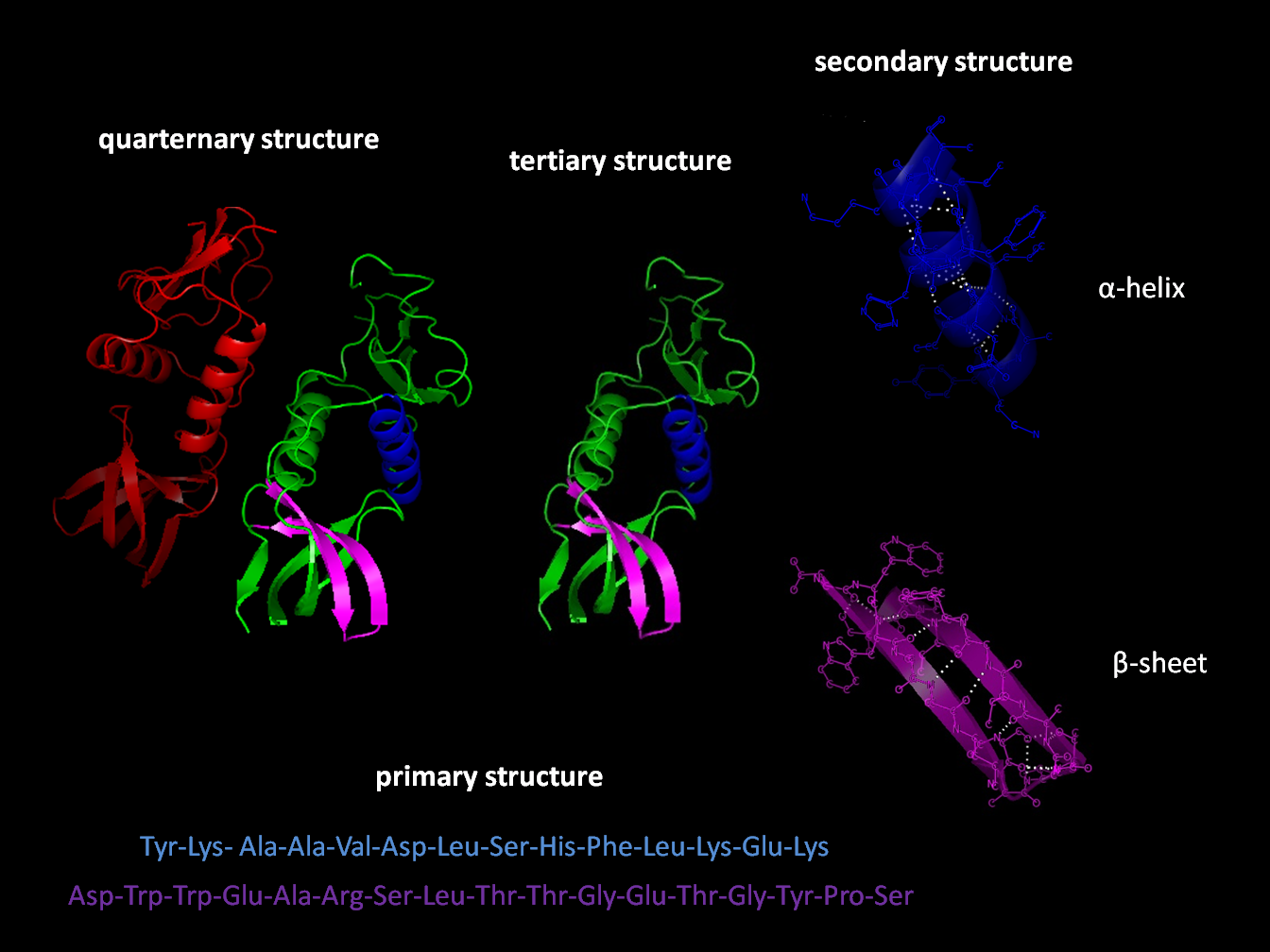

Protein Folding

Protein folding is the physical process by which a protein chain is translated to its native three-dimensional structure, typically a "folded" conformation by which the protein becomes biologically functional. Via an expeditious and reproducible process, a polypeptide folds into its characteristic three-dimensional structure from a random coil. Each protein exists first as an unfolded polypeptide or random coil after being translated from a sequence of mRNA to a linear chain of amino acids. At this stage the polypeptide lacks any stable (long-lasting) three-dimensional structure (the left-hand side of the first figure). As the polypeptide chain is being synthesized by a ribosome, the linear chain begins to fold into its three-dimensional structure. Folding of many proteins begins even during translation of the polypeptide chain. Amino acids interact with each other to produce a well-defined three-dimensional structure, the folded protein (the right-hand side of the figure), ...

Fault Tolerant

Fault tolerance is the property that enables a system to continue operating properly in the event of the failure of, or one or more faults within, some of its components. If its operating quality decreases at all, the decrease is proportional to the severity of the failure, as compared to a naively designed system, in which even a small failure can cause total breakdown. Fault tolerance is particularly sought after in high-availability, mission-critical, or even life-critical systems. The ability to maintain functionality when portions of a system break down is referred to as graceful degradation. A fault-tolerant design enables a system to continue its intended operation, possibly at a reduced level, rather than failing completely, when some part of the system fails. The term is most commonly used to describe computer systems designed to continue more or less fully operational with, perhaps, a reduction in throughput or an increase in response time in the event of some partial fa ...

Computer Clusters

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The components of a cluster are usually connected to each other through fast local area networks, with each node (computer used as a server) running its own instance of an operating system. In most circumstances, all of the nodes use the same hardware and the same operating system, although in some setups (e.g. using Open Source Cluster Application Resources (OSCAR)), different operating systems can be used on each computer, or different hardware. Clusters are usually deployed to improve performance and availability over that of a single computer, while typically being much more cost-effective than single computers of comparable speed or availability. Computer clusters emerged as a result of convergence of a number of computing trends including t ...

European Community

The European Economic Community (EEC) was a regional organization created by the Treaty of Rome of 1957, aiming to foster economic integration among its member states. (Today the largely rewritten treaty continues in force as the Treaty on the Functioning of the European Union, as renamed by the Lisbon Treaty.) It was subsequently renamed the European Community (EC) upon becoming integrated into the first pillar of the newly formed European Union in 1993. In popular language, however, the singular European Community was sometimes inaccurately used in the wider sense of the plural European Communities, in spite of the latter designation covering all three constituent entities of the first pillar. In 2009, the EC formally ceased to exist and its institutions were directly absorbed by the EU. This made the Union the formal successor institution of the Community. The Community's initial aim was to bring about economic integration, including a common market and ...

DEISA

The Distributed European Infrastructure for Supercomputing Applications (DEISA) was a European Union supercomputer project. A consortium of eleven national supercomputing centres from seven European countries promoted pan-European research on European high-performance computing systems. By extending the European collaborative environment in the area of supercomputing, DEISA followed suggestions of the European Strategy Forum on Research Infrastructures.

History: The DEISA project started as DEISA1 in 2002, developing and supporting a pan-European distributed high-performance computing infrastructure. The initial project was funded by the European Commission in the sixth of the Framework Programmes for Research and Technological Development (FP6) from 2004 through 2008. The funding continued for the follow-up project DEISA2 in the Seventh Framework Programme (FP7) through 2011. The DEISA infrastructure coupled eleven national supercomputing centres with a dedicated (mostly 10 ...

High Performance Computing

High-performance computing (HPC) uses supercomputers and computer clusters to solve advanced computation problems.

Overview: HPC integrates systems administration (including network and security knowledge) and parallel programming into a multidisciplinary field that combines digital electronics, computer architecture, system software, programming languages, algorithms and computational techniques. HPC technologies are the tools and systems used to implement and create high-performance computing systems. Recently, HPC systems have shifted from supercomputing to computing clusters and grids. Because clusters and grids depend on networking, HPC technologies are often deployed on a collapsed network backbone: this architecture is simple to troubleshoot, and upgrades can be applied to a single router rather than to multiple ones. The term is most commonly associated with computing used for scientific research or com ...

Volunteer Computing

Volunteer computing is a type of distributed computing in which people donate their computers' unused resources to a research-oriented project, sometimes in exchange for credit points. The fundamental idea behind it is that a modern desktop computer is sufficiently powerful to perform billions of operations a second, but for most users only 10 to 15% of its capacity is used. Typical uses like basic word processing or web browsing leave the computer mostly idle. The practice of volunteer computing, which dates back to the mid-1990s, can potentially make substantial processing power available to researchers at minimal cost. Typically, a program running on a volunteer's computer periodically contacts a research application to request jobs and report results. A middleware system usually serves as an intermediary.

History: The first volunteer computing project was the Great Internet Mersenne Prime Search, which was started in January 1996. It was followed in 1997 by distribute ...
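The request-and-report cycle described above can be sketched in a few lines. This is an illustrative toy, not the protocol of any real project: `ProjectServer`, `request_job`, `report_result`, and `volunteer_client` are hypothetical names, and the "server" is an in-memory object rather than a network service reached through middleware.

```python
from collections import deque


class ProjectServer:
    """Hypothetical in-memory stand-in for a research project's job server."""

    def __init__(self, jobs):
        self.pending = deque(jobs)
        self.results = {}

    def request_job(self):
        # Hand out the next pending job, or None when the queue is empty.
        return self.pending.popleft() if self.pending else None

    def report_result(self, job, result):
        self.results[job] = result


def volunteer_client(server, compute, max_polls=10):
    """Poll the server for jobs, compute each one, and report the result.

    Returns the number of jobs completed. A real client would wait between
    polls and run only while the machine is otherwise idle.
    """
    done = 0
    for _ in range(max_polls):
        job = server.request_job()
        if job is None:
            break
        server.report_result(job, compute(job))
        done += 1
    return done


if __name__ == "__main__":
    server = ProjectServer([2, 3, 4])
    print(volunteer_client(server, lambda n: n * n), server.results)
```

The client pulls work rather than having it pushed, which suits machines that may appear and disappear at any time.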

Mersenne Prime

In mathematics, a Mersenne prime is a prime number that is one less than a power of two. That is, it is a prime number of the form $M_n = 2^n - 1$ for some integer $n$. They are named after Marin Mersenne, a French Minim friar, who studied them in the early 17th century. If $n$ is a composite number then so is $2^n - 1$. Therefore, an equivalent definition of the Mersenne primes is that they are the prime numbers of the form $M_p = 2^p - 1$ for some prime $p$. The exponents $n$ which give Mersenne primes are 2, 3, 5, 7, 13, 17, 19, 31, ... and the resulting Mersenne primes are 3, 7, 31, 127, 8191, 131071, 524287, 2147483647, ... . Numbers of the form $M_n = 2^n - 1$ without the primality requirement may be called Mersenne numbers. Sometimes, however, Mersenne numbers are defined to have the additional requirement that $n$ be prime. The smallest composite Mersenne number with prime exponent $n$ is $2^{11} - 1 = 2047 = 23 \times 89$. Mersenne primes were studied in antiquity because of their close connection to perfect numbers: the Euclid–Euler theorem as ...
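The list of exponents above can be reproduced with a short script. The excerpt does not say how primality is checked, so this sketch uses the Lucas-Lehmer test, a standard test for Mersenne numbers, as an assumption; the function names are illustrative.

```python
def is_prime(n: int) -> bool:
    """Trial division; adequate for the small exponents used here."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def lucas_lehmer(p: int) -> bool:
    """Return True if 2**p - 1 is prime. Valid only for prime p."""
    if p == 2:
        return True  # 2**2 - 1 = 3 is prime; the recurrence needs odd p
    m = (1 << p) - 1
    s = 4
    for _ in range(p - 2):
        s = (s * s - 2) % m
    return s == 0


def mersenne_exponents(limit: int) -> list[int]:
    """Prime exponents p <= limit for which 2**p - 1 is prime."""
    return [p for p in range(2, limit + 1) if is_prime(p) and lucas_lehmer(p)]


if __name__ == "__main__":
    exps = mersenne_exponents(31)
    print(exps)
    print([(1 << p) - 1 for p in exps])
```

Running it up to exponent 31 yields the eight exponents and the eight primes listed in the paragraph, and `lucas_lehmer(11)` is false because $2^{11} - 1 = 2047$ is composite.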

Great Internet Mersenne Prime Search

The Great Internet Mersenne Prime Search (GIMPS) is a collaborative project of volunteers who use freely available software to search for Mersenne prime numbers. GIMPS was founded in 1996 by George Woltman, who also wrote the Prime95 client and its Linux port MPrime. Scott Kurowski wrote the back-end PrimeNet server to demonstrate volunteer computing software by Entropia, a company he founded in 1997. GIMPS is registered as Mersenne Research, Inc. with Kurowski as Executive Vice President and board director. GIMPS is said to be one of the first large-scale volunteer computing projects over the Internet for research purposes. The project has found a total of seventeen Mersenne primes, fifteen of which were the largest known prime number at their respective times of discovery. The largest known prime is $2^{82{,}589{,}933} - 1$ (or $M_{82{,}589{,}933}$ for short) and was discovered on December 7, 2018, by Patrick Laroche. On December 4, 2020, the project passed a major milestone afte ...

Middleware

Middleware is a type of computer software that provides services to software applications beyond those available from the operating system. It can be described as "software glue". Middleware makes it easier for software developers to implement communication and input/output, so they can focus on the specific purpose of their application. It gained popularity in the 1980s as a solution to the problem of how to link newer applications to older legacy systems, although the term had been in use since 1968.

In distributed applications: The term is most commonly used for software that enables communication and management of data in distributed applications. An IETF workshop in 2000 defined middleware as "those services found above the transport (i.e. over TCP/IP) layer set of services but below the application environment" (i.e. below application-level APIs). In this more specific sense middleware can be described as the dash ("-") in client-server, or the -to- in peer ...