Quantities Of Information

The mathematical theory of information is based on probability theory and statistics, and measures information with several quantities of information. The choice of logarithmic base in the following formulae determines the unit of information entropy that is used. The most common unit of information is the bit, or more correctly the shannon, based on the binary logarithm. Although "bit" is used more frequently than "shannon", the name does not distinguish it from the bit as used in data processing, where it refers to a binary value or stream regardless of its entropy (information content). Other units include the nat, based on the natural logarithm, and the hartley, based on the base-10 (common) logarithm. In what follows, an expression of the form $p \log p$ is considered by convention to be equal to zero whenever $p$ is zero. This is justified because $\lim_{p \to 0^+} p \log p = 0$ for any logarithmic base. Self-information: Shannon derived a measure of information content cal ...
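
As a minimal sketch of how the logarithmic base sets the unit and how the zero-probability convention is applied, the following computes the Shannon entropy $-\sum p \log p$ of a discrete distribution. The function name `entropy` and the example distributions are illustrative assumptions, not part of the source.

```python
import math

def entropy(probs, base=2.0):
    """Shannon entropy of a discrete distribution given as a list of probabilities.

    base=2 gives shannons (bits), base=math.e gives nats, base=10 gives hartleys.
    Terms with p == 0 are skipped, which implements the convention 0 log 0 = 0.
    """
    return -sum(p * math.log(p, base) for p in probs if p > 0)

# Hypothetical example distributions.
fair_coin = [0.5, 0.5]
certain = [1.0, 0.0]

print(entropy(fair_coin))            # entropy in shannons
print(entropy(fair_coin, math.e))    # same distribution, measured in nats
print(entropy(certain))              # the zero-probability term contributes nothing
```

Changing `base` only rescales the result by a constant factor, which is why the choice of base amounts to a choice of unit.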

Binary Entropy Function

Binary may refer to:

Science and technology

Mathematics
* Binary number, a representation of numbers using only two values (0 and 1) for each digit
* Binary function, a function that takes two arguments
* Binary operation, a mathematical operation that takes two arguments
* Binary relation, a relation involving two elements
* Finger binary, a system for counting in binary numbers on the fingers of human hands

Computing
* Binary code, the representation of text and data using only the digits 1 and 0
* Bit, or binary digit, the basic unit of information in computers
* Binary file, composed of something other than human-readable text
  * Executable, a type of binary file that contains machine code for the computer to execute
* Binary tree, a computer tree data structure in which each node has at most two children
* Binary-coded decimal, a method for encoding decimal digits in binary sequences

Astronomy
* Binary star, a star system with two stars in it
* Binary planet, t ...

Posterior Probability

The posterior probability is a type of conditional probability that results from updating the prior probability with information summarized by the likelihood via an application of Bayes' rule. From an epistemological perspective, the posterior probability contains everything there is to know about an uncertain proposition (such as a scientific hypothesis, or parameter values), given prior knowledge and a mathematical model describing the observations available at a particular time. After the arrival of new information, the current posterior probability may serve as the prior in another round of Bayesian updating. In the context of Bayesian statistics, the posterior probability distribution usually describes the epistemic uncertainty about statistical parameters conditional on a collection of observed data. From a given posterior distribution, various point and interval estimates can be derived, such as the maximum a posteriori (MAP) or the highest posterior density int ...

Kullback–Leibler Divergence

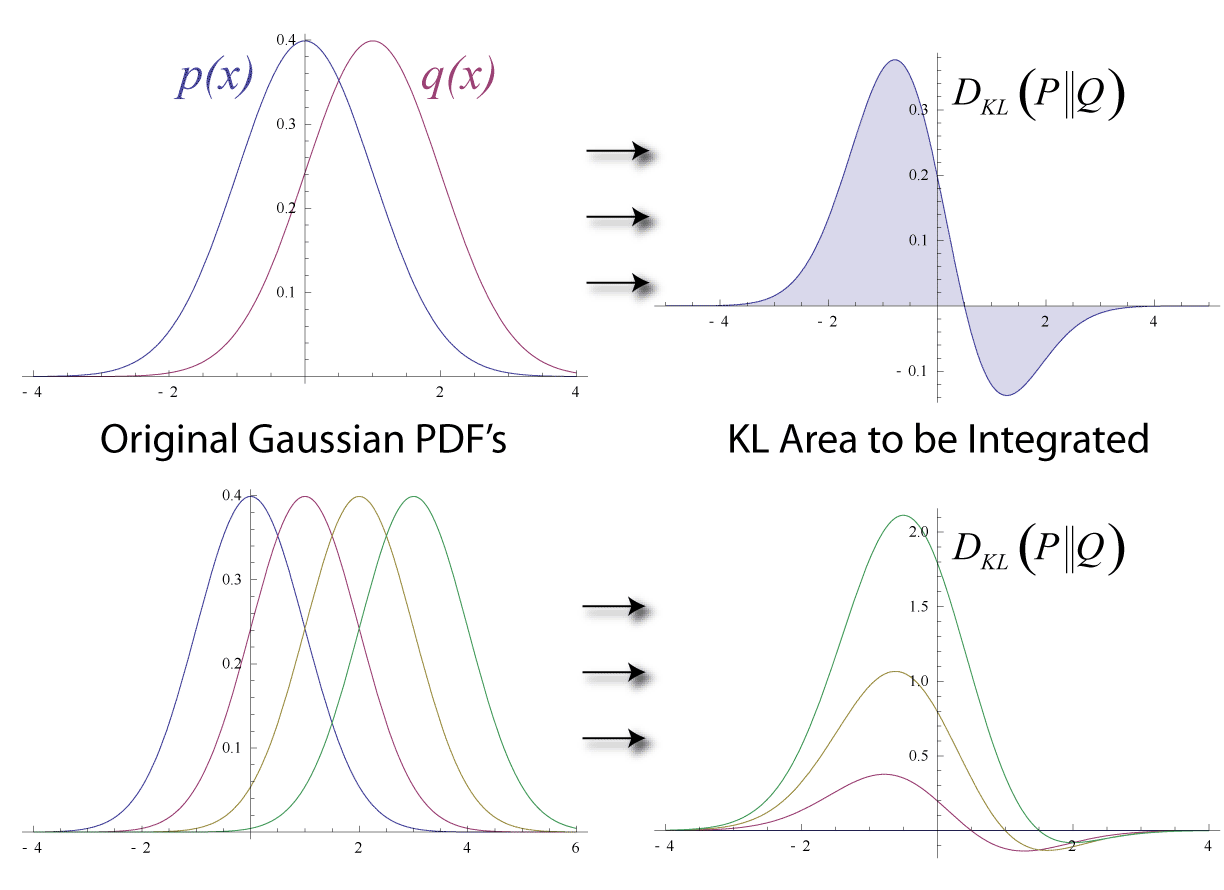

In mathematical statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted $D_{\text{KL}}(P \parallel Q)$, is a type of statistical distance: a measure of how much a model probability distribution $Q$ is different from a true probability distribution $P$. Mathematically, it is defined as

$$D_{\text{KL}}(P \parallel Q) = \sum_{x \in \mathcal{X}} P(x) \, \log \frac{P(x)}{Q(x)}.$$

A simple interpretation of the KL divergence of $P$ from $Q$ is the expected excess surprise from using $Q$ as a model instead of $P$ when the actual distribution is $P$. While it is a measure of how different two distributions are and is thus a distance in some sense, it is not actually a metric, which is the most familiar and formal type of distance. In particular, it is not symmetric in the two distributions (in contrast to variation of information), and does not satisfy the triangle inequality. Instead, in terms of information geometry, it is a type of divergence, a generalization of squared distance, and for cer ...
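
The discrete definition, the sum over $x$ of $P(x) \log \frac{P(x)}{Q(x)}$, can be sketched as below. The function name `kl_divergence`, the list-based representation, and the two example distributions are assumptions made for illustration. Returning infinity when $Q$ assigns zero probability to an outcome that $P$ allows is a common convention, adopted here as an assumption.

```python
import math

def kl_divergence(p, q, base=2.0):
    """D_KL(P || Q) for two discrete distributions given as aligned lists."""
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0:
            continue          # convention: 0 log 0 = 0
        if qx == 0:
            return math.inf   # P puts mass where Q has none
        total += px * math.log(px / qx, base)
    return total

# Hypothetical distributions over two outcomes.
p = [0.5, 0.5]
q = [0.9, 0.1]

print(kl_divergence(p, q))  # divergence of P from Q
print(kl_divergence(q, p))  # a different value: the divergence is not symmetric
print(kl_divergence(p, p))  # 0.0
```

Comparing the first two printed values illustrates the asymmetry noted in the text.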

Symmetric Function

In mathematics, a function of $n$ variables is symmetric if its value is the same no matter the order of its arguments. For example, a function $f\left(x_1,x_2\right)$ of two arguments is a symmetric function if and only if $f\left(x_1,x_2\right) = f\left(x_2,x_1\right)$ for all $x_1$ and $x_2$ such that $\left(x_1,x_2\right)$ and $\left(x_2,x_1\right)$ are in the domain of $f$. The most commonly encountered symmetric functions are polynomial functions, which are given by the symmetric polynomials. A related notion is alternating polynomials, which change sign under an interchange of variables. Aside from polynomial functions, tensors that act as functions of several vectors can be symmetric, and in fact the space of symmetric $k$-tensors on a vector space $V$ is isomorphic to the space of homogeneous polynomials of degree $k$ on $V$. Symmetric functions should not be confused with even and odd functions, which have a different sort of symmetry. Symmetrization: Given any function $f$ in $n$ variab ...

Mutual Information

In probability theory and information theory, the mutual information (MI) of two random variables is a measure of the mutual dependence between the two variables. More specifically, it quantifies the "amount of information" (in units such as shannons (bits), nats or hartleys) obtained about one random variable by observing the other random variable. The concept of mutual information is intimately linked to that of the entropy of a random variable, a fundamental notion in information theory that quantifies the expected "amount of information" held in a random variable. Not limited to real-valued random variables and linear dependence like the correlation coefficient, MI is more general and determines how different the joint distribution of the pair $(X,Y)$ is from the product of the marginal distributions of $X$ and ...
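
To make the comparison between the joint distribution and the product of the marginals concrete, here is a small sketch using the standard discrete formula $I(X;Y) = \sum_{x,y} p(x,y) \log \frac{p(x,y)}{p(x)\,p(y)}$. The formula is not shown in the truncated excerpt above, and the function name `mutual_information` and both example tables are hypothetical.

```python
import math

def mutual_information(joint, base=2.0):
    """I(X;Y) from a joint probability table with joint[i][j] = p(x_i, y_j)."""
    px = [sum(row) for row in joint]          # marginal distribution of X
    py = [sum(col) for col in zip(*joint)]    # marginal distribution of Y
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0:                       # convention: 0 log 0 = 0
                mi += pxy * math.log(pxy / (px[i] * py[j]), base)
    return mi

# Hypothetical joint tables for two binary variables.
independent = [[0.25, 0.25], [0.25, 0.25]]   # joint equals product of marginals
identical = [[0.5, 0.0], [0.0, 0.5]]         # Y always equals X

print(mutual_information(independent))  # 0.0
print(mutual_information(identical))    # 1.0
```

When the joint table factorizes into its marginals, every log term is zero, so independent variables carry no information about each other.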

Metric (mathematics)

In mathematics, a metric space is a set together with a notion of *distance* between its elements, usually called points. The distance is measured by a function called a metric or distance function. Metric spaces are a general setting for studying many of the concepts of mathematical analysis and geometry. The most familiar example of a metric space is 3-dimensional Euclidean space with its usual notion of distance. Other well-known examples are a sphere equipped with the angular distance and the hyperbolic plane. A metric may correspond to a metaphorical, rather than physical, notion of distance: for example, the set of 100-character Unicode strings can be equipped with the Hamming distance, which measures the number of characters that need to be changed to get from one string to another. Since they are very general, metric spaces are a tool used in many different branches of mathematics. Many types of mathematical objects have a natural notion of distance and th ...

Probability Distribution

In probability theory and statistics, a probability distribution is a function that gives the probabilities of occurrence of possible events for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if $X$ is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of $X$ would take the value 0.5 (1 in 2 or 1/2) for $X = \text{heads}$, and 0.5 for $X = \text{tails}$ (assuming that the coin is fair). More commonly, probability distributions are used to compare the relative occurrence of many different random values. Probability distributions can be defined in different ways and for discrete or for continuous variables. Distributions with special properties or for especially important applications are given specific names. Introduction: A prob ...

Conditional Expectation

In probability theory, the conditional expectation, conditional expected value, or conditional mean of a random variable is its expected value evaluated with respect to the conditional probability distribution. If the random variable can take on only a finite number of values, the "conditions" are that the variable can only take on a subset of those values. More formally, in the case when the random variable is defined over a discrete probability space, the "conditions" are a partition of this probability space. Depending on the context, the conditional expectation can be either a random variable or a function. The random variable is denoted $E(X\mid Y)$ analogously to conditional probability. The function form is either denoted $E(X\mid Y=y)$ or a separate function symbol such as $f(y)$ is introduced with the meaning $E(X\mid Y) = f(Y)$. Examples. Example 1: Dice rolling. Consider the roll of a fair die and let $A = 1$ if the number is even (i.e., 2, 4, or 6) and ...

Conditional Entropy

In information theory, the conditional entropy quantifies the amount of information needed to describe the outcome of a random variable $Y$ given that the value of another random variable $X$ is known. Here, information is measured in shannons, nats, or hartleys. The *entropy of $Y$ conditioned on $X$* is written as $\mathrm{H}(Y \mid X)$.

Definition: The conditional entropy of $Y$ given $X$ is defined as

$$\mathrm{H}(Y \mid X) = -\sum_{x \in \mathcal{X},\, y \in \mathcal{Y}} p(x,y) \log \frac{p(x,y)}{p(x)}$$

where $\mathcal{X}$ and $\mathcal{Y}$ denote the support sets of $X$ and $Y$. *Note:* Here, the convention is that the expression $0 \log 0$ should be treated as being equal to zero. This is because $\lim_{\theta \to 0^+} \theta \log \theta = 0$. Intuitively, notice that by definition of expected value and of conditional probability, $\mathrm{H}(Y \mid X)$ can be written as $\mathrm{H}(Y \mid X) = \mathbb{E}[f(X,Y)]$, where $f$ is defined as $f(x,y) := -\log\left(\frac{p(x,y)}{p(x)}\right) = -\log(p(y \mid x))$. One can think of $f$ as associating each pair $(x, y)$ with ...
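
The definition of the entropy of $Y$ conditioned on $X$, namely minus the sum over all pairs of $p(x,y) \log \frac{p(x,y)}{p(x)}$, can be sketched directly from a joint probability table. The function name `conditional_entropy` and the example tables are illustrative assumptions.

```python
import math

def conditional_entropy(joint, base=2.0):
    """H(Y|X) from a joint probability table with joint[i][j] = p(x_i, y_j)."""
    h = 0.0
    for row in joint:
        px = sum(row)                 # marginal p(x) for this row
        for pxy in row:
            if pxy > 0:               # convention: 0 log 0 = 0
                h -= pxy * math.log(pxy / px, base)
    return h

# Hypothetical joint tables for two binary variables.
independent = [[0.25, 0.25], [0.25, 0.25]]   # knowing X says nothing about Y
identical = [[0.5, 0.0], [0.0, 0.5]]         # knowing X determines Y

print(conditional_entropy(independent))  # 1.0
print(conditional_entropy(identical))    # 0.0
```

In the first table one full shannon is still needed to describe $Y$ after observing $X$; in the second, nothing further is needed.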

Conditional Probability

In probability theory, conditional probability is a measure of the probability of an event occurring, given that another event (by assumption, presumption, assertion or evidence) is already known to have occurred. This particular method relies on event $A$ occurring with some sort of relationship with another event $B$. In this situation, the event $A$ can be analyzed by a conditional probability with respect to $B$. If the event of interest is $A$ and the event $B$ is known or assumed to have occurred, "the conditional probability of $A$ given $B$", or "the probability of $A$ under the condition $B$", is usually written as $P(A \mid B)$ or occasionally $P_B(A)$. This can also be understood as the fraction of probability $B$ that intersects with $A$, or the ratio of the probabilities of both events happening to the "given" one happening (how many times $A$ occurs rather than not assuming $B$ has occurred): $P(A \mid B) = \frac{P(A \cap B)}{P(B)}$. For example, the probability that any given person has a cough on any given day ma ...
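
The ratio of the probability of both events to the probability of the given event can be illustrated with a small sketch over equally likely outcomes. The die example, the function name `conditional_probability`, and the event sets are hypothetical and chosen only for illustration.

```python
from fractions import Fraction

def conditional_probability(event_a, event_b, sample_space):
    """P(A | B) for equally likely outcomes, computed as P(A and B) / P(B)."""
    p_b = Fraction(len(event_b & sample_space), len(sample_space))
    if p_b == 0:
        raise ValueError("P(B) is zero, so P(A | B) is undefined")
    p_a_and_b = Fraction(len(event_a & event_b & sample_space), len(sample_space))
    return p_a_and_b / p_b

# Hypothetical example: one roll of a fair six-sided die.
die = set(range(1, 7))
even = {2, 4, 6}
greater_than_three = {4, 5, 6}

print(conditional_probability(even, greater_than_three, die))  # 2/3
```

Using `Fraction` keeps the result exact: of the three outcomes greater than three, two are even.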