
Probit Function

In probability theory and statistics, the probit function is the quantile function associated with the standard normal distribution. It has applications in data analysis and machine learning, in particular exploratory statistical graphics and specialized regression modeling of binary response variables. Mathematically, the probit is the inverse of the cumulative distribution function of the standard normal distribution, which is denoted as $\Phi(z)$, so the probit is defined as $\operatorname{probit}(p) = \Phi^{-1}(p) \quad \text{for} \quad p \in (0,1)$. Largely because of the central limit theorem, the standard normal distribution plays a fundamental role in probability theory and statistics. If we consider the familiar fact that the standard normal distribution places 95% of probability between −1.96 and 1.96, and is symmetric around zero, it follows that $\Phi(-1.96) = 0.025 = 1-\Phi(1.96)$. The probit function gives the 'inverse' computation, generating a value of a standard normal ...

Probit Plot

In probability theory and statistics, the probit function is the quantile function associated with the standard normal distribution. It has applications in data analysis and machine learning, in particular exploratory statistical graphics and specialized regression modeling of binary response variables. Mathematically, the probit is the inverse of the cumulative distribution function of the standard normal distribution, which is denoted as $\Phi(z)$, so the probit is defined as $\operatorname{probit}(p) = \Phi^{-1}(p) \quad \text{for} \quad p \in (0,1)$. Largely because of the central limit theorem, the standard normal distribution plays a fundamental role in probability theory and statistics. If we consider the familiar fact that the standard normal distribution places 95% of probability between −1.96 and 1.96, and is symmetric around zero, it follows that $\Phi(-1.96) = 0.025 = 1-\Phi(1.96)$. The probit function gives the 'inverse' computation, generating a value of a standard normal ...

Bimodal Distribution

In statistics, a multimodal distribution is a probability distribution with more than one mode. These appear as distinct peaks (local maxima) in the probability density function, as shown in Figures 1 and 2. Categorical, continuous, and discrete data can all form multimodal distributions. Among univariate analyses, multimodal distributions are commonly bimodal.

Terminology: When the two modes are unequal the larger mode is known as the major mode and the other as the minor mode. The least frequent value between the modes is known as the antimode. The difference between the major and minor modes is known as the amplitude. In time series the major mode is called the acrophase and the antimode the batiphase.

Galtung's classification: Galtung introduced a classification system (AJUS) for distributions:
* A: unimodal distribution, peak in the middle
* J: unimodal, peak at either end
* U: bimodal, peaks at both ends
* S: bimodal or multimodal, multiple peaks

This c ...

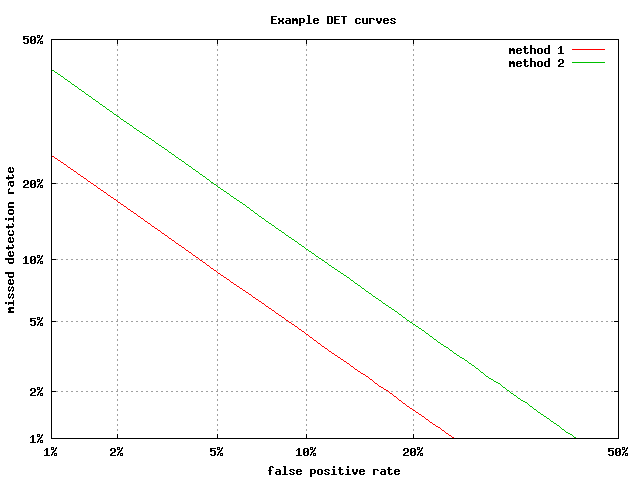

Detection Error Tradeoff

A detection error tradeoff (DET) graph is a graphical plot of error rates for binary classification systems, plotting the false rejection rate vs. false acceptance rate (A. Martin, G. Doddington, T. Kamm, M. Ordowski, and M. Przybocki, "The DET Curve in Assessment of Detection Task Performance", Proc. Eurospeech '97, Rhodes, Greece, September 1997, Vol. 4, pp. 1895-1898). The x- and y-axes are scaled non-linearly by their standard normal deviates (or just by logarithmic transformation), yielding tradeoff curves that are more linear than ROC curves, and use most of the image area to highlight the differences of importance in the critical operating region.

Axis warping: The normal deviate mapping (or normal quantile function, or inverse normal cumulative distribution) is given by the probit function, so that the horizontal axis is $x = \operatorname{probit}(P_{\mathrm{fa}})$ and the vertical is $y = \operatorname{probit}(P_{\mathrm{fr}})$, where $P_{\mathrm{fa}}$ and $P_{\mathrm{fr}}$ are the false-accept and false-reject rates. The probit ...
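The axis warping described above can be sketched in a few lines of Python. This is only an illustration: the function name `det_coordinates` and the operating points are hypothetical, and the probit is taken from the standard library's `statistics.NormalDist` rather than from any DET-specific toolkit.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal: mean 0, standard deviation 1


def probit(p: float) -> float:
    """Standard normal quantile function, defined for 0 < p < 1."""
    return _STD_NORMAL.inv_cdf(p)


def det_coordinates(false_accept_rates, false_reject_rates):
    """Map (Pfa, Pfr) pairs to DET plot coordinates (x, y).

    x = probit(Pfa) and y = probit(Pfr); every rate must lie strictly
    between 0 and 1, since the probit is undefined at the endpoints.
    """
    return [
        (probit(pfa), probit(pfr))
        for pfa, pfr in zip(false_accept_rates, false_reject_rates)
    ]


# Hypothetical operating points of a detector (illustrative values only).
pfa = [0.01, 0.05, 0.20]
pfr = [0.30, 0.10, 0.02]

for x, y in det_coordinates(pfa, pfr):
    print(f"x = {x:.3f}, y = {y:.3f}")
```

Rates of exactly 0 or 1 have no finite probit, so a plotting routine built on this would need to clip or drop such points.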

Generalized Linear Model

In statistics, a generalized linear model (GLM) is a flexible generalization of ordinary linear regression. The GLM generalizes linear regression by allowing the linear model to be related to the response variable via a link function and by allowing the magnitude of the variance of each measurement to be a function of its predicted value. Generalized linear models were formulated by John Nelder and Robert Wedderburn as a way of unifying various other statistical models, including linear regression, logistic regression and Poisson regression. They proposed an iteratively reweighted least squares method for maximum likelihood estimation (MLE) of the model parameters. MLE remains popular and is the default method on many statistical computing packages. Other approaches, including Bayesian regression and least squares fitting to variance stabilized responses, have been developed.

Intuition: Ordinary linear regression predicts the expected value of a given unknown quantity ...

Regression Analysis

In statistical modeling, regression analysis is a set of statistical processes for estimating the relationships between a dependent variable (often called the 'outcome' or 'response' variable, or a 'label' in machine learning parlance) and one or more independent variables (often called 'predictors', 'covariates', 'explanatory variables' or 'features'). The most common form of regression analysis is linear regression, in which one finds the line (or a more complex linear combination) that most closely fits the data according to a specific mathematical criterion. For example, the method of ordinary least squares computes the unique line (or hyperplane) that minimizes the sum of squared differences between the true data and that line (or hyperplane). For specific mathematical reasons (see linear regression), this allows the researcher to estimate the conditional expectation (or population average value) of the dependent variable when the independent variables take on a given ...
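To make the ordinary least squares criterion concrete, here is a minimal Python sketch for the single-predictor case, using the textbook closed-form solution. The function name `ols_line` and the data points are invented for this example.

```python
def ols_line(xs, ys):
    """Fit y = intercept + slope * x by ordinary least squares.

    Minimizes the sum of squared vertical differences between the data
    and the line; assumes at least two distinct x values.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and of cross deviations of x and y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up data lying exactly on y = 1 + 2x, so the fit recovers it.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
print(ols_line(xs, ys))  # prints (2.0, 1.0)
```

With several predictors the same criterion leads to a hyperplane rather than a line, which is usually solved with a linear algebra library instead of these scalar sums.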

Logistic Regression

In statistics, the logistic model (or logit model) is a statistical model that models the probability of an event taking place by having the log-odds for the event be a linear combination of one or more independent variables. In regression analysis, logistic regression (or logit regression) is estimating the parameters of a logistic model (the coefficients in the linear combination). Formally, in binary logistic regression there is a single binary dependent variable, coded by an indicator variable, where the two values are labeled "0" and "1", while the independent variables can each be a binary variable (two classes, coded by an indicator variable) or a continuous variable (any real value). The corresponding probability of the value labeled "1" can vary between 0 (certainly the value "0") and 1 (certainly the value "1"), hence the labeling; the function that converts log-odds to probability is the logistic function, h ...

Logit Model

In statistics, the logistic model (or logit model) is a statistical model that models the probability of an event taking place by having the log-odds for the event be a linear combination of one or more independent variables. In regression analysis, logistic regression (or logit regression) is estimating the parameters of a logistic model (the coefficients in the linear combination). Formally, in binary logistic regression there is a single binary dependent variable, coded by an indicator variable, where the two values are labeled "0" and "1", while the independent variables can each be a binary variable (two classes, coded by an indicator variable) or a continuous variable (any real value). The corresponding probability of the value labeled "1" can vary between 0 (certainly the value "0") and 1 (certainly the value "1"), hence the labeling; the function that converts log-odds to probability is the logistic function, hence the name. The unit of measurement for the log-odd ...
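The relationship described above, log-odds as a linear combination that the logistic function turns into a probability, can be sketched as follows. The function names and the coefficient values are hypothetical; the sketch shows only prediction from given coefficients, not how they are estimated.

```python
import math


def logistic(t: float) -> float:
    """Logistic function: converts log-odds t into a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-t))


def predict_probability(intercept, coefficients, features):
    """Probability of the outcome labeled "1" under a logistic model.

    The log-odds are a linear combination of the independent variables.
    """
    log_odds = intercept + sum(c * x for c, x in zip(coefficients, features))
    return logistic(log_odds)


# Hypothetical model with one binary and one continuous predictor.
intercept = -1.0
coefficients = [0.8, 0.05]
features = [1, 12.0]  # binary indicator = 1, continuous value = 12.0

print(predict_probability(intercept, coefficients, features))
```

A log-odds value of 0 corresponds to a probability of exactly 0.5, and large positive or negative log-odds push the probability toward 1 or 0.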

Logit

In statistics, the logit function is the quantile function associated with the standard logistic distribution. It has many uses in data analysis and machine learning, especially in data transformations. Mathematically, the logit is the inverse of the standard logistic function $\sigma(x) = 1/(1+e^{-x})$, so the logit is defined as $\operatorname{logit} p = \sigma^{-1}(p) = \ln \frac{p}{1-p} \quad \text{for} \quad p \in (0,1)$. Because of this, the logit is also called the log-odds since it is equal to the logarithm of the odds $\frac{p}{1-p}$ where $p$ is a probability. Thus, the logit is a type of function that maps probability values from $(0, 1)$ to real numbers in $(-\infty, +\infty)$, akin to the probit function.

Definition: If $p$ is a probability, then $p/(1-p)$ is the corresponding odds; the logit of the probability is the logarithm of the odds, i.e.: $\operatorname{logit}(p)=\ln\left( \frac{p}{1-p} \right) =\ln(p)-\ln(1-p)=-\ln\left( \frac{1}{p}-1\right)=2\operatorname{atanh}(2p-1)$. The base of the logarithm function used is of little importance in the ...
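The chain of equalities in the definition can be checked numerically. The short Python sketch below uses only the standard `math` module; the names `logit` and `sigma` follow the notation of the text, and the test probability 0.3 is an arbitrary choice.

```python
import math


def sigma(x: float) -> float:
    """Standard logistic function 1 / (1 + e^{-x})."""
    return 1.0 / (1.0 + math.exp(-x))


def logit(p: float) -> float:
    """Log-odds ln(p / (1 - p)), defined for 0 < p < 1."""
    return math.log(p / (1.0 - p))


p = 0.3  # arbitrary probability used for the check

# Four equivalent expressions for the logit of p.
forms = [
    math.log(p / (1.0 - p)),
    math.log(p) - math.log(1.0 - p),
    -math.log(1.0 / p - 1.0),
    2.0 * math.atanh(2.0 * p - 1.0),
]
print(all(math.isclose(f, logit(p)) for f in forms))  # prints True

# The logit inverts the logistic function.
print(math.isclose(sigma(logit(p)), p))  # prints True
```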

R Programming Language

R is a programming language for statistical computing and graphics supported by the R Core Team and the R Foundation for Statistical Computing. Created by statisticians Ross Ihaka and Robert Gentleman, R is used among data miners, bioinformaticians and statisticians for data analysis and developing statistical software. Users have created packages to augment the functions of the R language. According to user surveys and studies of scholarly literature databases, R is one of the most commonly used programming languages in data mining. R ranks 12th in the TIOBE index, a measure of programming language popularity, in which the language peaked in 8th place in August 2020. The official R software environment is an open-source free software environment within the GNU package, available under the GNU General Public License. It is written primarily in C, Fortran, and R itself (partially self-hosting). Precompiled executables are provided for various operating systems. R ha ...

Mathematica

Wolfram Mathematica is a software system with built-in libraries for several areas of technical computing that allow machine learning, statistics, symbolic computation, data manipulation, network analysis, time series analysis, NLP, optimization, plotting functions and various types of data, implementation of algorithms, creation of user interfaces, and interfacing with programs written in other programming languages. It was conceived by Stephen Wolfram, and is developed by Wolfram Research of Champaign, Illinois. The Wolfram Language is the programming language used in Mathematica. Mathematica 1.0 was released on June 23, 1988 in Champaign, Illinois and Santa Clara, California.

Notebook interface: Wolfram Mathematica (called Mathematica by some of its users) is split into two parts: the kernel and the front end. The kernel interprets expressions (Wolfram Language code) and returns result expressions, which can then be displayed by the front end. The ...

MATLAB

MATLAB (an abbreviation of "MATrix LABoratory") is a proprietary multi-paradigm programming language and numeric computing environment developed by MathWorks. MATLAB allows matrix manipulations, plotting of functions and data, implementation of algorithms, creation of user interfaces, and interfacing with programs written in other languages. Although MATLAB is intended primarily for numeric computing, an optional toolbox uses the MuPAD symbolic engine allowing access to symbolic computing abilities. An additional package, Simulink, adds graphical multi-domain simulation and model-based design for dynamic and embedded systems. As of 2020, MATLAB has more than 4 million users worldwide. They come from various backgrounds of engineering, science, and economics.

History (Origins): MATLAB was invented by mathematician and computer programmer Cleve Moler. The idea for MATLAB was based on his 1960s PhD thesis. Moler became a math professor at the University of New Mexico an ...