
Logit Model

In statistics, the logit function is the quantile function associated with the standard logistic distribution. It has many uses in data analysis and machine learning, especially in data transformations. Mathematically, the logit is the inverse of the standard logistic function $\sigma(x) = 1/(1+e^{-x})$, so the logit is defined as $\operatorname{logit} p = \sigma^{-1}(p) = \ln \frac{p}{1-p}$ for $p \in (0,1)$. Because of this, the logit is also called the log-odds, since it is equal to the logarithm of the odds $\frac{p}{1-p}$, where $p$ is a probability. Thus, the logit maps probability values from $(0, 1)$ to real numbers in $(-\infty, +\infty)$, akin to the probit function. Definition: If $p$ is a probability, then $p/(1-p)$ is the corresponding odds; the logit of the probability is the logarithm of the odds, i.e.: $\operatorname{logit}(p) = \ln\left(\frac{p}{1-p}\right) = \ln(p) - \ln(1-p) = -\ln\left(\frac{1}{p}-1\right) = 2\operatorname{atanh}(2p-1)$. The base of the logarithm function used is of little import ...

Logit

In statistics, the logit function is the quantile function associated with the standard logistic distribution. It has many uses in data analysis and machine learning, especially in data transformations. Mathematically, the logit is the inverse of the standard logistic function $\sigma(x) = 1/(1+e^{-x})$, so the logit is defined as $\operatorname{logit} p = \sigma^{-1}(p) = \ln \frac{p}{1-p}$ for $p \in (0,1)$. Because of this, the logit is also called the log-odds, since it is equal to the logarithm of the odds $\frac{p}{1-p}$, where $p$ is a probability. Thus, the logit maps probability values from $(0, 1)$ to real numbers in $(-\infty, +\infty)$, akin to the probit function. Definition: If $p$ is a probability, then $p/(1-p)$ is the corresponding odds; the logit of the probability is the logarithm of the odds, i.e.: $\operatorname{logit}(p) = \ln\left(\frac{p}{1-p}\right) = \ln(p) - \ln(1-p) = -\ln\left(\frac{1}{p}-1\right) = 2\,\cdots$ ...
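
The relationship between the logit and the standard logistic function can be checked numerically. The following is a minimal sketch using only the Python standard library; the function names `logistic` and `logit` are illustrative choices, not taken from any particular package.

```python
import math


def logistic(x: float) -> float:
    """Standard logistic function sigma(x) = 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def logit(p: float) -> float:
    """Log-odds ln(p / (1 - p)), defined for p strictly between 0 and 1."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie in the open interval (0, 1)")
    # log1p(-p) computes ln(1 - p) accurately when p is close to 0.
    return math.log(p) - math.log1p(-p)


p = 0.8
x = logit(p)            # log-odds of a probability of 0.8, i.e. ln(4)
round_trip = logistic(x)  # applying the inverse should recover p
```

Here `round_trip` should agree with `p` up to floating-point error, which reflects the statement that the logit is the inverse of the standard logistic function.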

Nat (unit)

The natural unit of information (symbol: nat), sometimes also nit or nepit, is a unit of information or information entropy, based on natural logarithms and powers of e, rather than the powers of 2 and base 2 logarithms, which define the shannon. This unit is also known by its unit symbol, the nat. One nat is the information content of an event when the probability of that event occurring is 1/e. One nat is equal to 1/ln 2 shannons ≈ 1.44 Sh or, equivalently, 1/ln 10 hartleys ≈ 0.434 Hart. History: Boulton and Wallace used the term "nit" in conjunction with minimum message length, which was subsequently changed by the minimum description length community to "nat" to avoid confusion with the nit used as a unit of luminance. Alan Turing used the "natural ban". Entropy Shan ...
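
The unit conversions quoted for the nat follow directly from changing the base of the logarithm. A small sketch, assuming only the Python standard library (the constant and function names are illustrative):

```python
import math

# One nat expressed in other units: change of logarithm base.
NAT_IN_SHANNONS = 1.0 / math.log(2)    # base-2 units
NAT_IN_HARTLEYS = 1.0 / math.log(10)   # base-10 units


def information_content_nats(p: float) -> float:
    """Information content -ln(p) of an event with probability p, in nats."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must lie in (0, 1]")
    return -math.log(p)


# An event with probability 1/e carries one nat of information.
one_nat = information_content_nats(1.0 / math.e)
```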

Binomial Distribution

In probability theory and statistics, the binomial distribution with parameters n and p is the discrete probability distribution of the number of successes in a sequence of n independent experiments, each asking a yes–no question, and each with its own Boolean-valued outcome: success (with probability p) or failure (with probability q = 1 − p). A single success/failure experiment is also called a Bernoulli trial or Bernoulli experiment, and a sequence of outcomes is called a Bernoulli process; for a single trial, i.e., n = 1, the binomial distribution is a Bernoulli distribution. The binomial distribution is the basis for the binomial test of statistical significance. The binomial distribution is frequently used to model the number of successes in a sample of size n drawn with replacement from a population of size N. If the sampling is carried out without replacement, the draws ar ...
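
As an illustration of the definition, the probability of exactly k successes in n independent trials with success probability p can be computed with the standard formula C(n, k) p^k (1 − p)^(n − k). The sketch below assumes Python 3.8 or later (for `math.comb`); the function name `binomial_pmf` is illustrative.

```python
import math


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials."""
    if not 0 <= k <= n:
        return 0.0
    return math.comb(n, k) * p**k * (1.0 - p) ** (n - k)


# With n = 1 the distribution reduces to a Bernoulli distribution.
bernoulli_success = binomial_pmf(1, 1, 0.3)
bernoulli_failure = binomial_pmf(0, 1, 0.3)

# The probabilities over all possible counts sum to 1.
total = sum(binomial_pmf(k, 10, 0.3) for k in range(11))
```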

Natural Parameter

In probability and statistics, an exponential family is a parametric set of probability distributions of a certain form, specified below. This special form is chosen for mathematical convenience, including enabling the user to calculate expectations and covariances using differentiation based on some useful algebraic properties, as well as for generality, as exponential families are in a sense very natural sets of distributions to consider. The term exponential class is sometimes used in place of "exponential family", or the older term Koopman–Darmois family. Sometimes loosely referred to as "the" exponential family, this class of distributions is distinct because they all possess a variety of desirable properties, most importantly the existence of a sufficient statistic. The concept of exponential families is credited to E. J. G. Pitman, G. Darmois, and B. O. Koopman in 1935–1936. Exponential families of distributions provide a general framework for selecting ...

Bernoulli Distribution

In probability theory and statistics, the Bernoulli distribution, named after Swiss mathematician Jacob Bernoulli, is the discrete probability distribution of a random variable which takes the value 1 with probability p and the value 0 with probability q = 1 − p. Less formally, it can be thought of as a model for the set of possible outcomes of any single experiment that asks a yes–no question. Such questions lead to outcomes that are Boolean-valued: a single bit whose value is success/yes/true/one with probability p and failure/no/false/zero with probability q. It can be used to represent a (possibly biased) coin toss where 1 and 0 would represent "heads" and "tails", respectively, and p would be the probability of the coin landing on heads (or vice versa where 1 would represent tails and p would be the probability of tails). In particular, unfair co ...

Link Function

In statistics, a generalized linear model (GLM) is a flexible generalization of ordinary linear regression. The GLM generalizes linear regression by allowing the linear model to be related to the response variable via a link function and by allowing the magnitude of the variance of each measurement to be a function of its predicted value. Generalized linear models were formulated by John Nelder and Robert Wedderburn as a way of unifying various other statistical models, including linear regression, logistic regression and Poisson regression. They proposed an iteratively reweighted least squares method for maximum likelihood estimation (MLE) of the model parameters. MLE remains popular and is the default method on many statistical computing packages. Other approaches, including Bayesian regression and least squares fitting to variance stabilized responses, have been developed. Intuition: Ordinary linear regression predicts the expected value of a given unknown quantity ...

Generalized Linear Model

In statistics, a generalized linear model (GLM) is a flexible generalization of ordinary linear regression. The GLM generalizes linear regression by allowing the linear model to be related to the response variable via a link function and by allowing the magnitude of the variance of each measurement to be a function of its predicted value. Generalized linear models were formulated by John Nelder and Robert Wedderburn as a way of unifying various other statistical models, including linear regression, logistic regression and Poisson regression. They proposed an iteratively reweighted least squares method for maximum likelihood estimation (MLE) of the model parameters. MLE remains popular and is the default method on many statistical computing packages. Other approaches, including Bayesian regression and least squares fitting to variance stabilized responses, have been developed. Intuition: Ordinary linear regression predicts the expected value of a given unknown quanti ...

Logistic Regression

In statistics, a logistic model (or logit model) is a statistical model that models the log-odds of an event as a linear combination of one or more independent variables. In regression analysis, logistic regression (or logit regression) estimates the parameters of a logistic model (the coefficients in the linear or non-linear combinations). In binary logistic regression there is a single binary dependent variable, coded by an indicator variable, where the two values are labeled "0" and "1", while the independent variables can each be a binary variable (two classes, coded by an indicator variable) or a continuous variable (any real value). The corresponding probability of the value labeled "1" can vary between 0 (certainly the value "0") and 1 (certainly the value "1"), hence the labeling; the function that converts log-odds to probability is the logistic function, hence the name. The unit of measurement for the ...
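
To make the description concrete, the sketch below computes the log-odds as a linear combination of independent variables and converts it to a probability with the logistic function. It only evaluates a model with given parameters and does not estimate them; the function name and the coefficient values are hypothetical, chosen purely for illustration.

```python
import math
from typing import Sequence


def predict_probability(
    coefficients: Sequence[float],
    intercept: float,
    features: Sequence[float],
) -> float:
    """Probability of the outcome labeled "1" under a logistic model."""
    if len(coefficients) != len(features):
        raise ValueError("coefficients and features must have equal length")
    # The model is linear on the log-odds scale.
    log_odds = intercept + sum(b * x for b, x in zip(coefficients, features))
    # The logistic function converts log-odds to a probability in (0, 1).
    return 1.0 / (1.0 + math.exp(-log_odds))


# Hypothetical parameters: log-odds = -1.0 + 2.0 * x
probability_at_half = predict_probability([2.0], -1.0, [0.5])
```

With these hypothetical parameters the log-odds at x = 0.5 is zero, so the two outcomes are equally likely.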

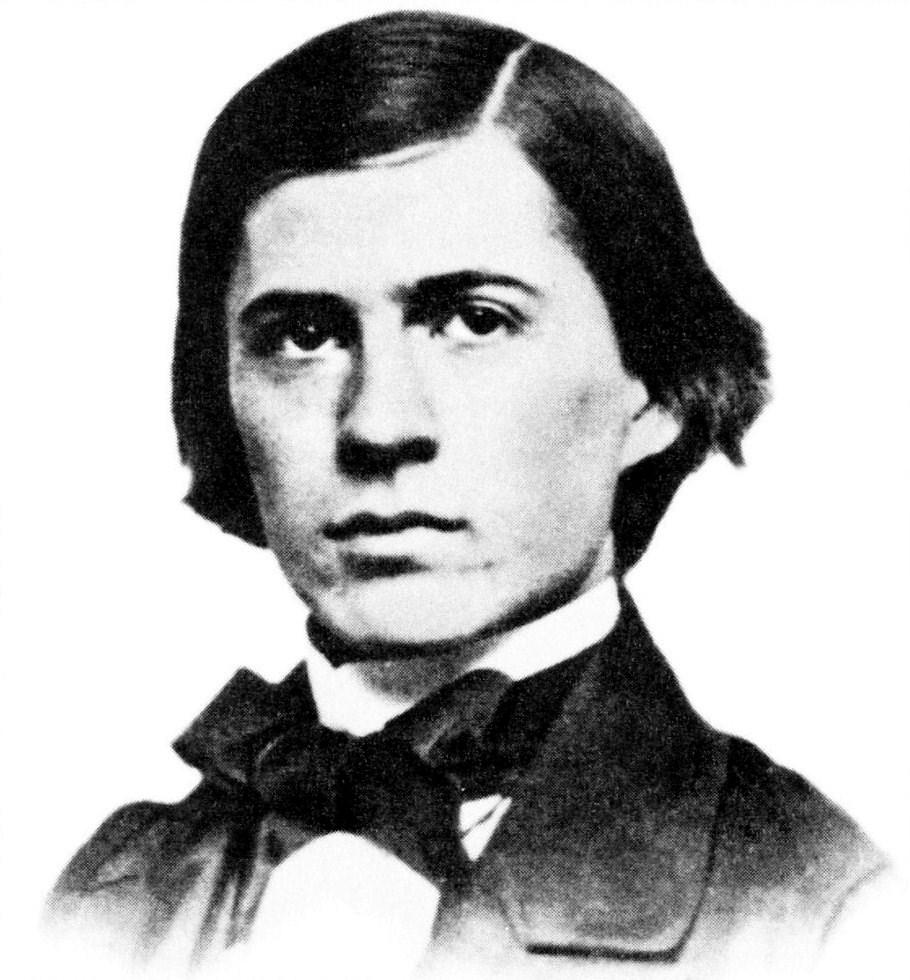

Charles Sanders Peirce

Charles Sanders Peirce (September 10, 1839 – April 19, 1914) was an American scientist, mathematician, logician, and philosopher who is sometimes known as "the father of pragmatism". According to philosopher Paul Weiss, Peirce was "the most original and versatile of America's philosophers and America's greatest logician". Bertrand Russell wrote "he was one of the most original minds of the later nineteenth century and certainly the greatest American thinker ever". Educated as a chemist and employed as a scientist for thirty years, Peirce meanwhile made major contributions to logic, such as theories of relations and quantification. C. I. Lewis wrote, "The contributions of C. S. Peirce to symbolic logic are more numerous and varied than those of any other writer—at least in the nineteenth century." For Peirce, logic also encompassed much of what is now called epistemology and the philoso ...

Joseph Berkson

Joseph Berkson (14 May 1899 – 12 September 1982) was trained as a physicist (BSc, 1920, College of the City of New York; M.A., 1922, Columbia), physician (M.D., 1927, Johns Hopkins), and statistician (Dr.Sc., 1928, Johns Hopkins) [O'Fallon WM (1998). "Berkson, Joseph". In: Armitage P, Colton T, Editors-in-Chief. Encyclopedia of Biostatistics. Chichester: John Wiley & Sons. Volume 1, pp. 290–295]. He is best known for having identified a source of bias in observational studies caused by selection effects, known as Berkson's paradox. In 1950, as Head (1934–1964) of the Division of Biometry and Medical Statistics of the Mayo Clinic, Rochester, Minnesota, Berkson wrote a key paper entitled "Are there two regressions?" In this paper Berkson proposed an error model for regression analysis that contradicted the classical error model, which until that point had been assumed to apply generally; this has since been termed the Berkson error model. Whereas the classical error model is statistically ...

Chester Ittner Bliss

Chester Ittner Bliss (February 1, 1899 – March 14, 1979) was primarily a biologist, who is best known for his contributions to statistics. He was born in Springfield, Ohio in 1899 and died in 1979. He was the first secretary of the International Biometric Society. Academic qualifications: Bachelor of Arts in Entomology from Ohio State University, 1921; Master of Arts from Columbia University, 1922; PhD from Columbia University, 1926. Remarkably, his statistical knowledge was largely self-taught and developed according to the problems he wanted to solve (Cochran & Finney 1979). Nevertheless, in 1942 he was elected as a Fellow of the American Statistical Association. Major contributions: The idea of the probit function was published by Bliss in a 1934 article in Science on how to treat data such as the percentage of a pest killed by a pesticide. Bliss proposed transforming the percentage killed into a "probability unit" (or "probit"). Arguably his most important contributi ...

Taylor Series

In mathematics, the Taylor series or Taylor expansion of a function is an infinite sum of terms that are expressed in terms of the function's derivatives at a single point. For most common functions, the function and the sum of its Taylor series are equal near this point. Taylor series are named after Brook Taylor, who introduced them in 1715. A Taylor series is also called a Maclaurin series when 0 is the point where the derivatives are considered, after Colin Maclaurin, who made extensive use of this special case of Taylor series in the 18th century. The partial sum formed by the first n + 1 terms of a Taylor series is a polynomial of degree n that is called the nth Taylor polynomial of the function. Taylor polynomials are approximations of a function, which become generally more accurate as n increases. Taylor's theorem gives quantitative estimates on the error introduced by the use of such approximations. If the Taylor series of a function is convergent, its sum is the limit ...
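
As a small illustration of Taylor polynomials becoming more accurate as the degree grows, the sketch below evaluates the Maclaurin polynomial of the exponential function, whose k-th term is x^k / k!. The choice of the exponential function and the name `exp_taylor_polynomial` are assumptions made for this example only.

```python
import math


def exp_taylor_polynomial(x: float, n: int) -> float:
    """Degree-n Taylor polynomial of e^x about 0: sum of x^k / k! for k = 0..n."""
    return sum(x**k / math.factorial(k) for k in range(n + 1))


# Approximation error at x = 1 for two different degrees.
error_degree_5 = abs(exp_taylor_polynomial(1.0, 5) - math.e)
error_degree_10 = abs(exp_taylor_polynomial(1.0, 10) - math.e)
```

For this function the error at degree 10 is smaller than at degree 5, consistent with the partial sums converging to the function value.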