|

Permute Instruction

Permute (and Shuffle) instructions, part of bit manipulation as well as vector processing, copy unaltered contents from a source array to a destination array, where the indices are specified by a second source array. The size (bitwidth) of the source elements is not restricted but remains the same as the destination size. There exists two important permute variants, known as gather and scatter, respectively. The gather variant is as follows: for i = 0 to length-1 dest = src ndices[i where the scatter variant is: for i = 0 to length-1 dest ndices[i = src[i] Note that unlike in memory-based Gather-scatter (vector addressing), gather-scatter all three of dest, src, and indices are ''registers'' (or parts of registers in the case of bit-level permute), not memory locations. The scatter variant can be seen to "scatter" the source elements ''across'' (into) to the destination, where the "gather" variant is gathering data ''from'' the indexed source elements. Given tha ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bit Manipulation

Bit manipulation is the act of algorithmically manipulating bits or other pieces of data shorter than a word. Computer programming tasks that require bit manipulation include low-level device control, error detection and correction algorithms, data compression, encryption algorithms, and optimization. For most other tasks, modern programming languages allow the programmer to work directly with abstractions instead of bits that represent those abstractions. Source code that does bit manipulation makes use of the bitwise operations: AND, OR, XOR, NOT, and possibly other operations analogous to the boolean operators; there are also bit shifts and operations to count ones and zeros, find high and low one or zero, set, reset and test bits, extract and insert fields, mask and zero fields, gather and scatter bits to and from specified bit positions or fields. Integer arithmetic operators can also effect bit-operations in conjunction with the other operators. Bit manipulation, in s ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cryptographic

Cryptography, or cryptology (from grc, , translit=kryptós "hidden, secret"; and ''graphein'', "to write", or '' -logia'', "study", respectively), is the practice and study of techniques for secure communication in the presence of adversarial behavior. More generally, cryptography is about constructing and analyzing protocols that prevent third parties or the public from reading private messages. Modern cryptography exists at the intersection of the disciplines of mathematics, computer science, information security, electrical engineering, digital signal processing, physics, and others. Core concepts related to information security ( data confidentiality, data integrity, authentication, and non-repudiation) are also central to cryptography. Practical applications of cryptography include electronic commerce, chip-based payment cards, digital currencies, computer passwords, and military communications. Cryptography prior to the modern age was effectively synonymous wi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

OpenCL

OpenCL (Open Computing Language) is a framework for writing programs that execute across heterogeneous platforms consisting of central processing units (CPUs), graphics processing units (GPUs), digital signal processors (DSPs), field-programmable gate arrays (FPGAs) and other processors or hardware accelerators. OpenCL specifies programming languages (based on C99, C++14 and C++17) for programming these devices and application programming interfaces (APIs) to control the platform and execute programs on the compute devices. OpenCL provides a standard interface for parallel computing using task- and data-based parallelism. OpenCL is an open standard maintained by the non-profit technology consortium Khronos Group. Conformant implementations are available from Altera, AMD, ARM, Creative, IBM, Imagination, Intel, Nvidia, Qualcomm, Samsung, Vivante, Xilinx, and ZiiLABS. Overview OpenCL views a computing system as consisting of a number of ''compute devices'', which migh ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

GNU Compiler Collection

The GNU Compiler Collection (GCC) is an optimizing compiler produced by the GNU Project supporting various programming languages, hardware architectures and operating systems. The Free Software Foundation (FSF) distributes GCC as free software under the GNU General Public License (GNU GPL). GCC is a key component of the GNU toolchain and the standard compiler for most projects related to GNU and the Linux kernel. With roughly 15 million lines of code in 2019, GCC is one of the biggest free programs in existence. It has played an important role in the growth of free software, as both a tool and an example. When it was first released in 1987 by Richard Stallman, GCC 1.0 was named the GNU C Compiler since it only handled the C programming language. It was extended to compile C++ in December of that year. Front ends were later developed for Objective-C, Objective-C++, Fortran, Ada, D and Go, among others. The OpenMP and OpenACC specifications are also supported in the C and C ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

LLVM

LLVM is a set of compiler and toolchain technologies that can be used to develop a front end for any programming language and a back end for any instruction set architecture. LLVM is designed around a language-independent intermediate representation (IR) that serves as a portable, high-level assembly language that can be optimized with a variety of transformations over multiple passes. LLVM is written in C++ and is designed for compile-time, link-time, run-time, and "idle-time" optimization. Originally implemented for C and C++, the language-agnostic design of LLVM has since spawned a wide variety of front ends: languages with compilers that use LLVM (or which do not directly use LLVM but can generate compiled programs as LLVM IR) include ActionScript, Ada, C#, Common Lisp, PicoLisp, Crystal, CUDA, D, Delphi, Dylan, Forth, Fortran, Free Basic, Free Pascal, Graphical G, Halide, Haskell, Java bytecode, Julia, Kotlin, Lua, Objective-C, OpenCL, PostgreSQL's SQL and PLpg ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Scalable Vector Extension

AArch64 or ARM64 is the 64-bit extension of the ARM architecture family. It was first introduced with the Armv8-A architecture. Arm releases a new extension every year. ARMv8.x and ARMv9.x extensions and features Announced in October 2011, ARMv8-A represents a fundamental change to the ARM architecture. It adds an optional 64-bit architecture, named "AArch64", and the associated new "A64" instruction set. AArch64 provides user-space compatibility with the existing 32-bit architecture ("AArch32" / ARMv7-A), and instruction set ("A32"). The 16-32bit Thumb instruction set is referred to as "T32" and has no 64-bit counterpart. ARMv8-A allows 32-bit applications to be executed in a 64-bit OS, and a 32-bit OS to be under the control of a 64-bit hypervisor. ARM announced their Cortex-A53 and Cortex-A57 cores on 30 October 2012. Apple was the first to release an ARMv8-A compatible core (Cyclone) in a consumer product (iPhone 5S). AppliedMicro, using an FPGA, was the first to demo ARMv8 ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

PowerPC G4

PowerPC G4 is a designation formerly used by Apple and Eyetech to describe a ''fourth generation'' of 32-bit PowerPC microprocessors. Apple has applied this name to various (though closely related) processor models from Freescale, a former part of Motorola. Motorola and Freescale's proper name of this family of processors is PowerPC 74xx. Macintosh computers such as the PowerBook G4 and iBook G4 laptops and the Power Mac G4 and Power Mac G4 Cube desktops all took their name from the processor. PowerPC G4 processors were also used in the eMac, first-generation Xserves, first-generation Mac Minis, and the iMac G4 before the introduction of the PowerPC 970. Apple completely phased out the G4 series for desktop models after it selected the 64-bit IBM-produced PowerPC 970 processor as the basis for its PowerPC G5 series. The last desktop model that used the G4 was the Mac Mini which now comes with an Apple M1 processor. The last portable to use the G4 was the iBook G4 but was replaced ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

AVX-512

AVX-512 are 512-bit extensions to the 256-bit Advanced Vector Extensions SIMD instructions for x86 instruction set architecture (ISA) proposed by Intel in July 2013, and implemented in Intel's Xeon Phi x200 (Knights Landing) and Skylake-X CPUs; this includes the Core-X series (excluding the Core i5-7640X and Core i7-7740X), as well as the new Xeon Scalable Processor Family and Xeon D-2100 Embedded Series. AVX-512 consists of multiple extensions that may be implemented independently. This policy is a departure from the historical requirement of implementing the entire instruction block. Only the core extension AVX-512F (AVX-512 Foundation) is required by all AVX-512 implementations. Besides widening most 256-bit instructions, the extensions introduce various new operations, such as new data conversions, scatter operations, and permutations. The number of AVX registers is increased from 16 to 32, and eight new "mask registers" are added, which allow for variable selection and blendi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Power ISA

Power ISA is a reduced instruction set computer (RISC) instruction set architecture (ISA) currently developed by the OpenPOWER Foundation, led by IBM. It was originally developed by IBM and the now-defunct Power.org industry group. Power ISA is an evolution of the PowerPC ISA, created by the mergers of the core PowerPC ISA and the optional Book E for embedded applications. The merger of these two components in 2006 was led by Power.org founders IBM and Freescale Semiconductor. The ISA is divided into several ''categories'' which are described in a certain ''Book''. Processors implement a set of these categories as required for their task. Different classes of processors are required to implement certain categories, for example a server-class processor includes the categories: ''Base'', ''Server'', ''Floating-Point'', ''64-Bit'', etc. All processors implement the Base category. Power ISA is a RISC load/store architecture. It has multiple sets of registers: * ''32'' × 32-b ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

SIMD

Single instruction, multiple data (SIMD) is a type of parallel processing in Flynn's taxonomy. SIMD can be internal (part of the hardware design) and it can be directly accessible through an instruction set architecture (ISA), but it should not be confused with an ISA. SIMD describes computers with multiple processing elements that perform the same operation on multiple data points simultaneously. Such machines exploit data level parallelism, but not concurrency: there are simultaneous (parallel) computations, but each unit performs the exact same instruction at any given moment (just with different data). SIMD is particularly applicable to common tasks such as adjusting the contrast in a digital image or adjusting the volume of digital audio. Most modern CPU designs include SIMD instructions to improve the performance of multimedia use. SIMD has three different subcategories in Flynn's 1972 Taxonomy, one of which is SIMT. SIMT should not be confused with software thr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

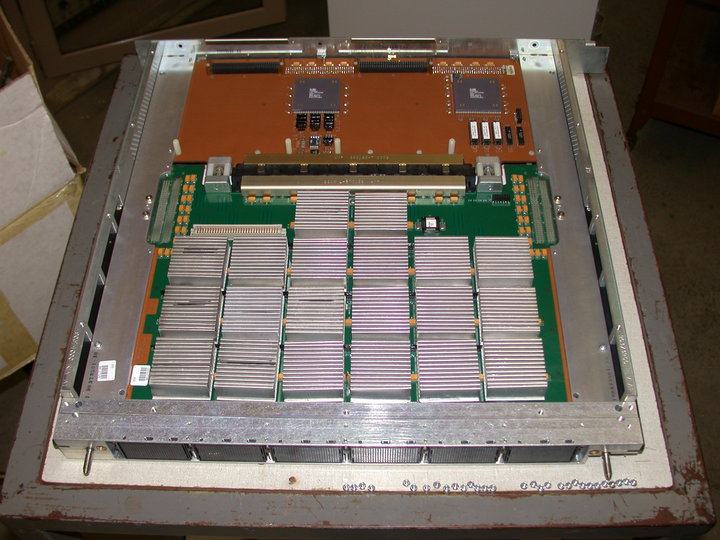

Vector Processing

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set where its instructions are designed to operate efficiently and effectively on large one-dimensional arrays of data called ''vectors''. This is in contrast to scalar processors, whose instructions operate on single data items only, and in contrast to some of those same scalar processors having additional single instruction, multiple data (SIMD) or SWAR Arithmetic Units. Vector processors can greatly improve performance on certain workloads, notably numerical simulation and similar tasks. Vector processing techniques also operate in video-game console hardware and in graphics accelerators. Vector machines appeared in the early 1970s and dominated supercomputer design through the 1970s into the 1990s, notably the various Cray platforms. The rapid fall in the price-to-performance ratio of conventional microprocessor designs led to a decline in vector supercomput ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |