Negative Entropy

In information theory and statistics, negentropy is used as a measure of distance to normality. The concept and the phrase "negative entropy" were introduced by Erwin Schrödinger in his 1944 popular-science book *What is Life?* Later, Léon Brillouin shortened the phrase to *negentropy*. In 1974, Albert Szent-Györgyi proposed replacing the term *negentropy* with *syntropy*. That term may have originated in the 1940s with the Italian mathematician Luigi Fantappiè, who tried to construct a unified theory of biology and physics. Buckminster Fuller tried to popularize this usage, but *negentropy* remains common. In a note to *What is Life?* Schrödinger explained his use of this phrase.

Information theory: Out of all distributions with a given mean and variance, the normal or Gaussian distribution is the one with the highest entropy. Negentropy measures the difference in ent ...
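As a rough illustration of "distance to normality", the sketch below assumes the usual definition of negentropy as the entropy of a Gaussian with the same variance minus the entropy of the distribution itself (the summary above is cut off before stating it). The function names are hypothetical, and the uniform distribution is chosen only because its entropy has a simple closed form.

```python
import math


def gaussian_entropy(variance):
    """Differential entropy (in nats) of a Gaussian with the given variance."""
    return 0.5 * math.log(2 * math.pi * math.e * variance)


def uniform_entropy(a, b):
    """Differential entropy (in nats) of the uniform distribution on [a, b]."""
    return math.log(b - a)


def negentropy_uniform(a, b):
    """Assumed definition: entropy of the variance-matched Gaussian minus the entropy of X."""
    variance = (b - a) ** 2 / 12  # variance of a uniform distribution on [a, b]
    return gaussian_entropy(variance) - uniform_entropy(a, b)


if __name__ == "__main__":
    print(negentropy_uniform(0.0, 1.0))   # positive: the uniform is not Gaussian
    print(negentropy_uniform(-3.0, 7.0))  # same value: shifting and rescaling do not matter
```

Under this definition the result is never negative, because the Gaussian has the highest entropy for a given variance, and it is zero only for a Gaussian.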

Entropy And Life

Research concerning the relationship between the thermodynamic quantity entropy and the evolution of life began around the turn of the 20th century. In 1910, American historian Henry Adams printed and distributed to university libraries and history professors the small volume *A Letter to American Teachers of History*, proposing a theory of history based on the second law of thermodynamics and on the principle of entropy. The 1944 book *What is Life?* by Nobel-laureate physicist Erwin Schrödinger stimulated further research in the field. In his book, Schrödinger originally stated that life feeds on negative entropy, or negentropy as it is sometimes called, but in a later edition he corrected himself in response to complaints and stated that the true source is free energy. More recent work has restricted the discussion to Gibbs free energy because biological processes on Earth normally occur at a constant temperature and pressure, such as in the atmosphere or at the bottom of th ...

Differential Entropy

Differential entropy (also referred to as continuous entropy) is a concept in information theory that began as an attempt by Claude Shannon to extend the idea of (Shannon) entropy, a measure of the average surprisal of a random variable, to continuous probability distributions. Shannon did not derive this formula; he assumed it was the correct continuous analogue of discrete entropy, but it is not. The actual continuous version of discrete entropy is the limiting density of discrete points (LDDP). Differential entropy (described here) is commonly encountered in the literature, but it is a limiting case of the LDDP, and one that loses its fundamental association with discrete entropy. In terms of measure theory, the differential entropy of a probability measure is the negative relative entropy from that measure to the Lebesgue measure, where the latter is treated as if it were a probability measure, despite being unnormalized.

Definition: Let X be a r ...
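One way to see why differential entropy is not a faithful analogue of discrete entropy is that it can be negative. The sketch below assumes the standard formula h(X) = -∫ f(x) ln f(x) dx (the definition above is truncated) and approximates it with a simple midpoint rule; the function names and step count are illustrative choices.

```python
import math


def differential_entropy(pdf, lower, upper, steps=20000):
    """Approximate -integral of f(x) * ln f(x) over [lower, upper] (in nats), midpoint rule."""
    width = (upper - lower) / steps
    total = 0.0
    for i in range(steps):
        x = lower + (i + 0.5) * width
        fx = pdf(x)
        if fx > 0.0:  # the integrand is taken as 0 where the density vanishes
            total -= fx * math.log(fx) * width
    return total


def standard_normal_pdf(x):
    """Density of the Gaussian with mean 0 and variance 1."""
    return math.exp(-0.5 * x * x) / math.sqrt(2 * math.pi)


if __name__ == "__main__":
    # Uniform density on [0, 0.5]: h = ln(0.5), which is negative.
    print(differential_entropy(lambda x: 2.0, 0.0, 0.5))
    # Standard normal, truncated to [-10, 10]: close to 0.5 * ln(2 * pi * e).
    print(differential_entropy(standard_normal_pdf, -10.0, 10.0))
```

A discrete entropy can never drop below zero, so the negative value for the narrow uniform density shows the two quantities behave differently.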

Max Planck

Max Karl Ernst Ludwig Planck (23 April 1858 – 4 October 1947) was a German theoretical physicist whose discovery of energy quanta won him the Nobel Prize in Physics in 1918. Planck made many substantial contributions to theoretical physics, but his fame as a physicist rests primarily on his role as the originator of quantum theory, which revolutionized human understanding of atomic and subatomic processes. In 1948, the German scientific institution Kaiser Wilhelm Society (of which Planck was twice president) was renamed the Max Planck Society (MPG). The MPG now includes 83 institutions representing a wide range of scientific directions.

Life and career: Planck came from a traditional, intellectual family. His paternal great-grandfather and grandfather were both theology professors in Göttingen; his father was a law professor at the universities of Kiel and Munich. One of his uncles was also a judge. Planck was born in 1858 in Kiel, Holstein, to Johann Julius Wilhel ...

Isothermal Process

In thermodynamics, an isothermal process is a type of thermodynamic process in which the temperature *T* of a system remains constant: Δ*T* = 0. This typically occurs when a system is in contact with an outside thermal reservoir, and a change in the system occurs slowly enough to allow the system to be continuously adjusted to the temperature of the reservoir through heat exchange (see quasi-equilibrium). In contrast, an *adiabatic process* is one in which a system exchanges no heat with its surroundings (*Q* = 0). Put simply, in an isothermal process:

* T = \text{constant}
* \Delta T = 0
* dT = 0
* For ideal gases only, internal energy \Delta U = 0

while in adiabatic processes:

* Q = 0

Etymology: The adjective "isothermal" is derived from the Greek words "ἴσος" ("isos") meaning "equal" and "θέρμη" ("therme") meaning "heat".

Examples: Isothermal processes can occur in any kind of system that has some means of regulating the temperature, includ ...
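For an ideal gas, the condition ΔU = 0 means that all heat absorbed during a reversible isothermal expansion leaves the system as work. The sketch below assumes the standard textbook result W = nRT ln(V2/V1) for the work done by the gas, which is not stated in the summary above; the function name and the sample values are illustrative.

```python
import math

R = 8.314462618  # molar gas constant in J/(mol*K)


def isothermal_work(moles, temperature_k, v_initial, v_final):
    """Work done BY an ideal gas in a reversible isothermal volume change, in joules."""
    return moles * R * temperature_k * math.log(v_final / v_initial)


if __name__ == "__main__":
    # One mole at 300 K doubling its volume (only the volume ratio matters).
    work = isothermal_work(1.0, 300.0, 1.0, 2.0)
    heat = work  # Delta U = 0 for an ideal gas, so Q equals W
    print(work, heat)
```

Compression (final volume smaller than initial) gives a negative value, meaning work is done on the gas and the same amount of heat flows out to the reservoir.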

François Jacques Dominique Massieu

François is a French masculine given name and surname, equivalent to the English name Francis.

People with the given name:

* Francis I of France, King of France, known as "the Father and Restorer of Letters"
* Francis II of France, King of France and King consort of Scots, known as the husband of Mary Stuart, Queen of Scots
* François Amoudruz (1926–2020), French resistance fighter
* François-Marie Arouet (better known as Voltaire; 1694–1778), French Enlightenment writer, historian, and philosopher
* François Aubry, several people
* François Baby, several people
* François Beauchemin (born 1980), Canadian ice hockey player for the Anaheim Ducks
* François Blanc (1806–1877), French entrepreneur and operator of casinos
* François Boucher, several people
* François Caron, several people
* François Cevert (1944–1973), French racing driver
* François Chau (born 1959), Cambodian American actor
* F ...

Capacity For Entropy

In information theory and statistics, negentropy is used as a measure of distance to normality. The concept and the phrase "negative entropy" were introduced by Erwin Schrödinger in his 1944 popular-science book *What is Life?* Later, Léon Brillouin shortened the phrase to *negentropy*. In 1974, Albert Szent-Györgyi proposed replacing the term *negentropy* with *syntropy*. That term may have originated in the 1940s with the Italian mathematician Luigi Fantappiè, who tried to construct a unified theory of biology and physics. Buckminster Fuller tried to popularize this usage, but *negentropy* remains common. In a note to *What is Life?* Schrödinger explained his use of this phrase.

Information theory: Out of all distributions with a given mean and variance, the normal or Gaussian distribution is the one with the highest entropy. Negentropy measures the difference in en ...

Josiah Willard Gibbs

Josiah Willard Gibbs (February 11, 1839 – April 28, 1903) was an American scientist who made significant theoretical contributions to physics, chemistry, and mathematics. His work on the applications of thermodynamics was instrumental in transforming physical chemistry into a rigorous inductive science. Together with James Clerk Maxwell and Ludwig Boltzmann, he created statistical mechanics (a term that he coined), explaining the laws of thermodynamics as consequences of the statistical properties of ensembles of the possible states of a physical system composed of many particles. Gibbs also worked on the application of Maxwell's equations to problems in physical optics. As a mathematician, he invented modern vector calculus (independently of the British scientist Oliver Heaviside, who carried out similar work during the same period). In 1863, Yale awarded Gibbs the first American doctorate ...

Free Enthalpy

In thermodynamics, the Gibbs free energy (or Gibbs energy; symbol G) is a thermodynamic potential that can be used to calculate the maximum amount of work that may be performed by a thermodynamically closed system at constant temperature and pressure. It also provides a necessary condition for processes such as chemical reactions that may occur under these conditions. The Gibbs free energy change (\Delta G = \Delta H - T \Delta S, measured in joules in SI) is the *maximum* amount of non-expansion work that can be extracted from a closed system (one that can exchange heat and work with its surroundings, but not matter) at fixed temperature and pressure. This maximum can be attained only in a completely reversible process. When a system transforms reversibly from an initial state to a final state under these conditions, the decrease in Gibbs free energy equals the work done by the system on its surroundings, minus the work of the pressure forces. The Gibbs energy is the thermodynamic potential that is minimi ...
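A small numerical sketch can make the sign of the Gibbs free energy change concrete. It assumes the standard relation ΔG = ΔH - TΔS at constant temperature and pressure; the function name is hypothetical, and the figures for melting ice (about 6010 J/mol and 22.0 J/(mol·K)) are rounded textbook values used only for illustration.

```python
def gibbs_free_energy_change(delta_h, temperature_k, delta_s):
    """Return Delta G = Delta H - T * Delta S (J/mol when inputs are J/mol and J/(mol*K))."""
    return delta_h - temperature_k * delta_s


# Approximate, rounded values for the melting of ice (illustrative assumptions).
DELTA_H_FUSION = 6010.0  # J/mol
DELTA_S_FUSION = 22.0    # J/(mol*K)

if __name__ == "__main__":
    for temperature in (263.15, 273.15, 298.15):
        delta_g = gibbs_free_energy_change(DELTA_H_FUSION, temperature, DELTA_S_FUSION)
        print(temperature, delta_g)
```

With these inputs the change is positive below the melting point, close to zero near 273 K, and negative above it, matching the observation that ice melts spontaneously only above roughly 0 °C.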

Kullback–Leibler Divergence

In mathematical statistics, the Kullback–Leibler divergence (also called relative entropy and I-divergence), denoted D_\text{KL}(P \parallel Q), is a type of statistical distance: a measure of how one probability distribution *P* is different from a second, reference probability distribution *Q*. A simple interpretation of the KL divergence of *P* from *Q* is the expected excess surprise from using *Q* as a model when the actual distribution is *P*. While it is a distance, it is not a metric, the most familiar type of distance: it is not symmetric in the two distributions (in contrast to variation of information), and does not satisfy the triangle inequality. Instead, in terms of information geometry, it is a type of divergence, a generalization of squared distance, and for certain classes of distributions (notably an exponential family), it satisfies a generalized Pythagorean theorem (which applies to squared distances). In the simple case, a relative entropy ...
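The asymmetry described above is easy to check numerically. The sketch below assumes the standard discrete formula, the sum over outcomes of P(x) ln(P(x) / Q(x)), with natural logarithms; the function name and the two example distributions are illustrative.

```python
import math


def kl_divergence(p, q):
    """Discrete KL divergence of P from Q in nats; p and q are probability lists of equal length."""
    if len(p) != len(q):
        raise ValueError("distributions must have the same number of outcomes")
    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i == 0.0:
            continue  # terms with P(x) = 0 contribute nothing
        if q_i == 0.0:
            return math.inf  # Q rules out an outcome that P allows
        total += p_i * math.log(p_i / q_i)
    return total


if __name__ == "__main__":
    p = [0.5, 0.5]
    q = [0.9, 0.1]
    print(kl_divergence(p, q))  # divergence of P from Q
    print(kl_divergence(q, p))  # a different value: the measure is not symmetric
    print(kl_divergence(p, p))  # zero when the distributions coincide
```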

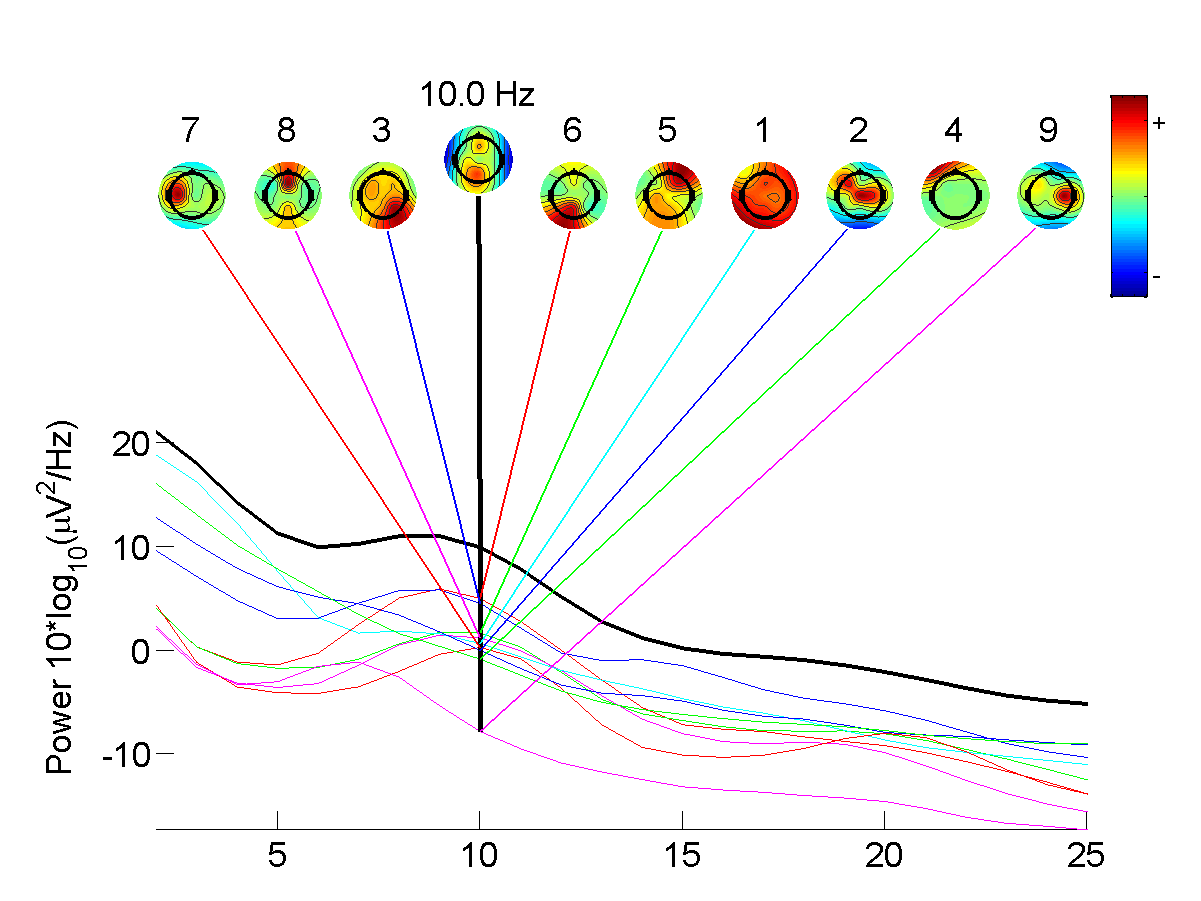

Independent Component Analysis

In signal processing, independent component analysis (ICA) is a computational method for separating a multivariate signal into additive subcomponents. This is done by assuming that at most one subcomponent is Gaussian and that the subcomponents are statistically independent from each other. ICA is a special case of blind source separation. A common example application is the "cocktail party problem" of listening in on one person's speech in a noisy room.

Introduction: Independent component analysis attempts to decompose a multivariate signal into independent non-Gaussian signals. As an example, sound is usually a signal that is composed of the numerical addition, at each time t, of signals from several sources. The question then is whether it is possible to separate these contributing sources from the observed total signal. When the statistical independence assumption is correct, blind ICA separation of a mixed signal gives very good results. It is also used for signals that a ...

Information Entropy

In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes. Given a discrete random variable X, which takes values in the alphabet \mathcal{X} and is distributed according to p: \mathcal{X} \to [0, 1]: \mathrm{H}(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x) = \mathbb{E}[-\log p(X)], where \Sigma denotes the sum over the variable's possible values. The choice of base for \log, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), while base *e* gives "natural units" (nats), and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a variable. The concept of information entropy was introduced by Claude Shannon in his 1948 paper "A Mathematical Theory of Communication", and is also referred to as Shannon entropy. Shannon's theory ...
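The definition and the effect of the logarithm base can be tried out directly. This is a minimal sketch of the sum over outcomes of -p(x) log p(x) for a finite distribution given as a list of probabilities; the function name is hypothetical, and outcomes with zero probability are skipped by the usual convention that they contribute nothing.

```python
import math


def shannon_entropy(probabilities, base=2.0):
    """Entropy of a discrete distribution; base 2 gives bits, e gives nats, 10 gives hartleys."""
    total = 0.0
    for p in probabilities:
        if p > 0.0:  # outcomes with zero probability add nothing to the sum
            total -= p * math.log(p, base)
    return total


if __name__ == "__main__":
    fair_coin = [0.5, 0.5]
    fair_die = [1.0 / 6.0] * 6
    print(shannon_entropy(fair_coin))               # entropy of a fair coin, in bits
    print(shannon_entropy(fair_die))                # entropy of a fair die, in bits
    print(shannon_entropy(fair_coin, base=math.e))  # the same coin, in nats
    print(shannon_entropy([1.0, 0.0]))              # a certain outcome carries no surprise
```

Changing the base only rescales the result, so a value in nats is the value in bits multiplied by ln 2.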