
Memory Consistency

In computer science, a consistency model specifies a contract between the programmer and a system, wherein the system guarantees that if the programmer follows the rules for operations on memory, memory will be consistent and the results of reading, writing, or updating memory will be predictable. Consistency models are used in distributed systems like distributed shared memory systems or distributed data stores (such as filesystems, databases, optimistic replication systems or web caching). Consistency is different from coherence, which occurs in systems that are cached or cache-less, and is consistency of data with respect to all processors. Coherence deals with maintaining a global order in which writes to a single location or single variable are seen by all processors. Consistency deals with the ordering of operations to multiple locations with respect to all processors. High-level languages, such as C++ and Java, maintain the consistency contract by translating memory ...
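The distinction between ordering across multiple locations and ordering at a single location can be made concrete with a small litmus test. The following is a minimal sketch, not taken from the source: it assumes sequential consistency as the model under test and enumerates every interleaving of two hypothetical two-instruction threads (`THREAD_A`, `THREAD_B`) that respects program order. Under that assumption, the outcome in which both reads return 0 never appears.

```python
from itertools import combinations

# Store-buffering litmus test (illustrative): each thread writes one
# variable and then reads the other one.
THREAD_A = [("write", "x", 1), ("read", "y", "r1")]
THREAD_B = [("write", "y", 1), ("read", "x", "r2")]


def interleavings(a, b):
    """Yield every merge of a and b that preserves each thread's program order."""
    n = len(a) + len(b)
    for a_slots in combinations(range(n), len(a)):
        a_iter, b_iter = iter(a), iter(b)
        yield [next(a_iter) if i in a_slots else next(b_iter) for i in range(n)]


def run(schedule):
    """Execute one interleaving against a single shared memory."""
    memory = {"x": 0, "y": 0}
    registers = {}
    for kind, var, arg in schedule:
        if kind == "write":
            memory[var] = arg
        else:
            registers[arg] = memory[var]
    return registers["r1"], registers["r2"]


def sc_outcomes():
    """Return the set of (r1, r2) results reachable under sequential consistency."""
    return {run(s) for s in interleavings(THREAD_A, THREAD_B)}


if __name__ == "__main__":
    print(sorted(sc_outcomes()))
```

Weaker models may admit the missing `(0, 0)` outcome, which is why the contract between programmer and system has to state which reorderings are allowed.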

Computer Science

Computer science is the study of computation, information, and automation. Computer science spans theoretical disciplines (such as algorithms, theory of computation, and information theory) to applied disciplines (including the design and implementation of hardware and software). Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and preventing security vulnerabilities. Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of re ...

Causal Consistency

Causal consistency is one of the major memory consistency models. In concurrent programming, where concurrent processes are accessing a shared memory, a consistency model restricts which accesses are legal. This is useful for defining correct data structures in distributed shared memory or distributed transactions. Causal consistency is "Available under Partition", meaning that a process can read and write the memory (memory is Available) even while there is no functioning network connection (network is Partitioned) between processes; it is an asynchronous model. Contrast this with strong consistency models, such as sequential consistency or linearizability, which cannot be both safe and live under partition, and are slow to respond because they require synchronisation. Causal consistency was proposed in the 1990s as a weaker consistency model for shared memory models. Causal consistency is closely related to the concept of Causal Broadcast in communication protocols. In these ...
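The link to causal broadcast can be sketched with vector clocks. The example below is an illustrative assumption rather than the protocol described in the source: the class `CausalReplica` and its methods are hypothetical names, each replica applies its own writes immediately (so it stays available), and a remote write is buffered until every write it causally depends on has been applied.

```python
class CausalReplica:
    """Hypothetical replica that delivers remote writes in causal order."""

    def __init__(self, replica_id, num_replicas):
        self.id = replica_id
        self.clock = [0] * num_replicas  # writes applied so far, per origin replica
        self.store = {}
        self.pending = []                # remote writes waiting on dependencies

    def write(self, key, value):
        """Apply a local write at once and return the message to broadcast."""
        self.clock[self.id] += 1
        self.store[key] = value
        return (self.id, list(self.clock), key, value)

    def _ready(self, sender, stamp):
        # Deliverable if it is the sender's next write and it depends on
        # nothing this replica has not already applied.
        return (stamp[sender] == self.clock[sender] + 1 and
                all(stamp[k] <= self.clock[k]
                    for k in range(len(stamp)) if k != sender))

    def receive(self, message):
        """Buffer the message, then apply everything that has become deliverable."""
        self.pending.append(message)
        progress = True
        while progress:
            progress = False
            for msg in list(self.pending):
                sender, stamp, key, value = msg
                if self._ready(sender, stamp):
                    self.store[key] = value
                    self.clock[sender] = stamp[sender]
                    self.pending.remove(msg)
                    progress = True


if __name__ == "__main__":
    r0, r1, r2 = (CausalReplica(i, 3) for i in range(3))
    m1 = r0.write("x", 1)   # first write
    r1.receive(m1)
    m2 = r1.write("y", 2)   # causally after m1
    r2.receive(m2)          # arrives early, so it is buffered
    r2.receive(m1)          # unblocks m2
    print(r2.store)
```

No replica ever waits on the network before answering a local read or write; only the visibility of remote writes is delayed.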

Weak Consistency

The name weak consistency can be used in two senses. In the first sense, strict and more popular, weak consistency is one of the consistency models used in the domain of concurrent programming (e.g. in distributed shared memory, distributed transactions etc.). A protocol is said to support weak consistency if: (1) all accesses to synchronization variables are seen by all processes (or nodes, processors) in the same order (sequentially); these are synchronization operations, and accesses to critical sections are seen sequentially; (2) all other accesses may be seen in a different order on different processes (or nodes, processors); (3) the set of both read and write operations in between different synchronization operations is the same in each process. Therefore, there can be no access to a synchronization variable if there are pending write operations, and no new read/write operation can be started while the system is performing a synchronization operation. In the second, more genera ...

Vector-field Consistency

In vector calculus and physics, a vector field is an assignment of a vector to each point in a space, most commonly Euclidean space \mathbb{R}^n. A vector field on a plane can be visualized as a collection of arrows with given magnitudes and directions, each attached to a point on the plane. Vector fields are often used to model, for example, the speed and direction of a moving fluid throughout three-dimensional space, such as the wind, or the strength and direction of some force, such as the magnetic or gravitational force, as it changes from one point to another point. The elements of differential and integral calculus extend naturally to vector fields. When a vector field represents force, the line integral of a vector field represents the work done by a force moving along a path, and under this interpretation conservation of energy is exhibited as a special case of the fundamental theorem of calculus. Vector fields can usefully be thought of as representing the velocity o ...

Serializability

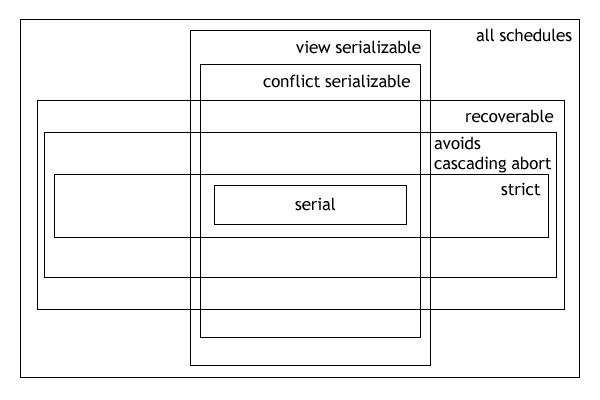

In the fields of databases and transaction processing (transaction management), a schedule (or history) of a system is an abstract model to describe the order of executions in a set of transactions running in the system. Often it is a list of operations (actions) ordered by time, performed by a set of transactions that are executed together in the system. If the order in time between certain operations is not determined by the system, then a partial order is used. Examples of such operations are requesting a read operation, reading, writing, aborting, committing, requesting a lock, locking, etc. Often, only a subset of the transaction operation types are included in a schedule. Schedules are fundamental concepts in database concurrency control theory. In practice, most general purpose database systems employ conflict-serializable and strict recoverable schedules. Notation. Grid notation: * Columns: The different transactions in the schedule. * Rows: The time order of ...
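Conflict serializability of a schedule is commonly tested with a precedence graph. The sketch below is an illustration under assumed conventions, not a notation taken from the source: a schedule is a time-ordered list of hypothetical `(transaction, op, item)` tuples with `op` being `"r"` or `"w"`, and the schedule is treated as conflict-serializable exactly when the graph of conflicting pairs has no cycle.

```python
def precedence_edges(schedule):
    """Return edges (t1, t2) where an operation of t1 conflicts with a later one of t2.

    Two operations conflict when they belong to different transactions,
    touch the same item, and at least one of them is a write.
    """
    edges = set()
    for i, (t1, op1, item1) in enumerate(schedule):
        for t2, op2, item2 in schedule[i + 1:]:
            if t1 != t2 and item1 == item2 and "w" in (op1, op2):
                edges.add((t1, t2))
    return edges


def is_conflict_serializable(schedule):
    """True if the precedence graph is acyclic (checked by peeling off source nodes)."""
    edges = precedence_edges(schedule)
    nodes = {t for t, _, _ in schedule}
    while nodes:
        # Nodes with no incoming edge from a transaction that is still present.
        free = {n for n in nodes
                if not any(dst == n and src in nodes for src, dst in edges)}
        if not free:
            return False  # every remaining node sits on a cycle
        nodes -= free
    return True


if __name__ == "__main__":
    serial = [("T1", "r", "A"), ("T1", "w", "A"), ("T2", "r", "A"), ("T2", "w", "A")]
    tangled = [("T1", "r", "A"), ("T2", "w", "A"), ("T1", "w", "A")]
    print(is_conflict_serializable(serial), is_conflict_serializable(tangled))
```

The order in which source nodes are removed is one equivalent serial order of the transactions.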

Fork Consistency

In cutlery or kitchenware, a fork (from Latin furca, 'pitchfork') is a utensil, now usually made of metal, whose long handle terminates in a head that branches into several narrow and often slightly curved tines with which one can spear foods either to hold them to cut with a knife or to lift them to the mouth. History: Bone forks have been found in archaeological sites of the Bronze Age Qijia culture (2400–1900 BC), the Shang dynasty (c. 1600–c. 1050 BC), as well as later Chinese dynasties (Needham (2000), Science and Civilisation in China, Volume 6: Biology and biological technology, Part V: Fermentations and food science, Cambridge University Press, pp. 105–110). A stone carving from an Eastern Han tomb (in Ta-kua-liang, Suide County, Shaanxi) depicts three hanging two-pronged forks in a dining scene. Similar forks have also been depicted on top of a stove in a scene at another Eastern Han tomb (in Suide County, Shaanxi). In Ancient Egypt, large forks were used as cooking ute ...

Delta Consistency

Delta consistency is one of the consistency models used in the domain of parallel programming, for example in distributed shared memory, distributed transactions, and optimistic replication. The delta consistency model states that an update will propagate through the system and all replicas will be consistent after a fixed time period δ. In other words, the result of any read operation is consistent with a read on the original copy of an object except for a (short) bounded interval after a modification. If an object is modified, reads during the short period following the modification may not be consistent. Then, after a fixed time period, the modification is propagated and the read will be co ...
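The bounded staleness window can be illustrated with a toy replica driven by an explicit logical clock. This is a minimal sketch under stated assumptions: `DeltaReplica`, `propagate`, and `read` are hypothetical names, time is an integer passed in by the caller, and every update is modelled at its worst case, becoming visible exactly δ time units after it was written.

```python
class DeltaReplica:
    """Hypothetical replica whose reads lag the original copy by at most delta."""

    def __init__(self, delta, initial=None):
        self.delta = delta
        self.value = initial
        self.in_flight = []  # list of (visible_at, value) pairs

    def propagate(self, value, written_at):
        """Record an update made to the original copy at time written_at."""
        self.in_flight.append((written_at + self.delta, value))

    def read(self, now):
        """Apply every update whose delay has elapsed, then return the value."""
        due = sorted((u for u in self.in_flight if u[0] <= now),
                     key=lambda u: u[0])
        for _, value in due:
            self.value = value
        self.in_flight = [u for u in self.in_flight if u[0] > now]
        return self.value


if __name__ == "__main__":
    replica = DeltaReplica(delta=5, initial="old")
    replica.propagate("new", written_at=10)
    print(replica.read(now=12))  # still inside the window
    print(replica.read(now=15))  # window has elapsed
```

A read at any time `now >= written_at + delta` is guaranteed to reflect the update, which is the bound the model promises.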

ACID (Computer Science)

An acid is a molecule or ion capable of either donating a proton (i.e. hydrogen cation, H+), known as a Brønsted–Lowry acid, or forming a covalent bond with an electron pair, known as a Lewis acid. The first category of acids are the proton donors, or Brønsted–Lowry acids. In the special case of aqueous solutions, proton donors form the hydronium ion H3O+ and are known as Arrhenius acids. Brønsted and Lowry generalized the Arrhenius theory to include non-aqueous solvents. A Brønsted–Lowry or Arrhenius acid usually contains a hydrogen atom bonded to a chemical structure that is still energetically favorable after loss of H+. Aqueous Arrhenius acids have characteristic properties that provide a practical description of an acid. Acids form aqueous solutions with a sour taste, can turn blue litmus red, and react with bases and certain metals (like calcium) to form salts. The word acid is derived from the Latin acidus, meaning 'sour'. An aqueous solution of an a ...

Eventual Consistency

Eventual consistency is a consistency model used in distributed computing to achieve high availability. Put simply: if no new updates are made to a given data item, eventually all accesses to that item will return the last updated value. Eventual consistency, also called optimistic replication, is widely deployed in distributed systems and has origins in early mobile computing projects. A system that has achieved eventual consistency is often said to have converged, or achieved replica convergence. Eventual consistency is a weak guarantee; most stronger models, like linearizability, are trivially eventually consistent. Eventually-consistent services are often classified as providing BASE semantics (basically-available, soft-state, eventual consistency), in contrast to traditional ACID (atomicity, consistency, isolation, durability). In chemistry, a base is the opposite of an acid, which helps in remembering the acronym. According to the same resource, these are the rou ...
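Replica convergence can be sketched with a last-writer-wins register. This is one possible reconciliation rule chosen for illustration, not the mechanism named in the source: `Versioned`, `merge`, and `LwwReplica` are hypothetical names, timestamps are caller-supplied integers, and ties are broken by replica id so that the merge is deterministic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Versioned:
    timestamp: int
    replica_id: str
    value: object


def merge(a, b):
    """Keep the later write; break timestamp ties by replica id."""
    return max(a, b, key=lambda v: (v.timestamp, v.replica_id))


class LwwReplica:
    """Hypothetical replica that accepts writes locally and reconciles later."""

    def __init__(self, replica_id):
        self.replica_id = replica_id
        self.state = Versioned(0, "", None)

    def write(self, value, timestamp):
        self.state = merge(self.state, Versioned(timestamp, self.replica_id, value))

    def sync(self, other):
        """Exchange state with another replica; both end up with the same value."""
        merged = merge(self.state, other.state)
        self.state = other.state = merged


if __name__ == "__main__":
    a, b = LwwReplica("a"), LwwReplica("b")
    a.write("red", timestamp=1)
    b.write("blue", timestamp=2)
    print(a.state.value, b.state.value)  # replicas disagree before syncing
    a.sync(b)
    print(a.state.value, b.state.value)  # replicas agree after syncing
```

Because `merge` is commutative, associative, and idempotent, the order and number of sync rounds do not change the value the replicas settle on once updates stop.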

Release Consistency

Release consistency is one of the synchronization-based consistency models used in concurrent programming (e.g. in distributed shared memory, distributed transactions etc.). In modern parallel computing systems, memory consistency must be maintained to avoid undesirable outcomes. Strict consistency models like sequential consistency are intuitive but can be quite restrictive in terms of performance, as they would disable instruction-level parallelism, which is widely applied in sequential programming. To achieve better performance, some relaxed models are explored, and release consistency is an aggressive attempt at relaxation. Release consistency vs. sequential consistency (hardware structure and program-level effort): Sequential consistency can be achieved simply by hardware implementation, while release consistency is also based on an observation that most parallel programs are properly synchronized. At the programming level, synchronization is applied to ...
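The idea that ordinary accesses only need to become visible at synchronization points can be modelled with a toy distributed shared memory. The following is a simplified sketch under stated assumptions, not an implementation from the source: `HomeMemory` and `RcNode` are hypothetical names, a single home copy stands in for the rest of the system, writes are buffered locally until `release`, and a node refreshes its view only on `acquire`. The lock that would normally pair an acquire with a release is omitted.

```python
class HomeMemory:
    """Hypothetical authoritative copy that nodes synchronize against."""

    def __init__(self):
        self.memory = {}


class RcNode:
    """Hypothetical node whose writes become visible to others only at release."""

    def __init__(self, home):
        self.home = home
        self.cache = {}   # this node's current view of memory
        self.dirty = {}   # local writes not yet published

    def acquire(self):
        """Refresh the local view, keeping any writes not yet released."""
        self.cache = dict(self.home.memory)
        self.cache.update(self.dirty)

    def write(self, key, value):
        self.cache[key] = value
        self.dirty[key] = value

    def read(self, key):
        return self.cache.get(key)

    def release(self):
        """Publish all buffered writes to the home copy."""
        self.home.memory.update(self.dirty)
        self.dirty.clear()


if __name__ == "__main__":
    home = HomeMemory()
    producer, consumer = RcNode(home), RcNode(home)
    producer.acquire()
    producer.write("data", 42)
    consumer.acquire()
    print(consumer.read("data"))  # not yet released by the producer
    producer.release()
    consumer.acquire()
    print(consumer.read("data"))  # visible after release followed by acquire
```

Between synchronization points the node is free to reorder or batch its ordinary writes, which is where the performance advantage over sequential consistency comes from.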

Slow Memory

Slow may refer to various basic dictionary-related meanings: * Slow velocity, the rate of change of position of a moving body ** Slow speed, in kinematics, the magnitude of the velocity of an object * Slow tempo, the speed or pace of a piece of music * Slow motion, an effect in film-making * Slow reaction rate, the speed at which a chemical reaction takes place Slow, SLOW, Slowing or Slowness may also refer to: Music * Slow (band), a 1980s Canadian band Albums * Slow (Richie Kotzen album), 2001 * Slow (Starflyer 59 album), 2016 * Slow (Luna Sea album), 2005 * Slow (Ann Hampton Callaway album), 2004 * Slowness (album), an album by cantopop singer Kay Tse Songs * "Slow" (Kylie Minogue song), 2003 * "Slow" (Rumer song), 2010 * "Slow" (Matoma song), 2017 * "Slow" (Jackson Wang & Ciara song), 2023 * "Slow" (Black Midi song), 2021 * "Slow", song by The Fratellis from the album Eyes Wide, Tongue Tied (2015) * "Slow", song by Lisa Mitchell from th ...