|

Delta Consistency

Delta consistency is one of the consistency models used in the domain of parallel programming, for example in distributed shared memory, distributed transactions, and Optimistic replication The delta consistency model states that an update will propagate through the system and all replicas will be consistent In classical deductive logic, a consistent theory is one that does not lead to a logical contradiction. The lack of contradiction can be defined in either semantic or syntactic terms. The semantic definition states that a theory is consisten ... after a fixed time period δ. In other words, the result of any read operation is consistent with a read on the original copy of an object except for a (short) bounded interval after a modification. So basically, if you have an object which is modified, during the short period of time following its modification, the read will not be consistent. Then, after a fixed time period, the modification is propagated and the read will b ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Consistency Model

In computer science, a consistency model specifies a contract between the programmer and a system, wherein the system guarantees that if the programmer follows the rules for operations on memory, memory will be consistent and the results of reading, writing, or updating memory will be predictable. Consistency models are used in distributed systems like distributed shared memory systems or distributed data stores (such as filesystems, databases, optimistic replication systems or web caching). Consistency is different from coherence, which occurs in systems that are cached or cache-less, and is consistency of data with respect to all processors. Coherence deals with maintaining a global order in which writes to a single location or single variable are seen by all processors. Consistency deals with the ordering of operations to multiple locations with respect to all processors. High level languages, such as C++ and Java, maintain the consistency contract by translating mem ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Parallel Programming

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance increases must largely come from increasing the number of processors (or cores) on a die, rather tha ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

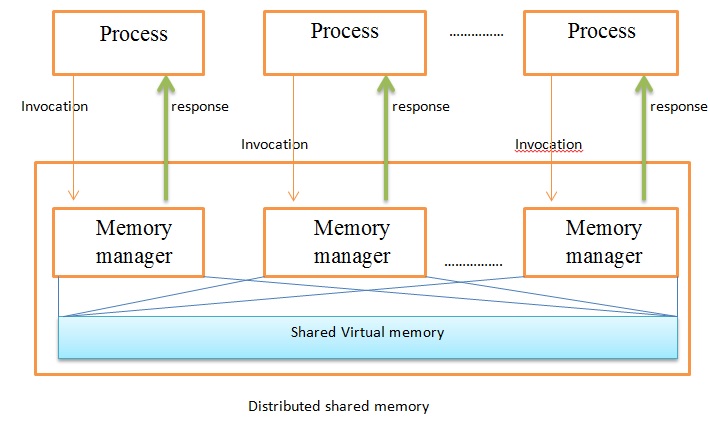

Distributed Shared Memory

In computer science, distributed shared memory (DSM) is a form of memory architecture where physically separated memories can be addressed as a single shared address space. The term "shared" does not mean that there is a single centralized memory, but that the address space is shared—i.e., the same physical address on two processors refers to the same location in memory. Distributed global address space (DGAS), is a similar term for a wide class of software and hardware implementations, in which each node of a cluster has access to shared memory in addition to each node's private (i.e., not shared) memory. Overview A distributed-memory system, often called a multicomputer, consists of multiple independent processing nodes with local memory modules which is connected by a general interconnection network. Software DSM systems can be implemented in an operating system, or as a programming library and can be thought of as extensions of the underlying virtual memory arch ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Distributed Transactions

A distributed transaction is a database transaction in which two or more network hosts are involved. Usually, hosts provide transactional resources, while the transaction manager is responsible for creating and managing a global transaction that encompasses all operations against such resources. Distributed transactions, as any other transactions, must have all four ACID (atomicity, consistency, isolation, durability) properties, where atomicity guarantees all-or-nothing outcomes for the unit of work (operations bundle). Open Group, a vendor consortium, proposed the X/Open Distributed Transaction Processing (DTP) Model (X/Open XA), which became a de facto standard for behavior of transaction model components. Databases are common transactional resources and, often, transactions span a couple of such databases. In this case, a distributed transaction can be seen as a database transaction that must be synchronized (or provide ACID properties) among multiple participating database ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Optimistic Replication

Optimistic replication, also known as lazy replication, is a strategy for replication, in which replicas are allowed to diverge. Traditional pessimistic replication systems try to guarantee from the beginning that all of the replicas are identical to each other, as if there was only a single copy of the data all along. Optimistic replication does away with this in favor of eventual consistency, meaning that replicas are guaranteed to converge only when the system has been quiesced for a period of time. As a result, there is no longer a need to wait for all of the copies to be synchronized when updating data, which helps concurrency and parallelism. The trade-off is that different replicas may require explicit reconciliation later on, which might then prove difficult or even insoluble. Algorithms An optimistic replication algorithm consists of five elements: # Operation submission: Users submit operations at independent sites. # Propagation: Each site shares the operations it ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Consistent

In classical deductive logic, a consistent theory is one that does not lead to a logical contradiction. The lack of contradiction can be defined in either semantic or syntactic terms. The semantic definition states that a theory is consistent if it has a model, i.e., there exists an interpretation under which all formulas in the theory are true. This is the sense used in traditional Aristotelian logic, although in contemporary mathematical logic the term ''satisfiable'' is used instead. The syntactic definition states a theory T is consistent if there is no formula \varphi such that both \varphi and its negation \lnot\varphi are elements of the set of consequences of T. Let A be a set of closed sentences (informally "axioms") and \langle A\rangle the set of closed sentences provable from A under some (specified, possibly implicitly) formal deductive system. The set of axioms A is consistent when \varphi, \lnot \varphi \in \langle A \rangle for no formula \varphi. If ther ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |