|

Multiple Instance Learning

In machine learning, multiple-instance learning (MIL) is a type of supervised learning. Instead of receiving a set of instances which are individually Labeled data, labeled, the learner receives a set of labeled ''bags'', each containing many instances. In the simple case of multiple-instance binary classification, a bag may be labeled negative if all the instances in it are negative. On the other hand, a bag is labeled positive if there is at least one instance in it which is positive. From a collection of labeled bags, the learner tries to either (i) induce a concept that will label individual instances correctly or (ii) learn how to label bags without inducing the concept. Babenko (2008)Babenko, Boris. "Multiple instance learning: algorithms and applications." View Article PubMed/NCBI Google Scholar (2008). gives a simple example for MIL. Imagine several people, and each of them has a key chain that contains few keys. Some of these people are able to enter a certain room, and ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of Computational statistics, statistical algorithms that can learn from data and generalise to unseen data, and thus perform Task (computing), tasks without explicit Machine code, instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed Neural network (machine learning), neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Sally Goldman

Sally Ann Goldman is an American computer scientist specializing in computational learning theory. She was a professor in the Department of Computer Science and Engineering at Washington University in St. Louis, and Edwin H. Murty Professor of Engineering, before leaving academia to join Google Research. She is also a successful amateur powerlifter. Education and career Goldman is originally from St. Louis, Missouri. She majored in computer science at Brown University, and then went to the Massachusetts Institute of Technology (MIT) for graduate study in computer science. She completed her Ph.D. there in 1990, with the dissertation ''Learning Binary Relations, Total Orders, and Read-Once Formulas'' supervised by Ron Rivest. As a faculty member at Washington University in St. Louis, she became Edwin H. Murty Professor of Engineering before leaving academia in 2008 to work for Google Research. Personal life Goldman was married to Kenneth J. Goldman, also a computer scientist fro ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Supervised Learning

In machine learning, supervised learning (SL) is a paradigm where a Statistical model, model is trained using input objects (e.g. a vector of predictor variables) and desired output values (also known as a ''supervisory signal''), which are often human-made labels. The training process builds a function that maps new data to expected output values. An optimal scenario will allow for the algorithm to accurately determine output values for unseen instances. This requires the learning algorithm to Generalization (learning), generalize from the training data to unseen situations in a reasonable way (see inductive bias). This statistical quality of an algorithm is measured via a ''generalization error''. Steps to follow To solve a given problem of supervised learning, the following steps must be performed: # Determine the type of training samples. Before doing anything else, the user should decide what kind of data is to be used as a Training, validation, and test data sets, trainin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Feature (machine Learning)

In machine learning and pattern recognition, a feature is an individual measurable property or characteristic of a data set. Choosing informative, discriminating, and independent features is crucial to produce effective algorithms for pattern recognition, classification, and regression tasks. Features are usually numeric, but other types such as strings and graphs are used in syntactic pattern recognition, after some pre-processing step such as one-hot encoding. The concept of "features" is related to that of explanatory variables used in statistical techniques such as linear regression. Feature types In feature engineering, two types of features are commonly used: numerical and categorical. Numerical features are continuous values that can be measured on a scale. Examples of numerical features include age, height, weight, and income. Numerical features can be used in machine learning algorithms directly. Categorical features are discrete values that can be grouped into ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Boosting (machine Learning)

In machine learning (ML), boosting is an Ensemble learning, ensemble metaheuristic for primarily reducing Bias–variance tradeoff, bias (as opposed to variance). It can also improve the Stability (learning theory), stability and accuracy of ML Statistical classification, classification and Regression analysis, regression algorithms. Hence, it is prevalent in supervised learning for converting weak learners to strong learners. The concept of boosting is based on the question posed by Michael Kearns (computer scientist), Kearns and Leslie Valiant, Valiant (1988, 1989):Michael Kearns(1988)''Thoughts on Hypothesis Boosting'' Unpublished manuscript (Machine Learning class project, December 1988) "Can a set of weak learners create a single strong learner?" A weak learner is defined as a Statistical classification, classifier that is only slightly correlated with the true classification. A strong learner is a classifier that is arbitrarily well-correlated with the true classification. R ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

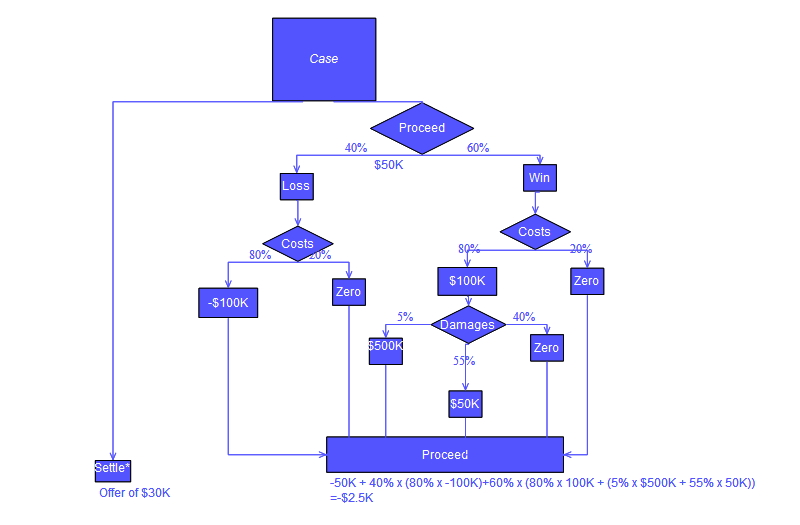

Decision Trees

A decision tree is a decision support system, decision support recursive partitioning structure that uses a Tree (graph theory), tree-like Causal model, model of decisions and their possible consequences, including probability, chance event outcomes, resource costs, and utility. It is one way to display an algorithm that only contains conditional control statements. Decision trees are commonly used in operations research, specifically in decision analysis, to help identify a strategy most likely to reach a goal, but are also a popular tool in Decision tree learning, machine learning. Overview A decision tree is a flowchart-like structure in which each internal node represents a test on an attribute (e.g. whether a coin flip comes up heads or tails), each branch represents the outcome of the test, and each leaf node represents a class label (decision taken after computing all attributes). The paths from root to leaf represent classification rules. In decision analysis, a de ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Neural Networks

A neural network is a group of interconnected units called neurons that send signals to one another. Neurons can be either Cell (biology), biological cells or signal pathways. While individual neurons are simple, many of them together in a network can perform complex tasks. There are two main types of neural networks. *In neuroscience, a ''biological neural network'' is a physical structure found in brains and complex nervous systems – a population of nerve cells connected by synapses. *In machine learning, an ''Neural network (machine learning), artificial neural network'' is a mathematical model used to approximate nonlinear functions. Artificial neural networks are used to solve artificial intelligence problems. In biology In the context of biology, a neural network is a population of biological neurons chemically connected to each other by synapses. A given neuron can be connected to hundreds of thousands of synapses. Each neuron sends and receives Electrochemistry, ele ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Support Vector Machines

In machine learning, support vector machines (SVMs, also support vector networks) are supervised max-margin models with associated learning algorithms that analyze data for classification and regression analysis. Developed at AT&T Bell Laboratories, SVMs are one of the most studied models, being based on statistical learning frameworks of VC theory proposed by Vapnik (1982, 1995) and Chervonenkis (1974). In addition to performing linear classification, SVMs can efficiently perform non-linear classification using the ''kernel trick'', representing the data only through a set of pairwise similarity comparisons between the original data points using a kernel function, which transforms them into coordinates in a higher-dimensional feature space. Thus, SVMs use the kernel trick to implicitly map their inputs into high-dimensional feature spaces, where linear classification can be performed. Being max-margin models, SVMs are resilient to noisy data (e.g., misclassified examples). ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Axis-aligned Object

In geometry, an axis-aligned object (axis-parallel, axis-oriented) is an object in ''n''-dimensional space whose shape is aligned with the coordinate axes of the space. Examples are axis-aligned rectangles (or hyperrectangles), the ones with edges parallel to the coordinate axes. Minimum bounding boxes are often implicitly assumed to be axis-aligned. A more general case is rectilinear polygons, the ones with all sides parallel to coordinate axes or rectilinear polyhedra. Many problems in computational geometry allow for faster algorithms when restricted to (collections of) axis-oriented objects, such as axis-aligned rectangles or axis-aligned line segments. A different kind of example are axis-aligned ellipsoids, i.e., the ellipsoid An ellipsoid is a surface that can be obtained from a sphere by deforming it by means of directional Scaling (geometry), scalings, or more generally, of an affine transformation. An ellipsoid is a quadric surface; that is, a Surface (mathemat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Supervised Learning

In machine learning, supervised learning (SL) is a paradigm where a Statistical model, model is trained using input objects (e.g. a vector of predictor variables) and desired output values (also known as a ''supervisory signal''), which are often human-made labels. The training process builds a function that maps new data to expected output values. An optimal scenario will allow for the algorithm to accurately determine output values for unseen instances. This requires the learning algorithm to Generalization (learning), generalize from the training data to unseen situations in a reasonable way (see inductive bias). This statistical quality of an algorithm is measured via a ''generalization error''. Steps to follow To solve a given problem of supervised learning, the following steps must be performed: # Determine the type of training samples. Before doing anything else, the user should decide what kind of data is to be used as a Training, validation, and test data sets, trainin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Boosting (meta-algorithm)

In machine learning (ML), boosting is an ensemble metaheuristic for primarily reducing bias (as opposed to variance). It can also improve the stability and accuracy of ML classification and regression algorithms. Hence, it is prevalent in supervised learning for converting weak learners to strong learners. The concept of boosting is based on the question posed by Kearns and Valiant (1988, 1989):Michael Kearns(1988)''Thoughts on Hypothesis Boosting'' Unpublished manuscript (Machine Learning class project, December 1988) "Can a set of weak learners create a single strong learner?" A weak learner is defined as a classifier that is only slightly correlated with the true classification. A strong learner is a classifier that is arbitrarily well-correlated with the true classification. Robert Schapire answered the question in the affirmative in a paper published in 1990. This has had significant ramifications in machine learning and statistics, most notably leading to the developm ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |