
Locality-sensitive Hashing

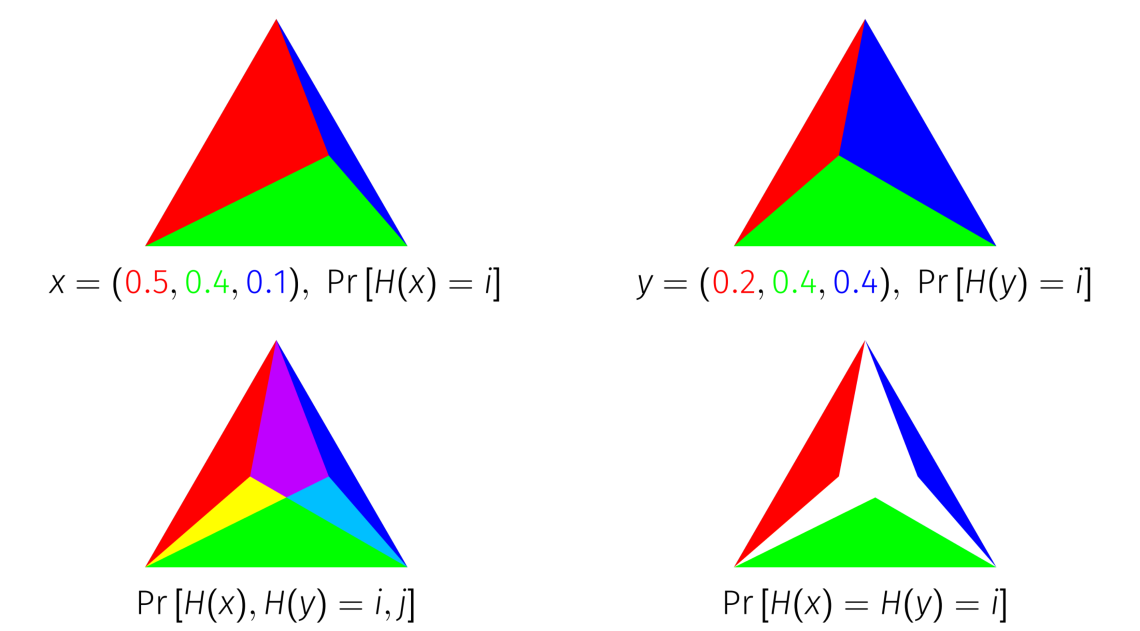

In computer science, locality-sensitive hashing (LSH) is an algorithmic technique that hashes similar input items into the same "buckets" with high probability. (The number of buckets is much smaller than the universe of possible input items.) Since similar items end up in the same buckets, this technique can be used for data clustering and nearest neighbor search. It differs from conventional hashing techniques in that hash collisions are maximized, not minimized. Alternatively, the technique can be seen as a way to reduce the dimensionality of high-dimensional data; high-dimensional input items can be reduced to low-dimensional versions while preserving relative distances between items. Hashing-based approximate nearest neighbor search algorithms generally use one of two main categories of hashing methods: either data-independent methods, such as locality-sensitive hashing (LSH); or data-dependent methods, such as locality-preserving hashing (LPH). Definitions An *LSH family* ...
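To make the bucketing idea concrete, here is a minimal sketch using random hyperplanes, one well-known data-independent LSH family. It is an illustration, not the definition elided above: the function names, the choice of family, and the parameters `dim`, `n_bits`, and `seed` are all assumptions introduced for this example.

```python
import random
from collections import defaultdict


def make_hyperplane_hash(dim, n_bits, seed=0):
    """Return a hash function mapping a vector to a tuple of n_bits bits.

    Each bit records on which side of a random hyperplane the vector lies,
    so vectors separated by a small angle tend to share most or all bits.
    (Illustrative helper; not taken from the source text.)
    """
    rng = random.Random(seed)
    planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]

    def h(vec):
        return tuple(
            int(sum(p * v for p, v in zip(plane, vec)) >= 0.0)
            for plane in planes
        )

    return h


def build_buckets(points, h):
    """Group named vectors by hash value; each distinct hash is one bucket."""
    buckets = defaultdict(list)
    for name, vec in points.items():
        buckets[h(vec)].append(name)
    return buckets
```

A query is answered by hashing it with the same `h` and comparing it only against the items in its bucket. Fewer bits give coarser buckets and more collisions; more bits give finer buckets at the risk of separating true neighbors.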

Computer Science

Computer science is the study of computation, automation, and information. Computer science ranges from theoretical disciplines (such as algorithms, theory of computation, information theory, and automation) to practical disciplines (including the design and implementation of hardware and software). Computer science is generally considered an area of academic research and distinct from computer programming. Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and for preventing security vulnerabilities. Computer graphics and computational geometry address the generation of images. Progr ...

Parallel RAM

In computer science, a parallel random-access machine (parallel RAM or PRAM) is a shared-memory abstract machine. As its name indicates, the PRAM is intended as the parallel-computing analogy to the random-access machine (RAM) (not to be confused with random-access memory). In the same way that the RAM is used by sequential-algorithm designers to model algorithmic performance (such as time complexity), the PRAM is used by parallel-algorithm designers to model parallel algorithmic performance (such as time complexity, where the number of processors assumed is typically also stated). Similar to the way in which the RAM model neglects practical issues, such as access time to cache memory versus main memory, the PRAM model neglects such issues as synchronization and communication, but provides any (problem-size-dependent) number of processors. Algorithm cost, for instance, is estimated using two parameters O(time) and O(time × processor_number). Read/write conflicts Read/write conflic ...

Jaccard Index

The Jaccard index, also known as the Jaccard similarity coefficient, is a statistic used for gauging the similarity and diversity of sample sets. It was developed by Grove Karl Gilbert in 1884 as his ratio of verification (v) and now is frequently referred to as the Critical Success Index in meteorology. It was later developed independently by Paul Jaccard, originally giving the French name *coefficient de communauté*, and independently formulated again by T. Tanimoto. Thus, the names Tanimoto index and Tanimoto coefficient are also used in some fields. They are, however, generally identical in taking the ratio of intersection over union. The Jaccard coefficient measures similarity between finite sample sets, and is defined as the size of the intersection divided by the size of the union of the sample sets: $J(A,B) = \frac{|A \cap B|}{|A \cup B|} = \frac{|A \cap B|}{|A| + |B| - |A \cap B|}$. Note that by design, $0 \le J(A,B) \le 1$. If *A* intersection *B* is empty, then *J*(*A*,*B*) = 0. The Jaccard coefficient is widely used in c ...
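The intersection-over-union definition translates directly into a few lines of Python. This is a minimal sketch; the function name `jaccard` is hypothetical, and returning 1.0 when both sets are empty is a convention assumed here, since the definition leaves that case as 0/0.

```python
def jaccard(a, b):
    """Jaccard index of two finite collections, treated as sets."""
    a, b = set(a), set(b)
    union = a | b
    if not union:
        # Both sets empty: 0/0 is undefined; 1.0 is an assumed convention.
        return 1.0
    return len(a & b) / len(union)
```

For example, `jaccard({1, 2, 3}, {2, 3, 4})` shares two of four distinct elements and evaluates to 0.5, while two disjoint non-empty sets give 0.0.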

Hamming Distance

In information theory, the Hamming distance between two strings of equal length is the number of positions at which the corresponding symbols are different. In other words, it measures the minimum number of *substitutions* required to change one string into the other, or the minimum number of *errors* that could have transformed one string into the other. In a more general context, the Hamming distance is one of several string metrics for measuring the edit distance between two sequences. It is named after the American mathematician Richard Hamming. A major application is in coding theory, more specifically to block codes, in which the equal-length strings are vectors over a finite field. Definition The Hamming distance between two equal-length strings of symbols is the number of positions at which the corresponding symbols are different. Examples The symbols may be letters, bits, or decimal digits, among other possibilities. For example, the Hamming distance between: ...
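The definition amounts to a position-by-position comparison, which the following minimal sketch implements. The function name `hamming_distance` and the sample strings in the usage note are illustrative choices, not the examples truncated above.

```python
def hamming_distance(s, t):
    """Count positions at which two equal-length sequences differ."""
    if len(s) != len(t):
        raise ValueError("Hamming distance requires sequences of equal length")
    return sum(a != b for a, b in zip(s, t))
```

With the illustrative inputs `"karolin"` and `"kathrin"`, the strings differ at three positions, so the function returns 3. It works equally on bit strings, lists of digits, or any other equal-length sequences.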

Digital Video Fingerprinting

Video fingerprinting or video hashing is a class of dimension reduction techniques in which a system identifies, extracts, and then summarizes characteristic components of a video as a unique perceptual hash or a set of multiple perceptual hashes, enabling that video to be uniquely identified. This technology has proven to be effective at searching and comparing video files. History and process Video fingerprinting was first developed into practical use by Philips in 2002 (Oostveen, J., Kalker, T., & Haitsma, J. (2002, March). Feature extraction and a database strategy for video fingerprinting. In *International Conference on Advances in Visual Information Systems* (pp. 117-128). Springer, Berlin, Heidelberg). Different methods exist for video fingerprinting. Van Oostveen relied on changes in patterns of image intensity over successive video frames. This makes the video fingerprinting robust against limited changes in color - or the transformation of color into gray scale of the original video ...

Audio Fingerprint

An acoustic fingerprint is a condensed digital summary, a fingerprint, deterministically generated from an audio signal, that can be used to identify an audio sample or quickly locate similar items in an audio database. Practical uses of acoustic fingerprinting include identifying songs, melodies, tunes, or advertisements; sound effect library management; and video file identification. Media identification using acoustic fingerprints can be used to monitor the use of specific musical works and performances on radio broadcast, records, CDs, streaming media and peer-to-peer networks. This identification has been used in copyright compliance, licensing, and other monetization schemes. Attributes A robust acoustic fingerprint algorithm must take into account the perceptual characteristics of the audio. If two files sound alike to the human ear, their acoustic fingerprints should match, even if their binary representations are quite different. Acoustic fingerprints are n ...

Nearest Neighbor Search

Nearest neighbor search (NNS), as a form of proximity search, is the optimization problem of finding the point in a given set that is closest (or most similar) to a given point. Closeness is typically expressed in terms of a dissimilarity function: the less similar the objects, the larger the function values. Formally, the nearest-neighbor (NN) search problem is defined as follows: given a set *S* of points in a space *M* and a query point *q* ∈ *M*, find the closest point in *S* to *q*. Donald Knuth in vol. 3 of *The Art of Computer Programming* (1973) called it the post-office problem, referring to an application of assigning to a residence the nearest post office. A direct generalization of this problem is a *k*-NN search, where we need to find the *k* closest points. Most commonly *M* is a metric space and dissimilarity is expressed as a distance metric, which is symmetric and satisfies the triangle inequality. Even more common, *M* is taken ...
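The formal statement can be read as an exhaustive scan over *S*, sketched below as a baseline. The function names and the default Euclidean distance (`math.dist`, available in Python 3.8 and later) are assumptions for illustration; any dissimilarity function can be passed in its place.

```python
import math


def nearest_neighbor(points, query, dist=math.dist):
    """Return the point in `points` with the smallest dissimilarity to `query`."""
    return min(points, key=lambda p: dist(p, query))


def k_nearest(points, query, k, dist=math.dist):
    """Return the k points closest to `query`, nearest first (k-NN search)."""
    return sorted(points, key=lambda p: dist(p, query))[:k]
```

For the points `[(0, 0), (3, 4), (1, 1)]` and the query `(1, 2)`, the nearest neighbor is `(1, 1)` at distance 1. The scan evaluates the dissimilarity once per stored point for every query, which is the cost that approximate methods such as locality-sensitive hashing aim to reduce.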

Gene Expression

Gene expression is the process by which information from a gene is used in the synthesis of a functional gene product, either protein or non-coding RNA, which ultimately affects a phenotype. These products are often proteins, but in non-protein-coding genes such as transfer RNA (tRNA) and small nuclear RNA (snRNA), the product is a functional non-coding RNA. Gene expression is summarized in the central dogma of molecular biology first formulated by Francis Crick in 1958, further developed in his 1970 article, and expanded by the subsequent discoveries of reverse transcription and RNA replication. The process of gene expression is used by all known life, including eukaryotes (including multicellular organisms) and prokaryotes (bacteria and archaea), and is utilized by viruses, to generate the macromolecular machinery for life. In genetics, gene expression is the most fundamental level at which the genotype gives rise to the phenotype, ...

VisualRank

VisualRank is a system for finding and ranking images by analysing and comparing their content, rather than searching image names, Web links or other text. Google scientists made their VisualRank work public in a paper describing applying PageRank to Google image search at the International World Wide Web Conference in Beijing in 2008. Methods Both computer vision techniques and locality-sensitive hashing (LSH) are used in the VisualRank algorithm. Consider an image search initiated by a text query. An existing search technique based on image metadata and surrounding text is used to retrieve the initial result candidates (PageRank), which along with other images in the index are clustered in a graph according to their similarity (which is precomputed). Centrality is then measured on the clustering, which will return the most canonical image(s) with respect to the query. The idea here is that agreement between users of the web about the image and its related concepts will ...

Genome-wide Association Study

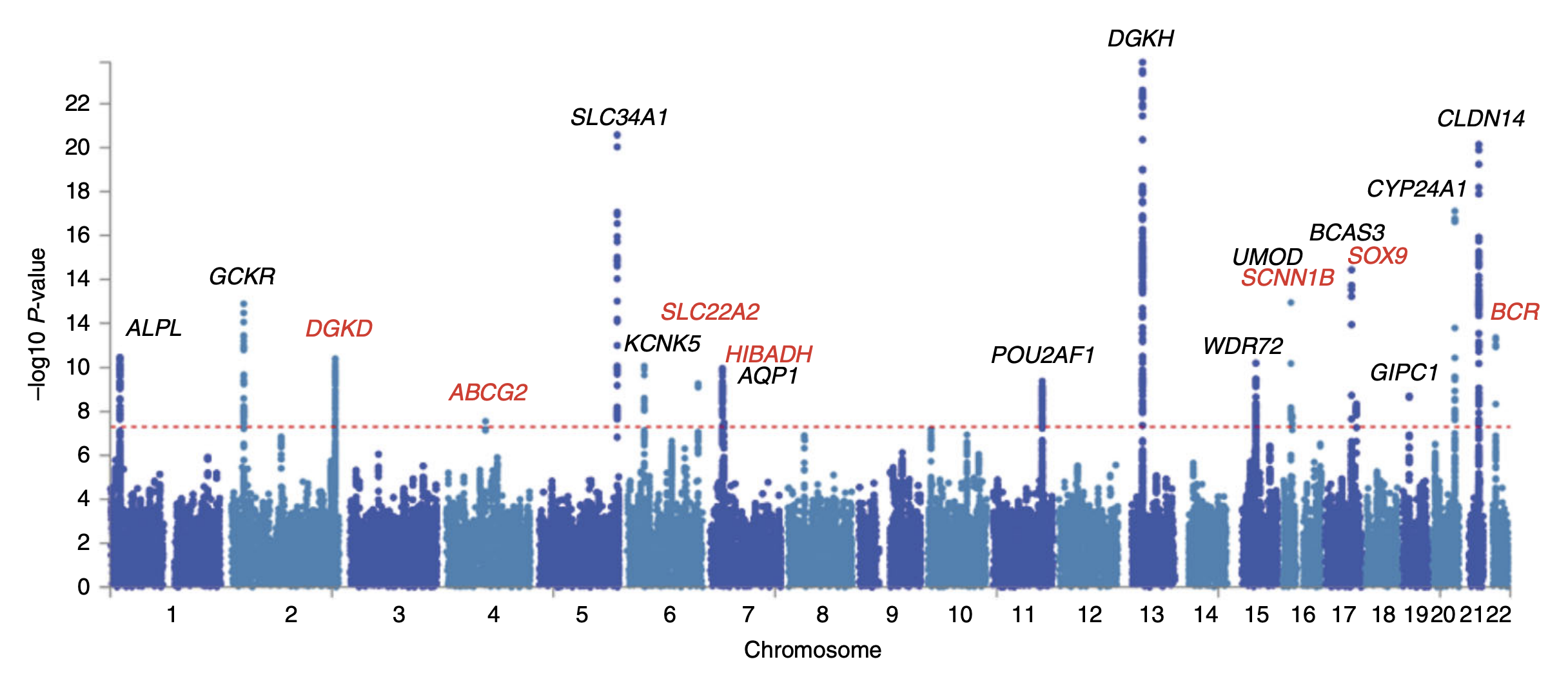

In genomics, a genome-wide association study (GWA study, or GWAS), also known as whole genome association study (WGA study, or WGAS), is an observational study of a genome-wide set of genetic variants in different individuals to see if any variant is associated with a trait. GWA studies typically focus on associations between single-nucleotide polymorphisms (SNPs) and traits like major human diseases, but can equally be applied to any other genetic variants and any other organisms. When applied to human data, GWA studies compare the DNA of participants having varying phenotypes for a particular trait or disease. These participants may be people with a disease (cases) and similar people without the disease (controls), or they may be people with different phenotypes for a particular trait, for example blood pressure. This approach is known as phenotype-first, in which the participants are classified first by their clinical manifestation(s), as oppose ...

Hierarchical Clustering

In data mining and statistics, hierarchical clustering (also called hierarchical cluster analysis or HCA) is a method of cluster analysis that seeks to build a hierarchy of clusters. Strategies for hierarchical clustering generally fall into two categories:

* Agglomerative: This is a "bottom-up" approach: Each observation starts in its own cluster, and pairs of clusters are merged as one moves up the hierarchy.
* Divisive: This is a "top-down" approach: All observations start in one cluster, and splits are performed recursively as one moves down the hierarchy.

In general, the merges and splits are determined in a greedy manner. The results of hierarchical clustering are usually presented in a dendrogram. The standard algorithm for hierarchical agglomerative clustering (HAC) has a time complexity of $\mathcal{O}(n^3)$ and requires $\Omega(n^2)$ memory, which makes it too slow for even medium data sets. However, for some special cases, optimal efficient agglomerative methods (of c ...
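The agglomerative ("bottom-up") strategy can be sketched as a greedy loop that repeatedly merges the two closest clusters. This is a naive illustration under stated assumptions, not the standard algorithm's implementation: the function name, the stopping rule `n_clusters`, and the use of single linkage (cluster distance taken as the closest pair of members) are all choices made for this example.

```python
def agglomerative(points, n_clusters, dist):
    """Greedy bottom-up clustering with single linkage (assumed for the sketch).

    Starts with one cluster per observation and merges the closest pair
    of clusters until only `n_clusters` remain.
    """
    clusters = [[p] for p in points]
    while len(clusters) > n_clusters:
        best = None  # (distance, i, j) of the closest cluster pair so far
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = min(dist(a, b) for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        clusters[i] = clusters[i] + clusters[j]  # merge j into i
        del clusters[j]
    return clusters
```

Calling `agglomerative([1, 2, 10, 11, 30], 3, lambda a, b: abs(a - b))` groups the values into `[[1, 2], [10, 11], [30]]`. Each merge step rescans all pairs of points, so the loop does roughly cubic work overall, which illustrates why the naive approach scales poorly.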

Space-filling Curve

In mathematical analysis, a space-filling curve is a curve whose range contains the entire 2-dimensional unit square (or more generally an *n*-dimensional unit hypercube). Because Giuseppe Peano (1858–1932) was the first to discover one, space-filling curves in the 2-dimensional plane are sometimes called *Peano curves*, but that phrase also refers to the Peano curve, the specific example of a space-filling curve found by Peano. Definition Intuitively, a curve in two or three (or higher) dimensions can be thought of as the path of a continuously moving point. To eliminate the inherent vagueness of this notion, Jordan in 1887 introduced the following rigorous definition, which has since been adopted as the precise description of the notion of a *curve*: In the most general form, the range of such a function may lie in an arbitrary topological space, but in the most commonly studied cases, the range will lie in a Euclidean space such as the 2-dimensional plane (a pla ...