
Link Prediction

In network theory, link prediction is the problem of predicting the existence of a link between two entities in a network. Examples include predicting friendship links among users in a social network, co-authorship links in a citation network, and interactions between genes and proteins in a biological network. Link prediction can also have a temporal aspect: given a snapshot of the set of links at time t, the goal is to predict the links at time t + 1. Link prediction is widely applicable. In e-commerce, it is often a subtask for recommending items to users. In the curation of citation databases, it can be used for record deduplication. In bioinformatics, it has been used to predict protein-protein interactions (PPI). In security-related applications, it is also used to identify hidden groups of terrorists and criminals. Problem definition: Consider a network G = (V, E), where V represents the entity nodes in the networ ...
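The temporal framing above (score candidate links from the snapshot at time t, then compare against time t + 1) can be sketched with a very simple heuristic. The common-neighbours score used here is an assumption chosen for illustration and is not taken from the text above; the function name `common_neighbor_scores` and the toy `snapshot` are likewise hypothetical.

```python
from itertools import combinations


def common_neighbor_scores(edges):
    """Rank currently unlinked node pairs by their number of shared neighbours.

    `edges` is an iterable of undirected (u, v) pairs observed at time t.
    Pairs with no shared neighbour are omitted from the ranking.
    """
    # Build an undirected adjacency map from the edge list.
    adjacency = {}
    for u, v in edges:
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)

    scores = {}
    for u, v in combinations(sorted(adjacency), 2):
        if v in adjacency[u]:
            continue  # already linked, nothing to predict
        shared = len(adjacency[u] & adjacency[v])
        if shared:
            scores[(u, v)] = shared

    # Highest score first; ties are broken alphabetically for determinism.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Toy snapshot of a small friendship network at time t.
snapshot = [("a", "b"), ("a", "c"), ("b", "c"), ("b", "d"), ("c", "d"), ("d", "e")]
ranking = common_neighbor_scores(snapshot)
```

In this sketch the top-ranked pair is the candidate most likely to appear at time t + 1 under the assumed heuristic; a real system would evaluate the ranking against the links actually observed later.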

Network Theory

Network theory is the study of graphs as a representation of either symmetric or asymmetric relations between discrete objects. In computer science and network science, network theory is a part of graph theory: a network can be defined as a graph in which nodes and/or edges have attributes (e.g. names). Network theory has applications in many disciplines, including statistical physics, particle physics, computer science, electrical engineering, biology, archaeology, economics, finance, operations research, climatology, ecology, public health, sociology, and neuroscience. Applications of network theory include logistical networks, the World Wide Web, the Internet, gene regulatory networks, metabolic networks, social networks, and epistemological networks; see List of network theory topics for more examples. Euler's solution of the Seven Bridges of Königsberg problem is considered to be the first true proof in the theory of networks. Network optimization: Ne ...

Entity Resolution

Record linkage (also known as data matching, data linkage, entity resolution, and many other terms) is the task of finding records in a data set that refer to the same entity across different data sources (e.g., data files, books, websites, and databases). Record linkage is necessary when joining different data sets based on entities that may or may not share a common identifier (e.g., database key, URI, national identification number), which may be due to differences in record shape, storage location, or curator style or preference. A data set that has undergone record-linkage-oriented reconciliation may be referred to as being "cross-linked". Naming conventions: "Record linkage" is the term used by statisticians, epidemiologists, and historians, among others, to describe the process of joining records from one data source with records from another that describe the same entity. However, many other terms are used for this process. Unfortunately, this profusion of terminology has led to few cross-refe ...

Collaborative Filtering

Collaborative filtering (CF) is a technique used by recommender systems (Francesco Ricci, Lior Rokach, and Bracha Shapira, "Introduction to Recommender Systems Handbook," Recommender Systems Handbook, Springer, 2011, pp. 1-35). Collaborative filtering has two senses, a narrow one and a more general one. In the newer, narrower sense, collaborative filtering is a method of making automatic predictions (filtering) about the interests of a user by collecting preferences or taste information from many users (collaborating). The underlying assumption of the collaborative filtering approach is that if a person A has the same opinion as a person B on an issue, A is more likely to have B's opinion on a different issue than that of a randomly chosen person. For example, a collaborative filtering recommendation system for preferences in television programming could make predictions about which television show a user should like given a partial list of that user's tastes (likes or dislike ...
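The underlying assumption (people who agreed in the past are likely to agree again) can be illustrated with a minimal user-based sketch. Everything here is hypothetical: the +1/-1 encoding of likes and dislikes, the `agreement` weighting, and the toy `ratings` table are assumptions made for illustration, not a description of any particular recommender system.

```python
def agreement(a, b):
    """Average agreement between two users over the items both have rated.

    Ratings are +1 (like) or -1 (dislike), so the result lies in [-1, 1].
    Users with no item in common get a neutral weight of 0.
    """
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    return sum(a[item] * b[item] for item in shared) / len(shared)


def predict(ratings, user, item):
    """Predict `user`'s opinion of `item` as an agreement-weighted vote."""
    numerator = 0.0
    denominator = 0.0
    for other, their_ratings in ratings.items():
        if other == user or item not in their_ratings:
            continue
        weight = agreement(ratings[user], their_ratings)
        numerator += weight * their_ratings[item]
        denominator += abs(weight)
    # With no informative neighbours, fall back to a neutral prediction.
    return numerator / denominator if denominator else 0.0


# Toy television preferences: +1 means like, -1 means dislike.
ratings = {
    "alice": {"news": 1, "drama": 1, "sports": -1},
    "bob": {"news": 1, "drama": 1, "sports": -1, "comedy": 1},
    "carol": {"news": -1, "drama": -1, "sports": 1, "comedy": -1},
}
alice_comedy = predict(ratings, "alice", "comedy")
```

Here alice agrees with bob on every shared show and disagrees with carol on every shared show, so both neighbours push the prediction for "comedy" toward a like.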

Markov Logic Network

A Markov logic network (MLN) is a probabilistic logic which applies the ideas of a Markov network to first-order logic, enabling uncertain inference. Markov logic networks generalize first-order logic in the sense that, in a certain limit, all unsatisfiable statements have a probability of zero and all tautologies have a probability of one. History: Work in this area was begun in 2003 by Pedro Domingos and Matt Richardson, who began to use the term MLN to describe it. Description: Briefly, an MLN is a collection of formulas from first-order logic, to each of which is assigned a real number, the weight. Taken as a Markov network, the vertices of the network graph are atomic formulas, and the edges are the logical connectives used to construct the formula. Each formula is considered to be a clique, and the Markov blanket is the set of formulas in which a given atom appears. A potential function is associated to each formula, and takes the value of one when the formula is true, and zero ...

Cosine Similarity

In data analysis, cosine similarity is a measure of similarity between two sequences of numbers. To define it, the sequences are viewed as vectors in an inner product space, and the cosine similarity is defined as the cosine of the angle between them, that is, the dot product of the vectors divided by the product of their lengths. It follows that the cosine similarity does not depend on the magnitudes of the vectors, but only on their angle. The cosine similarity always belongs to the interval [-1, 1]. For example, two proportional vectors have a cosine similarity of 1, two orthogonal vectors have a similarity of 0, and two opposite vectors have a similarity of -1. The cosine similarity is particularly used in positive space, where the outcome is neatly bounded in [0, 1]. For example, in information retrieval and text mining, each word is assigned a different coordinate and a document is represented by the vector of the numbers of occurrences of each word in the document. ...
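The definition above (dot product divided by the product of the lengths) translates directly into a few lines of code. This is a minimal sketch; the function name `cosine_similarity` and the choice to reject zero-length vectors with an error are assumptions made for illustration.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length numeric sequences."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        # The angle is undefined when either vector has zero length.
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


proportional = cosine_similarity([1, 2, 3], [2, 4, 6])
orthogonal = cosine_similarity([1, 0], [0, 1])
opposite = cosine_similarity([1, 2], [-1, -2])
```

The three calls correspond to the proportional, orthogonal, and opposite cases described above; up to floating-point rounding they evaluate to 1, 0, and -1 respectively.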

Probabilistic Soft Logic

Probabilistic Soft Logic (PSL) is a statistical relational learning (SRL) framework for modeling probabilistic and relational domains. It is applicable to a variety of machine learning problems, such as collective classification, entity resolution, link prediction, and ontology alignment. PSL combines two tools: first-order logic, with its ability to succinctly represent complex phenomena, and probabilistic graphical models, which capture the uncertainty and incompleteness inherent in real-world knowledge. More specifically, PSL uses "soft" logic as its logical component and Markov random fields as its statistical model. PSL provides sophisticated inference techniques for finding the most likely answer (i.e. the maximum a posteriori (MAP) state). The "softening" of the logical formulas makes inference a polynomial time operation rather than an NP-hard operation. Description: The SRL community has introduced multiple approaches that combine graphical models and first ...

Dot Product

In mathematics, the dot product or scalar product is an algebraic operation that takes two equal-length sequences of numbers (usually coordinate vectors) and returns a single number. (The term "scalar product" literally means "product with a scalar as a result"; it is also sometimes used for other symmetric bilinear forms, for example in a pseudo-Euclidean space.) In Euclidean geometry, the dot product of the Cartesian coordinates of two vectors is widely used. It is often called the inner product (or rarely projection product) of Euclidean space, even though it is not the only inner product that can be defined on Euclidean space (see Inner product space for more). Algebraically, the dot product is the sum of the products of the corresponding entries of the two sequences of numbers. Geometrically, it is the product of the Euclidean magnitudes of the two vectors and the cosine of the angle between them. These definitions are equivalent when using Cartesian coordinates. ...
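The equivalence of the algebraic and geometric definitions can be checked numerically. The sketch below is restricted to two-dimensional vectors so that the angle can be read off with `math.atan2`; the helper names `dot`, `norm`, and `geometric_dot_2d` are hypothetical and chosen only for this illustration.

```python
import math


def dot(u, v):
    """Algebraic definition: sum of products of corresponding entries."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))


def norm(u):
    """Euclidean magnitude of a vector."""
    return math.sqrt(dot(u, u))


def geometric_dot_2d(u, v):
    """Geometric definition for 2-D vectors: |u| * |v| * cos(angle)."""
    angle = math.atan2(v[1], v[0]) - math.atan2(u[1], u[0])
    return norm(u) * norm(v) * math.cos(angle)


u = (3.0, 0.0)
v = (2.0, 2.0)
algebraic = dot(u, v)               # 3*2 + 0*2
geometric = geometric_dot_2d(u, v)  # 3 * 2*sqrt(2) * cos(45 degrees)
```

Both values agree up to floating-point rounding, which is the equivalence in Cartesian coordinates that the text describes.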

Node2vec

node2vec is an algorithm to generate vector representations of nodes on a graph. The node2vec framework learns low-dimensional representations for nodes in a graph through the use of random walks through the graph starting at a target node. It is useful for a variety of machine learning applications. Besides reducing the engineering effort, representations learned by the algorithm lead to greater predictive power. node2vec follows the intuition that random walks through a graph can be treated like sentences in a corpus. Each node in a graph is treated like an individual word, and a random walk is treated as a sentence. By feeding these "sentences" into a skip-gram or continuous bag-of-words model, the paths found by random walks can be treated as sentences, and traditional data-mining techniques for documents can be used. The algorithm generalizes prior work which is based on rigid notions of network neighborhoods, and argues that the added flexibility in exploring ne ...
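The "walks as sentences" intuition can be sketched by generating the corpus that a skip-gram model would then consume. This sketch uses uniform random walks as a simplifying assumption; node2vec itself biases how the walk explores the neighborhood, and that bias is not reproduced here. The names `random_walk`, `build_corpus`, and `toy_graph` are hypothetical.

```python
import random


def random_walk(graph, start, length, rng):
    """Uniform random walk of at most `length` nodes, starting at `start`.

    `graph` maps each node to a list of its neighbours.
    """
    walk = [start]
    while len(walk) < length:
        neighbors = graph[walk[-1]]
        if not neighbors:
            break  # dead end: stop the walk early
        walk.append(rng.choice(neighbors))
    return walk


def build_corpus(graph, walks_per_node, length, seed=0):
    """Return a list of 'sentences', each a list of node names as strings."""
    rng = random.Random(seed)
    corpus = []
    for _ in range(walks_per_node):
        for node in graph:
            walk = random_walk(graph, node, length, rng)
            corpus.append([str(n) for n in walk])
    return corpus


# Small undirected graph stored as adjacency lists.
toy_graph = {
    "a": ["b", "c"],
    "b": ["a", "c"],
    "c": ["a", "b", "d"],
    "d": ["c"],
}
corpus = build_corpus(toy_graph, walks_per_node=2, length=5)
```

Each entry of `corpus` plays the role of a sentence whose words are node names, so it could be passed to any word-embedding trainer that accepts tokenized sentences.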

Graph Embedding

In topological graph theory, an embedding (also spelled imbedding) of a graph $G$ on a surface $\Sigma$ is a representation of $G$ on $\Sigma$ in which points of $\Sigma$ are associated with vertices and simple arcs (homeomorphic images of $[0,1]$) are associated with edges in such a way that:

* the endpoints of the arc associated with an edge $e$ are the points associated with the end vertices of $e$,
* no arcs include points associated with other vertices,
* two arcs never intersect at a point which is interior to either of the arcs.

Here a surface is a compact, connected 2-manifold. Informally, an embedding of a graph into a surface is a drawing of the graph on the surface in such a way that its edges may intersect only at their endpoints. It is well known that any finite graph can be embedded in 3-dimensional Euclidean space $\mathbb{R}^3$. A planar graph is one that can be embedded in 2-dimensional Euclidean space $\mathbb{R}^2$. Often, an embedding is regarded as an equivalence class ...

Similarity (Network Science)

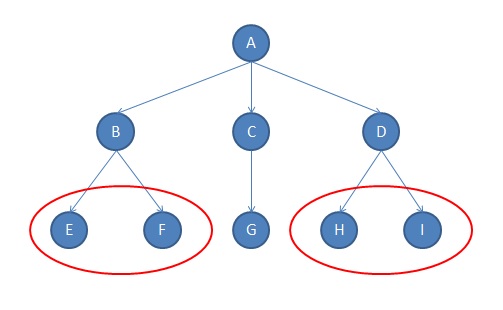

Similarity in network analysis occurs when two nodes (or other more elaborate structures) fall in the same equivalence class. There are three fundamental approaches to constructing measures of network similarity: structural equivalence, automorphic equivalence, and regular equivalence (Newman, M.E.J. 2010. Networks: An Introduction. Oxford, UK: Oxford University Press). There is a hierarchy of the three equivalence concepts: any set of structural equivalences is also a set of automorphic and regular equivalences, and any set of automorphic equivalences is also a set of regular equivalences. Not all regular equivalences are necessarily automorphic or structural, and not all automorphic equivalences are necessarily structural. Visualizing similarity and distance. Clustering tools: Agglomerative hierarchical clustering of nodes on the basis of the similarity of their profiles of ties to other nodes provides a joining tree or dendrogram that visualizes the degree of similarity among cases - and c ...