|

Collaborative Filtering

Collaborative filtering (CF) is, besides content-based filtering, one of two major techniques used by recommender systems.Francesco Ricci and Lior Rokach and Bracha ShapiraIntroduction to Recommender Systems Handbook, Recommender Systems Handbook, Springer, 2011, pp. 1–35 Collaborative filtering has two senses, a narrow one and a more general one. In the newer, narrower sense, collaborative filtering is a method of making automatic predictions (filtering) about a user's interests by utilizing preferences or taste information collected from many users (collaborating). This approach assumes that if persons ''A'' and ''B'' share similar opinions on one issue, they are more likely to agree on other issues compared to a random pairing of ''A'' with another person. For instance, a collaborative filtering system for television programming could predict which shows a user might enjoy based on a limited list of the user's tastes (likes or dislikes). These predictions are specific to t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Item-item Collaborative Filtering

Item-item collaborative filtering, or item-based, or item-to-item, is a form of collaborative filtering for recommender systems based on the similarity between items calculated using people's ratings of those items. Item-item collaborative filtering was invented and used by Amazon.com in 1998. It was first published in an academic conference in 2001. Earlier collaborative filtering systems based on rating similarity between users (known as user-user collaborative filtering) had several problems: * systems performed poorly when they had many items but comparatively few ratings * computing similarities between all pairs of users was expensive * user profiles changed quickly and the entire system model had to be recomputed Item-item models resolve these problems in systems that have more users than items. Item-item models use rating distributions ''per item'', not ''per user''. With more users than items, each item tends to have more ratings than each user, so an item's average ratin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of Computational statistics, statistical algorithms that can learn from data and generalise to unseen data, and thus perform Task (computing), tasks without explicit Machine code, instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed Neural network (machine learning), neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Vector Space

In mathematics and physics, a vector space (also called a linear space) is a set (mathematics), set whose elements, often called vector (mathematics and physics), ''vectors'', can be added together and multiplied ("scaled") by numbers called scalar (mathematics), ''scalars''. The operations of vector addition and scalar multiplication must satisfy certain requirements, called ''vector axioms''. Real vector spaces and complex vector spaces are kinds of vector spaces based on different kinds of scalars: real numbers and complex numbers. Scalars can also be, more generally, elements of any field (mathematics), field. Vector spaces generalize Euclidean vectors, which allow modeling of Physical quantity, physical quantities (such as forces and velocity) that have not only a Magnitude (mathematics), magnitude, but also a Orientation (geometry), direction. The concept of vector spaces is fundamental for linear algebra, together with the concept of matrix (mathematics), matrices, which ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Structure

In computer science, a data structure is a data organization and storage format that is usually chosen for Efficiency, efficient Data access, access to data. More precisely, a data structure is a collection of data values, the relationships among them, and the Function (computer programming), functions or Operator (computer programming), operations that can be applied to the data, i.e., it is an algebraic structure about data. Usage Data structures serve as the basis for abstract data types (ADT). The ADT defines the logical form of the data type. The data structure implements the physical form of the data type. Different types of data structures are suited to different kinds of applications, and some are highly specialized to specific tasks. For example, Relational database, relational databases commonly use B-tree indexes for data retrieval, while compiler Implementation, implementations usually use hash tables to look up Identifier (computer languages), identifiers. Data s ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Scalability

Scalability is the property of a system to handle a growing amount of work. One definition for software systems specifies that this may be done by adding resources to the system. In an economic context, a scalable business model implies that a company can increase sales given increased resources. For example, a package delivery system is scalable because more packages can be delivered by adding more delivery vehicles. However, if all packages had to first pass through a single warehouse for sorting, the system would not be as scalable, because one warehouse can handle only a limited number of packages. In computing, scalability is a characteristic of computers, networks, algorithms, networking protocols, programs and applications. An example is a search engine, which must support increasing numbers of users, and the number of topics it indexes. Webscale is a computer architectural approach that brings the capabilities of large-scale cloud computing companies into enterprise ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Sparsity

In numerical analysis and scientific computing, a sparse matrix or sparse array is a matrix in which most of the elements are zero. There is no strict definition regarding the proportion of zero-value elements for a matrix to qualify as sparse but a common criterion is that the number of non-zero elements is roughly equal to the number of rows or columns. By contrast, if most of the elements are non-zero, the matrix is considered dense. The number of zero-valued elements divided by the total number of elements (e.g., ''m'' × ''n'' for an ''m'' × ''n'' matrix) is sometimes referred to as the sparsity of the matrix. Conceptually, sparsity corresponds to systems with few pairwise interactions. For example, consider a line of balls connected by springs from one to the next: this is a sparse system, as only adjacent balls are coupled. By contrast, if the same line of balls were to have springs connecting each ball to all other balls, the system would correspond to a dense matrix. T ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Nearest Neighbor Search

Nearest neighbor search (NNS), as a form of proximity search, is the optimization problem of finding the point in a given set that is closest (or most similar) to a given point. Closeness is typically expressed in terms of a dissimilarity function: the less similar the objects, the larger the function values. Formally, the nearest-neighbor (NN) search problem is defined as follows: given a set ''S'' of points in a space ''M'' and a query point ''q'' ∈ ''M'', find the closest point in ''S'' to ''q''. Donald Knuth in vol. 3 of '' The Art of Computer Programming'' (1973) called it the post-office problem, referring to an application of assigning to a residence the nearest post office. A direct generalization of this problem is a ''k''-NN search, where we need to find the ''k'' closest points. Most commonly ''M'' is a metric space and dissimilarity is expressed as a distance metric, which is symmetric and satisfies the triangle inequality. Even more common, ''M'' is take ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Locality-sensitive Hashing

In computer science, locality-sensitive hashing (LSH) is a fuzzy hashing technique that hashes similar input items into the same "buckets" with high probability. (The number of buckets is much smaller than the universe of possible input items.) Since similar items end up in the same buckets, this technique can be used for data clustering and nearest neighbor search. It differs from conventional hashing techniques in that hash collisions are maximized, not minimized. Alternatively, the technique can be seen as a way to reduce the dimensionality of high-dimensional data; high-dimensional input items can be reduced to low-dimensional versions while preserving relative distances between items. Hashing-based approximate nearest-neighbor search algorithms generally use one of two main categories of hashing methods: either data-independent methods, such as locality-sensitive hashing (LSH); or data-dependent methods, such as locality-preserving hashing (LPH). Locality-preserving hashin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cosine Similarity

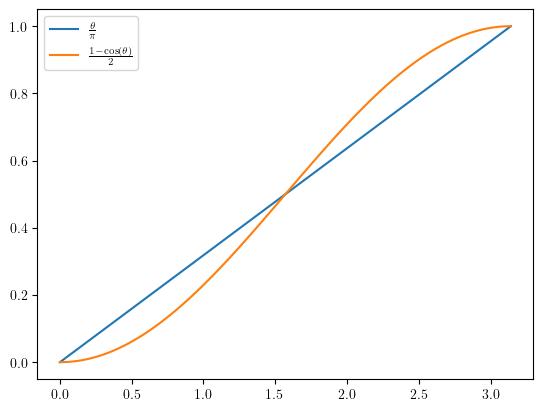

In data analysis, cosine similarity is a measure of similarity between two non-zero vectors defined in an inner product space. Cosine similarity is the cosine of the angle between the vectors; that is, it is the dot product of the vectors divided by the product of their lengths. It follows that the cosine similarity does not depend on the magnitudes of the vectors, but only on their angle. The cosine similarity always belongs to the interval 1, +1 For example, two proportional vectors have a cosine similarity of +1, two orthogonal vectors have a similarity of 0, and two opposite vectors have a similarity of −1. In some contexts, the component values of the vectors cannot be negative, in which case the cosine similarity is bounded in ,1/math>. For example, in information retrieval and text mining, each word is assigned a different coordinate and a document is represented by the vector of the numbers of occurrences of each word in the document. Cosine similarity then gives a u ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Pearson Product-moment Correlation Coefficient

In statistics, the Pearson correlation coefficient (PCC) is a correlation coefficient that measures linear correlation between two sets of data. It is the ratio between the covariance of two variables and the product of their standard deviations; thus, it is essentially a normalized measurement of the covariance, such that the result always has a value between −1 and 1. As with covariance itself, the measure can only reflect a linear correlation of variables, and ignores many other types of relationships or correlations. As a simple example, one would expect the age and height of a sample of children from a school to have a Pearson correlation coefficient significantly greater than 0, but less than 1 (as 1 would represent an unrealistically perfect correlation). Naming and history It was developed by Karl Pearson from a related idea introduced by Francis Galton in the 1880s, and for which the mathematical formula was derived and published by Auguste Bravais in 1844. The naming ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Weighted Average

The weighted arithmetic mean is similar to an ordinary arithmetic mean (the most common type of average), except that instead of each of the data points contributing equally to the final average, some data points contribute more than others. The notion of weighted mean plays a role in descriptive statistics and also occurs in a more general form in several other areas of mathematics. If all the weights are equal, then the weighted mean is the same as the arithmetic mean. While weighted means generally behave in a similar fashion to arithmetic means, they do have a few counterintuitive properties, as captured for instance in Simpson's paradox. Examples Basic example Given two school with 20 students, one with 30 test grades in each class as follows: :Morning class = :Afternoon class = The mean for the morning class is 80 and the mean of the afternoon class is 90. The unweighted mean of the two means is 85. However, this does not account for the difference in number of ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |