
Hyperexponential Distribution

In probability theory, a hyperexponential distribution is a continuous probability distribution in which the probability density function of the random variable ''X'' is given by
: f_X(x) = \sum_{i=1}^n f_{Y_i}(x)\;p_i,
where each ''Y_i'' is an exponentially distributed random variable with rate parameter ''λ_i'', and ''p_i'' is the probability that ''X'' will take on the form of the exponential distribution with rate ''λ_i''. It is named the ''hyper''exponential distribution because its coefficient of variation is greater than that of the exponential distribution, whose coefficient of variation is 1, and than that of the hypoexponential distribution, whose coefficient of variation is smaller than 1. While the exponential distribution is the continuous analogue of the geometric distribution, the hyperexponential distribution is not analogous to the hypergeometric distribution. The hyperexponential distribution is an example of a mixture density. An example of a hyperexponential random variable ...
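A minimal sketch of the definition above, assuming the weights ''p_i'' and rates ''λ_i'' are given as plain lists. The function names `hyperexp_pdf`, `hyperexp_sample` and `hyperexp_cv` are hypothetical. Sampling follows the mixture reading (pick phase ''i'' with probability ''p_i'', then draw from that exponential), and the coefficient of variation is computed from the first two moments E[X] = Σ p_i/λ_i and E[X²] = Σ 2p_i/λ_i².

```python
import math
import random

def hyperexp_pdf(x, probs, rates):
    """Density f_X(x) = sum_i p_i * lambda_i * exp(-lambda_i * x) for x >= 0, else 0."""
    if x < 0:
        return 0.0
    return sum(p * lam * math.exp(-lam * x) for p, lam in zip(probs, rates))

def hyperexp_sample(probs, rates, rng=random):
    """Pick phase i with probability p_i, then draw from Exponential(lambda_i)."""
    lam = rng.choices(rates, weights=probs, k=1)[0]
    return rng.expovariate(lam)

def hyperexp_cv(probs, rates):
    """Coefficient of variation sqrt(E[X^2] - E[X]^2) / E[X] from the exact moments."""
    m1 = sum(p / lam for p, lam in zip(probs, rates))          # E[X]
    m2 = sum(2 * p / lam**2 for p, lam in zip(probs, rates))   # E[X^2]
    return math.sqrt(m2 - m1**2) / m1
```

For p = (0.5, 0.5) and λ = (1, 3), `hyperexp_cv` returns about 1.22, above the exponential's value of 1. With a single phase it returns exactly 1, since the distribution is then just exponential.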

Hyperexponential, also known as hyperexponentiation.


Hyperexponential can refer to:
* The hyperexponential distribution in probability.
* Tetration. In mathematics, tetration (or hyper-4) is an operation based on iterated, or repeated, exponentiation. There is no standard notation for tetration, though \uparrow\uparrow and the left-exponent {}^{x}b are common. Under the definition as rep ...
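A short sketch of tetration as iterated exponentiation, assuming a non-negative integer height ''n'' and the common convention that height 0 gives 1. `tetrate` is a hypothetical helper name. The tower is built from the top down because exponentiation is right-associative: a↑↑3 means a^(a^a), not (a^a)^a.

```python
def tetrate(a, n):
    """Return a tetrated to height n: a^(a^(...^a)) with n copies of a.

    Each loop step puts one more copy of a at the base of the tower, so the
    result is evaluated right to left. Height 0 returns 1 by convention.
    """
    if n < 0:
        raise ValueError("height must be a non-negative integer")
    result = 1
    for _ in range(n):
        result = a ** result
    return result
```

For example, `tetrate(2, 3)` is 2^(2^2) = 16 and `tetrate(2, 4)` is 2^16 = 65536, which shows how quickly the values grow.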

Mixture Density

In probability and statistics, a mixture distribution is the probability distribution of a random variable that is derived from a collection of other random variables as follows: first, a random variable is selected by chance from the collection according to given probabilities of selection, and then the value of the selected random variable is realized. The underlying random variables may be random real numbers, or they may be random vectors (each having the same dimension), in which case the mixture distribution is a multivariate distribution. In cases where each of the underlying random variables is continuous, the outcome variable will also be continuous and its probability density function is sometimes referred to as a mixture density. The cumulative distribution function (and the probability density function if it exists) can be expressed as a convex combination (i.e., a weighted sum, with non-negative weights that sum to 1) of other distribution functions and density functions ...
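The two-stage construction and the convex-combination property can be sketched as below, assuming each component is supplied as a CDF function (for `mixture_cdf`) or as a sampler taking a random generator (for `mixture_sample`). Both names are hypothetical.

```python
import random

def mixture_cdf(x, weights, cdfs):
    """Convex combination F(x) = sum_i w_i * F_i(x) of the component CDFs."""
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-12:
        raise ValueError("weights must be non-negative and sum to 1")
    return sum(w * F(x) for w, F in zip(weights, cdfs))

def mixture_sample(weights, samplers, rng=random):
    """Stage 1: choose a component by its weight. Stage 2: realise that component's value."""
    sampler = rng.choices(samplers, weights=weights, k=1)[0]
    return sampler(rng)
```

With components Uniform(0, 1) and Uniform(1, 2) weighted 0.25 and 0.75, the mixture CDF at 1.5 is 0.25 · 1 + 0.75 · 0.5 = 0.625.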

Hyper-Erlang Distribution

In probability theory, a hyper-Erlang distribution is a continuous probability distribution which takes a particular Erlang distribution E_{l_i} with probability ''p_i''. A hyper-Erlang distributed random variable ''X'' has a probability density function given by
: A(x) = \sum_{i=1}^n p_i E_{l_i}(x)
where each ''p_i'' > 0, the ''p_i'' sum to 1, and each E_{l_i} is an Erlang distribution with ''l_i'' stages, each of which has parameter ''λ_i''. See also: phase-type distribution.
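A sketch of the density A(x) above, assuming the standard Erlang density λ^l x^(l−1) e^(−λx)/(l−1)! for ''l'' stages of rate ''λ''. The names `erlang_pdf`, `hyper_erlang_pdf` and `hyper_erlang_mean` are hypothetical. When every ''l_i'' = 1 the mixture reduces to the hyperexponential distribution, which gives a convenient check.

```python
import math

def erlang_pdf(x, k, lam):
    """Erlang density with k stages of rate lam: lam^k x^(k-1) e^(-lam x) / (k-1)!."""
    if x < 0:
        return 0.0
    return lam**k * x**(k - 1) * math.exp(-lam * x) / math.factorial(k - 1)

def hyper_erlang_pdf(x, probs, stages, rates):
    """A(x) = sum_i p_i * E_{l_i}(x), where E_{l_i} has l_i stages of rate lambda_i."""
    return sum(p * erlang_pdf(x, l, lam) for p, l, lam in zip(probs, stages, rates))

def hyper_erlang_mean(probs, stages, rates):
    """Each Erlang(l_i, lambda_i) has mean l_i / lambda_i; the mixture weights them by p_i."""
    return sum(p * l / lam for p, l, lam in zip(probs, stages, rates))
```

For instance, with p = (0.5, 0.5), l = (1, 3) and λ = (1, 2), the mean is 0.5 · 1 + 0.5 · 1.5 = 1.25.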

Phase-type Distribution

A phase-type distribution is a probability distribution constructed by a convolution or mixture of exponential distributions. It results from a system of one or more inter-related Poisson processes occurring in sequence, or phases. The sequence in which each of the phases occurs may itself be a stochastic process. The distribution can be represented by a random variable describing the time until absorption of a Markov process with one absorbing state. Each of the states of the Markov process represents one of the phases. It has a discrete-time equivalent, the discrete phase-type distribution. The set of phase-type distributions is dense in the field of all positive-valued distributions; that is, it can be used to approximate any positive-valued distribution.

Definition

Consider a continuous-time Markov process with ''m'' + 1 states, where ''m'' ≥ 1, such that the states 1, ..., ''m'' are transient states and state 0 is an absorbing state. Further, let the ...
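The "time until absorption" reading can be sketched directly. This assumes a hypothetical representation: `alpha` holds the starting probabilities of the transient states 1, ..., m (no initial mass on state 0), and `S` is the m × m sub-generator among the transient states, so the exit rate into state 0 from state ''i'' is −Σ_j S[i][j]. The mean uses the standard identity E[X] = α(−S)⁻¹**1**, solved here with plain Gaussian elimination rather than a library; `ph_mean` and `ph_sample` are illustrative names.

```python
import random

def ph_mean(alpha, S):
    """E[X] = alpha . m, where m solves (-S) m = 1 (expected time to absorption per phase)."""
    n = len(S)
    # Augmented matrix [-S | 1], reduced by Gaussian elimination with partial pivoting.
    M = [[-S[i][j] for j in range(n)] + [1.0] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    # Back substitution.
    m = [0.0] * n
    for i in reversed(range(n)):
        m[i] = (M[i][n] - sum(M[i][j] * m[j] for j in range(i + 1, n))) / M[i][i]
    return sum(a * mi for a, mi in zip(alpha, m))

def ph_sample(alpha, S, rng=random):
    """Simulate the Markov process until it is absorbed in state 0; return that time."""
    n = len(S)
    i = rng.choices(range(n), weights=alpha, k=1)[0]  # initial transient phase
    t = 0.0
    while True:
        rate = -S[i][i]                       # total rate of leaving phase i
        t += rng.expovariate(rate)            # holding time in phase i
        others = [j for j in range(n) if j != i]
        exit_rate = max(rate - sum(S[i][j] for j in others), 0.0)  # rate into state 0
        nxt = rng.choices(others + [None], weights=[S[i][j] for j in others] + [exit_rate], k=1)[0]
        if nxt is None:                       # absorbed
            return t
        i = nxt
```

Two phases in series with rate 1 each (an Erlang distribution) give a mean of 2. A diagonal `S` with `alpha` = (p_1, p_2) recovers the hyperexponential mean p_1/λ_1 + p_2/λ_2, illustrating how both convolutions and mixtures fit the same representation.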

Prony's Method

Prony analysis (Prony's method) was developed by Gaspard Riche de Prony in 1795. However, practical use of the method awaited the digital computer. Similar to the Fourier transform, Prony's method extracts valuable information from a uniformly sampled signal and builds a series of damped complex exponentials or damped sinusoids. This allows for the estimation of frequency, amplitude, phase and damping components of a signal.

The method

Let f(t) be a signal consisting of N evenly spaced samples. Prony's method fits a function
: \hat{f}(t) = \sum_{i=1}^{N} A_i e^{\sigma_i t} \cos(\omega_i t + \phi_i)
to the observed f(t). After some manipulation using Euler's formula, the following result is obtained, which allows more direct computation of the terms:
: \begin{align} \hat{f}(t) &= \sum_{i=1}^{N} A_i e^{\sigma_i t} \cos(\omega_i t + \phi_i) \\ &= \sum_{i=1}^{N} \frac{1}{2} A_i \left( e^{j\phi_i} e^{\lambda_i^{+} t} + e^{-j\phi_i} e^{\lambda_i^{-} t} \right) \end{align}
where:
* \lambda_i^{\pm} = \sigma_i \pm j \omega_i are the eigenvalues of the system,
* \sigma_i = -\omega_{0,i} \xi_i are the damping components, ...
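A minimal sketch of the least-squares variant of Prony's method, assuming NumPy is available, a known number of modes `p`, and a sample spacing `dt`. The function name `prony` and its return convention are hypothetical. It fits a linear-prediction recurrence to the samples, takes the roots z_i of the resulting characteristic polynomial, converts them to λ_i = ln(z_i)/dt = σ_i + jω_i, and then solves a Vandermonde system for the complex amplitudes. A real damped cosine contributes a conjugate pair of modes, matching the Euler-formula expansion above, so A_i = 2|c| and φ_i = arg c for the mode with positive ω_i.

```python
import numpy as np

def prony(x, p, dt):
    """Fit x[k] ~ sum_i c_i * z_i**k with p modes; return (lambda_i, c_i) with z_i = exp(lambda_i*dt)."""
    x = np.asarray(x, dtype=complex)
    n = len(x)
    # Linear prediction: x[k] + a_1 x[k-1] + ... + a_p x[k-p] = 0 for k = p..n-1.
    A = np.column_stack([x[p - m:n - m] for m in range(1, p + 1)])
    a, *_ = np.linalg.lstsq(A, -x[p:n], rcond=None)
    # Roots of z^p + a_1 z^(p-1) + ... + a_p are the discrete-time poles z_i.
    z = np.roots(np.concatenate(([1.0], a)))
    # Vandermonde system: row k holds z_i**k for each mode i.
    V = np.vander(z, N=n, increasing=True).T
    c, *_ = np.linalg.lstsq(V, x, rcond=None)
    lam = np.log(z) / dt  # sigma_i + j*omega_i (valid while |omega_i * dt| < pi)
    return lam, c

# Example: one damped cosine with A = 2, sigma = -0.5, omega = 2*pi, phi = 0.3.
dt = 0.05
t = np.arange(100) * dt
x = 2.0 * np.exp(-0.5 * t) * np.cos(2 * np.pi * t + 0.3)
lam, c = prony(x, 2, dt)
i = int(np.argmax(lam.imag))  # the mode with positive frequency
```

On noise-free data like this the parameters are recovered to rounding error. With noisy samples the least-squares fit becomes sensitive to the choice of `p`, which is one reason many practical variants exist.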

Heavy-tailed Distribution

In probability theory, heavy-tailed distributions are probability distributions whose tails are not exponentially bounded: that is, they have heavier tails than the exponential distribution. In many applications it is the right tail of the distribution that is of interest, but a distribution may have a heavy left tail, or both tails may be heavy. There are three important subclasses of heavy-tailed distributions: the fat-tailed distributions, the long-tailed distributions and the subexponential distributions. In practice, all commonly used heavy-tailed distributions belong to the subexponential class. There is still some discrepancy over the use of the term heavy-tailed. There are two other definitions in use. Some authors use the term to refer to those distributions which do not have all their power moments finite; and some others to those distributions that do not have a finite variance. The definition given here is the most general in use, and includes all d ...
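"Not exponentially bounded" is usually made precise as: for every λ > 0, e^(λx) P(X > x) is unbounded as x → ∞. The sketch below is only a numerical illustration of that condition on a finite grid and cannot prove a limit. It assumes a Pareto law with tail (x_m/x)^α as the heavy-tailed example and an exponential law as the light-tailed one; all function names are hypothetical, and the computation stays in log space to avoid overflow.

```python
import math

def log_tilted_tail(log_survival, lam, x):
    """log(e^(lam*x) * P(X > x)), computed in log space to avoid overflow."""
    return lam * x + log_survival(x)

def pareto_log_survival(x, alpha=2.0, xm=1.0):
    """log P(X > x) for a Pareto(alpha, xm) law: polynomial tail (xm/x)^alpha."""
    return alpha * math.log(xm / x) if x > xm else 0.0

def exponential_log_survival(x, rate=1.0):
    """log P(X > x) for an Exponential(rate) law: tail e^(-rate*x)."""
    return -rate * x if x > 0 else 0.0

def tilted_tail_grows(log_survival, lam, xs):
    """True if the tilted tail increases along the increasing grid xs (a numerical hint only)."""
    vals = [log_tilted_tail(log_survival, lam, x) for x in xs]
    return all(b > a for a, b in zip(vals, vals[1:]))
```

On the grid (100, 1000, 10000), the Pareto tilted tail grows for λ = 0.1, while the Exponential(1) tilted tail shrinks for λ = 0.5. For λ = 1.5 the exponential's tilted tail also grows, which is why the definition quantifies over every λ > 0 rather than a single one.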

Probability Distribution

In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of different possible outcomes for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if ''X'' is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of ''X'' would take the value 0.5 (1 in 2 or 1/2) for ''X'' = heads, and 0.5 for ''X'' = tails (assuming that the coin is fair). Examples of random phenomena include the weather conditions at some future date, the height of a randomly selected person, the fraction of male students in a school, the results of a survey to be conducted, etc.

Introduction

A probability distribution is a mathematical description of the probabilities of events, subsets of the sample space. The sample space, often denoted by \Omega, is the set of all possible outcomes of a random ...
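For a finite sample space, a discrete distribution can be sketched as a mapping from outcomes to probabilities, with the probability of an event (a subset of the sample space) obtained by summing over its outcomes. The representation as a `dict` and the helper name `event_probability` are assumptions made for illustration; exact fractions avoid rounding in the checks.

```python
from fractions import Fraction

def event_probability(pmf, event):
    """P(E) = sum of pmf[o] over outcomes o in E, for a distribution given as a dict."""
    if any(p < 0 for p in pmf.values()) or sum(pmf.values()) != 1:
        raise ValueError("not a probability distribution")
    return sum(pmf[o] for o in event if o in pmf)

# The fair coin from the text, and a fair six-sided die for comparison.
coin = {"heads": Fraction(1, 2), "tails": Fraction(1, 2)}
die = {face: Fraction(1, 6) for face in range(1, 7)}
```

For example, the event "the die shows an even number" is the subset {2, 4, 6}, whose probability is 3 · 1/6 = 1/2.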

Moment-generating Function

In probability theory and statistics, the moment-generating function of a real-valued random variable is an alternative specification of its probability distribution. Thus, it provides the basis of an alternative route to analytical results compared with working directly with probability density functions or cumulative distribution functions. There are particularly simple results for the moment-generating functions of distributions defined by the weighted sums of random variables. However, not all random variables have moment-generating functions. As its name implies, the moment-generating function can be used to compute a distribution’s moments: the ''n''th moment about 0 is the ''n''th derivative of the moment-generating function, evaluated at 0. In addition to real-valued distributions (univariate distributions), moment-generating functions can be defined for vector- or matrix-valued random variables, and can even be extended to more general cases. The moment-generating function ...
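The statement that the ''n''th moment is the ''n''th derivative of the moment-generating function at 0 can be checked numerically. This sketch assumes an exponential distribution with rate λ, whose MGF is M(t) = λ/(λ − t) for t < λ, and approximates the first two derivatives by central finite differences. The function names and the step size `h` are illustrative choices.

```python
def exp_mgf(t, lam):
    """MGF of Exponential(lam): M(t) = lam / (lam - t), which exists only for t < lam."""
    if t >= lam:
        raise ValueError("MGF diverges for t >= lam")
    return lam / (lam - t)

def moment_from_mgf(mgf, n, h=1e-3):
    """Approximate the nth derivative of mgf at 0 (n = 1 or 2) by central differences."""
    if n == 1:
        return (mgf(h) - mgf(-h)) / (2 * h)
    if n == 2:
        return (mgf(h) - 2 * mgf(0.0) + mgf(-h)) / h**2
    raise ValueError("this sketch only handles n = 1 or n = 2")
```

For λ = 2 the exact moments are E[X] = 1/λ = 0.5 and E[X²] = 2/λ² = 0.5. The approximations agree to roughly 1e-7 at h = 1e-3. The guard in `exp_mgf` reflects the remark that an MGF need not exist for every t.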

Telephony

Telephony is the field of technology involving the development, application, and deployment of telecommunication services for the purpose of electronic transmission of voice, fax, or data between distant parties. The history of telephony is intimately linked to the invention and development of the telephone. The term commonly refers to the construction or operation of telephones and telephonic systems, and to a system of telecommunications in which telephonic equipment is employed in the transmission of speech or other sound between points, with or without the use of wires. The term is also used frequently to refer to computer hardware, software, and computer network systems that perform functions traditionally performed by telephone equipment. In this context the technology is specifically referred to as Internet telephony, or voice over Internet Protocol (VoIP).

Overview

The first telephones were connected directly in pairs. Each user had a separate telephone wire ...

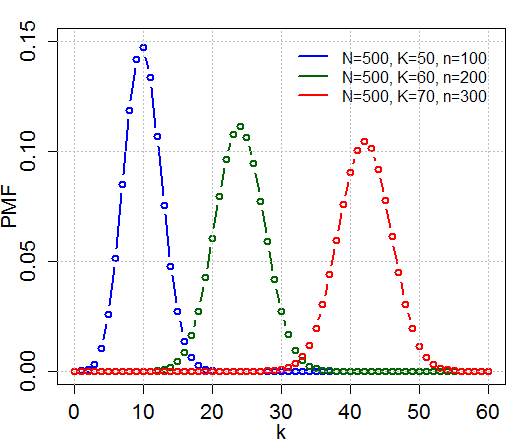

Hypergeometric Distribution

In probability theory and statistics, the hypergeometric distribution is a discrete probability distribution that describes the probability of k successes (random draws for which the object drawn has a specified feature) in n draws, ''without'' replacement, from a finite population of size N that contains exactly K objects with that feature, wherein each draw is either a success or a failure. In contrast, the binomial distribution describes the probability of k successes in n draws ''with'' replacement.

Definitions

Probability mass function

The following conditions characterize the hypergeometric distribution:
* The result of each draw (the elements of the population being sampled) can be classified into one of two mutually exclusive categories (e.g. Pass/Fail or Employed/Unemployed).
* The probability of a success changes on each draw, as each draw decreases the population (''sampling without replacement'' from a finite population).

A random variable X follows the hypergeometric ...
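The probability mass function that follows from these conditions is P(X = k) = C(K, k) C(N − K, n − k) / C(N, n): choose k of the K successes and n − k of the N − K failures, out of all C(N, n) equally likely samples. A minimal sketch using exact fractions follows. `hypergeom_pmf` is a hypothetical name, and `math.comb` returns 0 when asked for more items than exist, which handles the edges of the support.

```python
from fractions import Fraction
from math import comb

def hypergeom_pmf(k, N, K, n):
    """P(X = k) for k successes in n draws without replacement from N items, K of them successes."""
    if k < 0 or k > n:
        return Fraction(0)
    # comb(K, k) is 0 when k > K, and comb(N - K, n - k) is 0 when n - k > N - K.
    return Fraction(comb(K, k) * comb(N - K, n - k), comb(N, n))
```

For N = 50, K = 5 and n = 10, the probability of drawing exactly 4 successes is 5 · 8145060 / 10272278170 ≈ 0.00396, and the probabilities over k = 0, ..., 10 sum exactly to 1.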

Probability Theory

Probability theory is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion). Although it is not possible to perfectly predict random events, much can be said about their behavior. Two major results in probability ...

Geometric Distribution

In probability theory and statistics, the geometric distribution is either of two discrete probability distributions:
* The probability distribution of the number ''X'' of Bernoulli trials needed to get one success, supported on the set \{1, 2, 3, \ldots\};
* The probability distribution of the number ''Y'' = ''X'' − 1 of failures before the first success, supported on the set \{0, 1, 2, \ldots\}.

Which of these is called the geometric distribution is a matter of convention and convenience. These two different geometric distributions should not be confused with each other. Often, the name ''shifted'' geometric distribution is adopted for the former one (distribution of the number ''X''); however, to avoid ambiguity, it is considered wise to indicate which is intended by mentioning the support explicitly. The geometric distribution gives the probability that the first occurrence of success requires ''k'' independent trials, each with success probability ''p''. If the probability of success ...
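The two conventions differ only by a shift of one, which a short sketch makes explicit: P(X = k) = (1 − p)^(k−1) p on {1, 2, 3, ...} and P(Y = k) = (1 − p)^k p on {0, 1, 2, ...}, so P(X = k) = P(Y = k − 1). The function names are hypothetical, and passing `p` as a `Fraction` keeps the comparisons exact.

```python
from fractions import Fraction

def geom_trials_pmf(k, p):
    """P(X = k) = (1 - p)^(k - 1) p: first success on trial k, support {1, 2, 3, ...}."""
    return (1 - p) ** (k - 1) * p if k >= 1 else 0

def geom_failures_pmf(k, p):
    """P(Y = k) = (1 - p)^k p: k failures before the first success, support {0, 1, 2, ...}."""
    return (1 - p) ** k * p if k >= 0 else 0
```

The shift also carries over to the means: E[X] = 1/p while E[Y] = (1 − p)/p. This is why stating the support, as the text recommends, removes the ambiguity.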