
Hypotheses Suggested By The Data

In statistics, hypotheses suggested by a given dataset, when tested with the same dataset that suggested them, are likely to be accepted even when they are not true. This is because circular reasoning (double dipping) would be involved: something seems true in the limited data set; therefore we hypothesize that it is true in general; therefore we wrongly test it on the same, limited data set, which seems to confirm that it is true. Generating hypotheses based on data already observed, in the absence of testing them on new data, is referred to as post hoc theorizing (from Latin post hoc, "after this"). The correct procedure is to test any hypothesis on a data set that was not used to generate the hypothesis.

The general problem

Testing a hypothesis suggested by the data can very easily result in false positives (type I errors). If one looks long enough and in enough different places, eventually data can be found to support any hypothesis. Yet, these positive data do not by ...
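The circularity described above can be illustrated with a small simulation. This is a minimal sketch under stated assumptions, not a reference procedure: every variable is pure standard-normal noise (so every null hypothesis is true), the variance is treated as known so a simple z-test applies, and the names `z_test_p_value` and `false_positive_rates` are hypothetical helpers introduced only for this illustration.

```python
import math
import random


def z_test_p_value(sample):
    """Two-sided p-value for H0: mean = 0, assuming unit-variance normal data."""
    z = sum(sample) / math.sqrt(len(sample))
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rates(trials=1000, n_vars=20, n_obs=50, alpha=0.05, seed=0):
    """Return (same-data rate, fresh-data rate) of rejecting a true null."""
    rng = random.Random(seed)
    same_hits = 0
    fresh_hits = 0
    for _ in range(trials):
        # Assumption: all variables are noise with true mean 0.
        data = [[rng.gauss(0.0, 1.0) for _ in range(n_obs)] for _ in range(n_vars)]
        # The "hypothesis suggested by the data": the variable whose
        # sample mean looks most extreme.
        suggested = max(range(n_vars), key=lambda j: abs(sum(data[j])))
        # Circular test: reuse the data that suggested the hypothesis.
        if z_test_p_value(data[suggested]) < alpha:
            same_hits += 1
        # Correct test: new observations of the same (noise) variable.
        fresh = [rng.gauss(0.0, 1.0) for _ in range(n_obs)]
        if z_test_p_value(fresh) < alpha:
            fresh_hits += 1
    return same_hits / trials, fresh_hits / trials


if __name__ == "__main__":
    same, fresh = false_positive_rates()
    print(f"tested on the suggesting data: {same:.2f}")
    print(f"tested on fresh data:          {fresh:.2f}")
```

Under these assumptions, the rejection rate on the suggesting data should come out far above the nominal 5% level, while the rate on fresh data should stay close to it.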

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006), The Oxford Dictionary of Statistical Terms, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling as ...

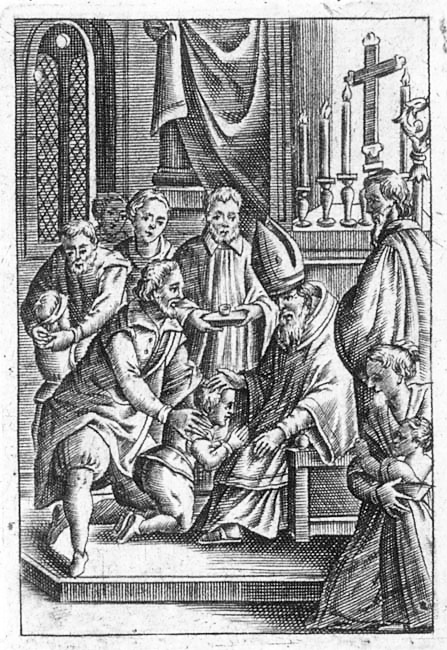

Confirmation Sample

In Christian denominations that practice infant baptism, confirmation is seen as the sealing of the covenant created in baptism. Those being confirmed are known as confirmands. For adults, it is an affirmation of belief. It involves laying on of hands. Catholicism views confirmation as a sacrament. The sacrament is called chrismation in Eastern Christianity, where it is conferred immediately after baptism. In Western Christianity, confirmation is ordinarily administered when a child reaches the age of reason or early adolescence. When an adult is baptized, the sacrament is conferred immediately after baptism in the same ceremony. Among those Christians who practice teen-aged confirmation, the practice may be perceived, secondarily, as a "coming of age" rite. In many Protestant denominations, such as the Anglican, Lutheran, Methodist and Reformed traditions, confirmation is a rite that often includes a profession of faith by an already baptized person. Confirma ...

Post Hoc Analysis

In a scientific study, post hoc analysis (from Latin post hoc, "after this") consists of statistical analyses that were specified after the data were seen. They are usually used to uncover specific differences between three or more group means when an analysis of variance (ANOVA) test is significant. This typically creates a multiple testing problem because each potential analysis is effectively a statistical test. Multiple testing procedures are sometimes used to compensate, but that is often difficult or impossible to do precisely. Post hoc analysis that is conducted and interpreted without adequate consideration of this problem is sometimes called data dredging by critics because the statistical associations that it fin ...

P Hacking

Data dredging (also known as data snooping or p-hacking) is the misuse of data analysis to find patterns in data that can be presented as statistically significant, thus dramatically increasing and understating the risk of false positives. This is done by performing many statistical tests on the data and only reporting those that come back with significant results. The process of data dredging involves testing multiple hypotheses using a single data set by exhaustively searching, perhaps for combinations of variables that might show a correlation, and perhaps for groups of cases or observations that show differences in their mean or in their breakdown by some other variable. Conventional tests of statistical significance are based on the probability that a particular result would arise if chance alone were at work, and necessarily accept some risk of mistaken conclusions of a certain type (mistaken rejections of the null hypothesis). This level of risk is called the ...
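How quickly the risk of a false positive grows with the number of tests can be sketched with one line of arithmetic. The example below assumes the tests are independent and that every null hypothesis is true, in which case the chance of at least one mistaken rejection among m tests at level alpha is 1 - (1 - alpha)^m. The function name `familywise_error_rate` is a hypothetical helper for this illustration.

```python
def familywise_error_rate(alpha, m):
    """Chance of at least one false positive among m independent tests.

    Assumes every null hypothesis is true and each test is run at level alpha.
    """
    if m < 0:
        raise ValueError("m must be non-negative")
    return 1.0 - (1.0 - alpha) ** m


if __name__ == "__main__":
    for m in (1, 5, 20, 100):
        rate = familywise_error_rate(0.05, m)
        print(f"{m:>3} tests at alpha=0.05 -> P(at least one false positive) = {rate:.3f}")
```

Reporting only the tests that came back significant hides m from the reader, which is why the stated per-test risk understates the real one.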

HARKing

HARKing (hypothesizing after the results are known) is an acronym coined by social psychologist Norbert Kerr that refers to the questionable research practice of “presenting a post hoc hypothesis in the introduction of a research report as if it were an a priori hypothesis”. Hence, a key characteristic of HARKing is that post hoc hypothesizing is falsely portrayed as a priori hypothesizing. HARKing may occur when a researcher tests an a priori hypothesis but then omits that hypothesis from their research report after they find out the results of their test; inappropriate forms of post hoc analysis and/or post hoc theorizing then may lead to a post hoc hypothesis.

Types

Several types of HARKing have been distinguished, including:

THARKing: Transparently hypothesizing after the results are known, rather than the secretive, undisclosed HARKing that was first proposed by Kerr (1998). In this case, researchers openly declare that they developed their hypotheses after they observe ...

Exploratory Data Analysis

In statistics, exploratory data analysis (EDA) is an approach of analyzing data sets to summarize their main characteristics, often using statistical graphics and other data visualization methods. A statistical model can be used or not, but primarily EDA is for seeing what the data can tell us beyond the formal modeling, and it thereby contrasts with traditional hypothesis testing. Exploratory data analysis has been promoted by John Tukey since 1970 to encourage statisticians to explore the data, and possibly formulate hypotheses that could lead to new data collection and experiments. EDA is different from initial data analysis (IDA), which focuses more narrowly on checking assumptions required for model fitting and hypothesis testing, and handling missing values and making transformations of variables as needed. EDA encompasses IDA.

Overview

Tukey defined data analysis in 1961 as: "Procedures for analyzing data, techniques for interpreting the results of such procedures, ways of pla ...

Data Dredging

Data dredging (also known as data snooping or p-hacking) is the misuse of data analysis to find patterns in data that can be presented as statistically significant, thus dramatically increasing and understating the risk of false positives. This is done by performing many statistical tests on the data and only reporting those that come back with significant results. The process of data dredging involves testing multiple hypotheses using a single data set by exhaustively searching, perhaps for combinations of variables that might show a correlation, and perhaps for groups of cases or observations that show differences in their mean or in their breakdown by some other variable. Conventional tests of statistical significance are based on the probability that a particular result would arise if chance alone were at work, and necessarily accept some risk of mistaken conclusions of a certain type (mistaken rejections of the null hypothesis). This level of risk is called the s ...

Data Analysis

Data analysis is a process of inspecting, cleansing, transforming, and modeling data with the goal of discovering useful information, informing conclusions, and supporting decision-making. Data analysis has multiple facets and approaches, encompassing diverse techniques under a variety of names, and is used in different business, science, and social science domains. In today's business world, data analysis plays a role in making decisions more scientific and helping businesses operate more effectively. Data mining is a particular data analysis technique that focuses on statistical modeling and knowledge discovery for predictive rather than purely descriptive purposes, while business intelligence covers data analysis that relies heavily on aggregation, focusing mainly on business information. In statistical applications, data analysis can be divided into descriptive statistics, exploratory data analysis (EDA), and confirmatory data analysis (CDA). EDA focuses on discovering ne ...

Bonferroni Correction

In statistics, the Bonferroni correction is a method to counteract the multiple comparisons problem.

Background

The method is named for its use of the Bonferroni inequalities. An extension of the method to confidence intervals was proposed by Olive Jean Dunn. Statistical hypothesis testing is based on rejecting the null hypothesis if the likelihood of the observed data under the null hypothesis is low. If multiple hypotheses are tested, the probability of observing a rare event increases, and therefore, the likelihood of incorrectly rejecting a null hypothesis (i.e., making a Type I error) increases. The Bonferroni correction compensates for that increase by testing each individual hypothesis at a significance level of $\alpha/m$, where $\alpha$ is the desired overall alpha level and $m$ is the number of hypotheses. For example, if a trial is testing $m = 20$ hypotheses with a desired $\alpha = 0.05$, then the Bonferroni correction would test each individual hypothesis at $\alpha = 0.05/20 =$ ...
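The per-test threshold described above can be sketched in a few lines. This is an illustrative sketch only: the function names `bonferroni_threshold` and `bonferroni_reject` are hypothetical, the p-values in the usage example are made up for demonstration, and rejecting when a p-value is at or below the threshold is one common convention assumed here.

```python
def bonferroni_threshold(alpha, m):
    """Per-test significance level alpha / m for m hypotheses."""
    if m < 1:
        raise ValueError("m must be at least 1")
    return alpha / m


def bonferroni_reject(p_values, alpha=0.05):
    """Return one boolean per p-value: True if that null hypothesis is rejected."""
    threshold = bonferroni_threshold(alpha, len(p_values))
    return [p <= threshold for p in p_values]


if __name__ == "__main__":
    # Made-up p-values, for illustration only.
    p_values = [0.001, 0.02, 0.04]
    print("per-test threshold:", bonferroni_threshold(0.05, len(p_values)))
    print("rejected:", bonferroni_reject(p_values))
```

Dividing by the number of hypotheses keeps the overall chance of any false positive at or below the desired alpha, at the cost of making each individual test more conservative.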

Biometrika

Biometrika is a peer-reviewed scientific journal published by Oxford University Press for the Biometrika Trust. The editor-in-chief is Paul Fearnhead (Lancaster University). The principal focus of this journal is theoretical statistics. It was established in 1901 and originally appeared quarterly. It changed to three issues per year in 1977 but returned to quarterly publication in 1992.

History

Biometrika was established in 1901 by Francis Galton, Karl Pearson, and Raphael Weldon to promote the study of biometrics. The history of Biometrika is covered by Cox (2001). The name of the journal was chosen by Pearson, but Francis Edgeworth insisted that it be spelt with a "k" and not a "c". Since the 1930s, it has been a journal for statistical theory and methodology. Galton's role in the journal was essentially that of a patron; the journal was run by Pearson and Weldon and, after Weldon's death in 1906, by Pearson alone until he died in 1936. In the early days, the American ...

Henry Scheffé

Henry Scheffé (April 11, 1907 – July 5, 1977) was an American statistician. He is known for the Lehmann–Scheffé theorem and Scheffé's method.

Education and career

Scheffé was born in New York City on April 11, 1907, the child of German immigrants. The family moved to Islip, New York, where Scheffé went to high school. He graduated in 1924, took night classes at Cooper Union, and a year later entered the Polytechnic Institute of Brooklyn. He transferred to the University of Wisconsin in 1928, and earned a bachelor's degree in mathematics there in 1931. Staying at Wisconsin, he married his wife Miriam in 1934 and finished his PhD in 1935, on the subject of differential equations, under the supervision of Rudolf Ernest Langer. After teaching mathematics at Wisconsin, Oregon State University, and Reed College, Scheffé moved to Princeton University in 1941. At Princeton, he began working in statistics instead of in pure mathematics, and assisted the U.S. war effort as a ...

Analysis Of Variance

Analysis of variance (ANOVA) is a collection of statistical models and their associated estimation procedures (such as the "variation" among and between groups) used to analyze the differences among means. ANOVA was developed by the statistician Ronald Fisher. ANOVA is based on the law of total variance, where the observed variance in a particular variable is partitioned into components attributable to different sources of variation. In its simplest form, ANOVA provides a statistical test of whether two or more population means are equal, and therefore generalizes the t-test beyond two means. In other words, the ANOVA is used to test the difference between two or more means.

History

While the analysis of variance reached fruition in the 20th century, antecedents extend centuries into the past according to Stigler. These include hypothesis testing, the partitioning of sums of squares, experimental techniques and the additive model. Laplace was performing hypothesis testing ...
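The partitioning of variation that ANOVA relies on can be made concrete with a short sketch. The example assumes the simplest one-way layout and computes the between-group and within-group sums of squares and the resulting F statistic; the function name `one_way_anova` and the sample groups are hypothetical, and no p-value is computed because that would need the F distribution, which the Python standard library does not provide.

```python
def one_way_anova(groups):
    """Return (ss_between, ss_within, f_statistic) for a one-way layout.

    groups is a list of lists of numbers, one inner list per group.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more observations than groups")
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]
    # Variation of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Variation of observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    # Ratio of mean squares; fails with ZeroDivisionError if ss_within is 0.
    f_statistic = (ss_between / (k - 1)) / (ss_within / (n_total - k))
    return ss_between, ss_within, f_statistic


if __name__ == "__main__":
    # Made-up data, for illustration only.
    groups = [[1, 2, 3], [2, 3, 4], [3, 4, 5]]
    ss_b, ss_w, f = one_way_anova(groups)
    print(f"SS between = {ss_b}, SS within = {ss_w}, F = {f}")
```

The two sums of squares add up to the total sum of squares around the grand mean, which is the partition the text refers to; a large F indicates that the group means differ more than the within-group scatter would suggest.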
