
HARKing

HARKing (hypothesizing after the results are known) is an acronym coined by social psychologist Norbert Kerr that refers to the questionable research practice of “presenting a post hoc hypothesis in the introduction of a research report as if it were an a priori hypothesis”. Hence, a key characteristic of HARKing is that post hoc hypothesizing is falsely portrayed as a priori hypothesizing. HARKing may occur when a researcher tests an a priori hypothesis but then omits that hypothesis from their research report after they find out the results of their test; inappropriate forms of post hoc analysis and/or post hoc theorizing may then lead to a post hoc hypothesis. Several types of HARKing have been distinguished, including THARKing: transparently hypothesizing after the results are known, rather than the secretive, undisclosed HARKing that was first described by Kerr (1998). In this case, researchers openly declare that they developed their hypotheses after they observe ...

Preregistration

Preregistration is the practice of registering the hypotheses, methods, and/or analyses of a scientific study before it is conducted. This can include analyzing primary data or secondary data. Clinical trial registration is similar, although it may not require the registration of a study's analysis protocol. Finally, registered reports include the peer review and in-principle acceptance of a study protocol prior to data collection. Preregistration assists in the identification and/or reduction of a variety of potentially problematic research practices, including p-hacking, publication bias, data dredging, inappropriate forms of post hoc analysis, and (relatedly) HARKing. It has recently gained prominence in the open science community as a potential solution to some of the issues that are thought to underlie the replication crisis. However, critics have argued that it may not be necessary when other open science practices are implemented such as Registered Reports. Types Standa ...

P-hacking

Data dredging (also known as data snooping or p-hacking) is the misuse of data analysis to find patterns in data that can be presented as statistically significant, thus dramatically increasing and understating the risk of false positives. This is done by performing many statistical tests on the data and only reporting those that come back with significant results. The process of data dredging involves testing multiple hypotheses using a single data set by exhaustively searching, perhaps for combinations of variables that might show a correlation, and perhaps for groups of cases or observations that show differences in their mean or in their breakdown by some other variable. Conventional tests of statistical significance are based on the probability that a particular result would arise if chance alone were at work, and necessarily accept some risk of mistaken conclusions of a certain type (mistaken rejections of the null hypothesis). This level of risk is called the si ...
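
As a rough illustration of why running many tests inflates false positives: if m independent tests are each run at significance level α and every null hypothesis is true, the chance of at least one "significant" result is 1 − (1 − α)^m. The sketch below assumes independent tests; the function name is illustrative and not taken from any library.

```python
def familywise_error_rate(alpha: float, n_tests: int) -> float:
    """Chance of at least one false positive among n_tests independent
    tests, each run at level alpha, when every null hypothesis is true."""
    return 1.0 - (1.0 - alpha) ** n_tests


# The risk grows quickly with the number of tests tried.
for m in (1, 5, 20, 100):
    print(f"{m:>3} tests: {familywise_error_rate(0.05, m):.3f}")
```

With α = 0.05, twenty independent tests already give a better-than-even chance of at least one spurious finding, which is the risk that selective reporting hides.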

Publication Bias

In published academic research, publication bias occurs when the outcome of an experiment or research study biases the decision to publish or otherwise distribute it. Publishing only results that show a significant finding disturbs the balance of findings in favor of positive results. The study of publication bias is an important topic in metascience. Despite similar quality of execution and design, papers with statistically significant results are three times more likely to be published than those with null results. This unduly motivates researchers to manipulate their practices to ensure statistically significant results, such as by data dredging. Many factors contribute to publication bias. For instance, once a scientific finding is well established, it may become newsworthy to publish reliable papers that fail to reject the null hypothesis. Most commonly, investigators simply decline to submit results, leading to non-response bias. Investigators may also assume they made a ...

Replication Crisis

The replication crisis (also called the replicability crisis and the reproducibility crisis) is an ongoing methodological crisis in which the results of many scientific studies are difficult or impossible to reproduce. Because the reproducibility of empirical results is an essential part of the scientific method, such failures undermine the credibility of theories building on them and potentially call into question substantial parts of scientific knowledge. The replication crisis is frequently discussed in relation to psychology and medicine, where considerable efforts have been undertaken to reinvestigate classic results, to determine both their reliability and, if found unreliable, the reasons for the failure. Data strongly indicate that other natural and social sciences are affected as well. The phrase "replication crisis" was coined in the early 2010s as part of a growing awareness of the problem. Considerations of causes and remedies have given rise to a new scientific ...

Metascience

Metascience (also known as meta-research) is the use of scientific methodology to study science itself. Metascience seeks to increase the quality of scientific research while reducing inefficiency. It is also known as "research on research" and "the science of science", as it uses research methods to study how research is done and find where improvements can be made. Metascience concerns itself with all fields of research and has been described as "a bird's eye view of science". In the words of John Ioannidis, "Science is the best thing that has happened to human beings ... but we can do it better." In 1966, an early meta-research paper examined the statistical methods of 295 papers published in ten high-profile medical journals. It found that "in almost 73% of the reports read ... conclusions were drawn when the justification for these conclusions was invalid." Meta-research in the following decades found many methodological flaws, inefficiencies, and poor practices in r ...

Post Hoc Analysis

In a scientific study, post hoc analysis (from Latin post hoc, "after this") consists of statistical analyses that were specified after the data were seen. They are usually used to uncover specific differences between three or more group means when an analysis of variance (ANOVA) test is significant. This typically creates a multiple testing problem because each potential analysis is effectively a statistical test. Multiple testing procedures are sometimes used to compensate, but that is often difficult or impossible to do precisely. Post hoc analysis that is conducted and interpreted without adequate consideration of this problem is sometimes called data dredging by critics because the statistical associations that it fin ...
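
One simple multiple testing procedure is the Bonferroni correction, which compares each of m p-values with α/m instead of α. The following is a minimal sketch of that rule only; the p-values are hypothetical numbers chosen for illustration, and the function name is not from any library.

```python
def bonferroni_reject(p_values, alpha=0.05):
    """Return one flag per test: True if it stays significant after
    dividing the significance level by the number of tests."""
    if not p_values:
        return []
    threshold = alpha / len(p_values)
    return [p <= threshold for p in p_values]


# Hypothetical p-values from five post hoc comparisons.
p_values = [0.001, 0.012, 0.03, 0.04, 0.2]
print(bonferroni_reject(p_values))
```

Four of these five comparisons fall below the uncorrected 0.05 level, but only the first falls below the corrected threshold of 0.05 / 5 = 0.01. The correction is conservative, which is one reason precise compensation is hard in practice.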

Testing Hypotheses Suggested By The Data

In statistics, hypotheses suggested by a given dataset, when tested with the same dataset that suggested them, are likely to be accepted even when they are not true. This is because circular reasoning (double dipping) would be involved: something seems true in the limited data set; therefore we hypothesize that it is true in general; therefore we wrongly test it on the same, limited data set, which seems to confirm that it is true. Generating hypotheses based on data already observed, in the absence of testing them on new data, is referred to as post hoc theorizing (from Latin post hoc, "after this"). The correct procedure is to test any hypothesis on a data set that was not used to generate the hypothesis. Testing a hypothesis suggested by the data can very easily result in false positives (type I errors). If one looks long enough and in enough different places, eventually data can be found to support any hypothesis. Yet, these positive data do not by ...
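
The circularity described above can be made concrete with a small simulation. The sketch below is one possible setup, not a standard procedure: every variable is pure noise, the "hypothesis" is whichever variable looks most extreme, and that variable is then tested once on the same data and once on a fresh sample. The z-test with known unit variance, the parameter values, and all function names are assumptions made for illustration.

```python
import random
from statistics import NormalDist, mean


def z_pvalue(sample):
    """Two-sided p-value for H0: mean = 0, assuming known unit variance."""
    z = mean(sample) * len(sample) ** 0.5
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


def simulate(n_runs=1000, n_vars=20, n_obs=30, alpha=0.05, seed=0):
    """Return (false-positive rate when re-testing on the same data,
    false-positive rate when testing on new data)."""
    rng = random.Random(seed)
    same_hits = fresh_hits = 0
    for _ in range(n_runs):
        # Pure noise: every variable truly has mean 0.
        explore = [[rng.gauss(0, 1) for _ in range(n_obs)]
                   for _ in range(n_vars)]
        # Hypothesis suggested by the data: the most extreme variable.
        best = max(range(n_vars), key=lambda j: abs(mean(explore[j])))
        same_hits += z_pvalue(explore[best]) < alpha
        # Correct procedure: test the chosen variable on a fresh sample.
        fresh = [rng.gauss(0, 1) for _ in range(n_obs)]
        fresh_hits += z_pvalue(fresh) < alpha
    return same_hits / n_runs, fresh_hits / n_runs


same_rate, fresh_rate = simulate()
print(f"same data: {same_rate:.2f}, fresh data: {fresh_rate:.2f}")
```

In this setup, re-testing on the exploratory data should flag a "finding" far more often than the nominal 5% (in theory about 1 − 0.95^20 ≈ 0.64), while the fresh-sample rate should stay near 5%.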

Norbert Kerr

Norbert Lee Kerr (born December 10, 1948) is an American social psychologist and Emeritus Professor of Psychology at Michigan State University. As of 2014, he also held a part-time appointment as Professor of Social Psychology at the University of Kent in England. He has researched the Kohler effect and factors influencing decision-making by juries.

Karl Popper

Sir Karl Raimund Popper (28 July 1902 – 17 September 1994) was an Austrian-British philosopher, academic and social commentator. One of the 20th century's most influential philosophers of science, Popper is known for his rejection of the classical inductivist views on the scientific method in favour of empirical falsification. According to Popper, a theory in the empirical sciences can never be proven, but it can be falsified, meaning that it can (and should) be scrutinised with decisive experiments. Popper was opposed to the classical justificationist account of knowledge, which he replaced with critical rationalism, namely "the first non-justificational philosophy of criticism in the history of philosophy". In political discourse, he is known for his vigorous defence of liberal democracy and the principles of social criticism that he believed made a flourishing open society possible. His political philosophy embraced ideas from major democratic political ideologies, inc ...

Scientific Method

The scientific method is an empirical method for acquiring knowledge that has characterized the development of science since at least the 17th century (with notable practitioners in previous centuries; see the article history of scientific method for additional detail). It involves careful observation and the application of rigorous skepticism about what is observed, given that cognitive assumptions can distort how one interprets the observation. It involves formulating hypotheses, via induction, based on such observations; the testability of hypotheses; experimental and measurement-based statistical testing of deductions drawn from the hypotheses; and refinement (or elimination) of the hypotheses based on the experimental findings. These are principles of the scientific method, as distinguished from a definitive series of steps applicable to all scientific enterprises. Although procedures vary from one field of inquiry to another, the underlying process is frequently the sa ...

Power (statistics)

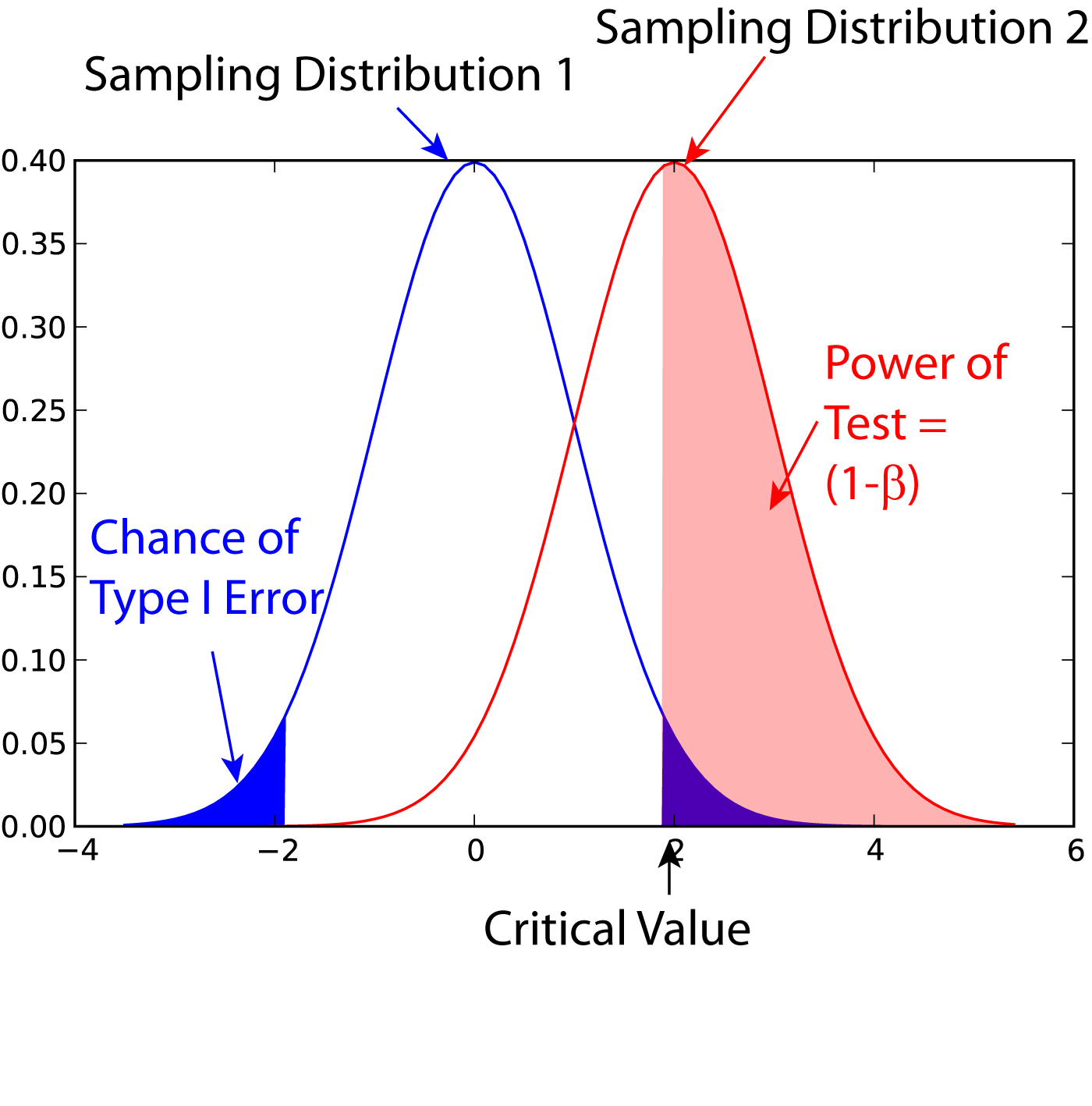

In statistics, the power of a binary hypothesis test is the probability that the test correctly rejects the null hypothesis (H_0) when a specific alternative hypothesis (H_1) is true. It is commonly denoted by 1 − β, and represents the chances of a true positive detection conditional on the actual existence of an effect to detect. Statistical power ranges from 0 to 1, and as the power of a test increases, the probability β of making a type II error by wrongly failing to reject the null hypothesis decreases.

This article uses the following notation:

* β = probability of a Type II error, known as a "false negative"
* 1 − β = probability of a "true positive", i.e., correctly rejecting the null hypothesis. "1 − β" is also known as the power of the test.
* α = probability of a Type I error, known as a "false positive"
* 1 − α = probability of a "true negative", i.e., correctly not rejecting the null hypothesis

For a ty ...
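
To make the definition concrete, here is a minimal sketch of a power calculation for one specific case: a one-sided z-test of H_0: mean = 0 against H_1: mean = effect > 0, with known standard deviation sigma and n observations. This test, the parameter names, and the example numbers are assumptions chosen for illustration; other tests need different formulas.

```python
from statistics import NormalDist


def z_test_power(effect, sigma, n, alpha=0.05):
    """Power (1 - beta) of a one-sided z-test at level alpha when the
    true mean equals `effect` and the standard deviation is `sigma`."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1.0 - alpha)  # rejection cutoff under H_0
    shift = effect * n ** 0.5 / sigma         # mean of the z statistic under H_1
    return 1.0 - std_normal.cdf(z_crit - shift)


# Power rises with sample size for a fixed effect.
for n in (10, 25, 50):
    print(n, round(z_test_power(effect=0.5, sigma=1.0, n=n), 3))
```

When the effect is zero the function returns α itself, which matches the notation above: with no effect to detect, the only rejections are Type I errors.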

Null Result

In science, a null result is a result without the expected content: that is, the proposed result is absent. It is an experimental outcome which does not show an otherwise expected effect. This does not imply a result of zero or nothing, simply a result that does not support the hypothesis. In statistical hypothesis testing, a null result occurs when an experimental result is not significantly different from what is to be expected under the null hypothesis; that is, its p-value (computed under the null hypothesis) exceeds the significance level, the threshold set prior to testing for rejection of the null hypothesis. The significance level varies, but common choices include 0.10, 0.05, and 0.01. As an example in physics, the results of the Michelson–Morley experiment were of this type, as it did not detect the expected velocity relative to the postulated luminiferous aether. This experiment's famous failed detection, commonly referred to as the null result, contributed to the ...
