
Algorithmic Probability

In algorithmic information theory, algorithmic probability, also known as Solomonoff probability, is a mathematical method of assigning a prior probability to a given observation. It was invented by Ray Solomonoff in the 1960s and is used in inductive inference theory and in the analysis of algorithms. In his general theory of inductive inference, Solomonoff uses the method together with Bayes' rule to obtain probabilities of prediction for an algorithm's future outputs. In the mathematical formalism used, the observations have the form of finite binary strings viewed as outputs of Turing machines, and the universal prior is a probability distribution over the set of finite binary strings calculated from a probability distribution over programs (that is, inputs to a universal Turing machine). The prior is universal in the Turing-computability sense, i.e. no string has zero probability. It is not computable, but it can be approximated. Overview: Algorithmic probability is the main ingre ...
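
The construction described above, a distribution over strings derived from a distribution over programs, is usually written as m(x) = Σ 2^(-|p|), summed over all programs p that make the universal machine output x. The true quantity is not computable, so the following is only a minimal sketch on a hypothetical, deliberately non-universal toy machine. The names `run_toy_machine` and `toy_algorithmic_probability` and the program layout are assumptions made for illustration, not part of Solomonoff's formalism.

```python
from fractions import Fraction
from itertools import product


def run_toy_machine(program: str):
    """Run a hypothetical, non-universal toy machine on a bit-string program.

    Assumed program layout: k ones, a zero, k payload bits, one mode bit.
    Mode 0 prints the payload once, mode 1 prints it twice.
    Returns None when the program is not well formed.
    """
    k = program.find("0")  # length of the unary header
    if k < 0 or len(program) != 2 * k + 2:
        return None
    payload = program[k + 1:2 * k + 1]
    mode = program[-1]
    return payload if mode == "0" else payload * 2


def toy_algorithmic_probability(x: str, max_len: int) -> Fraction:
    """Sum 2^(-|p|) over all programs p of length <= max_len that output x."""
    total = Fraction(0)
    for n in range(1, max_len + 1):
        for bits in product("01", repeat=n):
            if run_toy_machine("".join(bits)) == x:
                total += Fraction(1, 2 ** n)
    return total


if __name__ == "__main__":
    # "0101" has a short description ("01" printed twice) and a long one.
    print(toy_algorithmic_probability("0101", 10))
    # "0111" only has the long, literal description.
    print(toy_algorithmic_probability("0111", 10))
```

In this toy setting the string with a shorter description receives more weight, which is the qualitative behaviour the universal prior is meant to capture.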

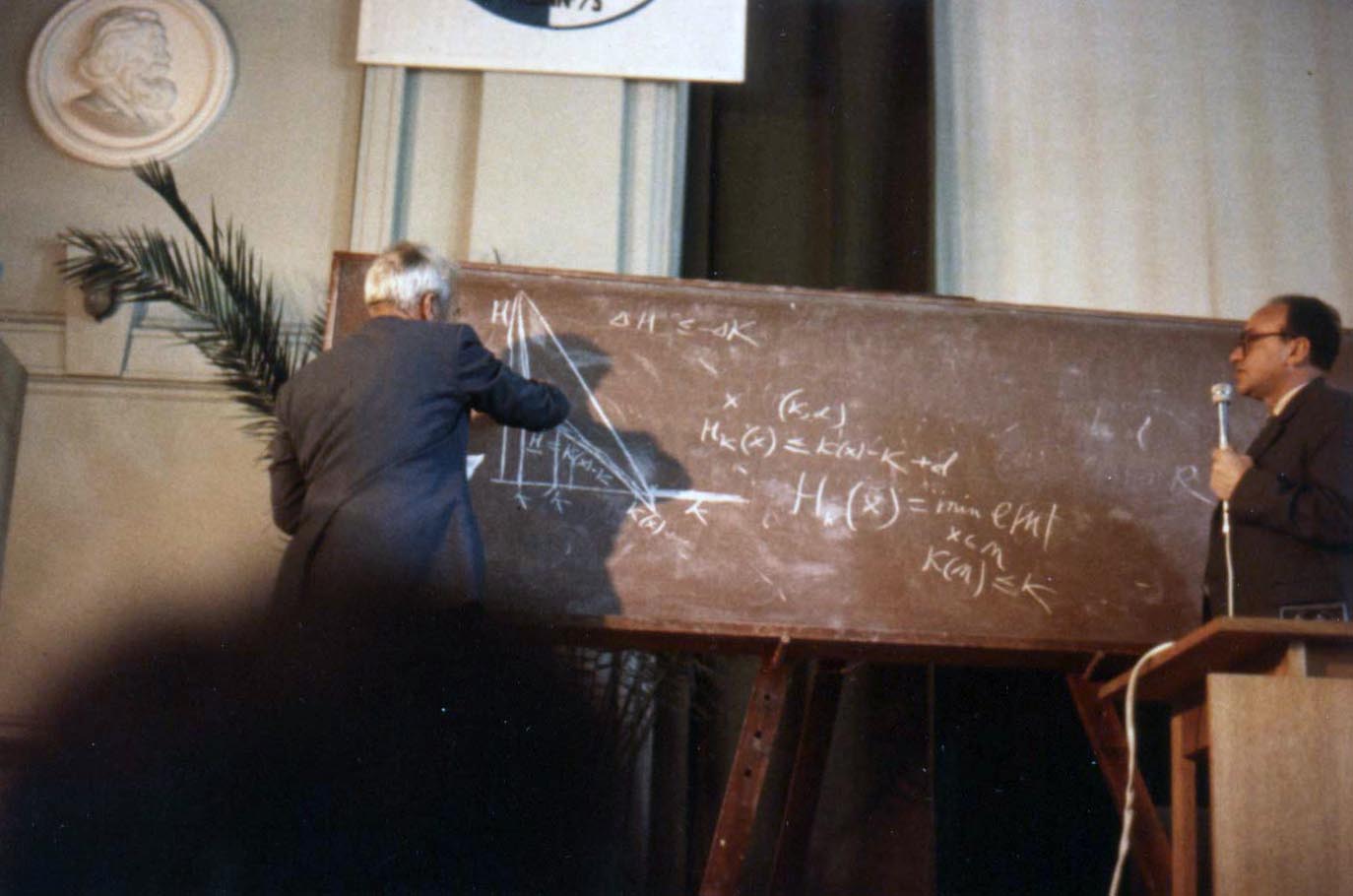

Ray Solomonoff

Ray Solomonoff (July 25, 1926 – December 7, 2009) was the inventor of algorithmic probability and of the General Theory of Inductive Inference (also known as Universal Inductive Inference) (Samuel Rathmanner and Marcus Hutter, "A philosophical treatise of universal induction", Entropy, 13(6):1076–1136, 2011), and was a founder of algorithmic information theory. He was an originator of the branch of artificial intelligence based on machine learning, prediction and probability. He circulated the first report on non-semantic machine learning in 1956 ("An Inductive Inference Machine", Dartmouth College, N.H., version of Aug. 14, 1956; PDF scanned copy of the original). Solomonoff first described algorithmic probability in 1960, publishing the theorem that launched Kolmogorov complexity and algorithmic information theory. He first described these results at a conference at Caltech in 1960, and in a report of February 1960, "A Preliminary Report on a General Theory of Inductive Inference." He clar ...

Solomonoff's Theory Of Inductive Inference

Solomonoff's theory of inductive inference is a mathematical theory of induction introduced by Ray Solomonoff, based on probability theory and theoretical computer science. In essence, Solomonoff's induction derives the posterior probability of any computable theory, given a sequence of observed data. This posterior probability is derived from Bayes' rule and some universal prior, that is, a prior that assigns a positive probability to any computable theory. Solomonoff's induction naturally formalizes Occam's razor (J. J. McCall, "Induction: From Kolmogorov and Solomonoff to De Finetti and Back to Kolmogorov", Metroeconomica, 2004, Wiley Online Library; D. Stork, "Foundations of Occam's razor and parsimony in learning", NIPS 2001 Workshop, 2001; A. N. Soklakov, "Occam's razor as a formal basis for a physical theory", Foundations of Physics Letters, 2002, Springer; M. Hutter, "On the exis ...
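
As a rough illustration of combining Bayes' rule with a prior that favours short descriptions, the sketch below replaces the class of all computable theories with a tiny hypothetical one: each "theory" says the data is a fixed bit pattern repeated forever, and its prior weight is 2^(-pattern length). The function name `predict_next_bit` and the restriction to periodic patterns are assumptions for illustration; this is not Solomonoff's actual (incomputable) construction.

```python
from fractions import Fraction
from itertools import product


def predict_next_bit(observed: str, max_pattern_len: int = 4) -> Fraction:
    """Posterior probability that the next bit is '1'.

    Hypothetical theory class: "the sequence is `pattern` repeated forever",
    for every bit pattern of length 1..max_pattern_len.
    Prior weight of a theory: 2^(-len(pattern)), left unnormalized.
    Likelihood: 1 if the theory reproduces `observed`, otherwise 0.
    """
    weight_all = Fraction(0)
    weight_one = Fraction(0)
    position = len(observed)
    for n in range(1, max_pattern_len + 1):
        for bits in product("01", repeat=n):
            pattern = "".join(bits)
            generated = pattern * (position // n + 2)
            if not generated.startswith(observed):
                continue  # theory contradicts the data, posterior weight 0
            prior = Fraction(1, 2 ** n)
            weight_all += prior
            if generated[position] == "1":
                weight_one += prior
    if weight_all == 0:
        raise ValueError("no theory in the toy class fits the observations")
    # Bayes' rule: normalize over the theories that survived.
    return weight_one / weight_all


if __name__ == "__main__":
    print(predict_next_bit("011"))
    print(predict_next_bit("00"))
```

Shorter patterns that fit the data dominate the prediction, which is the sense in which such a prior acts as a formal Occam's razor.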

Solomonoff's Theory Of Inductive Inference

Solomonoff's theory of inductive inference is a mathematical proof that if a universe is generated by an algorithm, then observations of that universe, encoded as a dataset, are best predicted by the smallest executable archive of that dataset. This formalization of Occam's razor for induction was introduced by Ray Solomonoff, based on probability theory and theoretical computer science (J. J. McCall, "Induction: From Kolmogorov and Solomonoff to De Finetti and Back to Kolmogorov", Metroeconomica, 2004, Wiley Online Library; D. Stork, "Foundations of Occam's razor and parsimony in learning", NIPS 2001 Workshop, 2001; A. N. Soklakov, "Occam's razor as a formal basis for a physical theory", Foundations of Physics Letters, 2002, Springer; M. Hutter, "On the existence and convergence of computable universal priors", Algorithmic Learning Theory, 2003, Springer). In essence, Solomonoff's induction derives the posterior probability of any computable theor ...

Algorithmic Information Theory

Algorithmic information theory (AIT) is a branch of theoretical computer science that concerns itself with the relationship between computation and information of computably generated objects (as opposed to stochastically generated), such as strings or any other data structure. In other words, it is shown within algorithmic information theory that computational incompressibility "mimics" (except for a constant that only depends on the chosen universal programming language) the relations or inequalities found in information theory. According to Gregory Chaitin, it is "the result of putting Shannon's information theory and Turing's computability theory into a cocktail shaker and shaking vigorously." Besides the formalization of a universal measure for irreducible information content of computably generated objects, some main achievements of AIT were to show that: in fact algorithmic complexity follows (in the self-delimited case) the same inequalities (except for a constant) tha ...

Andrey Kolmogorov

Andrey Nikolaevich Kolmogorov (Russian: Андре́й Никола́евич Колмого́ров, IPA: [ɐnˈdrʲej nʲɪkɐˈlajɪvʲɪtɕ kəlmɐˈɡorəf]; 25 April 1903 – 20 October 1987) was a Soviet mathematician who contributed to the mathematics of probability theory, topology, intuitionistic logic, turbulence, classical mechanics, algorithmic information theory and computational complexity. Biography, early life: Andrey Kolmogorov was born in Tambov, about 500 kilometers south-southeast of Moscow, in 1903. His unmarried mother, Maria Y. Kolmogorova, died giving birth to him. Andrey was raised by two of his aunts in Tunoshna (near Yaroslavl) at the estate of his grandfather, a well-to-do nobleman. Little is known about Andrey's father. He was supposedly named Nikolai Matveevich Kataev and had been an agronomist. Kataev had been exiled from St. Petersburg to the Yaroslavl province after his participation in the revolutionary movem ...

Scholarpedia

Scholarpedia is an English-language wiki-based online encyclopedia with features commonly associated with open-access online academic journals, which aims to have quality content in science and medicine. Scholarpedia articles are written by invited or approved expert authors and are subject to peer review. Scholarpedia lists the real names and affiliations of all authors, curators and editors involved in an article; however, the peer review process (which can suggest changes or additions, and has to be satisfied before an article can appear) is anonymous. Scholarpedia articles are stored in an online repository and can be cited as conventional journal articles (Scholarpedia has an ISSN). Scholarpedia's citation system includes support for revision numbers. The project was created in February 2006 by Eugene M. Izhikevich, while he was a researcher at the Neurosciences Institute, San Diego, California. Izhikevich is also the encyclopedia's editor-i ...

Information-based Complexity

Information-based complexity (IBC) studies optimal algorithms and computational complexity for the continuous problems that arise in physical science, economics, engineering, and mathematical finance. IBC has studied such continuous problems as path integration, partial differential equations, systems of ordinary differential equations, nonlinear equations, integral equations, fixed points, and very-high-dimensional integration. All these problems involve functions (typically multivariate) of a real or complex variable. Since one can never obtain a closed-form solution to the problems of interest, one has to settle for a numerical solution. Since a function of a real or complex variable cannot be entered into a digital computer, the solution of continuous problems involves partial information. To give a simple illustration, in the numerical approximation of an integral, only samples of the integrand at a finite number of points are available. In the numerical solution of partial ...
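
The integration illustration above can be sketched as follows. This is a minimal example, assuming equally spaced sample points and the composite trapezoid rule; the function name `trapezoid_from_samples` is hypothetical, and the rule is just one convenient choice, not an algorithm singled out by IBC.

```python
import math


def trapezoid_from_samples(f, a: float, b: float, n: int) -> float:
    """Approximate the integral of f over [a, b] from n + 1 samples.

    The algorithm never sees f as a whole: its only information about the
    integrand is the finite list `samples` (partial information).
    """
    if n < 1:
        raise ValueError("need at least one subinterval")
    h = (b - a) / n
    samples = [f(a + i * h) for i in range(n + 1)]
    return h * (samples[0] / 2 + sum(samples[1:-1]) + samples[-1] / 2)


if __name__ == "__main__":
    exact = 2.0  # integral of sin over [0, pi]
    for n in (10, 100, 1000):
        approx = trapezoid_from_samples(math.sin, 0.0, math.pi, n)
        print(n, approx, abs(approx - exact))
```

More samples mean more information and, for a smooth integrand like this one, a smaller error; relating achievable error to the amount of information is the kind of question IBC asks.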

Universal Turing Machine

In computer science, a universal Turing machine (UTM) is a Turing machine that can simulate an arbitrary Turing machine on arbitrary input. The universal machine essentially achieves this by reading both the description of the machine to be simulated and the input to that machine from its own tape. Alan Turing introduced the idea of such a machine in 1936–1937. This principle is considered to be the origin of the idea of a stored-program computer used by John von Neumann in 1946 for the "Electronic Computing Instrument" that now bears von Neumann's name: the von Neumann architecture (Martin Davis, The universal computer: the road from Leibniz to Turing, 2017). In terms of computational complexity, a multi-tape universal Turing machine need only be slower by a logarithmic factor compared to the machines it simulates. Introduction: Every Turing machine computes a certain fixed partial computable function from the input strings over its alphabet. In that sense it be ...
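
The idea of one machine taking both a machine description and that machine's input as data can be sketched with a small interpreter. This is only an illustration in Python, not a Turing machine itself; the function `simulate`, the transition-table format, and the two example machines are assumptions made for the sketch.

```python
def simulate(transitions, tape_input, start="q0", halt="halt",
             blank="_", max_steps=10_000):
    """Run a single-tape machine given as data.

    `transitions` maps (state, symbol) -> (new_state, written_symbol, move),
    where move is "L" or "R". Returns the tape contents with surrounding
    blanks stripped once the machine reaches the halt state.
    """
    tape = dict(enumerate(tape_input))
    state, head, steps = start, 0, 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("step budget exhausted before halting")
        symbol = tape.get(head, blank)
        state, written, move = transitions[(state, symbol)]
        tape[head] = written
        head += 1 if move == "R" else -1
        steps += 1
    if not tape:
        return ""
    cells = [tape.get(i, blank) for i in range(min(tape), max(tape) + 1)]
    return "".join(cells).strip(blank)


# Two hypothetical machine descriptions run by the same simulator.
FLIP_BITS = {
    ("q0", "0"): ("q0", "1", "R"),
    ("q0", "1"): ("q0", "0", "R"),
    ("q0", "_"): ("halt", "_", "R"),
}
UNARY_INCREMENT = {
    ("q0", "1"): ("q0", "1", "R"),
    ("q0", "_"): ("halt", "1", "R"),
}

if __name__ == "__main__":
    print(simulate(FLIP_BITS, "0110"))
    print(simulate(UNARY_INCREMENT, "111"))
```

The same `simulate` routine runs both machines unchanged; only the description passed in as data differs.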

Inductive Probability

Inductive probability attempts to give the probability of future events based on past events. It is the basis for inductive reasoning, and gives the mathematical basis for learning and the perception of patterns. It is a source of knowledge about the world. There are three sources of knowledge: inference, communication, and deduction. Communication relays information found using other methods. Deduction establishes new facts based on existing facts. Inference establishes new facts from data. Its basis is Bayes' theorem. Information describing the world is written in a language. For example, a simple mathematical language of propositions may be chosen. Sentences may be written down in this language as strings of characters. But in the computer it is possible to encode these sentences as strings of bits (1s and 0s). Then the language may be encoded so that the most commonly used sentences are the shortest. This internal language implicitly represents probabilities of statements. ...
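
The link between sentence length and probability can be made concrete with Shannon code lengths: a sentence of probability p gets a code of about -log2(p) bits, and conversely a code of length n implies a probability of 2^(-n). The sketch below assumes a made-up four-sentence language; the sentence labels, their probabilities, and the function names are hypothetical.

```python
import math


def shannon_code_lengths(probabilities):
    """Map each sentence to a code length of ceil(-log2(p)) bits."""
    return {s: math.ceil(-math.log2(p)) for s, p in probabilities.items()}


def implied_probabilities(code_lengths):
    """Read probabilities back off the code lengths: p = 2^(-length)."""
    return {s: 2.0 ** -n for s, n in code_lengths.items()}


if __name__ == "__main__":
    # Hypothetical usage frequencies of four sentences.
    usage = {"A": 0.5, "B": 0.25, "C": 0.125, "D": 0.125}
    lengths = shannon_code_lengths(usage)
    print(lengths)
    print(implied_probabilities(lengths))
```

The most frequently used sentence receives the shortest code, and the code lengths alone are enough to recover the probabilities in this example.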

Inductive Inference

Inductive reasoning is a method of reasoning in which a general principle is derived from a body of observations. It consists of making broad generalizations based on specific observations. Inductive reasoning is distinct from deductive reasoning. If the premises are correct, the conclusion of a deductive argument is certain; in contrast, the truth of the conclusion of an inductive argument is probable, based upon the evidence given. Types: The types of inductive reasoning include generalization, prediction, statistical syllogism, argument from analogy, and causal inference. Inductive generalization: A generalization (more accurately, an inductive generalization) proceeds from a premise about a sample to a conclusion about the population. The observation obtained from this sample is projected onto the broader population. "The proportion Q of the sample has attribute A. Therefore, the proportion Q of the population has attribute A." For example, say there ...

Bayesian Inference

Bayesian inference is a method of statistical inference in which Bayes' theorem is used to update the probability for a hypothesis as more evidence or information becomes available. Bayesian inference is an important technique in statistics, and especially in mathematical statistics. Bayesian updating is particularly important in the dynamic analysis of a sequence of data. Bayesian inference has found application in a wide range of activities, including science, engineering, philosophy, medicine, sport, and law. In the philosophy of decision theory, Bayesian inference is closely related to subjective probability, often called "Bayesian probability". Introduction to Bayes' rule, formal explanation: Bayesian inference derives the posterior probability as a consequence of two antecedents: a prior probability and a "likelihood function" derived from a statistical model for the observed data. Bayesian inference computes the posterior probability according to Bayes' theorem: ...
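
For a finite set of hypotheses, Bayes' theorem reads P(H | E) = P(E | H) P(H) / P(E), with P(E) obtained by summing P(E | H) P(H) over all hypotheses. A minimal sketch of one update step follows; the function name `bayes_update` and the two-coin scenario are assumptions chosen for illustration.

```python
from fractions import Fraction


def bayes_update(prior, likelihood):
    """Return the posterior P(H | E) for each hypothesis H.

    prior:      dict mapping hypothesis -> P(H)
    likelihood: dict mapping hypothesis -> P(E | H) for the observed evidence
    """
    evidence = sum(prior[h] * likelihood[h] for h in prior)  # P(E)
    if evidence == 0:
        raise ValueError("evidence has probability zero under every hypothesis")
    return {h: prior[h] * likelihood[h] / evidence for h in prior}


if __name__ == "__main__":
    # Hypothetical scenario: a coin is either fair or biased towards heads.
    prior = {"fair": Fraction(1, 2), "biased": Fraction(1, 2)}
    heads = {"fair": Fraction(1, 2), "biased": Fraction(3, 4)}
    posterior = bayes_update(prior, heads)
    print(posterior)
    # Updating again with a second head uses the posterior as the new prior.
    print(bayes_update(posterior, heads))
```

Feeding each posterior back in as the next prior is the sequential updating that the passage describes for a stream of data.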
