Algorithmic Probability

In algorithmic information theory, algorithmic probability, also known as Solomonoff probability, is a mathematical method of assigning a prior probability to a given observation. It was invented by Ray Solomonoff in the 1960s. It is used in inductive inference theory and analyses of algorithms. In his general theory of inductive inference, Solomonoff uses the method together with Bayes' rule to obtain probabilities of prediction for an algorithm's future outputs. In the mathematical formalism used, the observations have the form of finite binary strings viewed as outputs of Turing machines, and the universal prior is a probability distribution over the set of finite binary strings calculated from a probability distribution over programs (that is, inputs to a universal Turing machine). The prior is universal in the Turing-computability sense, i.e. no string has zero probability. It is not computable, but it can be approximated. Formally, the probability P is not a probabil ...
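To make the idea of deriving a prior over strings from a distribution over programs concrete, here is a minimal sketch. It does not use a universal Turing machine: `run_toy_machine` is a hypothetical, deliberately tiny prefix-free "repeat a pattern" interpreter invented for this illustration, and `approximate_prior` simply sums 2^(-length) over every valid program up to a length bound. The real universal prior is not computable, so this only mirrors the shape of the definition.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product


def run_toy_machine(program: str):
    """Run a toy (NOT universal) prefix-free machine; return its output or None.

    Assumed program format, invented for this sketch:
      1^k 0      unary pattern length k
      k bits     the pattern itself
      1^r 0      unary count r of extra repetitions
    The output is the pattern repeated r + 1 times.
    """
    pos = 0
    k = 0
    while pos < len(program) and program[pos] == "1":
        k += 1
        pos += 1
    if pos >= len(program):
        return None  # the 0 that terminates the length field is missing
    pos += 1  # skip that 0
    pattern = program[pos:pos + k]
    if len(pattern) < k:
        return None  # program ends in the middle of the pattern
    pos += k
    r = 0
    while pos < len(program) and program[pos] == "1":
        r += 1
        pos += 1
    if pos != len(program) - 1:
        return None  # missing final 0, or trailing bits after it
    return pattern * (r + 1)


def approximate_prior(max_len: int):
    """Sum 2^-len(p) over all valid programs p with len(p) <= max_len."""
    prior = defaultdict(Fraction)
    for n in range(1, max_len + 1):
        for bits in product("01", repeat=n):
            output = run_toy_machine("".join(bits))
            if output is not None:
                prior[output] += Fraction(1, 2 ** n)
    return dict(prior)


prior = approximate_prior(10)
print(prior["0000"], prior["0110"])
```

Under this toy machine the regular string `0000` receives more weight than `0110` because several short programs produce it, which is the qualitative behaviour the universal prior is meant to capture. Because the valid programs form a prefix-free set, the weights sum to at most 1.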

From Observer States To Physics Via Algorithmic Probability

From may refer to:

People
* Isak From (born 1967), Swedish politician
* Martin Severin From (1825–1895), Danish chess master
* Sigfred From (1925–1998), Danish chess master

Media
* *From* (TV series), a sci-fi-horror series that debuted on Epix in 2022
* "From" (Fromis 9 song) (2024)
* "From", a song by Big Thief from U.F.O.F. (2019)
* "From", a song by Yuzu (2010)
* "From", a song by Bon Iver from Sable, Fable (2025)

Other
* From, a preposition
* From (SQL), computing language keyword
* From: (email message header), field showing the sender of an email
* FromSoftware, a Japanese video game company
* Full range of motion, the travel in a range of motion

Scholarpedia

*Scholarpedia* is an English-language wiki-based online encyclopedia with features commonly associated with open-access online academic journals, which aims to have quality content in science and medicine. *Scholarpedia* articles are written by invited or approved expert authors and are subject to peer review. *Scholarpedia* lists the real names and affiliations of all authors, curators and editors involved in an article; however, the peer review process (which can suggest changes or additions, and has to be satisfied before an article can appear) is anonymous. *Scholarpedia* articles are stored in an online repository, and can be cited as conventional journal articles (*Scholarpedia* has an ISSN). *Scholarpedia*'s citation system includes support for revision numbers. The project was created in February 2006 by Eugene M. Izhikevich, while he was a researcher at the Neurosciences Institute, San Diego, California. Izhikevich ...

Information-based Complexity

Information-based complexity (IBC) studies optimal algorithms and computational complexity for the continuous problems that arise in physical science, economics, engineering, and mathematical finance.

Universal Turing Machine

In computer science, a universal Turing machine (UTM) is a Turing machine capable of computing any computable sequence, as described by Alan Turing in his seminal paper "On Computable Numbers, with an Application to the Entscheidungsproblem". Common sense might say that a universal machine is impossible, but Turing proves that it is possible. He suggested that we may compare a human in the process of computing a real number to a machine which is only capable of a finite number of conditions $q_1, q_2, \ldots, q_R$, which will be called "m-configurations". He then described the operation of such a machine. Turing introduced the idea of such a machine in 1936–1937.

Introduction: Martin Davis makes a persuasive argument that Turing's conception of what is now known as "the stored-program computer", of placing the "action table" (the instructions for the machine) in the same "memory" as the input data, strongly influenced John von Neumann's conception of the first Amer ...
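The idea that an action table can be treated as data handed to a single general-purpose machine can be illustrated with a small simulator. This is only a sketch under stated assumptions: the function name `simulate`, the table layout `(state, symbol) -> (write, move, next_state)`, the state names `"start"` and `"halt"`, and the example machine `INVERT` are all invented here for illustration and are not Turing's own encoding.

```python
def simulate(table, tape, state="start", blank="_", max_steps=1000):
    """Run the machine described by `table` on `tape` and return the final tape.

    `table` maps (state, symbol) to (symbol_to_write, move, next_state),
    where move is "L" or "R". The state named "halt" stops the machine.
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step budget exhausted before halting")
        symbol = cells.get(head, blank)
        write, move, state = table[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    # Render the visited part of the tape and trim blanks at both ends.
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# Hypothetical example machine: flip every bit, halt at the first blank.
INVERT = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}

print(simulate(INVERT, "1011"))  # prints 0100
```

The simulator itself never changes; only the `table` argument does. That is the sense in which one fixed machine can carry out the work of any other machine whose description it is given, although a true universal Turing machine reads that description from its own tape rather than from a Python dictionary.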

Inductive Probability

Inductive probability attempts to give the probability of future events based on past events. It is the basis for inductive reasoning, and gives the mathematical basis for learning and the perception of patterns. It is a source of knowledge about the world. There are three sources of knowledge: inference, communication, and deduction. Communication relays information found using other methods. Deduction establishes new facts based on existing facts. Inference establishes new facts from data. Its basis is Bayes' theorem. Information describing the world is written in a language. For example, a simple mathematical language of propositions may be chosen. Sentences may be written down in this language as strings of characters. But in ...

Inductive Inference

Inductive reasoning refers to a variety of methods of reasoning in which the conclusion of an argument is supported not with deductive certainty, but with some degree of probability. Unlike *deductive* reasoning (such as mathematical induction), where the conclusion is *certain*, given the premises are correct, inductive reasoning produces conclusions that are at best *probable*, given the evidence provided.

Types: The types of inductive reasoning include generalization, prediction, statistical syllogism, argument from analogy, and causal inference. There are also differences in how their results are regarded.

Inductive generalization: A generalization (more accurately, an *inductive generalization*) proceeds from premises about a sample to a conclusion about the population. The observation obtained from this sample is projected onto the broader population.

The proportion Q of the sample has attribute A.
Therefore, the proportion Q of the population has attrib ...

Bayesian Inference

Bayesian inference is a method of statistical inference in which Bayes' theorem is used to calculate a probability of a hypothesis, given prior evidence, and update it as more information becomes available. Fundamentally, Bayesian inference uses a prior distribution to estimate posterior probabilities. Bayesian inference is an important technique in statistics, and especially in mathematical statistics. Bayesian updating is particularly important in the dynamic analysis of a sequence of data. Bayesian inference has found application in a wide range of activities, including science, engineering, philosophy, medicine, sport, and law. In the philosophy of decision theory, Bayesian inference is closely related to subjective probability, often called "Bayesian probability".

Introduction to Bayes' rule, formal explanation: Bayesian inference derives the posterior probability as a consequence of two antecedents: a prior probability and a "likelihood function" derive ...
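The update from prior to posterior can be sketched in a few lines. The function name `bayes_update` and the two coin hypotheses below are assumptions made up for this illustration; the only thing taken from the text is the rule itself, that the posterior is proportional to the prior times the likelihood of the observed data.

```python
from fractions import Fraction


def bayes_update(prior, likelihood):
    """Return the posterior over hypotheses after one observation.

    `prior` maps each hypothesis to P(H); `likelihood` maps each
    hypothesis to P(data | H) for the data that was actually observed.
    """
    unnormalised = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalised.values())  # P(data), the normalising constant
    if evidence == 0:
        raise ValueError("observation has zero probability under every hypothesis")
    return {h: weight / evidence for h, weight in unnormalised.items()}


# Hypothetical example: is a coin fair, or biased 3:1 towards heads?
prior = {"fair": Fraction(1, 2), "biased": Fraction(1, 2)}
heads = {"fair": Fraction(1, 2), "biased": Fraction(3, 4)}  # P(heads | H)

after_one = bayes_update(prior, heads)
after_two = bayes_update(after_one, heads)
print(after_one)
print(after_two)
```

Feeding each posterior back in as the next prior is the sequential updating the paragraph refers to. Exact fractions are used here only so the arithmetic is easy to check by hand; floating-point numbers would work the same way.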

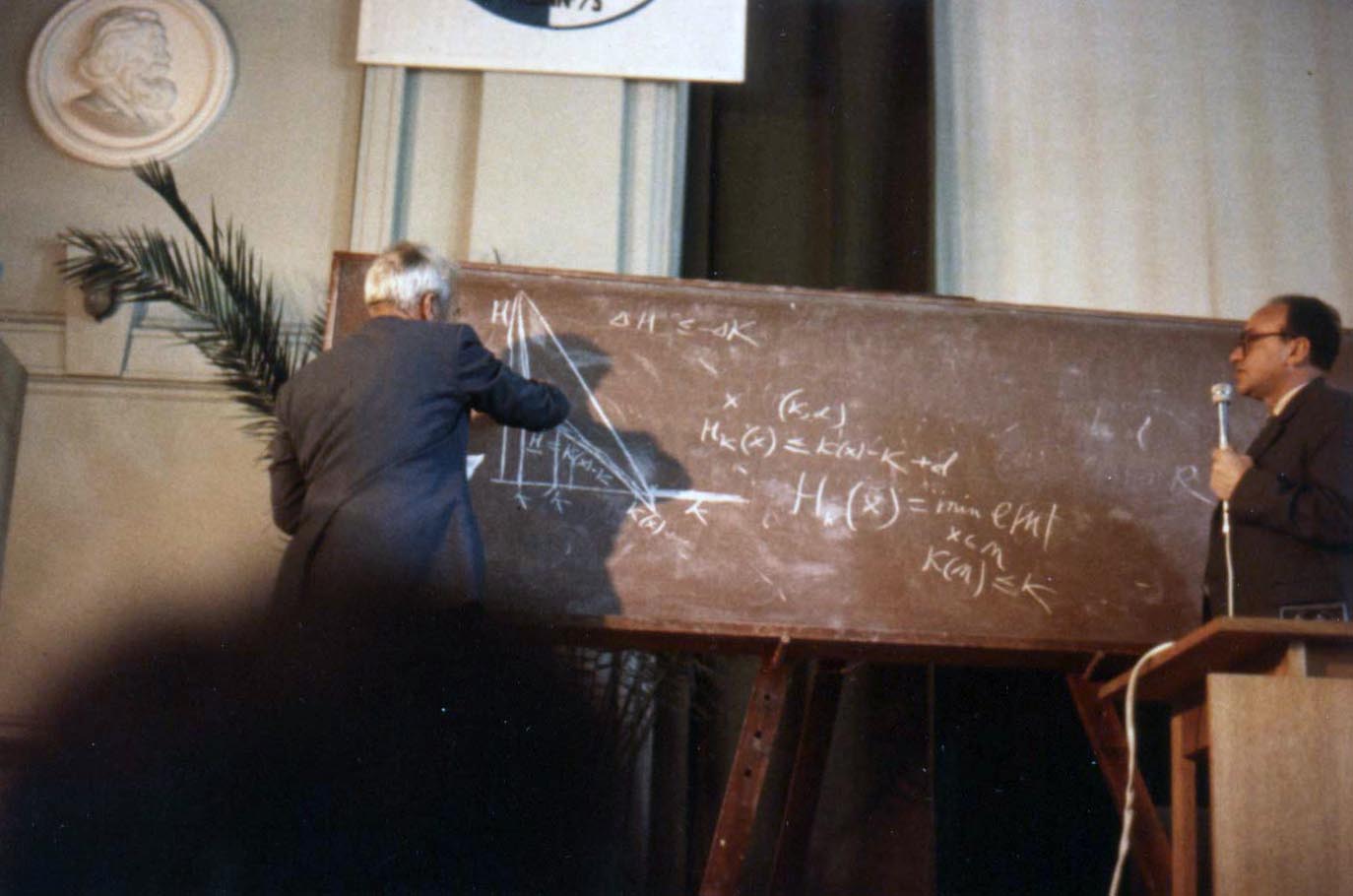

Leonid Levin

Leonid Anatolievich Levin (born November 2, 1948) is a Soviet-American mathematician and computer scientist. He is known for his work in randomness in computing, algorithmic complexity and intractability, average-case complexity, foundations of mathematics and computer science, algorithmic probability, theory of computation, and information theory. He obtained his master's degree at Moscow University in 1970 where he studied under Andrey Kolmogorov and completed the Candidate Degree academic requirements in 1972. He and Stephen Cook independently discovered the existence of NP-complete problems. This NP-completeness theorem, often called the Cook–Levin theorem, was a basis for one of the seven Millennium Prize Problems declared by the Clay Mathematics Institute with a $1,000,000 prize offered. The Cook–Levin theorem was a breakthrough in computer science and an important step in the development of the theory of computational complexity. Levin was awarded th ...

Andrey Kolmogorov

Andrey Nikolaevich Kolmogorov (Russian: Андре́й Никола́евич Колмого́ров; 25 April 1903 – 20 October 1987) was a Soviet mathematician who played a central role in the creation of modern probability theory. He also contributed to the mathematics of topology, intuitionistic logic, turbulence, classical mechanics, algorithmic information theory and computational complexity.

Biography, early life: Andrey Kolmogorov was born in Tambov, about 500 kilometers southeast of Moscow, in 1903. His unmarried mother, Maria Yakovlevna Kolmogorova, died giving birth to him. Andrey was raised by two of his aunts in Tunoshna (near Yaroslavl) at the estate of his grandfather, a well-to-do nobleman. Little is known about Andrey's father. He was supposedly named Nikolai Matveyevich Katayev and had been an agronomist. Katayev ha ...

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalise to unseen data, and thus perform tasks without explicit instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ...