
Advice (complexity)

In computational complexity theory, an advice string is an extra input to a Turing machine that is allowed to depend on the length ''n'' of the input, but not on the input itself. A decision problem is in the complexity class P/''f''(''n'') if there is a polynomial time Turing machine ''M'' with the following property: for any ''n'', there is an advice string ''A'' of length ''f''(''n'') such that, for any input ''x'' of length ''n'', the machine ''M'' correctly decides the problem on the input ''x'', given ''x'' and ''A''. The most common complexity class involving advice is P/poly where advice length ''f''(''n'') can be any polynomial in ''n''. P/poly is equal to the class of decision problems such that, for every ''n'', there exists a polynomial size Boolean circuit correctly deciding the problem on all inputs of length ''n''. One direction of the equivalence is easy to see. If, for every ''n'', there is a polynomial size Boolean circuit ''A''(''n'') deciding the problem, we ca ...
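The advice model can be illustrated with a minimal Python sketch, assuming the advice for length ''n'' is a description of a Boolean circuit that the machine simply evaluates. The gate encoding, the names `advice`, `M` and `decide`, and the choice of parity as the example problem are all illustrative assumptions, not taken from the source.

```python
from typing import List, Tuple

# Hypothetical gate encoding: (operation, left wire index, right wire index).
# Wires 0..n-1 are the input bits; gate k writes its result to wire n + k.
Gate = Tuple[str, int, int]


def advice(n: int) -> List[Gate]:
    """Advice for inputs of length n >= 1: a chain of XOR gates computing parity.

    The advice depends only on n, never on the input itself, and its
    length grows linearly in n.
    """
    gates: List[Gate] = []
    prev = 0  # wire holding the parity of the bits seen so far
    for i in range(1, n):
        gates.append(("XOR", prev, i))
        prev = n + len(gates) - 1  # output wire of the gate just added
    return gates


def M(x: str, circuit: List[Gate]) -> bool:
    """Polynomial-time machine: evaluate the advised circuit on input x."""
    wires = [c == "1" for c in x]
    for op, a, b in circuit:
        if op == "AND":
            wires.append(wires[a] and wires[b])
        elif op == "OR":
            wires.append(wires[a] or wires[b])
        elif op == "XOR":
            wires.append(wires[a] != wires[b])
        else:
            raise ValueError(f"unknown gate {op!r}")
    return wires[-1]  # the last wire is the circuit's output


def decide(x: str) -> bool:
    """Decide membership for a non-empty x using the advice for its length."""
    return M(x, advice(len(x)))
```

Here `decide("1011")` evaluates a three-gate circuit. The same `M` works for any circuit family, which is the sense in which the circuit itself serves as the advice string.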

Computational Complexity Theory

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and explores the relationships between these classifications. A computational problem is a task solved by a computer. A computational problem is solvable by mechanical application of mathematical steps, such as an algorithm. A problem is regarded as inherently difficult if its solution requires significant resources, whatever the algorithm used. The theory formalizes this intuition by introducing mathematical models of computation to study these problems and quantifying their computational complexity, i.e., the amount of resources needed to solve them, such as time and storage. Other measures of complexity are also used, such as the amount of communication (used in communication complexity), the number of gates in a circuit (used in circuit complexity) and the number of processors (used in parallel computing). O ...

DTIME

In computational complexity theory, DTIME (or TIME) is the computational resource of computation time for a deterministic Turing machine. It represents the amount of time (or number of computation steps) that a "normal" physical computer would take to solve a certain computational problem using a certain algorithm. It is one of the most well-studied complexity resources, because it corresponds so closely to an important real-world resource (the amount of time it takes a computer to solve a problem). The resource DTIME is used to define complexity classes, sets of all of the decision problems which can be solved using a certain amount of computation time. If a problem of input size ''n'' can be solved in ''O''(''f''(''n'')), we have a complexity class DTIME(''f''(''n'')) (or TIME(''f''(''n''))). There is no restriction on the amount of memory space used, but there may be restrictions on some other complexity resources (like alternation). Complexity classes in DTIME Many important complexity classes are defined in terms of DTIME, ...

Lance Fortnow

Lance Jeremy Fortnow (born August 15, 1963) is a computer scientist known for major results in computational complexity and interactive proof systems. Since 2019, he has been at the Illinois Institute of Technology, where he is currently the Dean of the College of Computing. Biography Lance Fortnow received a doctorate in applied mathematics from MIT in 1989, supervised by Michael Sipser. Since graduation, he has been on the faculty of the University of Chicago (1989–1999, 2003–2007), Northwestern University (2008–2012) and the Georgia Institute of Technology (2012–2019), where he was chair of the School of Computer Science. From 1999 to 2003 he was a Senior Research Scientist at the NEC Research Institute. Fortnow was the founding editor-in-chief of the journal ''ACM Transactions on Computation Theory'' in 2009. He was the chair of ACM SIGACT and was succeeded by Paul Beame. He was the chair of the ...

Springer-Verlag

Springer Science+Business Media, commonly known as Springer, is a German multinational publishing company of books, e-books and peer-reviewed journals in science, humanities, technical and medical (STM) publishing. Originally founded in 1842 in Berlin, it expanded internationally in the 1960s, and through mergers in the 1990s and a sale to venture capitalists it fused with Wolters Kluwer and eventually became part of Springer Nature in 2015. Springer has major offices in Berlin, Heidelberg, Dordrecht, and New York City. History Julius Springer founded Springer-Verlag in Berlin in 1842 and his son Ferdinand Springer grew it from a small firm of 4 employees into Germany's then second-largest academic publisher with 65 staff in 1872. In 1964, Springer expanded its business internationally, ...

Isolation Lemma

In theoretical computer science, the term isolation lemma (or isolating lemma) refers to randomized algorithms that reduce the number of solutions to a problem to one, should a solution exist. This is achieved by constructing random constraints such that, with non-negligible probability, exactly one solution satisfies these additional constraints if the solution space is not empty. Isolation lemmas have important applications in computer science, such as the Valiant–Vazirani theorem and Toda's theorem in computational complexity theory. The first isolation lemma was introduced by Valiant and Vazirani, albeit not under that name. Their isolation lemma chooses a random number of random hyperplanes, and has the property that, with non-negligible probability, the intersection of any fixed non-empty solution space with the chosen hyperplanes contains exactly one element. This suffices to show the Valiant–Vazirani theorem: there exists a randomized polynomial-time reduction from the satisfiability prob ...

L (complexity)

In computational complexity theory, L (also known as LSPACE, LOGSPACE or DLOGSPACE) is the complexity class containing decision problems that can be solved by a deterministic Turing machine using a logarithmic amount of writable memory space. Formally, the Turing machine has two tapes, one of which encodes the input and can only be read, whereas the other tape has logarithmic size but can be written as well as read. Logarithmic space is sufficient to hold a constant number of pointers into the input and a logarithmic number of Boolean flags, and many basic logspace algorithms use the memory in this way. Complete problems and logical characterization Every non-trivial problem in L is complete under log-space reductions, so weaker reductions are required to identify meaningful notions of L-completeness, the most common being first-order reductions. A 2004 result by Omer Reingold shows that USTCON, the problem of whether there exists a path between two vertices in a given u ...

L/poly

In computational complexity theory, L/poly is the complexity class of logarithmic space machines with a polynomial amount of advice. L/poly is a non-uniform logarithmic space class, analogous to the non-uniform polynomial time class P/poly. Formally, for a formal language ''L'' to belong to L/poly, there must exist an advice function ''f'' that maps an integer ''n'' to a string of length polynomial in ''n'', and a Turing machine ''M'' with two read-only input tapes and one read-write tape of size logarithmic in the input size, such that an input ''x'' of length ''n'' belongs to ''L'' if and only if machine ''M'' accepts the input ''x'', ''f''(''n''). Alternatively and more simply, ''L'' is in L/poly if and only if it can be recognized by branching programs of polynomial size. One direction of the proof that these two models of computation are equivalent in power is the observation that, if a branching program of polynomial size exists, it can be specified by the advice function and simulated by the Turing machine. In the other direction, a Turi ...
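The branching-program direction can be sketched in Python under some loud assumptions: the program for length ''n'' is a dictionary handed over as advice, and the simulating machine keeps only a single pointer to the current node, which stands in for its logarithmic work tape. The node encoding, the names `advice`, `M` and `decide`, and the example language (strings with at least two 1s) are hypothetical choices made for illustration.

```python
ACCEPT, REJECT = "accept", "reject"


def _next(i: int, count: int, n: int):
    """Successor node after reading position i, or REJECT at the end of input."""
    return (i + 1, count) if i + 1 < n else REJECT


def advice(n: int) -> dict:
    """Branching program of size 2n for 'x contains at least two 1s'.

    Node (i, count) means: about to read bit i, having seen `count` ones
    so far (count is 0 or 1). Each node maps to
    (variable index, successor on 0, successor on 1).
    """
    program = {}
    for i in range(n):
        for count in (0, 1):
            on_zero = _next(i, count, n)
            on_one = ACCEPT if count == 1 else _next(i, 1, n)
            program[(i, count)] = (i, on_zero, on_one)
    return program


def M(x: str, program: dict) -> bool:
    """Follow the program from the start node, storing only the current node."""
    node = (0, 0) if program else REJECT  # empty input has no nodes
    while node not in (ACCEPT, REJECT):
        var, on_zero, on_one = program[node]
        node = on_one if x[var] == "1" else on_zero
    return node == ACCEPT


def decide(x: str) -> bool:
    return M(x, advice(len(x)))
```

The program has 2''n'' nodes, so the advice is of polynomial size, and a node name needs only about log ''n'' bits, which is why one pointer suffices as working memory.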

NP (complexity)

In computational complexity theory, NP (nondeterministic polynomial time) is a complexity class used to classify decision problems. NP is the set of decision problems for which the problem instances, where the answer is "yes", have proofs verifiable in polynomial time by a deterministic Turing machine, or alternatively the set of problems that can be solved in polynomial time by a nondeterministic Turing machine. ''Polynomial time'' refers to how quickly the number of operations needed by an algorithm, relative to the size of the problem, grows. It is therefore a measure of efficiency of an algorithm. * NP is the set of decision problems ''solvable'' in polynomial time by a nondeterministic Turing machine. * NP is the set of decision problems ''verifiable'' in polynomial time by a deterministic Turing machine. The first definition is the basis for the abbreviation NP; "Nondeterministic alg ...
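The verifier-based definition can be made concrete with a small Python sketch for Boolean satisfiability, a standard NP problem. The encoding is an assumption chosen for brevity: a formula is a list of clauses, each clause a list of non-zero integers where `k` means variable ''k'' and `-k` its negation, and the certificate is a dictionary from variables to truth values. The function name `verify` is likewise illustrative.

```python
from typing import Dict, List

Clause = List[int]  # e.g. [1, -2] means (x1 OR NOT x2)


def verify(cnf: List[Clause], assignment: Dict[int, bool]) -> bool:
    """Check a proposed satisfying assignment in time linear in the formula size.

    A literal k > 0 is satisfied when variable k is True; a literal k < 0
    is satisfied when variable |k| is False. Unassigned variables are
    treated as False.
    """
    return all(
        any(assignment.get(abs(lit), False) == (lit > 0) for lit in clause)
        for clause in cnf
    )


# (x1 OR NOT x2) AND (x2 OR x3)
formula = [[1, -2], [2, 3]]
good_certificate = {1: True, 2: False, 3: True}
bad_certificate = {1: False, 2: True, 3: False}
```

Checking a certificate touches each literal once, whereas finding one may require trying many assignments; that asymmetry is what the "verifiable" definition captures.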

Nondeterministic Algorithm

In computer science and computer programming, a nondeterministic algorithm is an algorithm that, even for the same input, can exhibit different behaviors on different runs, as opposed to a deterministic algorithm. Different models of computation give rise to different reasons that an algorithm may be non-deterministic, and different ways to evaluate its performance or correctness: *A concurrent algorithm can perform differently on different runs due to a race condition. This can happen even with a single-threaded algorithm when it interacts with resources external to it. In general, such an algorithm is considered to perform correctly only when ''all'' possible runs produce the desired results. *A probabilistic algorithm's behavior ...

NTIME

In computational complexity theory, the complexity class NTIME(''f''(''n'')) is the set of decision problems that can be solved by a non-deterministic Turing machine which runs in time ''O''(''f''(''n'')). Here ''O'' is the big O notation, ''f'' is some function, and ''n'' is the size of the input (for which the problem is to be decided). Meaning This means that there is a non-deterministic machine which, for a given input of size ''n'', will run in time ''O''(''f''(''n'')) (i.e. within a constant multiple of ''f''(''n''), for ''n'' greater than some value), and will always "reject" the input if the answer to the decision problem is "no" for that input, while if the answer is "yes" the machine will "accept" that input for at least one computation path. Equivalently, there is a deterministic Turing machine ''M'' that runs in time ''O''(''f''(''n'')) and is able to check an ''O''(''f''(''n''))-length certificate for an input; if the input is a "yes" instance, then at least one certifi ...

Halting Problem

In computability theory, the halting problem is the problem of determining, from a description of an arbitrary computer program and an input, whether the program will finish running, or continue to run forever. The halting problem is ''undecidable'', meaning that no general algorithm exists that solves the halting problem for all possible program–input pairs. The problem comes up often in discussions of computability since it demonstrates that some functions are mathematically definable but not computable. A key part of the formal statement of the problem is a mathematical definition of a computer and program, usually via a Turing machine. The proof then shows, for any program ''f'' that might determine whether programs halt, that a "pathological" program ''g'' exists for which ''f'' makes an incorrect determination. Specifically, ''g'' is the program that, when called with some input, passes its own s ...

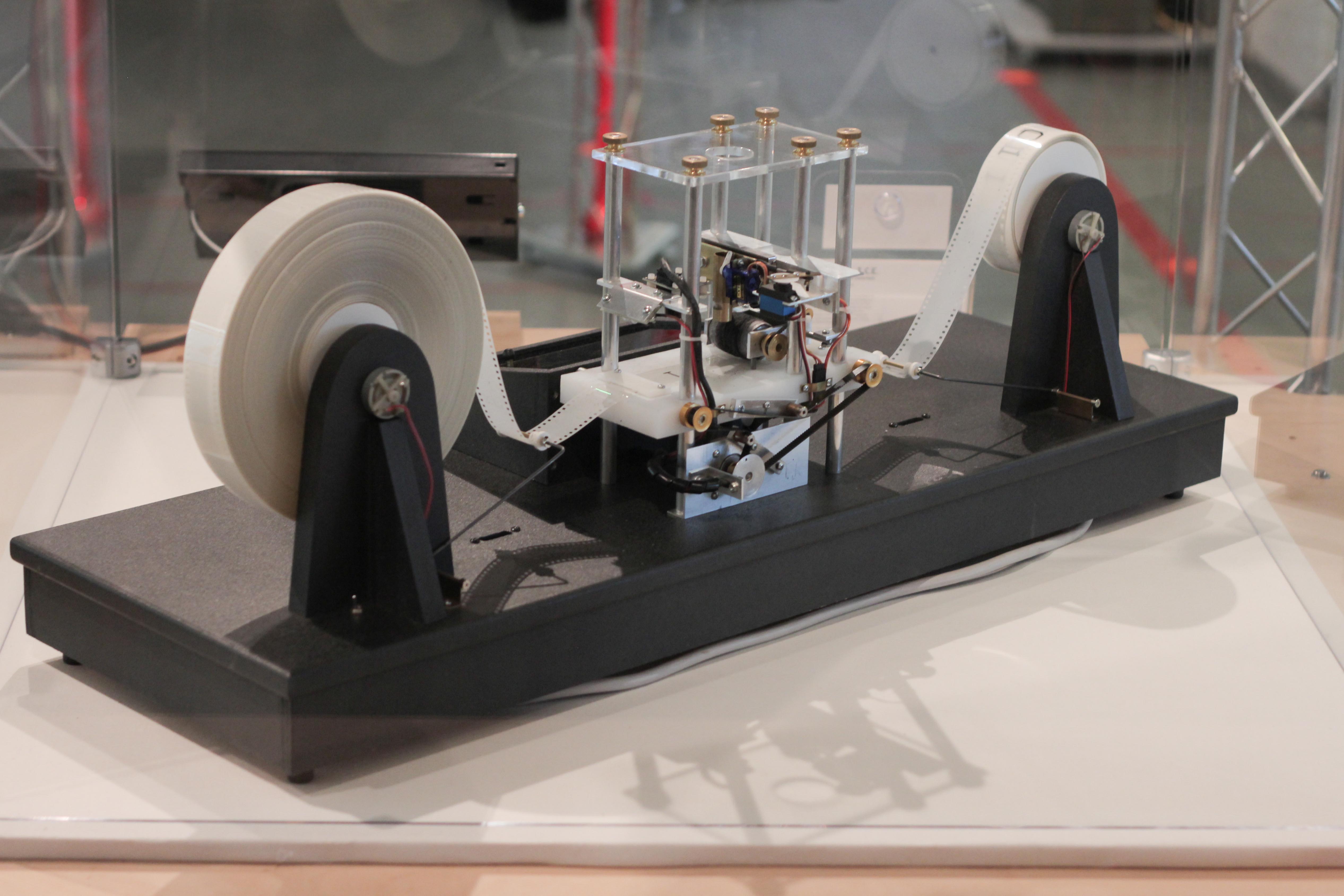

Turing Machine

A Turing machine is a mathematical model of computation describing an abstract machine that manipulates symbols on a strip of tape according to a table of rules. Despite the model's simplicity, it is capable of implementing any computer algorithm. The machine operates on an infinite memory tape divided into discrete cells, each of which can hold a single symbol drawn from a finite set of symbols called the alphabet of the machine. It has a "head" that, at any point in the machine's operation, is positioned over one of these cells, and a "state" selected from a finite set of states. At each step of its operation, the head reads the symbol in its cell. Then, based on the symbol and the machine's own present state, the machine writes a symbol into the same cell, and moves the head one step to the left or the right, or halts the computation. The choice of which replacement symbol to write, which direction to move the head, and whet ...
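The step cycle described above (read, consult the rule table, write, move, or halt) can be sketched as a short Python simulator. This is a simplified illustration under stated assumptions: the rule table is a dictionary from (state, symbol) to (symbol to write, move, next state), the machine halts when no rule applies, and the tape is unbounded in both directions with `_` as the blank. The names `run` and `FLIP` and the bit-flipping example are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Tuple

# (state, symbol read) -> (symbol to write, "L" or "R", next state)
Rules = Dict[Tuple[str, str], Tuple[str, str, str]]


def run(rules: Rules, tape: str, state: str = "q0",
        blank: str = "_", max_steps: int = 10_000) -> Tuple[str, str]:
    """Run the machine until no rule applies; return (final state, tape contents)."""
    # Cells never written hold the blank symbol, modelling an infinite tape.
    cells = defaultdict(lambda: blank, enumerate(tape))
    head = 0
    for _ in range(max_steps):
        key = (state, cells[head])
        if key not in rules:
            break  # no applicable rule: the machine halts
        write, move, state = rules[key]
        cells[head] = write
        head += 1 if move == "R" else -1
    else:
        # The step budget is a guard for this sketch, not part of the model.
        raise RuntimeError("step budget exhausted before the machine halted")
    contents = "".join(cells[i] for i in sorted(cells))
    return state, contents.strip(blank)


# Example table: sweep right, inverting every bit, and halt at the first blank.
FLIP: Rules = {
    ("q0", "0"): ("1", "R", "q0"),
    ("q0", "1"): ("0", "R", "q0"),
}
```

Calling `run(FLIP, "1011")` walks the head across the four input cells and stops when it reads the blank that follows them, since the table has no rule for that symbol.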