Weak Duality

In applied mathematics, weak duality is a concept in optimization which states that the duality gap is always greater than or equal to 0. That is, the objective value of any feasible solution to the dual (minimization) problem is always greater than or equal to the objective value of any feasible solution to the associated primal (maximization) problem. This is in contrast to strong duality, which holds only in certain cases.

Uses. Many primal-dual approximation algorithms are based on the principle of weak duality.

Weak duality theorem. The primal problem:
Maximize c^T x subject to A x \leq b, x \geq 0;
The dual problem:
Minimize b^T y subject to A^T y \geq c, y \geq 0.
The weak duality theorem states c^T x \leq b^T y. Namely, if (x_1, x_2, ..., x_n) is a feasible solution for the primal maximization linear program and (y_1, y_2, ..., y_m) is a feasible solution for the dual minimization linear program, then the weak duality theorem can be stated as
\sum_{j=1}^{n} c_j x_j \leq \sum_{i=1}^{m} b_i y_i,
where c_j and b_i are the coefficients of the respective objective functions.

Proof. c^T x \leq (A^T y)^T x = y^T A x \leq y^T b = b^T y, where the first inequality uses A^T y \geq c together with x \geq 0, and the second uses A x \leq b together with y \geq 0.

Generalizations. More generally, i ...
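As a quick numerical illustration of the theorem, the sketch below checks c^T x \leq b^T y for every pairing of a few hand-picked feasible points. The matrix A, the vectors b and c, and the sample points are hypothetical example data, not taken from the text; exact rational arithmetic (Python's fractions module) avoids rounding noise in the comparisons.

```python
from fractions import Fraction
from itertools import product

# Hypothetical example data (not from the text):
#   primal: maximize c^T x  subject to  A x <= b, x >= 0
#   dual:   minimize b^T y  subject to  A^T y >= c, y >= 0
A = [[1, 1],
     [1, 3]]
b = [4, 6]
c = [1, 2]

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def primal_feasible(x):
    # x >= 0 and each row of A x is at most the matching entry of b
    return all(xi >= 0 for xi in x) and all(dot(row, x) <= bi for row, bi in zip(A, b))

def dual_feasible(y):
    # y >= 0 and each column of A (a row of A^T) dotted with y is at least c_j
    columns = list(zip(*A))
    return all(yi >= 0 for yi in y) and all(dot(col, y) >= cj for col, cj in zip(columns, c))

# A few hand-picked feasible points (hypothetical).
primal_points = [(0, 0), (1, 1), (4, 0), (3, 1)]
dual_points = [(1, 1), (2, 0), (Fraction(1, 2), Fraction(1, 2))]

assert all(primal_feasible(x) for x in primal_points)
assert all(dual_feasible(y) for y in dual_points)

# Weak duality: c^T x <= b^T y for every feasible pair (x, y).
for x, y in product(primal_points, dual_points):
    assert dot(c, x) <= dot(b, y)

best_primal = max(dot(c, x) for x in primal_points)
best_dual = min(dot(b, y) for y in dual_points)
print(best_primal, best_dual)  # 5 5
```

Because the pair x = (3, 1), y = (1/2, 1/2) attains equality, weak duality alone certifies that both points are optimal for their respective problems.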

Applied Mathematics

Applied mathematics is the application of mathematical methods in different fields such as physics, engineering, medicine, biology, finance, business, computer science, and industry. Thus, applied mathematics is a combination of mathematical science and specialized knowledge. The term "applied mathematics" also describes the professional specialty in which mathematicians work on practical problems by formulating and studying mathematical models. In the past, practical applications have motivated the development of mathematical theories, which then became the subject of study in pure mathematics, where abstract concepts are studied for their own sake. The activity of applied mathematics is thus intimately connected with research in pure mathematics.

History. Historically, applied mathematics consisted principally of applied analysis, most notably differential equations; approximation theory (broadly construed, to include representations, asymptotic methods, variational ...

Optimization

Mathematical optimization (alternatively spelled optimisation) or mathematical programming is the selection of a best element, with regard to some criterion, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems of various kinds arise in all quantitative disciplines, from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries.

In the more general approach, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values from within an allowed set and computing the value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics. More generally, opti ...
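A minimal sketch of the definition for a small discrete problem, assuming a finite allowed set so that every input value can be tried: the objective f and the allowed set (the integers 0 to 5) are hypothetical choices. Exhaustive search like this only scales to tiny sets; practical solvers exploit the structure of the problem instead.

```python
def minimize_over(allowed, f):
    """Return (best input, best value) by evaluating f on every allowed input."""
    best = min(allowed, key=f)
    return best, f(best)

# Hypothetical objective: a real function of one variable.
def f(x):
    return (x - 2.3) ** 2

# Hypothetical allowed set: the integers 0..5.
best_x, best_val = minimize_over(range(0, 6), f)
print(best_x, best_val)
```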

Duality Gap

In optimization problems in applied mathematics, the duality gap is the difference between the primal and dual optimal values. If d^* is the optimal dual value and p^* is the optimal primal value, then the duality gap is equal to p^* - d^*. This value is always greater than or equal to 0 (for minimization problems). The duality gap is zero if and only if strong duality holds. Otherwise the gap is strictly positive and only weak duality holds.

In general, consider two dual pairs of separated locally convex spaces \left(X, X^*\right) and \left(Y, Y^*\right). Given a function f: X \to \mathbb{R} \cup \{+\infty\}, we can define the primal problem by
\inf_{x \in X} f(x).
If there are constraint conditions, these can be built into the function f by letting f = f + I_{\mathrm{constraints}}, where I is the indicator function. Then let F: X \times Y \to \mathbb{R} \cup \{+\infty\} be a perturbation function such that F(x, 0) = f(x). The duality gap is the difference given by
\inf_{x \in X} [F(x, 0)] - \sup_{y^* \in Y^*} [-F^*(0, y^*)]
where F^* is the convex conj ...
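The perturbation-function construction above is general; the sketch below instead uses the more elementary Lagrangian dual (a swapped-in construction, not the one described in the text) to exhibit a strictly positive gap p^* - d^*. The toy problem, minimizing -x over the integers {0, 1, 2} subject to 2x - 3 \leq 0, is hypothetical; its non-convex (integer) feasible set is what allows the gap. The dual optimum is approximated by a grid over the multiplier, which here happens to contain the exact maximizer 0.5.

```python
# Hypothetical toy problem (not from the text):
#   minimize f(x) = -x  over x in X = {0, 1, 2}  subject to  h(x) = 2x - 3 <= 0
X = [0, 1, 2]

def f(x):
    return -x

def h(x):
    return 2 * x - 3

# Primal optimal value p*: enumerate the feasible points (x = 0 and x = 1).
p_star = min(f(x) for x in X if h(x) <= 0)

def dual_function(lam):
    # Lagrange dual function g(lam) = min over X of f(x) + lam * h(x), for lam >= 0.
    return min(f(x) + lam * h(x) for x in X)

# Dual optimal value d*: approximate the sup over lam >= 0 on the grid 0, 0.01, ..., 5.
grid = [i / 100 for i in range(501)]
d_star = max(dual_function(lam) for lam in grid)

gap = p_star - d_star
print(p_star, d_star, gap)  # -1 -1.5 0.5
```

Every grid value of g stays at or below p^* = -1, as weak duality requires; the best lower bound the dual can certify is -1.5.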

Primal Problem

In mathematical optimization theory, duality or the duality principle is the principle that optimization problems may be viewed from either of two perspectives, the primal problem or the dual problem. If the primal is a minimization problem then the dual is a maximization problem (and vice versa). The objective value of any feasible solution to the primal (minimization) problem is at least as large as that of any feasible solution to the dual (maximization) problem. Therefore, the optimal value of the primal is an upper bound on the optimal value of the dual, and the optimal value of the dual is a lower bound on the optimal value of the primal. This fact is called weak duality. In general, the optimal values of the primal and dual problems need not be equal. Their difference is called the duality gap. For convex optimization problems, the duality gap is zero under a constraint qualification condition. This fact is called strong duality.

Dual problem. Usually the term "dual problem" refers to the Lagrangian dual problem but other ...
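To make the primal/dual pairing concrete for linear programs, the sketch below forms the dual of a problem in the symmetric form max c^T x subject to A x \leq b, x \geq 0, whose dual is min b^T y subject to A^T y \geq c, y \geq 0 (this standard form is an assumption; the text does not fix one). Writing the dual back in the same maximization form, as max (-b)^T y subject to (-A^T) y \leq -c, y \geq 0, shows that taking the dual twice returns the original problem. The tuple layout (c, A, b) and the name lp_dual are hypothetical.

```python
def transpose(M):
    return [list(col) for col in zip(*M)]

def lp_dual(lp):
    """Dual of the canonical LP  max c^T x  s.t.  A x <= b, x >= 0.

    The dual  min b^T y  s.t.  A^T y >= c, y >= 0  is returned in the same
    canonical form:  max (-b)^T y  s.t.  (-A^T) y <= -c, y >= 0.
    """
    c, A, b = lp
    return ([-bi for bi in b],
            [[-a for a in row] for row in transpose(A)],
            [-cj for cj in c])

# Hypothetical primal with 3 variables and 2 constraints: (c, A, b).
primal = ([1, 1, 1], [[1, 2, 0], [3, 0, 1]], [4, 5])
dual = lp_dual(primal)
print(dual)  # ([-4, -5], [[-1, -3], [-2, 0], [0, -1]], [-1, -1, -1])

# The dual of the dual is the primal again.
assert lp_dual(dual) == primal
```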

Strong Duality

Strong duality is a condition in mathematical optimization in which the primal optimal objective and the dual optimal objective are equal. This is in contrast to weak duality, under which the primal problem has an optimal value smaller than or equal to that of the dual problem; in other words, the duality gap is greater than or equal to zero.

Characterizations. Strong duality holds if and only if the duality gap is equal to 0.

Sufficient conditions. Sufficient conditions comprise:
* F = F^{**}, where F is the perturbation function relating the primal and dual problems and F^{**} is the biconjugate of F (follows by construction of the duality gap)
* F is convex and lower semi-continuous (equivalent to the first point by the Fenchel–Moreau theorem)
* the primal problem is a linear optimization problem
* Slater's condition for a convex optimization problem

See also:
* Convex optimization: Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex function ...
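The Slater case can be checked numerically on a hypothetical one-dimensional problem, minimizing x^2 subject to 1 - x \leq 0. The point x = 2 is strictly feasible, so Slater's condition holds, and minimizing the Lagrangian x^2 + \lambda(1 - x) over x (at x = \lambda/2) gives the dual function g(\lambda) = \lambda - \lambda^2/4. The sketch searches grids for both optimal values; the grids are an illustrative simplification that here contain the exact optimizers x = 1 and \lambda = 2.

```python
# Hypothetical convex problem (not from the text):
#   minimize f(x) = x**2  subject to  h(x) = 1 - x <= 0
def f(x):
    return x * x

def h(x):
    return 1 - x

def dual_function(lam):
    # The Lagrangian x**2 + lam * (1 - x) is minimized over x at x = lam / 2,
    # which gives g(lam) = lam - lam**2 / 4.
    return lam - lam * lam / 4

# Slater's condition: a strictly feasible point exists.
assert h(2) < 0

# Grid search over feasible x in [1, 11] and multipliers lam in [0, 10].
p_star = min(f(1 + i / 100) for i in range(1001))
d_star = max(dual_function(i / 100) for i in range(1001))

print(p_star, d_star, p_star - d_star)  # 1.0 1.0 0.0
```

Here the gap vanishes, as strong duality predicts; compare a non-convex problem, where the same grid-based computation can leave a strictly positive gap.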

Approximation Algorithm

In computer science and operations research, approximation algorithms are efficient algorithms that find approximate solutions to optimization problems (in particular NP-hard problems) with provable guarantees on the distance of the returned solution to the optimal one. Approximation algorithms naturally arise in the field of theoretical computer science as a consequence of the widely believed P ≠ NP conjecture. Under this conjecture, a wide class of optimization problems cannot be solved exactly in polynomial time. The field of approximation algorithms, therefore, tries to understand how closely it is possible to approximate optimal solutions to such problems in polynomial time. In an overwhelming majority of cases, the guarantee of such algorithms is a multiplicative one, expressed as an approximation ratio or approximation factor; that is, the optimal solution is always guaranteed to be within a (predetermined) multiplicative factor of the returned solution. However, there are ...
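As an illustration of a multiplicative guarantee, the sketch below uses the classic maximal-matching heuristic for minimum vertex cover; the problem and the algorithm are a swapped-in example not discussed in the text. Any vertex cover must contain at least one endpoint of each matching edge, so the matching size is a lower bound on the optimum (an instance of weak duality between the vertex-cover LP relaxation and the fractional matching LP), and taking both endpoints gives a cover at most twice optimal. The brute-force optimum is included only to check the ratio on a tiny hypothetical graph.

```python
from itertools import combinations

def matching_vertex_cover(edges):
    """2-approximate vertex cover from a greedily built maximal matching."""
    cover = set()
    matching = []
    for u, v in edges:
        if u not in cover and v not in cover:
            # Neither endpoint is covered yet, so (u, v) joins the matching.
            matching.append((u, v))
            cover.update((u, v))
    return cover, matching

def is_cover(edges, cover):
    return all(u in cover or v in cover for u, v in edges)

def optimal_cover_size(edges):
    """Exact minimum vertex cover size by brute force (tiny graphs only)."""
    vertices = sorted({x for edge in edges for x in edge})
    for k in range(len(vertices) + 1):
        if any(is_cover(edges, set(s)) for s in combinations(vertices, k)):
            return k

# Hypothetical graph: a 5-cycle 1-2-3-4-5-1 plus the chord 2-5.
edges = [(1, 2), (2, 3), (3, 4), (4, 5), (1, 5), (2, 5)]
cover, matching = matching_vertex_cover(edges)
opt = optimal_cover_size(edges)
print(len(cover), len(matching), opt)  # 4 2 3
```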

Linear Program

Linear programming (LP), also called linear optimization, is a method to achieve the best outcome (such as maximum profit or lowest cost) in a mathematical model whose requirements are represented by linear relationships. Linear programming is a special case of mathematical programming (also known as mathematical optimization). More formally, linear programming is a technique for the optimization of a linear objective function, subject to linear equality and linear inequality constraints. Its feasible region is a convex polytope, which is a set defined as the intersection of finitely many half spaces, each of which is defined by a linear inequality. Its objective function is a real-valued affine (linear) function defined on this polyhedron. A linear programming algorithm finds a point in the polytope where this function has the smallest (or largest) value if such a point exists. Linear programs are problems that can be expressed in canonical form as
\max_{x} \; c^T x \quad \text{subject to} \quad A x \leq b, \; x \geq 0 ...
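For a bounded two-variable problem, the fact that an optimum is attained at a point of the polytope can be seen directly: the sketch below intersects every pair of constraint lines, keeps the intersection points that satisfy all constraints (the vertices), and evaluates the objective there. This brute-force vertex enumeration is a swapped-in teaching device, not the simplex or interior-point methods used in practice; it assumes the feasible region is nonempty and bounded, and the example data are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

def solve_2d_lp(c, constraints):
    """Maximize c[0]*x + c[1]*y subject to a*x + b*y <= e for each (a, b, e).

    Assumes integer data and a nonempty, bounded feasible region, so the
    maximum is attained at a vertex (an intersection of two constraint lines).
    """
    best_point, best_value = None, None
    for (a1, b1, e1), (a2, b2, e2) in combinations(constraints, 2):
        det = a1 * b2 - a2 * b1
        if det == 0:
            continue  # parallel lines: no unique intersection
        # Cramer's rule, kept exact with Fraction.
        x = Fraction(e1 * b2 - e2 * b1, det)
        y = Fraction(a1 * e2 - a2 * e1, det)
        if all(a * x + b * y <= e for a, b, e in constraints):
            value = c[0] * x + c[1] * y
            if best_value is None or value > best_value:
                best_point, best_value = (x, y), value
    return best_point, best_value

# Hypothetical LP: maximize x + 2y  s.t.  x + y <= 4,  x + 3y <= 6,  x >= 0,  y >= 0
# (x >= 0 and y >= 0 are written as -x <= 0 and -y <= 0).
constraints = [(1, 1, 4), (1, 3, 6), (-1, 0, 0), (0, -1, 0)]
point, value = solve_2d_lp((1, 2), constraints)
print(point, value)  # optimum at the vertex (3, 1) with value 5
```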

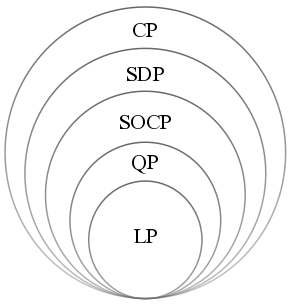

Convex Optimization

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets (or, equivalently, maximizing concave functions over convex sets). Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard. Convex optimization has applications in a wide range of disciplines, such as automatic control systems, estimation and signal processing, communications and networks, electronic circuit design, data analysis and modeling, finance, statistics (optimal experimental design), and structural optimization, where the approximation concept has proven to be efficient. With recent advancements in computing and optimization algorithms, convex programming is nearly as straightforward as linear programming.

Definition. A convex optimization problem is an optimization problem in which the objective function is a convex function and the feasible set is a c ...
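A minimal sketch of one simple method for a convex problem, projected gradient descent (a swapped-in illustration; the text names no particular algorithm): it minimizes the hypothetical convex objective f(x, y) = (x - 2)^2 + (y + 1)^2 over the convex box [0, 1]^2 by taking a gradient step and clipping back into the box. The step size 0.1 is an assumption, chosen below 1/L, where L = 2 is the Lipschitz constant of the gradient.

```python
def project_to_box(p, lo=0.0, hi=1.0):
    """Euclidean projection onto the box [lo, hi]^n (clip each coordinate)."""
    return [min(max(pi, lo), hi) for pi in p]

def grad(p):
    # Gradient of the hypothetical convex objective f(x, y) = (x - 2)**2 + (y + 1)**2.
    x, y = p
    return [2 * (x - 2), 2 * (y + 1)]

def projected_gradient_descent(p0, step=0.1, iters=200):
    p = list(p0)
    for _ in range(iters):
        g = grad(p)
        # Gradient step, then project back onto the feasible convex set.
        p = project_to_box([pi - step * gi for pi, gi in zip(p, g)])
    return p

solution = projected_gradient_descent([0.5, 0.5])
print(solution)  # [1.0, 0.0]
```

Because both the objective and the feasible set are convex, the point (1, 0) reached here is the global minimizer over the box, not merely a local one.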

Max–min Inequality

In mathematics, the max–min inequality is as follows: for any function f: Z \times W \to \mathbb{R},
\sup_{z \in Z} \inf_{w \in W} f(z, w) \leq \inf_{w \in W} \sup_{z \in Z} f(z, w).
When equality holds, one says that f, W, and Z satisfy a strong max–min property (or a saddle-point property). The example function f(z, w) = \sin(z + w) illustrates that equality does not hold for every function: with Z = W = \mathbb{R}, the left-hand side is -1 and the right-hand side is 1. A theorem giving conditions on f, W, and Z which guarantee the saddle-point property is called a minimax theorem.

Proof. Define g(z) \triangleq \inf_{w \in W} f(z, w). For all z \in Z, we get g(z) \leq f(z, w) for all w \in W, by definition of the infimum being a lower bound. Next, for all w \in W, f(z, w) \leq \sup_{z' \in Z} f(z', w) for all z \in Z, by definition of the supremum being an upper bound. Thus, for all z \in Z and w \in W, g(z) \leq f(z, w) \leq \sup_{z' \in Z} f(z', w), making h(w) \triangleq \sup_{z' \in Z} f(z', w) an upper bound on g(z) for any choice of w \in W. Because the supremum is the least upper bound, \sup_{z \in Z} g( ...
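For finite sets Z and W, the suprema and infima become maxima and minima over a table of values F[z][w], and the inequality can be checked directly. The sketch below samples the example function f(z, w) = \sin(z + w) at eight equally spaced points in [0, 2\pi) (a hypothetical discretization) and finds max-min close to -1 and min-max close to 1, so the inequality is strict.

```python
import math

def max_min(F):
    """Max over rows z of the min over columns w of F[z][w]."""
    return max(min(row) for row in F)

def min_max(F):
    """Min over columns w of the max over rows z of F[z][w]."""
    return min(max(col) for col in zip(*F))

# f(z, w) = sin(z + w) sampled on Z = W = {2*pi*k/8 : k = 0, ..., 7}.
pts = [2 * math.pi * k / 8 for k in range(8)]
F = [[math.sin(z + w) for w in pts] for z in pts]

lo, hi = max_min(F), min_max(F)
print(lo, hi)  # approximately -1 and 1
assert lo <= hi  # the max-min inequality
```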
