
VIMCAS

VIMCAS (Vertical Interval Multiple Channel Audio System) is a dual-channel Sound-in-Syncs mechanism for transmitting digitally encoded audio within a composite video analogue television signal. Invented by the Australian company IRT in the 1980s, VIMCAS transmits two channels of PCM-encoded (i.e. digital) audio during the vertical blanking interval of a composite video signal. The encoded audio was transmitted over six horizontal scan lines during that interval, with the digitally encoded signal placed onto a series of mid-grey pedestals, in much the same way that the colour subcarrier is placed on top of the monochrome signal. As with the colour subcarrier, each line offers 4.7 kHz of bandwidth, so six lines would provide about 28 kHz (actually slightly less, because there is deliberate redundancy between the final packet of encoded audio on one line and the first packet of encoded audio on the next, in order to avoid signal corruption). This could be used as a ...
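As a quick check of the arithmetic in the entry above, the following minimal Python sketch multiplies the per-line figure by the number of lines. It takes the numbers quoted in the text at face value; the variable names are illustrative, and the redundancy overhead is not quantified in the text, so it is left out.

```python
# Figures quoted in the text above; the names are illustrative only.
LINES_PER_INTERVAL = 6
BANDWIDTH_PER_LINE_KHZ = 4.7

# Upper bound on the total, before the inter-line redundancy is subtracted.
total_khz = LINES_PER_INTERVAL * BANDWIDTH_PER_LINE_KHZ
print(f"Upper bound before redundancy: {total_khz:.1f} kHz")
```

The product is 28.2 kHz, which the text rounds to 28 kHz.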

NICAM 728

Near Instantaneous Companded Audio Multiplex (NICAM) is an early form of lossy compression for digital audio. It was originally developed in the early 1970s for point-to-point links within broadcasting networks (Croll, M.G., Osborne, D.W. and Spicer, C.R. (1974), *Digital sound signals: the present BBC distribution system and a proposal for bit-rate reduction by digital companding*, IEE Conference publication No. 119, pp. 90–96). In the 1980s, broadcasters began to use NICAM compression for transmissions of stereo TV sound to the public.

History (near-instantaneous companding): The idea was first described in 1964. In that proposal, the 'ranging' was to be applied to the analogue signal before the analogue-to-digital converter (ADC) and after the digital-to-analogue converter (DAC). The application of this to broadcasting, in which the companding was to be done entirely digitally after the ADC and before the DAC, was described in a 1972 BBC Research Report.

Point-to-point links: NIC ...
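The idea of companding done entirely digitally, block by block, can be sketched in Python as follows. This is a simplified illustration and not the NICAM bitstream format: the 14-bit input and 10-bit output widths are assumptions, and the function names and the single shared shift per block are hypothetical choices made to keep the example short.

```python
def compand_block(block, in_bits=14, out_bits=10):
    """Reduce one block of signed integer samples to out_bits using one shared shift.

    The shift (the block's 'range') is chosen from the loudest sample, so quiet
    blocks keep their low-order bits and loud blocks drop them.
    """
    peak = max(abs(s) for s in block)
    max_shift = in_bits - out_bits
    limit = 2 ** (out_bits - 1)
    shift = 0
    while shift < max_shift and (peak >> shift) >= limit:
        shift += 1
    return shift, [s >> shift for s in block]


def expand_block(shift, coded):
    """Restore the original scale; the bits dropped by the shift are lost."""
    return [c << shift for c in coded]


quiet = [3, -7, 100, -200]
loud = [8000, -8192, 1234, -5]
for block in (quiet, loud):
    shift, coded = compand_block(block)
    print(shift, coded, expand_block(shift, coded))
```

In this sketch the quiet block passes through unchanged, while the loud block is reconstructed with an error smaller than two to the power of the chosen shift.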

Sound-in-Syncs

Sound-in-Syncs is a method of multiplexing sound and video signals into a channel designed to carry video, in which data representing the sound is inserted into the line synchronising pulse of an analogue television waveform. This is used on point-to-point links within broadcasting networks, including studio/transmitter links (STL). It is not used for broadcasts to the public.

History: The technique was first developed by the BBC in the late 1960s. In 1966, the corporation's Research Department made a feasibility study of the use of pulse-code modulation (PCM) for transmitting television sound during the synchronising period of the video signal. This had several advantages: it removed the necessity for a separate sound link, reduced the possibility of operational errors and offered improved sound quality and reliability (Pawley, E. (1972), *BBC Engineering 1922-1972*, pp. 506-7, 522, BBC).

Awards: Sound-in-Syncs and its R&D engineers have won several awards, including:

* The Roya ...

Dual-channel

In the fields of digital electronics and computer hardware, multi-channel memory architecture is a technology that increases the data transfer rate between DRAM and the memory controller by adding more channels of communication between them. Theoretically, this multiplies the data rate by exactly the number of channels present. Dual-channel memory employs two channels. The technique goes back as far as the 1960s, having been used in the IBM System/360 Model 91 and in the CDC 6600. Modern high-end desktop and workstation processors such as the AMD Ryzen Threadripper series and the Intel Core i9 Extreme Edition lineup support quad-channel memory. Server processors from the AMD Epyc series and the Intel Xeon platforms support memory layouts ranging from quad-channel up to octa-channel. In March 2010, AMD released Socket G34 and Magny-Cours Opteron 6100 series processors with support for quad-channel memory. In 2006, Intel released chipsets that s ...
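The theoretical scaling described above can be illustrated with a short Python sketch. The function name is hypothetical, and the example figures (3200 million transfers per second on a 64-bit channel, as in DDR4-3200) are assumptions chosen for illustration, not values from the entry.

```python
def peak_bandwidth_gb_s(transfers_per_second, bus_width_bits, channels):
    """Theoretical peak bandwidth in GB/s (10**9 bytes per second)."""
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second * bytes_per_transfer * channels / 1e9


# Assumed example: 3200 MT/s on a 64-bit channel.
single = peak_bandwidth_gb_s(3200e6, 64, channels=1)
dual = peak_bandwidth_gb_s(3200e6, 64, channels=2)
print(f"single-channel: {single:.1f} GB/s, dual-channel: {dual:.1f} GB/s")
```

These are upper bounds only; real workloads rarely reach the full multiple, which is why the entry says "theoretically".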

Composite Video

Composite video is an analog video signal format that carries standard-definition video (typically at 525 lines or 625 lines) as a single channel. Video information is encoded on one channel, unlike the higher-quality S-Video (two channels) and the even higher-quality component video (three or more channels). In all of these video formats, audio is carried on a separate connection. Composite video is also known by the initials CVBS for composite video baseband signal or color, video, blanking and sync, or is simply referred to as *SD video* for the standard-definition television signal it conveys. There are three dominant variants of composite video signals, corresponding to the analog color system used: NTSC, PAL, and SECAM. Usually composite video is carried by a yellow RCA connector, but other connections are used in professional settings.

Signal components: A composite video signal combines, on one wire, the video information required to recreate a color picture, a ...

Pulse-code Modulation

Pulse-code modulation (PCM) is a method used to digitally represent sampled analog signals. It is the standard form of digital audio in computers, compact discs, digital telephony and other digital audio applications. In a PCM stream, the amplitude of the analog signal is sampled regularly at uniform intervals, and each sample is quantized to the nearest value within a range of digital steps. Linear pulse-code modulation (LPCM) is a specific type of PCM in which the quantization levels are linearly uniform. This is in contrast to PCM encodings in which quantization levels vary as a function of amplitude (as with the A-law algorithm or the μ-law algorithm). Though *PCM* is a more general term, it is often used to describe data encoded as LPCM. A PCM stream has two basic properties that determine the stream's fidelity to the original analog signal: the sampling rate, which is the number of times per second that samples are taken; and the bit depth, which determines the ...
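The two steps described above, uniform sampling and quantization to the nearest step, can be sketched in Python as follows. This is a minimal LPCM illustration under stated assumptions: the function names, the 1 kHz test tone, the 8 kHz sampling rate and the 8-bit depth are all chosen for the example and do not come from the entry.

```python
import math


def pcm_encode(signal, sample_rate, duration, bit_depth):
    """Sample signal(t), with values in [-1, 1], and quantize to signed integers."""
    levels = 2 ** (bit_depth - 1) - 1          # e.g. 127 for 8-bit audio
    count = int(sample_rate * duration)
    samples = []
    for i in range(count):
        t = i / sample_rate                    # uniform sampling instants
        x = max(-1.0, min(1.0, signal(t)))     # clip to the representable range
        samples.append(round(x * levels))      # nearest linear quantization step
    return samples


def pcm_decode(samples, bit_depth):
    """Map the integer codes back to amplitudes in [-1, 1]."""
    levels = 2 ** (bit_depth - 1) - 1
    return [s / levels for s in samples]


def tone(t):
    """Assumed test signal: a 1 kHz sine wave."""
    return math.sin(2 * math.pi * 1000 * t)


codes = pcm_encode(tone, sample_rate=8000, duration=0.001, bit_depth=8)
decoded = pcm_decode(codes, bit_depth=8)
print(codes)
```

Raising the sampling rate adds more codes per second, and raising the bit depth shrinks the quantization step, so the decoded values track the original signal more closely.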

Vertical Blanking Interval

In a raster scan display, the vertical blanking interval (VBI), also known as the vertical interval or VBLANK, is the time between the end of the final visible line of a frame or field and the beginning of the first visible line of the next frame. It is present in analog television, VGA, DVI and other signals. In raster cathode ray tube displays, the blank level is usually supplied during this period to avoid painting the retrace line (see raster scan for details); signal sources such as television broadcasts do not supply image information during the blanking period. Digital displays usually will not display an incoming data stream during the blanking interval even if one is present. The VBI was originally needed because of the inductive inertia of the magnetic coils which deflect the electron beam vertically in a CRT; the magnetic field, and hence the position being drawn, cannot change instantly. Additionally, the speed of older circuits was limited. For horizontal deflection, the ...

Subcarrier

A subcarrier is a sideband of a radio frequency carrier wave, which is modulated to send additional information. Examples include the provision of colour in a black and white television system or the provision of stereo in a monophonic radio broadcast. There is no physical difference between a carrier and a subcarrier; the "sub" implies that it has been derived from a carrier, which has been amplitude modulated by a steady signal and has a constant frequency relation to it.

FM stereo: Stereo broadcasting is made possible by using a subcarrier on FM radio stations, which takes the left channel and "subtracts" the right channel from it, essentially by hooking up the right-channel wires backward (reversing polarity) and then joining left and reversed-right. The result is modulated with suppressed carrier AM, more correctly called sum and difference modulation or SDM, at 38 kHz in the FM signal, which is joined at 2% modulation with the mono left+right audio (which ran ...
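The sum and difference step described for FM stereo can be sketched in Python as follows. The sketch covers only the matrixing of left and right into sum and difference signals and back; the 38 kHz modulation stage is omitted. The function names are hypothetical, and the unscaled sums are an assumption (some conventions halve both signals at the encoder instead).

```python
def matrix_encode(left, right):
    """Form the mono (left + right) and difference (left - right) signals."""
    mono = [l + r for l, r in zip(left, right)]
    diff = [l - r for l, r in zip(left, right)]
    return mono, diff


def matrix_decode(mono, diff):
    """Recover left and right from the sum and difference signals."""
    left = [(m + d) / 2 for m, d in zip(mono, diff)]
    right = [(m - d) / 2 for m, d in zip(mono, diff)]
    return left, right


left = [0.5, -0.25, 0.0]
right = [0.25, 0.25, -0.5]
mono, diff = matrix_encode(left, right)
print(mono, diff)
print(matrix_decode(mono, diff))
```

A mono receiver can use the sum signal on its own, which is why the difference signal is the part carried on the subcarrier.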

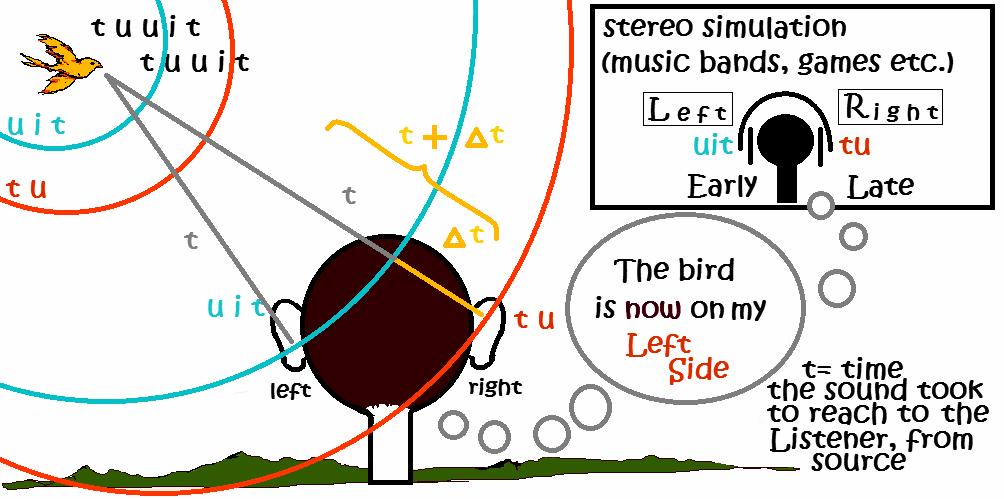

Stereo Audio

Stereophonic sound, or more commonly stereo, is a method of sound reproduction that recreates a multi-directional, 3-dimensional audible perspective. This is usually achieved by using two independent audio channels through a configuration of two loudspeakers (or stereo headphones) in such a way as to create the impression of sound heard from various directions, as in natural hearing. Because the multi-dimensional perspective is the crucial aspect, the term *stereophonic* also applies to systems with more than two channels or speakers such as quadraphonic and surround sound. Binaural sound systems are also *stereophonic*. Stereo sound has been in common use since the 1970s in entertainment media such as broadcast radio, recorded music, television, video cameras, cinema, computer audio, and the Internet.

Etymology: The word *stereophonic* derives from the Greek στερεός (*stereós*, "firm, solid") + φωνή (*phōnḗ*, "sound, tone, voice") and it was coined in 1927 by Western Ele ...

Outside Broadcast

Outside broadcasting (OB) is the electronic field production (EFP) of television or radio programmes (typically to cover television news and sports television events) from a mobile remote broadcast television studio. Professional video camera and microphone signals come into the production truck for processing, recording and possibly transmission. Some outside broadcasts use a mobile production control room (PCR) inside a production truck.

History: Outside radio broadcasts have been taking place since the early 1920s and television ones since the late 1920s. The first large-scale outside broadcast was the televising of the Coronation of George VI and Elizabeth in May 1937, done by the BBC's first Outside Broadcast truck, MCR 1 (short for Mobile Control Room). After the Second World War, the first notable outside broadcast was of the 1948 Summer Olympics. The Coronation of Elizabeth II followed in 1953, with 21 cameras being used to cover the event. In December 1963 i ...

Companding

In telecommunication and signal processing, companding (occasionally called compansion) is a method of mitigating the detrimental effects of a channel with limited dynamic range. The name is a portmanteau of the words compressing and expanding, which are the functions of a compander at the transmitting and receiving end respectively. The use of companding allows signals with a large dynamic range to be transmitted over facilities that have a smaller dynamic range capability. Companding is employed in telephony and other audio applications such as professional wireless microphones and analog recording.

How it works: The dynamic range of a signal is compressed before transmission and is expanded to the original value at the receiver. The electronic circuit that does this is called a compander and works by compressing or expanding the dynamic range of an analog electronic signal such as sound recorded by a microphone. One variety is a triplet of amplifiers: a logarithmic amplifier ...
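The compress-then-expand round trip can be sketched in Python as follows. The entry does not name a particular compression curve, so this sketch assumes the continuous μ-law curve (mentioned in the pulse-code modulation entry) with μ = 255; the function names are hypothetical.

```python
import math

MU = 255.0  # assumed parameter value


def mu_law_compress(x, mu=MU):
    """Compress an amplitude in [-1, 1]; small values are boosted the most."""
    return math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)


def mu_law_expand(y, mu=MU):
    """Inverse of mu_law_compress, applied at the receiving end."""
    return math.copysign(math.expm1(abs(y) * math.log1p(mu)) / mu, y)


for x in (0.01, 0.1, 0.5, 1.0):
    y = mu_law_compress(x)
    print(f"{x:5.2f} -> {y:.4f} -> {mu_law_expand(y):.4f}")
```

Because quiet signals are lifted well above the channel's noise floor before transmission, the expander can restore them with less relative error than if they had been sent unprocessed.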

Digital-to-analogue Converter

In electronics, a digital-to-analog converter (DAC, D/A, D2A, or D-to-A) is a system that converts a digital signal into an analog signal. An analog-to-digital converter (ADC) performs the reverse function. There are several DAC architectures; the suitability of a DAC for a particular application is determined by figures of merit including resolution, maximum sampling frequency and others. Digital-to-analog conversion can degrade a signal, so the DAC specified should introduce errors that are insignificant for the application. DACs are commonly used in music players to convert digital data streams into analog audio signals. They are also used in televisions and mobile phones to convert digital video data into analog video signals. These two applications use DACs at opposite ends of the frequency/resolution trade-off. The audio DAC is a low-frequency, high-resolution type while the video DAC is a high-frequency, low- to medium-resolution type. Due to the complexity a ...