|

Uconv

In computing, uconv is a command-line tool that is bundled with International Components for Unicode that converts text files between different character encodings. It is very similar to the ''iconv'' command that is part of the Single UNIX Specification which is usually implemented using libiconv. In fact the command line options for transcoding are the same. The command ''uconv'' can also convert to and from various Unicode normalization forms. There is also an alternative implementation written in Ruby. It was written to supplement support of Japanese encoding in Ruby's XML Parser. See also * International Components for Unicode * iconv In Unix and Unix-like operating systems, iconv (an abbreviation of internationalization conversion) is a command-line program and a standardized application programming interface (API) used to convert between different character encodings. "It ... References Unix text processing utilities Ruby (programming language) {{Compu-libr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Iconv

In Unix and Unix-like operating systems, iconv (an abbreviation of internationalization conversion) is a command-line program and a standardized application programming interface (API) used to convert between different character encodings. "It can convert from any of these encodings to any other, through Unicode conversion." History Initially appearing on the HP-UX operating system,iconv() as well as the utility was standardized within XPG4 and is part of the Single UNIX Specification (SUS). Implementations Most Linux distributions provide an implementation, either from the GNU Standard C Library (included since version 2.1, February 1999), or the more traditional GNU libiconv, for systems based on other Standard C Libraries. The iconv function on both is licensed as LGPL, so it is linkable with closed source applications. Unlike the libraries, the iconv utility is licensed under GPL in both implementations. The GNU libiconv implementation is portable, and can be used on ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

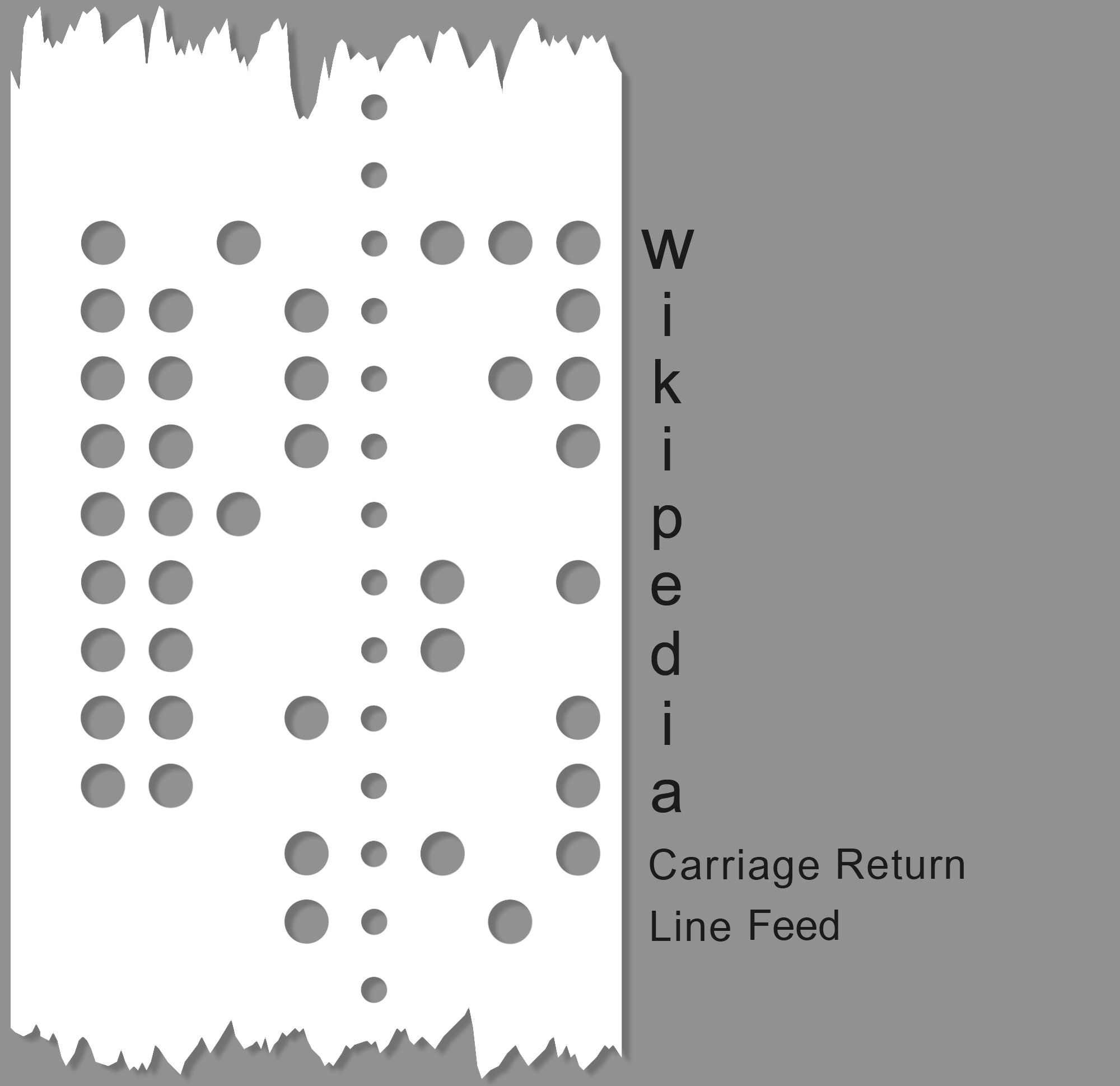

Character Encoding

Character encoding is the process of assigning numbers to Graphics, graphical character (computing), characters, especially the written characters of Language, human language, allowing them to be Data storage, stored, Data communication, transmitted, and Computing, transformed using Digital electronics, digital computers. The numerical values that make up a character encoding are known as "code points" and collectively comprise a "code space", a "code page", or a "Character Map (Windows), character map". Early character codes associated with the optical or electrical Telegraphy, telegraph could only represent a subset of the characters used in written languages, sometimes restricted to Letter case, upper case letters, Numeral system, numerals and some punctuation only. The low cost of digital representation of data in modern computer systems allows more elaborate character codes (such as Unicode) which represent most of the characters used in many written languages. Character enc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Unicode Equivalence

Unicode equivalence is the specification by the Unicode character encoding standard that some sequences of code points represent essentially the same character. This feature was introduced in the standard to allow compatibility with preexisting standard character sets, which often included similar or identical characters. Unicode provides two such notions, canonical equivalence and compatibility. Code point sequences that are defined as canonically equivalent are assumed to have the same appearance and meaning when printed or displayed. For example, the code point U+006E (the Latin lowercase "n") followed by U+0303 (the combining tilde "◌̃") is defined by Unicode to be canonically equivalent to the single code point U+00F1 (the lowercase letter " ñ" of the Spanish alphabet). Therefore, those sequences should be displayed in the same manner, should be treated in the same way by applications such as alphabetizing names or searching, and may be substituted for each other. Sim ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Computing

Computing is any goal-oriented activity requiring, benefiting from, or creating computing machinery. It includes the study and experimentation of algorithmic processes, and development of both hardware and software. Computing has scientific, engineering, mathematical, technological and social aspects. Major computing disciplines include computer engineering, computer science, cybersecurity, data science, information systems, information technology and software engineering. The term "computing" is also synonymous with counting and calculating. In earlier times, it was used in reference to the action performed by mechanical computing machines, and before that, to human computers. History The history of computing is longer than the history of computing hardware and includes the history of methods intended for pen and paper (or for chalk and slate) with or without the aid of tables. Computing is intimately tied to the representation of numbers, though mathematical conc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

International Components For Unicode

International Components for Unicode (ICU) is an open-source project of mature C/C++ and Java libraries for Unicode support, software internationalization, and software globalization. ICU is widely portable to many operating systems and environments. It gives applications the same results on all platforms and between C, C++, and Java software. The ICU project is a technical committee of the Unicode Consortium and sponsored, supported, and used by IBM and many other companies. ICU provides the following services: Unicode text handling, full character properties, and character set conversions; Unicode regular expressions; full Unicode sets; character, word, and line boundaries; language-sensitive collation and searching; normalization, upper and lowercase conversion, and script transliterations; comprehensive locale data and resource bundle architecture via the Common Locale Data Repository (CLDR); multiple calendars and time zones; and rule-based formatting and parsing of dates ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Ruby Programming Language

Ruby is an interpreted, high-level, general-purpose programming language which supports multiple programming paradigms. It was designed with an emphasis on programming productivity and simplicity. In Ruby, everything is an object, including primitive data types. It was developed in the mid-1990s by Yukihiro "Matz" Matsumoto in Japan. Ruby is dynamically typed and uses garbage collection and just-in-time compilation. It supports multiple programming paradigms, including procedural, object-oriented, and functional programming. According to the creator, Ruby was influenced by Perl, Smalltalk, Eiffel, Ada, BASIC, Java and Lisp. History Early concept Matsumoto has said that Ruby was conceived in 1993. In a 1999 post to the ''ruby-talk'' mailing list, he describes some of his early ideas about the language: Matsumoto describes the design of Ruby as being like a simple Lisp language at its core, with an object system like that of Smalltalk, blocks inspired by higher-order ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Unix Text Processing Utilities

Unix (; trademarked as UNIX) is a family of multitasking, multiuser computer operating systems that derive from the original AT&T Unix, whose development started in 1969 at the Bell Labs research center by Ken Thompson, Dennis Ritchie, and others. Initially intended for use inside the Bell System, AT&T licensed Unix to outside parties in the late 1970s, leading to a variety of both academic and commercial Unix variants from vendors including University of California, Berkeley (BSD), Microsoft (Xenix), Sun Microsystems ( SunOS/ Solaris), HP/ HPE ( HP-UX), and IBM (AIX). In the early 1990s, AT&T sold its rights in Unix to Novell, which then sold the UNIX trademark to The Open Group, an industry consortium founded in 1996. The Open Group allows the use of the mark for certified operating systems that comply with the Single UNIX Specification (SUS). Unix systems are characterized by a modular design that is sometimes called the "Unix philosophy". According to this philos ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |