
Triune Continuum Paradigm

The Triune Continuum Paradigm is a paradigm for general system modeling published in 2002 (A. Naumenko, "Triune Continuum Paradigm: a paradigm for general system modeling and its applications for UML and RM-ODP", doctoral thesis 2581, Swiss Federal Institute of Technology – Lausanne (EPFL), June 2002). The paradigm supports building rigorous conceptual frameworks for systems modeling in various application contexts, both highly tailored and interdisciplinary.

As stated in The Cambridge Dictionary of Philosophy (R. Audi, general editor, second edition, Cambridge University Press, 1999): "Paradigm, as used by Thomas Kuhn (The Structure of Scientific Revolutions, 1962), refers to a set of scientific and metaphysical beliefs that make up a theoretical framework within which scientific theories can be tested, evaluated and if necessary revised." The Triune Continuum Paradigm holds true to this definition by definin ...

Paradigm

In science and philosophy, a paradigm is a distinct set of concepts or thought patterns, including theories, research methods, postulates, and standards for what constitutes a legitimate contribution to a field.

The word "paradigm" comes from Greek παράδειγμα (paradeigma), "pattern, example, sample", from the verb παραδείκνυμι (paradeiknumi), "exhibit, represent, expose", and that from παρά (para), "beside, beyond", and δείκνυμι (deiknumi), "to show, to point out". In classical (Greek-based) rhetoric, a paradeigma aims to provide an audience with an illustration of a similar occurrence. This illustration is not meant to lead the audience to a conclusion; rather, it is used to help guide them there. The role of a personal accountant exemplifies one way in which a paradeigma is meant to guide an audience. It is not the job of a personal accountant to tell a client exactly what (and what not) to spend money ...

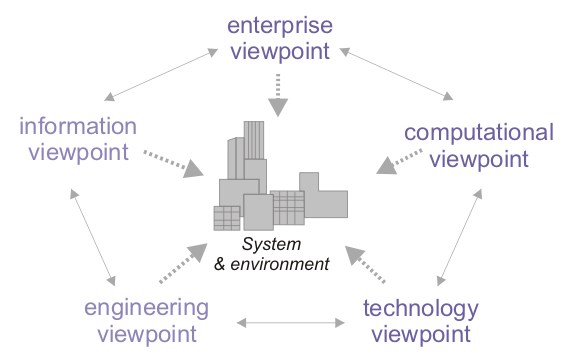

RM-ODP

Reference Model of Open Distributed Processing (RM-ODP) is a reference model in computer science that provides a co-ordinating framework for the standardization of open distributed processing (ODP). It supports distribution, interworking, platform and technology independence, and portability, together with an enterprise architecture framework for the specification of ODP systems. RM-ODP, also published as ITU-T Rec. X.901-X.904 and ISO/IEC 10746, is a joint effort by the International Organization for Standardization (ISO), the International Electrotechnical Commission (IEC) and the Telecommunication Standardization Sector (ITU-T).

The RM-ODP is a reference model based on precise concepts derived from current distributed processing developments and, as far as possible, on the use of formal description techniques for specifying the architecture. Many RM-ODP concepts, possibly under different names, have been around for a long time and have been rigorously ...

Business Modeling

Business process modeling (BPM), in business process management and systems engineering, is the activity of representing the processes of an enterprise so that current business processes can be analyzed, improved, and automated. BPM is typically performed by business analysts, who provide expertise in the modeling discipline; by subject matter experts, who have specialized knowledge of the processes being modeled; or, more commonly, by a team comprising both. Alternatively, the process model can be derived directly from event logs using process mining tools. The business objective is often to increase process speed or reduce cycle time, to increase quality, or to reduce costs such as labor, materials, scrap, or capital costs. In practice, a management decision to invest in business process modeling is often motivated by the need to document requirements for an information technology project. Change management programs are typically involved to put any improved business processes ...
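
Deriving a process model from event logs usually starts from the directly-follows relation: how often one activity is immediately followed by another within the same case. The sketch below computes that relation and nothing more. The event log, case IDs, activity names and the `directly_follows` helper are all hypothetical. Real process mining tools also handle timestamps, noise filtering and concurrency, and then turn this relation into a model.

```python
from collections import Counter, defaultdict

def directly_follows(events):
    """Count how often activity a is immediately followed by activity b
    within the same case. `events` is an iterable of (case_id, activity)
    pairs, assumed to be already ordered by time within each case."""
    traces = defaultdict(list)
    for case_id, activity in events:
        traces[case_id].append(activity)
    dfg = Counter()
    for trace in traces.values():
        # zip pairs each activity with its successor in the same trace
        for a, b in zip(trace, trace[1:]):
            dfg[(a, b)] += 1
    return dfg

# Hypothetical order-handling log with two cases, already time-ordered.
log = [
    ("c1", "receive order"), ("c1", "check stock"), ("c1", "ship"),
    ("c2", "receive order"), ("c2", "check stock"), ("c2", "cancel"),
]

for (a, b), n in sorted(directly_follows(log).items()):
    print(f"{a} -> {b}: {n}")
```

The resulting counts form a weighted graph of activities. The branch after "check stock" (to "ship" or "cancel") is the kind of decision point an analyst would then model explicitly.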

Manfred Broy

Manfred Broy (born 10 August 1949, Landsberg am Lech) is a German computer scientist and an emeritus professor in the Department of Informatics at the Technical University of Munich, Garching, Germany.

Broy received his Doctor of Philosophy (Ph.D.) in 1980 under Friedrich L. Bauer, with a thesis on the transformation of programs running in parallel (Transformation parallel ablaufender Programme). In 1983, he founded the faculty of mathematics and computer science at the University of Passau and served as its dean until 1986. In 1989, he moved to the Technical University of Munich (TUM), where in 1992 he became the founding dean of the informatics faculty, which until then had been an institute within the faculty of mathematics and informatics. Since then he has taught at the Technical University of Munich. In 2004, he was elected a fellow of the Gesellschaft für Informatik ...

Kevin Lano

Kevin C. Lano (born 1963) is a British computer scientist.

Lano studied at the University of Reading, attaining a first-class degree in Mathematics and Computer Science, and at the University of Bristol, where he completed his doctorate. He was an originator of formal object-oriented techniques (Z++) and developed a combination of UML and formal methods in a number of papers and books. He was one of the founders of the Precise UML group, which influenced the definition of UML 2.0. Lano published the book Advanced Systems Design with Java, UML and MDA (Butterworth-Heinemann) in 2005. His other computer science books include UML 2 Semantics and Applications, which he edited and which Wiley published in October 2009. Lano was formerly a Research Officer at the Oxford University Computing Laboratory (now the Oxford University Department of Computer Science). He is a reader in the Department of Informatics at King's College London. In 2008, La ...

Unified Modeling Language

The Unified Modeling Language (UML) is a general-purpose, developmental modeling language in the field of software engineering that is intended to provide a standard way to visualize the design of a system. The creation of UML was originally motivated by the desire to standardize the disparate notational systems and approaches to software design. It was developed at Rational Software in 1994–1995, with further development led by the company through 1996. In 1997, UML was adopted as a standard by the Object Management Group (OMG), and it has been managed by this organization ever since. In 2005, UML was also published by the International Organization for Standardization (ISO) as an approved ISO standard. Since then, the standard has been periodically revised to cover the latest revision of UML. In software engineering, most practitioners do not use UML but instead produce informal hand-drawn diagrams; these diagrams, however, often include elements from UML.

Before UM ...

Semantics Of Programming Languages

In programming language theory, semantics is the rigorous mathematical study of the meaning of programming languages. Semantics assigns computational meaning to valid strings in a programming language syntax. It describes the processes a computer follows when executing a program in that specific language. This can be done by describing the relationship between a program's input and output, or by explaining how the program will be executed on a certain platform, thereby creating a model of computation.

In 1967, Robert W. Floyd published the paper Assigning meanings to programs; his chief aim was "a rigorous standard for proofs about computer programs, including proofs of correctness, equivalence, and termination". Floyd further wrote: A semantic definition of a programming language, in our approach, is founded on a syntactic definition. It must specify which of the phrases in a syntactically correct program represent commands, and what conditions ...
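
The idea of giving a program meaning as a relationship between its input and output can be made concrete with a definitional interpreter. The sketch below covers a tiny hypothetical imperative language and gives it semantics in a big-step style:

- each expression denotes a value in a given state;
- each statement denotes a function from an input state to an output state.

The language, its constructors and the names `eval_expr` and `exec_stmt` are illustrative assumptions, not a standard formalism. A textbook treatment would state the same rules as inference rules or as mathematical functions.

```python
from dataclasses import dataclass
from typing import Union

# Abstract syntax of a hypothetical toy language.
@dataclass
class Num:
    value: int

@dataclass
class Var:
    name: str

@dataclass
class BinOp:
    op: str  # one of "+", "*", "<"
    left: "Expr"
    right: "Expr"

Expr = Union[Num, Var, BinOp]

@dataclass
class Assign:
    name: str
    expr: Expr

@dataclass
class Seq:
    first: "Stmt"
    second: "Stmt"

@dataclass
class While:
    cond: Expr  # nonzero means true
    body: "Stmt"

Stmt = Union[Assign, Seq, While]

def eval_expr(e: Expr, state: dict) -> int:
    """Meaning of an expression: an integer value, given a state."""
    if isinstance(e, Num):
        return e.value
    if isinstance(e, Var):
        return state[e.name]
    left, right = eval_expr(e.left, state), eval_expr(e.right, state)
    if e.op == "+":
        return left + right
    if e.op == "*":
        return left * right
    if e.op == "<":
        return int(left < right)
    raise ValueError(f"unknown operator {e.op!r}")

def exec_stmt(s: Stmt, state: dict) -> dict:
    """Meaning of a statement: a map from input state to output state."""
    if isinstance(s, Assign):
        return {**state, s.name: eval_expr(s.expr, state)}
    if isinstance(s, Seq):
        return exec_stmt(s.second, exec_stmt(s.first, state))
    if isinstance(s, While):
        while eval_expr(s.cond, state):
            state = exec_stmt(s.body, state)
        return state
    raise TypeError(f"unknown statement {s!r}")

# Factorial: acc := 1; i := 1; while i < n + 1 do (acc := acc * i; i := i + 1)
fact = Seq(Assign("acc", Num(1)),
       Seq(Assign("i", Num(1)),
           While(BinOp("<", Var("i"), BinOp("+", Var("n"), Num(1))),
                 Seq(Assign("acc", BinOp("*", Var("acc"), Var("i"))),
                     Assign("i", BinOp("+", Var("i"), Num(1)))))))

print(exec_stmt(fact, {"n": 5})["acc"])  # 120
```

Because `exec_stmt` returns a new state rather than mutating the old one, the program's meaning reads directly as an input-to-output mapping: `{"n": 5}` maps to a state in which `acc` is 120.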

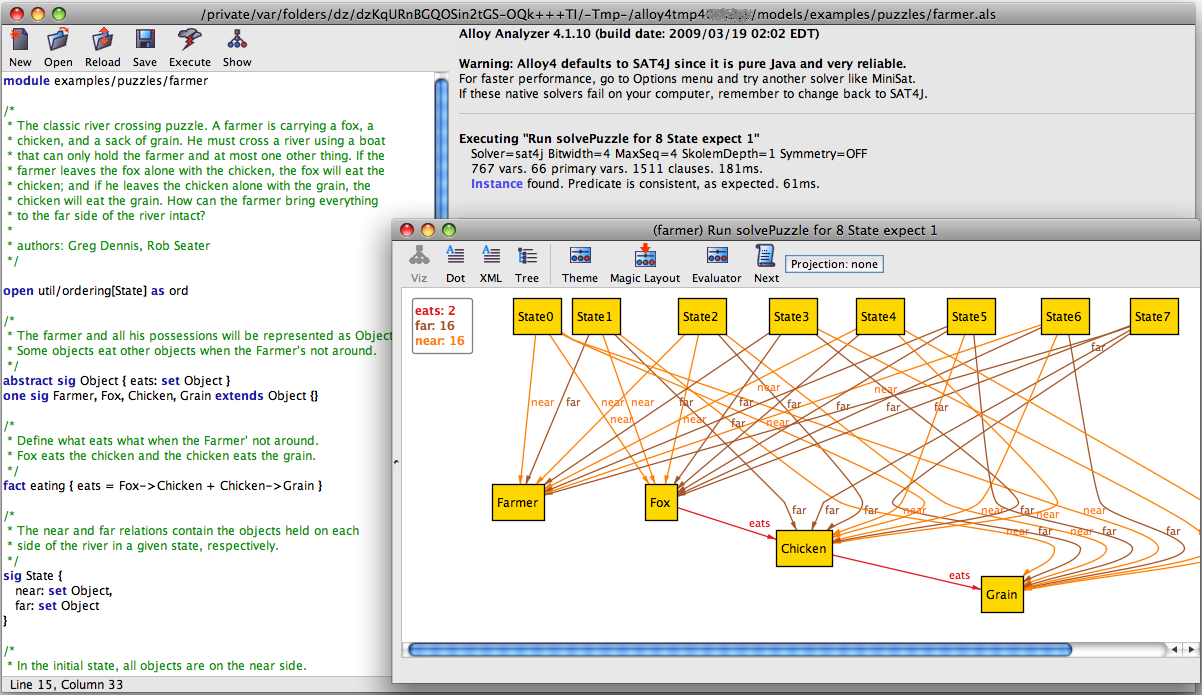

Alloy (Specification Language)

In computer science and software engineering, Alloy is a declarative specification language for expressing complex structural constraints and behavior in a software system. Alloy provides a simple structural modeling tool based on first-order logic. Alloy is targeted at the creation of micro-models that can then be automatically checked for correctness. Alloy specifications can be checked using the Alloy Analyzer. Although Alloy is designed with automatic analysis in mind, it differs from many specification languages designed for model checking in that it permits the definition of infinite models. The Alloy Analyzer is designed to perform finite scope checks even on infinite models. The Alloy language and analyzer are developed by a team led by Daniel Jackson at the Massachusetts Institute of Technology in the United States.

The first version of the Alloy language appeared in 1997. It was a rather limited object modeling language. Succeeding it ...
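
Finite scope checking can be illustrated outside Alloy itself. The following Python sketch searches every binary relation over at most `scope` atoms for a counterexample to an assertion. It is not Alloy syntax, and it is not how the Alloy Analyzer works internally: the Analyzer translates a model and scope into a SAT problem rather than enumerating relations one by one. The helper names and the example assertion are hypothetical choices for illustration.

```python
from itertools import product

def relations(atoms):
    """Yield every binary relation over `atoms` as a frozenset of pairs."""
    pairs = [(a, b) for a in atoms for b in atoms]
    for bits in product([False, True], repeat=len(pairs)):
        yield frozenset(p for p, keep in zip(pairs, bits) if keep)

def symmetric(r):
    return all((b, a) in r for (a, b) in r)

def transitive(r):
    return all((a, d) in r for (a, b) in r for (c, d) in r if b == c)

def reflexive(r, atoms):
    return all((a, a) in r for a in atoms)

def check(assertion, scope):
    """Search all relations over 1..scope atoms for a counterexample.
    Returns (atoms, relation) for the first one found, or None if the
    assertion holds within the scope (which is not a proof in general)."""
    for n in range(1, scope + 1):
        atoms = list(range(n))
        for r in relations(atoms):
            if not assertion(r, atoms):
                return atoms, r
    return None

# Claim to check: every symmetric, transitive relation is reflexive.
def claim(r, atoms):
    return not (symmetric(r) and transitive(r)) or reflexive(r, atoms)

print(check(claim, scope=3))  # ([0], frozenset())
```

The search fails the claim at once. The empty relation over a single atom is symmetric and transitive, but it is not reflexive. When no counterexample turns up, the result only means none exists within the chosen scope; a larger scope could still contain one.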

Digital Object Identifier

A digital object identifier (DOI) is a persistent identifier or handle used to uniquely identify various objects, standardized by the International Organization for Standardization (ISO). DOIs are an implementation of the Handle System; they also fit within the URI (Uniform Resource Identifier) system. They are widely used to identify academic, professional, and government information, such as journal articles, research reports, data sets, and official publications. DOIs have also been used to identify other types of information resources, such as commercial videos. A DOI aims to resolve to its target, the information object to which the DOI refers. This is achieved by binding the DOI to metadata about the object, such as a URL where the object is located. Because it is actionable and interoperable, a DOI differs from ISBNs or ISRCs, which are identifiers only. The DOI system uses the indecs Content Model for representing metadata. The DOI for a document remains fixed ...
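
A DOI becomes actionable by turning it into a URL on a resolver. The public https://doi.org/ proxy redirects to the location currently bound to the DOI. The sketch below splits a DOI name into its prefix (which begins with "10.") and its registrant-assigned suffix, then builds the resolver URL. The helper names are hypothetical. The code only checks the syntax; it does not contact the resolver or confirm that a DOI is actually registered.

```python
from urllib.parse import quote

RESOLVER = "https://doi.org/"

def split_doi(doi: str) -> tuple[str, str]:
    """Split a DOI name into (prefix, suffix) at the first '/'.

    The prefix starts with "10."; the suffix is chosen by the registrant
    and may itself contain '/' characters."""
    doi = doi.strip()
    # Accept the common "doi:" and resolver-URL input forms.
    for lead in ("doi:", RESOLVER):
        if doi.lower().startswith(lead):
            doi = doi[len(lead):]
            break
    prefix, sep, suffix = doi.partition("/")
    if not sep or not prefix.startswith("10.") or not suffix:
        raise ValueError(f"not a DOI name: {doi!r}")
    return prefix, suffix

def resolver_url(doi: str) -> str:
    """Build a URL that the DOI proxy will redirect to the object's location."""
    prefix, suffix = split_doi(doi)
    # Percent-encode characters such as '#' or '?' that would otherwise
    # be read as URL syntax; '/' is left as-is.
    return RESOLVER + prefix + "/" + quote(suffix, safe="/")

print(split_doi("doi:10.1000/182"))  # ('10.1000', '182')
print(resolver_url("10.1000/182"))   # https://doi.org/10.1000/182
```

Only the resolver URL needs to change if an object moves. The metadata bound to the DOI is updated, and the DOI name itself stays fixed.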

Alain Wegmann

Alain Wegmann (born 1957) was a Swiss computer scientist, professor of Systemic Modeling at the École Polytechnique Fédérale de Lausanne (EPFL), and information technology and services consultant, known for the development of the Systemic Enterprise Architecture Methodology (SEAM).

Wegmann received his engineer's degree (EE) from EPFL in 1981 and his Ph.D. in office automation from Paris VI University in France in 1984 (Alain Wegmann, Biography and current work at epfl.ch, École Polytechnique Fédérale de Lausanne (EPFL), accessed 8 July 2013). In 1984 Wegmann started his career at in Romanel, Switzerland, ...

Systems Engineering

Systems engineering is an interdisciplinary field of engineering and engineering management that focuses on how to design, integrate, and manage complex systems over their life cycles. At its core, systems engineering uses systems thinking principles to organize this body of knowledge. The individual outcome of such efforts, an engineered system, can be defined as a combination of components that work in synergy to collectively perform a useful function. Issues such as requirements engineering, reliability, logistics, coordination of different teams, testing and evaluation, maintainability, and many other disciplines necessary for successful system design, development, implementation, and ultimate decommissioning become more difficult when dealing with large or complex projects. Systems engineering deals with work processes, optimization methods, and risk management tools in such projects. It overlaps technical and human-centered disciplines such as industrial engineering, ...