
The Book Of Why

The Book of Why: The New Science of Cause and Effect is a 2018 nonfiction book by computer scientist Judea Pearl and writer Dana Mackenzie. The book explores causality and causal inference from statistical and philosophical points of view for a general audience. Summary. The book consists of ten chapters and an introduction. Introduction: Mind over Data. The introduction describes the inadequacy of early 20th-century statistical methods for making statements about causal relationships between variables. The authors then describe what they term 'The Causal Revolution', which started in the middle of the 20th century and provided new conceptual and mathematical tools for describing causal relationships. Chapter 1: The Ladder of Causation. Chapter 1 introduces the 'ladder of causation', a diagram used to illustrate the three levels of causal reasoning. The first level, 'Association', concerns associations between variables. Questions such as 'is vari ...

Judea Pearl

Judea Pearl (born September 4, 1936) is an Israeli-American computer scientist and philosopher, best known for championing the probabilistic approach to artificial intelligence and for developing Bayesian networks (see the article on belief propagation). He is also credited with developing a theory of causal and counterfactual inference based on structural models (see the article on causality). In 2011, the Association for Computing Machinery (ACM) awarded Pearl the Turing Award, the highest distinction in computer science, "for fundamental contributions to artificial intelligence through the development of a calculus for probabilistic and causal reasoning". He is the author of several books, including the technical Causality: Models, Reasoning and Inference, and The Book of Why, a book on causality aimed at the general public. Judea Pearl is the father of journalist Daniel Pearl, who was kidnapped and murdered by terrorists in Pakistan connected with Al-Qaeda and the Inte ...

Thomas Bayes

Thomas Bayes (c. 1701 – 7 April 1761) was an English statistician, philosopher and Presbyterian minister who is known for formulating a specific case of the theorem that bears his name: Bayes' theorem. Bayes never published what would become his most famous accomplishment; his notes were edited and published posthumously by Richard Price. Biography. Thomas Bayes was the son of London Presbyterian minister Joshua Bayes, and was possibly born in Hertfordshire. He came from a prominent nonconformist family from Sheffield. In 1719, he enrolled at the University of Edinburgh to study logic and theology. On his return around 1722, he assisted his father at the latter's chapel in London before moving to Tunbridge Wells, Kent, around 1734. There he was minister of the Mount Sion Chapel until 1752. He is known to have published two works in his lifetime, one theological and one mathematical: (1) Divine Benevolence, or an Attempt to ...

Instrumental Variables

In statistics, econometrics, epidemiology and related disciplines, the method of instrumental variables (IV) is used to estimate causal relationships when controlled experiments are not feasible or when a treatment is not successfully delivered to every unit in a randomized experiment. Intuitively, IVs are used when an explanatory variable of interest is correlated with the error term, in which case ordinary least squares and ANOVA give biased results. A valid instrument induces changes in the explanatory variable but has no independent effect on the dependent variable, allowing a researcher to uncover the causal effect of the explanatory variable on the dependent variable. Instrumental variable methods allow for consistent estimation when the explanatory variables (covariates) are correlated with the error terms in a regression model. Such correlation may occur when: (1) changes in the dependent variable change the value of at least one of the covariates ("reverse" causation), (2) ...
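The bias described above can be illustrated with a small simulation. This is only a sketch under assumed conditions: the data-generating model, the coefficient values, and the names `simulate` and `covariance` are hypothetical and not taken from the excerpt. An unobserved variable `u` drives both the explanatory variable `x` and the outcome `y`, so the least-squares slope is biased, while the simple ratio-of-covariances IV estimate using the instrument `z` lands close to the true effect.

```python
import random


def covariance(xs, ys):
    """Population covariance of two equal-length sequences."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    return sum((a - mean_x) * (b - mean_y) for a, b in zip(xs, ys)) / len(xs)


def simulate(n=20000, true_effect=1.5, seed=0):
    """Draw (z, x, y) from a hypothetical linear model with hidden confounding."""
    rng = random.Random(seed)
    z, x, y = [], [], []
    for _ in range(n):
        zi = rng.gauss(0.0, 1.0)            # instrument: affects y only through x
        ui = rng.gauss(0.0, 1.0)            # unobserved confounder of x and y
        xi = zi + ui + rng.gauss(0.0, 1.0)  # explanatory variable
        yi = true_effect * xi + 2.0 * ui + rng.gauss(0.0, 1.0)
        z.append(zi)
        x.append(xi)
        y.append(yi)
    return z, x, y


z, x, y = simulate()

# Ordinary least squares slope: biased because x is correlated with the error term.
ols_slope = covariance(x, y) / covariance(x, x)

# Simple IV estimate for a single instrument: cov(z, y) / cov(z, x).
iv_slope = covariance(z, y) / covariance(z, x)

print(f"OLS slope: {ols_slope:.2f}, IV slope: {iv_slope:.2f}, true effect: 1.50")
```

In this assumed model the instrument is valid by construction; with real data, validity is an assumption that cannot be checked from the data alone.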

Lord's Paradox

In statistics, Lord's paradox raises the issue of when it is appropriate to control for baseline status. In three papers, Frederic M. Lord gave examples in which statisticians could reach different conclusions depending on whether they adjust for pre-existing differences (Lord, F. M. (1967). A paradox in the interpretation of group comparisons. Psychological Bulletin, 68, 304–305). Holland & Rubin (1983) use these examples to illustrate how there may be multiple valid descriptive comparisons in the data, but causal conclusions require an underlying (untestable) causal model. Pearl used these examples to illustrate how graphical causal models resolve the issue of when control for baseline status is appropriate. Lord's formulation. The most famous formulation of Lord's paradox comes from his 1967 paper: “A large university is interested in investigating the effects on the students of the diet provided in the university dining halls and any sex ...

Berkson's Paradox

Berkson's paradox, also known as Berkson's bias, collider bias, or Berkson's fallacy, is a result in conditional probability and statistics which is often found to be counterintuitive, and hence a veridical paradox. It is a complicating factor arising in statistical tests of proportions. Specifically, it arises when there is an ascertainment bias inherent in a study design. The effect is related to the explaining away phenomenon in Bayesian networks, and conditioning on a collider in graphical models. It is often described in the fields of medical statistics or biostatistics, as in the original description of the problem by Joseph Berkson. Examples: Overview. The most common example of Berkson's paradox is a false observation of a negative correlation between two desirable traits, i.e., that members of a population who have some desirable trait tend to lack a second. Berkson's paradox occurs when this observation appears true when in reality the two properties are unrelated ...
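The selection effect described above can be checked with exact arithmetic. The following is a minimal sketch under assumed numbers: two independent traits A and B, each present with a hypothetical probability of 1/2, and a study that only admits people who have at least one of the traits. The function and constant names are illustrative.

```python
from fractions import Fraction

# Hypothetical marginal probabilities; A and B are independent by construction.
P_A = Fraction(1, 2)
P_B = Fraction(1, 2)


def joint(a, b):
    """P(A=a, B=b) under independence."""
    return (P_A if a else 1 - P_A) * (P_B if b else 1 - P_B)


def prob_b_given_a(a, selected_only):
    """P(B | A=a), optionally restricted to people with at least one trait."""
    numerator = Fraction(0)
    denominator = Fraction(0)
    for b in (True, False):
        if selected_only and not (a or b):
            continue  # excluded by the study design (ascertainment bias)
        denominator += joint(a, b)
        if b:
            numerator += joint(a, b)
    return numerator / denominator


# In the whole population, knowing A says nothing about B.
print(prob_b_given_a(True, False), prob_b_given_a(False, False))  # 1/2 1/2

# In the selected sample, lacking A guarantees B: a spurious negative association.
print(prob_b_given_a(True, True), prob_b_given_a(False, True))    # 1/2 1
```

Conditioning on selection, which here plays the role of a collider, is what creates the dependence; nothing about the traits themselves has changed.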

Simpson's Paradox

Simpson's paradox is a phenomenon in probability and statistics in which a trend appears in several groups of data but disappears or reverses when the groups are combined. This result is often encountered in social-science and medical-science statistics, and is particularly problematic when frequency data are unduly given causal interpretations (Judea Pearl, Causality: Models, Reasoning, and Inference, Cambridge University Press, 2000; 2nd edition 2009). The paradox can be resolved when confounding variables and causal relations are appropriately addressed in the statistical modeling. Simpson's paradox has been used to illustrate the kind of misleading results that the misuse of statistics can generate. Edward H. Simpson first described this phenomenon in a technical paper in 1951, but the statisticians Karl Pearson (in 1899) and Udny Yule (in 1903) had mentioned similar effects earlier. The name Simpson's paradox was introduced by Colin R. Blyth in 1972. It is also r ...
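A small worked example may make the reversal concrete. The counts below are invented purely for illustration (they are not from any study): treatment A has the higher success rate within each severity group, yet treatment B has the higher rate once the groups are pooled, because A was given mostly to severe cases.

```python
from fractions import Fraction

# Hypothetical (successes, trials) counts per severity group and treatment.
counts = {
    "mild":   {"A": (18, 20), "B": (64, 80)},
    "severe": {"A": (40, 80), "B": (6, 20)},
}


def rate(successes, trials):
    """Success rate as an exact fraction."""
    return Fraction(successes, trials)


def pooled_rate(treatment):
    """Success rate for a treatment after combining all groups."""
    successes = sum(counts[group][treatment][0] for group in counts)
    trials = sum(counts[group][treatment][1] for group in counts)
    return Fraction(successes, trials)


# Within each group, A beats B (9/10 vs 4/5 and 1/2 vs 3/10).
a_wins_in_every_group = all(
    rate(*counts[group]["A"]) > rate(*counts[group]["B"]) for group in counts
)

# After pooling, B beats A (7/10 vs 29/50).
b_wins_when_pooled = pooled_rate("B") > pooled_rate("A")

print(a_wins_in_every_group, b_wins_when_pooled)  # True True
```

Which comparison is the right one depends on the causal story behind the grouping variable, which the counts alone do not reveal.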

Monty Hall Problem

The Monty Hall problem is a brain teaser, in the form of a probability puzzle, loosely based on the American television game show Let's Make a Deal and named after its original host, Monty Hall. The problem was originally posed (and solved) in a letter by Steve Selvin to the American Statistician in 1975. It became famous as a question from reader Craig F. Whitaker's letter quoted in Marilyn vos Savant's "Ask Marilyn" column in Parade magazine in 1990. Vos Savant's response was that the contestant should switch to the other door. Under the standard assumptions, the switching strategy has a 2/3 probability of winning the car, while the strategy that remains with the initial choice has only a 1/3 probability. When the player first makes their choice, there is a 2/3 chance that the car is behind one of the doors not chosen. This probability does not change after the host reveals a goat behind one of the unchosen doors. When the host provides information about the 2 unchosen do ...
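The probabilities can be verified by enumerating every case exactly. The sketch below assumes the standard rules: the car is placed uniformly at random, the player picks uniformly at random, and the host always opens an unchosen door hiding a goat, choosing at random when two such doors are available. The function name is illustrative.

```python
from fractions import Fraction

DOORS = (0, 1, 2)


def win_probabilities():
    """Return (P(win | stay), P(win | switch)) by exact enumeration."""
    stay = Fraction(0)
    switch = Fraction(0)
    for car in DOORS:
        for pick in DOORS:
            # The host may open any door that is neither the pick nor the car.
            openable = [d for d in DOORS if d != pick and d != car]
            for opened in openable:
                weight = Fraction(1, 3) * Fraction(1, 3) * Fraction(1, len(openable))
                # Switching means taking the one door left closed and unpicked.
                switched_to = next(d for d in DOORS if d != pick and d != opened)
                if pick == car:
                    stay += weight
                if switched_to == car:
                    switch += weight
    return stay, switch


stay, switch = win_probabilities()
print(stay, switch)  # 1/3 2/3
```

Switching wins exactly when the first pick was wrong, which happens in two of every three games.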

Jerome Cornfield

Jerome Cornfield (1912–1979) was an American statistician. He is best known for his work in biostatistics, but his early work was in economic statistics and he was also an early contributor to the theory of Bayesian inference. He played a role in the early development of input-output analysis and linear programming. Cornfield played a crucial role in establishing the causal link between smoking and incidence of lung cancer. He introduced the rare disease assumption and the "Cornfield condition", which allows one to assess whether an unmeasured (binary) confounder can explain away the observed relative risk due to some exposure like smoking. He was born on October 30, 1912, in The Bronx, New York City. He graduated from New York University in 1933 and was briefly a graduate student at Columbia University. He also studied statistics and mathematics at the Graduate School of the US Department of Agriculture while employed by the Bureau of Labor Statistics, where he remained until ...

Ronald Fisher

Sir Ronald Aylmer Fisher (17 February 1890 – 29 July 1962) was a British polymath who was active as a mathematician, statistician, biologist, geneticist, and academic. For his work in statistics, he has been described as "a genius who almost single-handedly created the foundations for modern statistical science" and "the single most important figure in 20th century statistics". In genetics, his work used mathematics to combine Mendelian genetics and natural selection; this contributed to the revival of Darwinism in the early 20th-century revision of the theory of evolution known as the modern synthesis. For his contributions to biology, Fisher has been called "the greatest of Darwin’s successors". Fisher held strong views on race and eugenics, insisting on racial differences. Although he was clearly a eugenist and advocated for the legalization of voluntary sterilization of those with heritable mental disabilities, there is some debate as to whether Fisher supported sc ...

Abraham Lilienfeld

Abraham Morris Lilienfeld (November 13, 1920 – August 6, 1984) was an American epidemiologist and professor at the Johns Hopkins School of Hygiene and Public Health. He is known for his work in expanding epidemiology to focus on chronic diseases as well as infectious ones. Early life and education. Lilienfeld was born in New York City on November 13, 1920. His father, Joe Lilienfeld, came from a wealthy family in Galicia, Ukraine, and worked as a Galician rabbinical scholar. Joe and his wife had immigrated to the United States in 1914 to escape the draft, leaving their money (which was all in German marks) behind in Germany when they did so. Abraham graduated from Erasmus High School and in 1938 enrolled at Johns Hopkins University in Baltimore, which allowed him to move in with his brother, Sam (a Baltimore resident). In 1941, he received his A.B. from Johns Hopkins, after which he applied to the Johns Hopkins School of Medicine, but was told he would be rejected because he ...

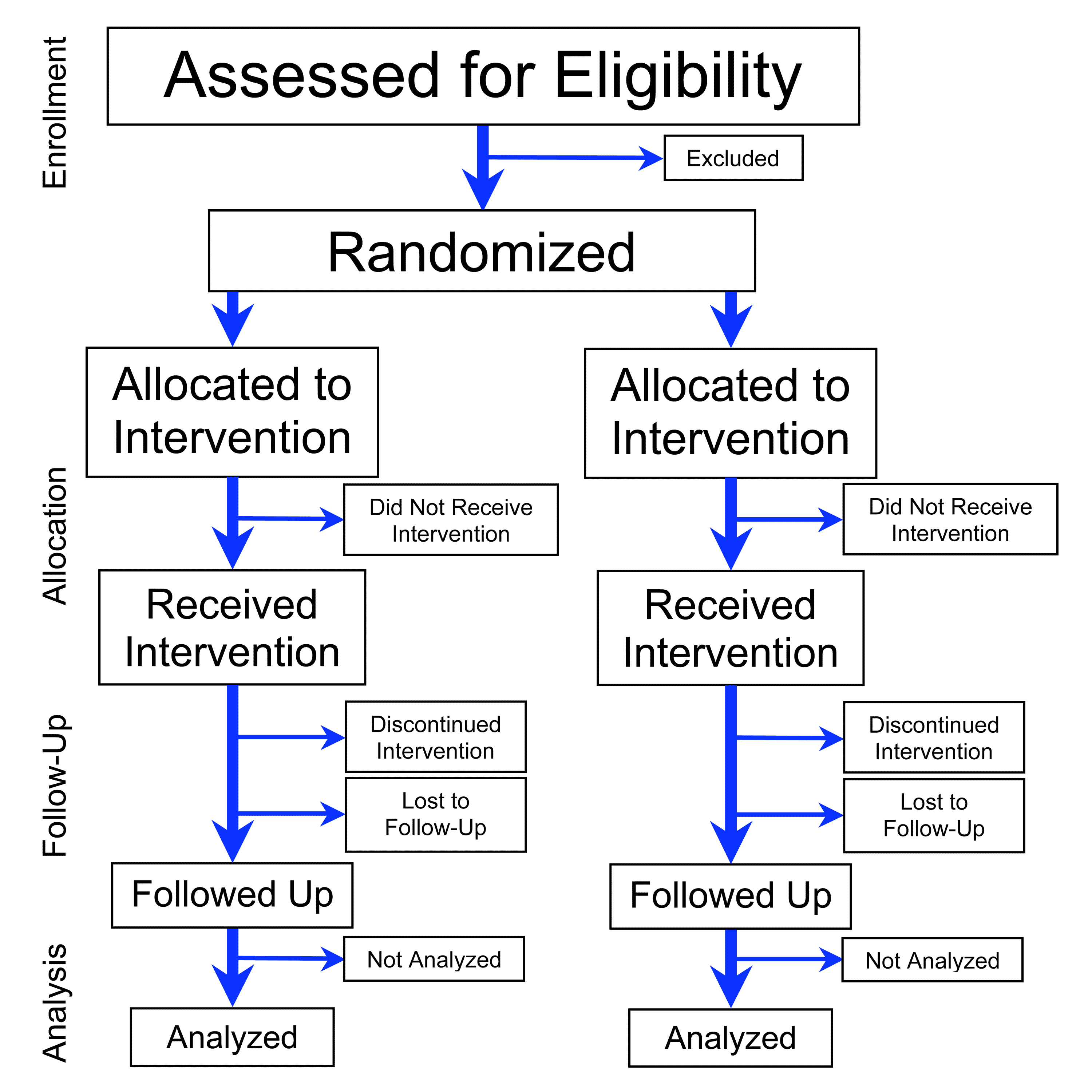

Randomized Controlled Trials

A randomized controlled trial (or randomized control trial; RCT) is a form of scientific experiment used to control factors not under direct experimental control. Examples of RCTs are clinical trials that compare the effects of drugs, surgical techniques, medical devices, diagnostic procedures or other medical treatments. Participants who enroll in RCTs differ from one another in known and unknown ways that can influence study outcomes, and yet cannot be directly controlled. By randomly allocating participants among compared treatments, an RCT enables statistical control over these influences. Provided it is designed well, conducted properly, and enrolls enough participants, an RCT may achieve sufficient control over these confounding factors to deliver a useful comparison of the treatments studied. Definition and examples. An RCT in clinical research typically compares a proposed new treatment against an existing standard of care; these are then termed the 'experimental' a ...
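The balancing effect of random allocation can be sketched with a short simulation. Everything here is an assumption made for illustration: the participants, the unmeasured baseline `trait`, the sample size, and the function names are hypothetical. The point is only that, with enough participants, random assignment leaves the two arms with nearly the same average value of a trait the experimenter never measured.

```python
import random


def randomize(n_participants=10000, seed=1):
    """Randomly split hypothetical participants into two equal arms."""
    rng = random.Random(seed)
    # An unmeasured baseline trait that could influence the outcome.
    trait = [rng.gauss(0.0, 1.0) for _ in range(n_participants)]
    order = list(range(n_participants))
    rng.shuffle(order)  # random allocation, independent of the trait
    half = n_participants // 2
    treated = [trait[i] for i in order[:half]]
    control = [trait[i] for i in order[half:]]
    return treated, control


def mean(values):
    return sum(values) / len(values)


treated, control = randomize()

# Difference in the average trait between arms; close to zero for large samples.
imbalance = mean(treated) - mean(control)
print(f"baseline imbalance between arms: {imbalance:.3f}")
```

With small trials the imbalance can be substantial by chance, which is one reason the excerpt stresses enrolling enough participants.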

Confounding

In statistics, a confounder (also confounding variable, confounding factor, extraneous determinant or lurking variable) is a variable that influences both the dependent variable and the independent variable, causing a spurious association. Confounding is a causal concept, and as such, cannot be described in terms of correlations or associations (Pearl, J. (2009). Simpson's Paradox, Confounding, and Collapsibility. In Causality: Models, Reasoning and Inference (2nd ed.). New York: Cambridge University Press). The existence of confounders is an important quantitative explanation for why correlation does not imply causation. Confounds are threats to internal validity. Definition. Confounding is defined in terms of the data generating model. Let X be some independent variable, and Y some dependent variable. To estimate the effect of X on Y, the statistician must suppress the effects of extraneous variables that influence both X and Y. We say that X ...
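One common way to suppress the effect of a measured extraneous variable is to average the stratum-specific outcome rates over the distribution of that variable (often called adjustment or standardization). The sketch below applies it to a hypothetical model, with all probabilities invented for illustration: Z influences both X and Y, while X has no effect on Y at all. The raw comparison shows an association between X and Y; the adjusted comparison shows none.

```python
from fractions import Fraction

# Hypothetical data-generating model with a binary confounder Z.
P_Z = {1: Fraction(1, 2), 0: Fraction(1, 2)}
P_X1_GIVEN_Z = {1: Fraction(4, 5), 0: Fraction(1, 5)}
# P(Y=1 | X=x, Z=z), keyed by (x, z); Y depends on Z only, not on X.
P_Y1_GIVEN_XZ = {
    (1, 1): Fraction(3, 4), (0, 1): Fraction(3, 4),
    (1, 0): Fraction(1, 4), (0, 0): Fraction(1, 4),
}


def p_x_given_z(x, z):
    return P_X1_GIVEN_Z[z] if x == 1 else 1 - P_X1_GIVEN_Z[z]


def observed(x):
    """P(Y=1 | X=x): the raw association, mixing in the effect of Z."""
    numerator = sum(P_Z[z] * p_x_given_z(x, z) * P_Y1_GIVEN_XZ[(x, z)] for z in P_Z)
    denominator = sum(P_Z[z] * p_x_given_z(x, z) for z in P_Z)
    return numerator / denominator


def adjusted(x):
    """Sum over z of P(Y=1 | X=x, Z=z) * P(Z=z): Z held to its population mix."""
    return sum(P_Y1_GIVEN_XZ[(x, z)] * P_Z[z] for z in P_Z)


print(observed(1) - observed(0))  # 3/10, a spurious association
print(adjusted(1) - adjusted(0))  # 0, no effect of X on Y
```

The adjustment is only appropriate here because Z is known, by construction, to be a common cause of X and Y; that knowledge comes from the causal model, not from the probabilities.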