
Super Scalable System

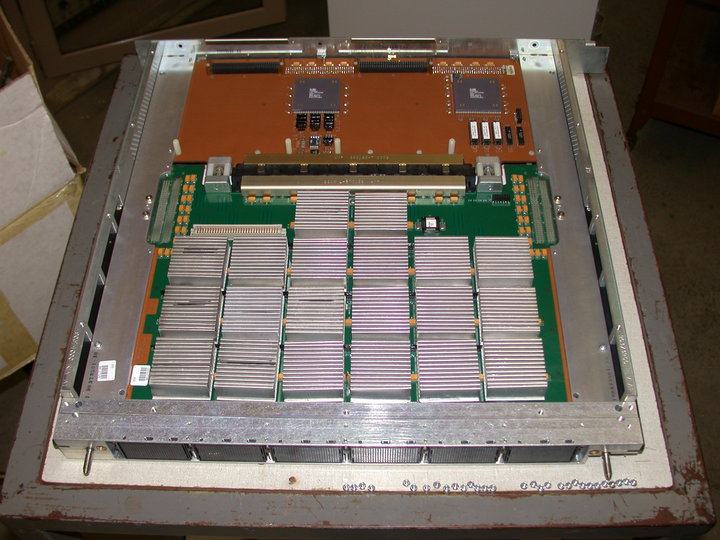

The Cray-3/SSS (Super Scalable System) was a pioneering massively parallel supercomputer project that bonded a two-processor Cray-3 to a new SIMD processing unit based entirely in the computer's main memory (CCC 10-Q, May 1995, http://www.secinfo.com/dsVQy.a1u4.htm). It was later considered as an add-on for the Cray T90 series in the form of the T94/SSS, but there is no evidence this was ever built.

Design: The SSS project started after a Supercomputing Research Center (SRC) engineer, Ken Iobst, noticed a novel way to implement a parallel computer. Previous massively parallel SIMD designs, like the Connection Machines, used a large number of individual processing elements, each consisting of a simple processor and some local memory. Results that needed to be passed from element to element were passed along networking links at relatively slow speeds. This was a serious bottleneck in most parallel designs, which limited their use to certain roles where these interdependencies could be reduced. Iob ...

Massively Parallel

Massively parallel is the term for using a large number of computer processors (or separate computers) to simultaneously perform a set of coordinated computations in parallel. GPUs are massively parallel architectures with tens of thousands of threads. One approach is grid computing, where the processing power of many computers in distributed, diverse administrative domains is opportunistically used whenever a computer is available (Radu Prodan and Thomas Fahringer, *Grid computing: experiment management, tool integration, and scientific workflows*, 2007, pp. 1–4). An example is BOINC, a volunteer-based, opportunistic grid system, whereby the grid provides power only on a best-effort basis (Francisco Fernández de Vega, *Parallel and Distributed Computational Intelligence*, 2010, pp. 65–68). Another approach is grouping many processors in close proximity to each other, as in a computer cluster. In such a centralized system the speed and flexibility of the interconnect beco ...

Static Random Access Memory

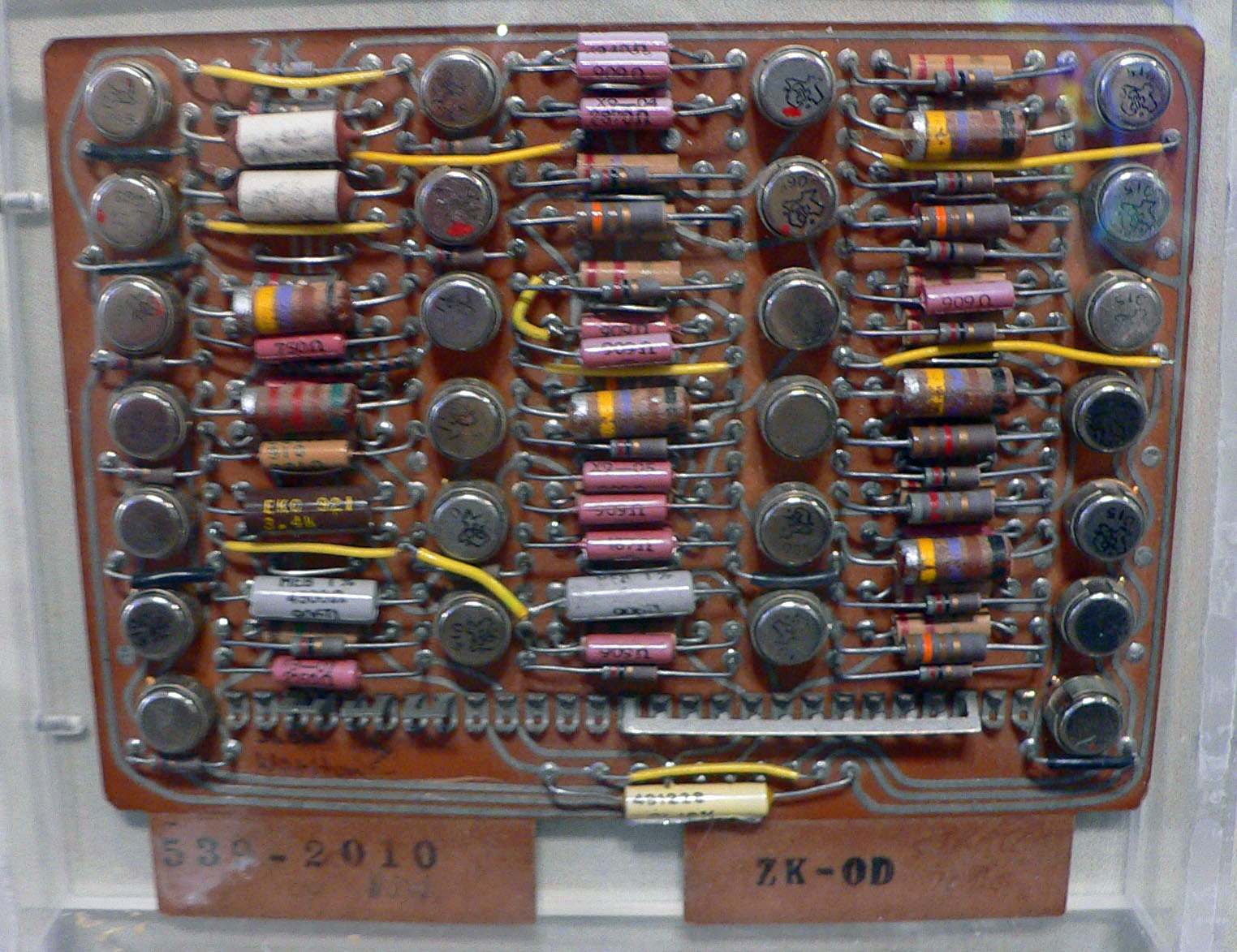

Static random-access memory (static RAM or SRAM) is a type of random-access memory (RAM) that uses latching circuitry (flip-flop) to store each bit. SRAM is volatile memory; data is lost when power is removed. The term *static* differentiates SRAM from DRAM (*dynamic* random-access memory): SRAM will hold its data for as long as power is present, while data in DRAM decays in seconds and thus must be periodically refreshed. SRAM is faster than DRAM but it is more expensive in terms of silicon area and cost; it is typically used for the cache and internal registers of a CPU while DRAM is used for a computer's main memory.

History: Semiconductor bipolar SRAM was invented in 1963 by Robert Norman at Fairchild Semiconductor. MOS SRAM was invented in 1964 by John Schmidt at Fairchild Semiconductor. It was a 64-bit MOS p-channel SRAM. SRAM has been the main driver behind any new CMOS-based technology fabrication process since the 1960s, when CMOS was invented. In 1965 ...

Cray Products

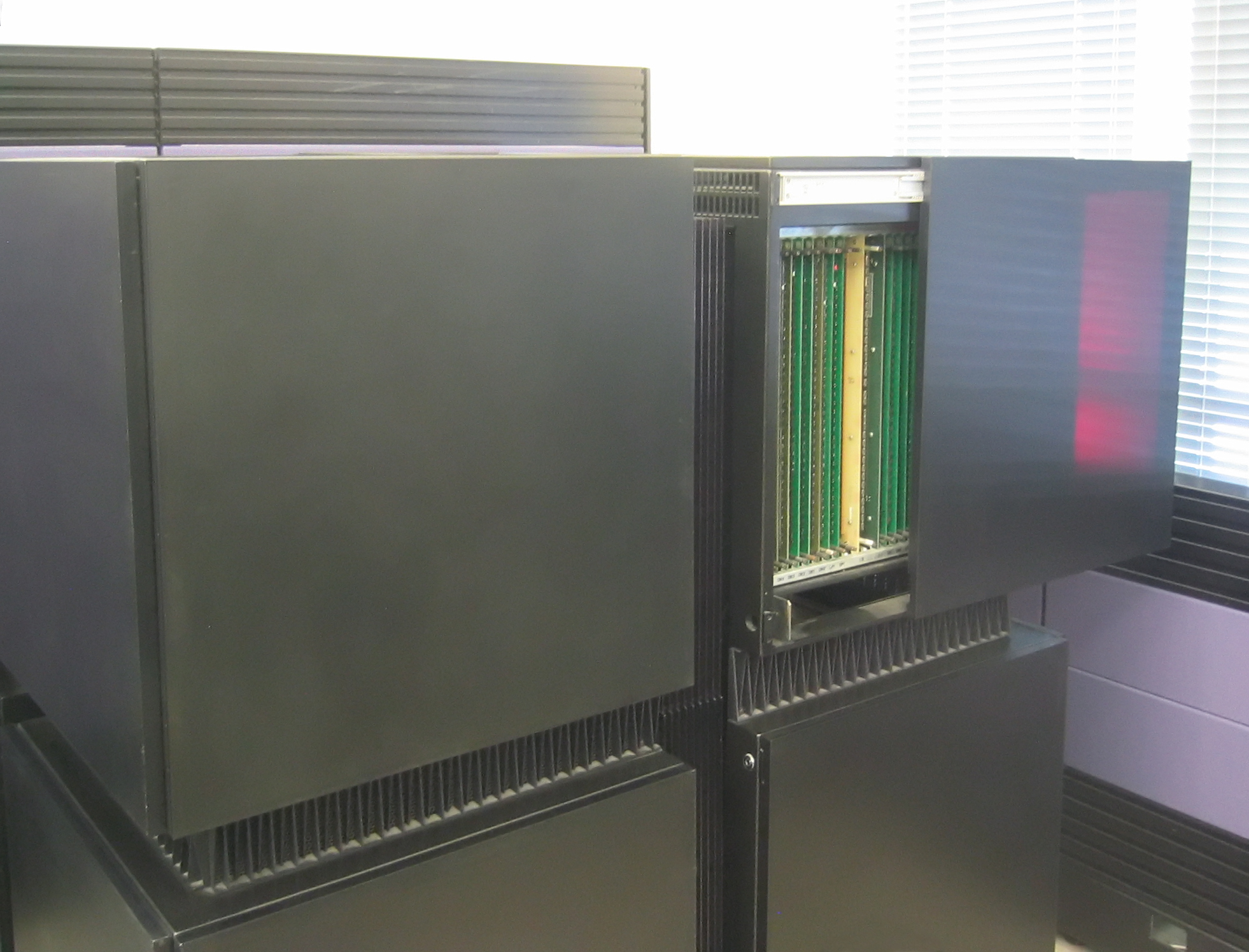

Cray Inc., a subsidiary of Hewlett Packard Enterprise, is an American supercomputer manufacturer headquartered in Seattle, Washington. It also manufactures systems for data storage and analytics. Several Cray supercomputer systems are listed in the TOP500, which ranks the most powerful supercomputers in the world. Cray manufactures its products in part in Chippewa Falls, Wisconsin, where its founder, Seymour Cray, was born and raised. The company also has offices in Bloomington, Minnesota (which have been converted to Hewlett Packard Enterprise offices), and numerous other sales, service, engineering, and R&D locations around the world. The company's predecessor, Cray Research, Inc. (CRI), was founded in 1972 by computer designer Seymour Cray. Seymour Cray later formed Cray Computer Corporation (CCC) in 1989, which went bankrupt in 1995. Cray Research was acquired by Silicon Graphics (SGI) in 1996. Cray Inc. was formed in 2000 when Tera Computer Company purchased the Cray R ...

National Semiconductor

National Semiconductor was an American semiconductor manufacturer which specialized in analog devices and subsystems, formerly with headquarters in Santa Clara, California. The company produced power management integrated circuits, display drivers, audio and operational amplifiers, communication interface products and data conversion solutions. National's key markets included wireless handsets, displays and a variety of broad electronics markets, including medical, automotive, industrial and test and measurement applications. On September 23, 2011, the company formally became part of Texas Instruments as the "Silicon Valley" division.

History (founding): National Semiconductor was founded in Danbury, Connecticut, by Dr. Bernard J. Rothlein on May 27, 1959, when he and seven colleagues, Edward N. Clarke, Joseph J. Gruber, Milton Schneider, Robert L. Hopkins, Robert L. Koch, Richard R. Rau and Arthur V. Siefert, left their employment at the semiconductor division of Sperry Rand Corp ...

Cray Computer Corporation

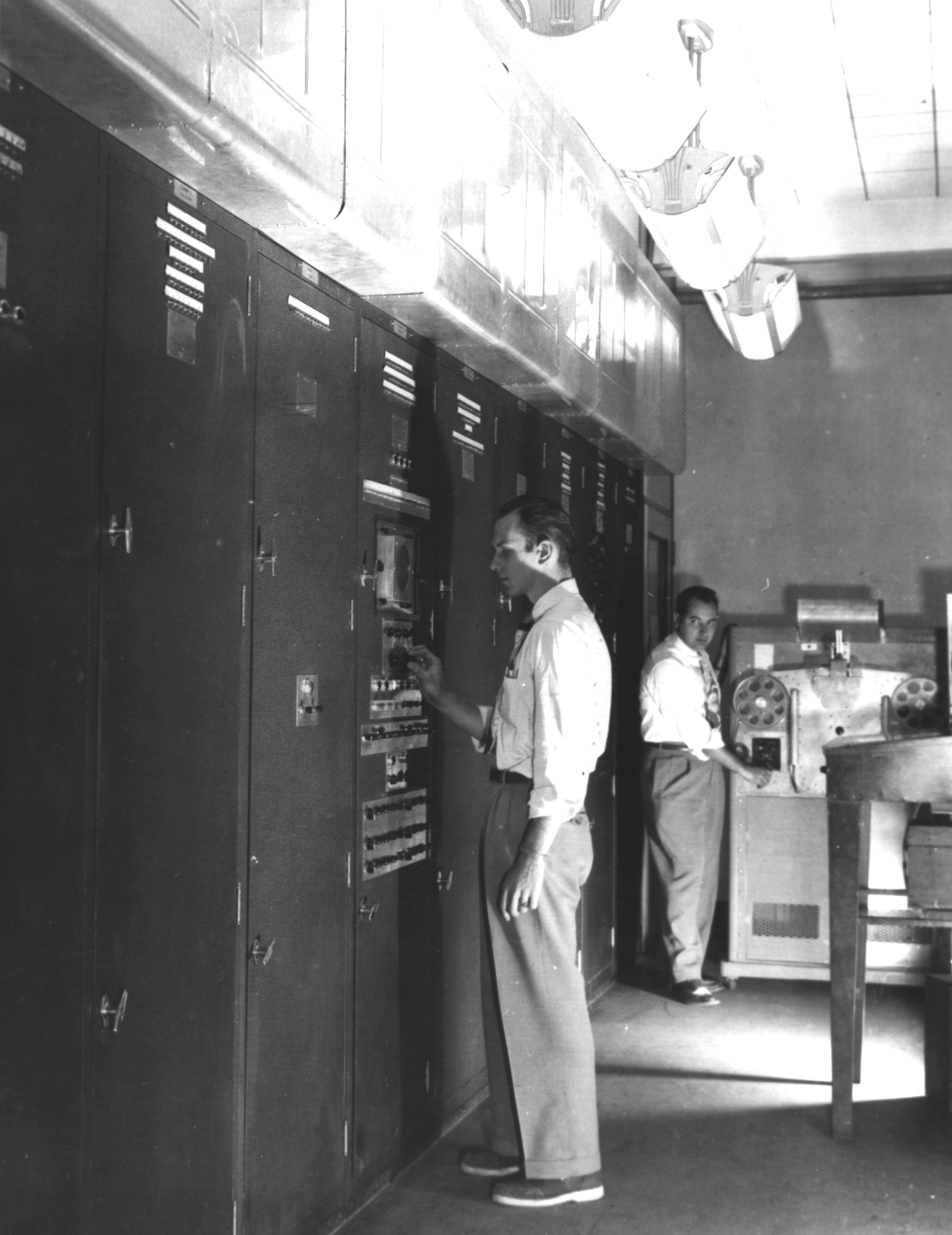

Seymour Roger Cray (September 28, 1925 – October 5, 1996) was an American electrical engineer and supercomputer architect who designed a series of computers that were the fastest in the world for decades, and founded Cray Research, which built many of these machines. Called "the father of supercomputing", Cray has been credited with creating the supercomputer industry. Joel S. Birnbaum, then chief technology officer of Hewlett-Packard, said of him: "It seems impossible to exaggerate the effect he had on the industry; many of the things that high performance computers now do routinely were at the farthest edge of credibility when Seymour envisioned them." Larry Smarr, then director of the National Center for Supercomputing Applications at the University of Illinois at Urbana-Champaign, Unive ...

National Security Agency

The National Security Agency (NSA) is a national-level intelligence agency of the United States Department of Defense, under the authority of the Director of National Intelligence (DNI). The NSA is responsible for global monitoring, collection, and processing of information and data for foreign and domestic intelligence and counterintelligence purposes, specializing in a discipline known as signals intelligence (SIGINT). The NSA is also tasked with the protection of U.S. communications networks and information systems. The NSA relies on a variety of measures to accomplish its mission, the majority of which are clandestine. The existence of the NSA was not revealed until 1975. The NSA has roughly 32,000 employees. Originating as a unit to decipher coded communications in World War II, it was officially formed as the NSA by President Harry S. Truman in 1952. Between then and the end of the Cold War, it became the largest of the U.S. intelligence organizations in terms of pers ...

Central Processing Unit

A central processing unit (CPU), also called a central processor, main processor or just processor, is the electronic circuitry that executes instructions comprising a computer program. The CPU performs basic arithmetic, logic, controlling, and input/output (I/O) operations specified by the instructions in the program. This contrasts with external components such as main memory and I/O circuitry, and specialized processors such as graphics processing units (GPUs). The form, design, and implementation of CPUs have changed over time, but their fundamental operation remains almost unchanged. Principal components of a CPU include the arithmetic–logic unit (ALU) that performs arithmetic and logic operations, processor registers that supply operands to the ALU and store the results of ALU operations, and a control unit that orchestrates the fetching (from memory), decoding and execution (of instructions) by directing the coordinated operations of the ALU, registers and other co ...

64-bit

In computer architecture, 64-bit integers, memory addresses, or other data units are those that are 64 bits wide. Also, 64-bit CPUs and ALUs are those that are based on processor registers, address buses, or data buses of that size. A computer that uses such a processor is a 64-bit computer. From the software perspective, 64-bit computing means the use of machine code with 64-bit virtual memory addresses. However, not all 64-bit instruction sets support full 64-bit virtual memory addresses; x86-64 and ARMv8, for example, support only 48 bits of virtual address, with the remaining 16 bits of the virtual address required to be all 0's or all 1's, and several 64-bit instruction sets support fewer than 64 bits of physical memory address. The term *64-bit* also describes a generation of computers in which 64-bit processors are the norm. 64 bits is a Word (computer archit ...
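The 48-bit virtual address rule described above can be illustrated with a small check. This is a minimal sketch, not any processor's actual logic: the function name `is_canonical` and the constant `VA_BITS` are hypothetical, and the test encodes only the condition stated in the text (the upper 16 bits are all 0's or all 1's). Real processors may impose further conditions; x86-64, for instance, also requires those upper bits to be copies of bit 47.

```python
VA_BITS = 48  # implemented virtual-address bits, as stated in the text (assumed default)


def is_canonical(addr: int, va_bits: int = VA_BITS) -> bool:
    """Return True if the unused upper bits of a 64-bit address are all 0 or all 1."""
    if not 0 <= addr < (1 << 64):
        raise ValueError("address must fit in 64 bits")
    upper = addr >> va_bits                 # the 64 - va_bits unused upper bits
    all_ones = (1 << (64 - va_bits)) - 1    # 0xFFFF when va_bits == 48
    return upper == 0 or upper == all_ones


if __name__ == "__main__":
    for a in (0x00007FFFFFFFFFFF, 0xFFFF800000000000, 0x0001000000000000):
        print(f"{a:#018x} -> {is_canonical(a)}")
```

Under this rule, an address with any mixed pattern in its top 16 bits is rejected, which is why only part of the nominal 64-bit address space is usable.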

Vector Processor

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set whose instructions are designed to operate efficiently and effectively on large one-dimensional arrays of data called *vectors*. This is in contrast to scalar processors, whose instructions operate on single data items only, and in contrast to some of those same scalar processors having additional single instruction, multiple data (SIMD) or SWAR arithmetic units. Vector processors can greatly improve performance on certain workloads, notably numerical simulation and similar tasks. Vector processing techniques also operate in video-game console hardware and in graphics accelerators. Vector machines appeared in the early 1970s and dominated supercomputer design through the 1970s into the 1990s, notably the various Cray platforms. The rapid fall in the price-to-performance ratio of conventional microprocessor designs led to a decline in vector supercom ...
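The contrast between scalar and vector instructions can be made concrete with a toy model that counts how many instructions are issued to add two arrays. This is only an illustrative sketch: `scalar_add`, `vector_add`, and the register length `VLEN = 64` are assumptions made for the example, and the model says nothing about the timing or encoding of any real machine.

```python
VLEN = 64  # hypothetical number of elements one vector instruction handles


def scalar_add(a, b):
    """Model a scalar processor: one add instruction per pair of elements."""
    out, issued = [], 0
    for x, y in zip(a, b):
        out.append(x + y)
        issued += 1
    return out, issued


def vector_add(a, b, vlen=VLEN):
    """Model a vector processor: one add instruction per chunk of up to vlen elements."""
    out, issued = [], 0
    for i in range(0, len(a), vlen):
        out.extend(x + y for x, y in zip(a[i:i + vlen], b[i:i + vlen]))
        issued += 1
    return out, issued


if __name__ == "__main__":
    a = list(range(200))
    b = list(range(200))
    s_out, s_issued = scalar_add(a, b)
    v_out, v_issued = vector_add(a, b)
    print(s_out == v_out, s_issued, v_issued)
```

Both functions produce the same result; the difference is that the vector model issues one instruction per chunk of elements rather than one per element.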

Supercomputer

A supercomputer is a computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, there have existed supercomputers which can perform over 10^17 FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10^11) to tens of teraFLOPS (10^13). Since November 2017, all of the world's fastest 500 supercomputers run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers. Supercomputers play an important role in the field of computational science, and are used for a wide range of computationally intensive tasks in var ...

Connection Machine

A Connection Machine (CM) is a member of a series of massively parallel supercomputers that grew out of doctoral research on alternatives to the traditional von Neumann architecture of computers by Danny Hillis at Massachusetts Institute of Technology (MIT) in the early 1980s. Starting with CM-1, the machines were intended originally for applications in artificial intelligence (AI) and symbolic processing, but later versions found greater success in the field of computational science.

Origin of idea: Danny Hillis and Sheryl Handler founded Thinking Machines Corporation (TMC) in Waltham, Massachusetts, in 1983, moving in 1984 to Cambridge, MA. At TMC, Hillis assembled a team to develop what would become the CM-1 Connection Machine, a design for a massively parallel hypercube-based arrangement of thousands of microprocessors, springing from his PhD thesis work at MIT in Electrical Engineering and Computer Science (1985). The dissertation won the ACM Distinguished Dissertation prize ...

Supercomputing Research Center

A supercomputer is a computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, there have existed supercomputers which can perform over 10^17 FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10^11) to tens of teraFLOPS (10^13). Since November 2017, all of the world's fastest 500 supercomputers run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers. Supercomputers play an important role in the field of computational science, and are used for a wide range of computationally intensive tasks in variou ...