
Sort Algorithms

In computer science, a sorting algorithm is an algorithm that puts elements of a list into an order. The most frequently used orders are numerical order and lexicographical order, and either ascending or descending. Efficient sorting is important for optimizing the efficiency of other algorithms (such as search and merge algorithms) that require input data to be in sorted lists. Sorting is also often useful for canonicalizing data and for producing human-readable output. Formally, the output of any sorting algorithm must satisfy two conditions: (1) the output is in monotonic order (each element is no smaller/larger than the previous element, according to the required order); (2) the output is a permutation (a reordering, yet retaining all of the original elements) of the input. Although some algorithms are designed for sequential access, the highest-performing algorithms assume data is stored in a data structure which allows random access. History and concepts From the beginnin ...
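The two formal conditions above can be checked mechanically. The following is a minimal sketch for ascending order; the function name `is_valid_sort` is hypothetical, and it assumes the elements are hashable and mutually comparable.

```python
from collections import Counter


def is_valid_sort(original, output):
    """Check the two formal conditions for an ascending sort (illustrative helper)."""
    # Condition 1: monotonic order, each element is no smaller than the previous one.
    monotonic = all(a <= b for a, b in zip(output, output[1:]))
    # Condition 2: the output is a permutation of the input (same elements, same counts).
    permutation = Counter(original) == Counter(output)
    return monotonic and permutation
```

An output such as `[1, 2, 2]` for the input `[3, 1, 2]` is monotonic but fails the permutation check, which is why both conditions are needed.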

Merge Sort Animation

Merge, merging, or merger may refer to: Concepts * Merge (traffic), the reduction of the number of lanes on a road * Merge (linguistics), a basic syntactic operation in generative syntax in the Minimalist Program * Merger (politics), the combination of two or more political or administrative entities * Merger (phonology), phonological change whereby originally separate phonemes come to be pronounced exactly the same * Mergers and acquisitions, the buying, selling, dividing and combining of different companies Arts, entertainment, and media * Merger (band), a 1970s English reggae band * ''Merging'' (play), a 2007 one act play written by Charles Messina * Merge Records, an indie-rock record label based in Chapel Hill, North Carolina * "Merger" (''The Office''), a 2002 television episode * ''Merge'', a program broadcast by Lifetime Computer science * Merge (version control), to combine simultaneously ch ...

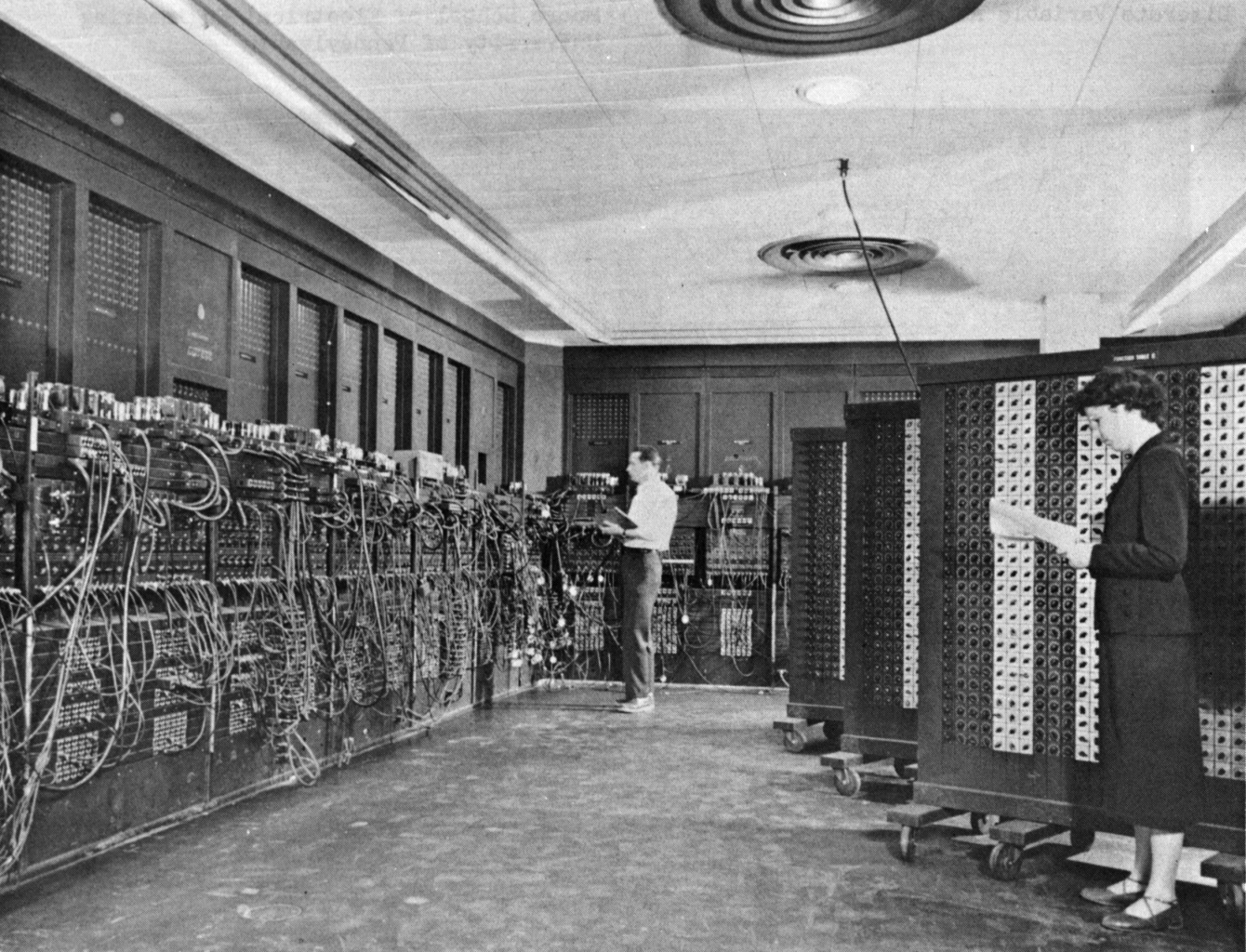

Betty Holberton

Frances Elizabeth Holberton (March 7, 1917 – December 8, 2001) was an American computer scientist who was one of the six original programmers of the first general-purpose electronic digital computer, ENIAC (Electronic Numerical Integrator And Computer). The other five ENIAC programmers were Jean Bartik, Ruth Teitelbaum, Kathleen Antonelli, Marlyn Meltzer, and Frances Spence. Holberton invented breakpoints in computer debugging. Early life and education Holberton was born Frances Elizabeth Snyder in Philadelphia, Pennsylvania in 1917. Her father was John Amos Snyder (1884–1963), her mother was Frances J. Morrow (1892–1981), and she was the third child in a family of eight children. Holberton studied journalism, because its curriculum let her travel far afield. Journalism was also one of the few fields open to women as a career in the 1940s. She stated that on her first day of classes at the University of Pennsylvania, her math professor asked her if she wouldn't be bette ...

Best, Worst And Average Case

In computer science, best, worst, and average cases of a given algorithm express what the resource usage is ''at least'', ''at most'' and ''on average'', respectively. Usually the resource being considered is running time, i.e. time complexity, but could also be memory or some other resource. Best case is the function which performs the minimum number of steps on input data of n elements. Worst case is the function which performs the maximum number of steps on input data of size n. Average case is the function which performs an average number of steps on input data of n elements. In real-time computing, the worst-case execution time is often of particular concern since it is important to know how much time might be needed ''in the worst case'' to guarantee that the algorithm will always finish on time. Average performance and worst-case performance are the most used in algorithm analysis. Less widely found is best-case performance, but it does have uses: for example, where th ...
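To make the three cases concrete, here is a small sketch that counts comparisons in a linear search. Linear search is an example chosen here, not one named in the summary, and `linear_search_steps` is a hypothetical helper. The average assumes every element is equally likely to be the target.

```python
def linear_search_steps(items, target):
    """Return how many comparisons a linear search makes before it stops."""
    steps = 0
    for value in items:
        steps += 1
        if value == target:
            break
    return steps


data = list(range(1, 11))  # n = 10 distinct elements

best = linear_search_steps(data, data[0])    # target is the first element
worst = linear_search_steps(data, data[-1])  # target is the last element
# Average over all targets, assuming each is equally likely.
average = sum(linear_search_steps(data, t) for t in data) / len(data)
```

With these ten elements the best case takes 1 comparison, the worst case takes 10, and the average is 5.5.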

Randomized Algorithm

A randomized algorithm is an algorithm that employs a degree of randomness as part of its logic or procedure. The algorithm typically uses uniformly random bits as an auxiliary input to guide its behavior, in the hope of achieving good performance in the "average case" over all possible choices determined by the random bits; thus either the running time, or the output (or both) are random variables. There is a distinction between algorithms that use the random input so that they always terminate with the correct answer, but where the expected running time is finite (Las Vegas algorithms, for example Quicksort), and algorithms which have a chance of producing an incorrect result (Monte Carlo algorithms, for example the Monte Carlo algorithm for the MFAS problem) or fail to produce a result either by signaling a failure or failing to terminate. In some cases, probabilistic algorithms are the only practical means of solving a problem. In common practice, randomized alg ...
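The Las Vegas case mentioned above can be sketched with a quicksort that picks its pivot at random: the result is always correctly sorted, and only the running time depends on the random choices. This is a simple list-based sketch, not an in-place implementation; the function name and the `rng` parameter are assumptions made for illustration.

```python
import random


def randomized_quicksort(items, rng=random):
    """Sort a sequence with a randomly chosen pivot; the output is always correct."""
    if len(items) <= 1:
        return list(items)
    pivot = rng.choice(items)  # the only place randomness is used
    smaller = [x for x in items if x < pivot]
    equal = [x for x in items if x == pivot]
    larger = [x for x in items if x > pivot]
    return randomized_quicksort(smaller, rng) + equal + randomized_quicksort(larger, rng)
```

Passing a seeded `random.Random(0)` as `rng` makes a run reproducible without changing the sorted result.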

Binary Tree

In computer science, a binary tree is a tree data structure in which each node has at most two children, referred to as the ''left child'' and the ''right child''. That is, it is a ''k''-ary tree with ''k'' = 2. A recursive definition using set theory is that a binary tree is a triple (''L'', ''S'', ''R''), where ''L'' and ''R'' are binary trees or the empty set and ''S'' is a singleton (a single–element set) containing the root. From a graph theory perspective, binary trees as defined here are arborescences. A binary tree may thus be also called a bifurcating arborescence, a term which appears in some early programming books before the modern computer science terminology prevailed. It is also possible to interpret a binary tree as an undirected, rather than directed graph, in which case a binary tree is an ordered, rooted tree. Some authors use rooted binary tree instead of ''binary tree'' to emphasize the fact that the tree is rooted, but as defined above, a binary tree is always rooted. In ma ...
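The recursive definition above maps directly onto a small data type: a tree is either empty (`None` here) or a root value with a left and a right subtree. The class name `Node` and the helper `size` are hypothetical names chosen for this sketch.

```python
class Node:
    """A binary tree node: a root value plus optional left and right subtrees."""

    def __init__(self, value, left=None, right=None):
        self.value = value
        self.left = left    # a Node, or None for the empty tree
        self.right = right  # a Node, or None for the empty tree


def size(tree):
    """Count nodes by following the recursive definition."""
    if tree is None:
        return 0
    return size(tree.left) + 1 + size(tree.right)


# A root with two children; the right child has one left child of its own.
example = Node(1, Node(2), Node(3, Node(4)))
```

Each node holds at most two children, so `size(example)` visits the four nodes exactly once.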

Heap (data Structure)

In computer science, a heap is a tree-based data structure that satisfies the heap property: In a ''max heap'', for any given node C, if P is the parent node of C, then the ''key'' (the ''value'') of P is greater than or equal to the key of C. In a ''min heap'', the key of P is less than or equal to the key of C. The node at the "top" of the heap (with no parents) is called the ''root'' node. The heap is one maximally efficient implementation of an abstract data type called a priority queue, and in fact, priority queues are often referred to as "heaps", regardless of how they may be implemented. In a heap, the highest (or lowest) priority element is always stored at the root. However, a heap is not a sorted structure; it can be regarded as being partially ordered. A heap is a useful data structure when it is necessary to repeatedly remove the object with the highest (or lowest) priority, or when insertions need to be interspersed wit ...
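As an illustration of the min-heap property, the sketch below uses Python's standard `heapq` module, which keeps a binary min heap in a plain list. The array layout (parent of index `i` at `(i - 1) // 2`) is an assumption about that representation rather than something stated in the summary, and `is_min_heap` is a hypothetical helper.

```python
import heapq


def is_min_heap(keys):
    """Check that every parent key is <= its child key in the array layout."""
    return all(keys[(i - 1) // 2] <= keys[i] for i in range(1, len(keys)))


heap = []
for key in [5, 3, 8, 1]:
    heapq.heappush(heap, key)  # insert while preserving the heap property

valid = is_min_heap(heap)
root = heap[0]  # the lowest key always sits at the root

# Repeatedly removing the root yields the keys in ascending order.
drained = [heapq.heappop(heap) for _ in range(len(heap))]
```

The list itself is only partially ordered while it is a heap; the sorted order appears only because the root is removed one key at a time.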

Divide-and-conquer Algorithm

In computer science, divide and conquer is an algorithm design paradigm. A divide-and-conquer algorithm recursively breaks down a problem into two or more sub-problems of the same or related type, until these become simple enough to be solved directly. The solutions to the sub-problems are then combined to give a solution to the original problem. The divide-and-conquer technique is the basis of efficient algorithms for many problems, such as sorting (e.g., quicksort, merge sort), multiplying large numbers (e.g., the Karatsuba algorithm), finding the closest pair of points, syntactic analysis (e.g., top-down parsers), and computing the discrete Fourier transform (FFT). Designing efficient divide-and-conquer algorithms can be difficult. As in mathematical induction, it is often necessary to generalize the problem to make it amenable to a recursive solution. The correctness of a divide-and-conquer algorithm is usually proved by mathematical induction, and its computational cos ...
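Merge sort, one of the examples named above, shows the three steps in a few lines: divide the list in half, solve each half recursively, and combine the sorted halves. This is a straightforward top-down sketch that returns a new list and is not tuned for performance.

```python
def merge_sort(items):
    """Sort a list by divide and conquer, returning a new list."""
    # Base case: a list of zero or one element is simple enough to solve directly.
    if len(items) <= 1:
        return list(items)

    # Divide: split into two sub-problems of the same type.
    mid = len(items) // 2
    left = merge_sort(items[:mid])
    right = merge_sort(items[mid:])

    # Combine: merge the two sorted halves.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:  # <= keeps equal elements in their original order
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged
```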

Big O Notation

Big ''O'' notation is a mathematical notation that describes the limiting behavior of a function when the argument tends towards a particular value or infinity. Big O is a member of a family of notations invented by German mathematicians Paul Bachmann, Edmund Landau, and others, collectively called Bachmann–Landau notation or asymptotic notation. The letter O was chosen by Bachmann to stand for ''Ordnung'', meaning the order of approximation. In computer science, big O notation is used to classify algorithms according to how their run time or space requirements grow as the input size grows. In analytic number theory, big O notation is often used to express a bound on the difference between an arithmetical function and a better understood approximation; one well-known exam ...
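For the case where the argument tends to infinity, the limiting behavior described above is usually formalized as follows. The symbols $f$, $g$, $M$, and $x_0$ are introduced here for illustration and do not appear in the summary.

$$
f(x) = O\bigl(g(x)\bigr) \ \text{as } x \to \infty
\quad\Longleftrightarrow\quad
\exists\, M > 0,\ \exists\, x_0 \ \text{such that}\ |f(x)| \le M\,|g(x)| \ \text{for all } x \ge x_0 .
$$

For example, $3n^2 + 5n = O(n^2)$, since $3n^2 + 5n \le 4n^2$ for all $n \ge 5$ (take $M = 4$ and $x_0 = 5$).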

Counting Sort

Counting is the process of determining the number of elements of a finite set of objects; that is, determining the size of a set. The traditional way of counting consists of continually increasing a (mental or spoken) counter by a unit for every element of the set, in some order, while marking (or displacing) those elements to avoid visiting the same element more than once, until no unmarked elements are left; if the counter was set to one after the first object, the value after visiting the final object gives the desired number of elements. The related term ''enumeration'' refers to uniquely identifying the elements of a finite (combinatorial) set or infinite set by assigning a number to each element. Counting sometimes involves numbers other than one; for example, when counting money, counting out change, "counting by twos" (2, 4, 6, 8, 10, 12, ...), or "counting by fives" (5, 10, 15, 20, 25, ...). There is archaeological evidence suggesting that humans have been ...

Big Omega Notation

Big ''O'' notation is a mathematical notation that describes the limiting behavior of a function when the argument tends towards a particular value or infinity. Big O is a member of a family of notations invented by German mathematicians Paul Bachmann, Edmund Landau, and others, collectively called Bachmann–Landau notation or asymptotic notation. The letter O was chosen by Bachmann to stand for ''Ordnung'', meaning the order of approximation. In computer science, big O notation is used to classify algorithms according to how their run time or space requirements grow as the input size grows. In analytic number theory, big O notation is often used to express a bound on the difference between an arithmetical function and a better understood approximation; one well-known example is the remainder term in the prime number theorem. Big O notation is also used in many other fields to provide similar estimates. Big O notation characterizes functions according to their growth rat ...

Library Sort

A library is a collection of books, and possibly other materials and media, that is accessible for use by its members and members of allied institutions. Libraries provide physical (hard copies) or digital (soft copies) materials, and may be a physical location, a virtual space, or both. A library's collection normally includes printed materials which may be borrowed, and usually also includes a reference section of publications which may only be utilized inside the premises. Resources such as commercial releases of films, television programmes, other video recordings, radio, music and audio recordings may be available in many formats. These include DVDs, Blu-rays, CDs, cassettes, or other applicable formats such as microform. They may also provide access to information, music or other content held on bibliographic databases. In addition, some libraries offer creation stations for makers which offer access to a 3D printing station with a 3D scanner. Libraries can vary widely ...

Timsort

Timsort is a hybrid, stable sorting algorithm, derived from merge sort and insertion sort, designed to perform well on many kinds of real-world data. It was implemented by Tim Peters in 2002 for use in the Python programming language. The algorithm finds subsequences of the data that are already ordered (runs) and uses them to sort the remainder more efficiently. This is done by merging runs until certain criteria are fulfilled. Timsort has been Python's standard sorting algorithm since version 2.3, and starting with 3.11 it uses Timsort with the Powersort merge policy. Timsort is also used to sort arrays of non-primitive type in Java SE 7, on the Android platform, in GNU Octave, on V8, in Swift, and Rust. It uses techniques from Peter McIlroy's 1993 paper "Optimistic Sorting and Information Theoretic Complexity". Operation Timsort was designed to take advantage of ''runs'' of consecutive ordered elements that already exist in most real-world data, ''natural runs''. It ...
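The idea of a natural run can be illustrated with a short sketch that splits a list into maximal non-decreasing stretches. This is only the run-detection idea under simplifying assumptions: it is not Timsort itself, it ignores descending runs and any minimum run length, and `natural_runs` is a hypothetical name.

```python
def natural_runs(items):
    """Split a list into maximal non-decreasing runs (simplified illustration)."""
    runs = []
    start = 0
    for i in range(1, len(items) + 1):
        # A run ends at the end of the list or where the next element is smaller.
        if i == len(items) or items[i] < items[i - 1]:
            runs.append(items[start:i])
            start = i
    return runs
```

On already sorted input this yields a single run, which hints at why run-based merging does little work on data that is mostly ordered.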