
Smoothing Spline

Smoothing splines are function estimates, \hat f(x), obtained from a set of noisy observations y_i of the target f(x_i), chosen to balance a measure of goodness of fit of \hat f(x_i) to y_i against a derivative-based measure of the smoothness of \hat f(x). They provide a means of smoothing noisy (x_i, y_i) data. The most familiar example is the cubic smoothing spline, but there are many other possibilities, including the case where x is a vector quantity.

Cubic spline definition. Let \{x_i, Y_i : i = 1, \dots, n\} be a set of observations, modeled by the relation Y_i = f(x_i) + \epsilon_i, where the \epsilon_i are independent, zero-mean random variables. The cubic smoothing spline estimate \hat f of the function f is defined to be the unique minimizer, in the Sobolev space W^2_2 on a compact interval, of

\sum_{i=1}^n \{Y_i - \hat f(x_i)\}^2 + \lambda \int \hat f''(x)^2 \,dx.

Remarks:

* \lambda \ge 0 is a smoothing parameter, controlling the trade-off between fidelity to the data and roughness of the function estimate. This is oft ...
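The trade-off controlled by \lambda can be illustrated with a discrete analogue of the criterion above. The sketch below is not a cubic smoothing spline: it assumes equally spaced x_i and replaces the integral of \hat f''(x)^2 with a sum of squared second differences (a Whittaker-style smoother). The function names `smooth` and `roughness` and the sample data are illustrative assumptions, not part of the source.

```python
def second_difference_penalty(n):
    """Return D^T D as a dense matrix, where D takes second differences."""
    P = [[0.0] * n for _ in range(n)]
    for r in range(n - 2):
        coeffs = ((r, 1.0), (r + 1, -2.0), (r + 2, 1.0))
        for i, ci in coeffs:
            for j, cj in coeffs:
                P[i][j] += ci * cj
    return P


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / M[i][i]
    return x


def smooth(y, lam):
    """Minimize sum (y_i - f_i)^2 + lam * sum (second differences of f)^2.

    Setting the gradient to zero gives the linear system (I + lam * D^T D) f = y.
    """
    n = len(y)
    P = second_difference_penalty(n)
    A = [[(1.0 if i == j else 0.0) + lam * P[i][j] for j in range(n)]
         for i in range(n)]
    return solve(A, y)


def roughness(f):
    """Discrete stand-in for the integral of f''(x)^2."""
    return sum((f[i] - 2 * f[i + 1] + f[i + 2]) ** 2 for i in range(len(f) - 2))


# Hypothetical noisy observations on an equally spaced grid.
y = [0.0, 1.2, 0.8, 2.1, 1.9, 3.2, 2.7, 4.1]
for lam in (0.0, 0.1, 10.0):
    print(lam, roughness(smooth(y, lam)))
```

With `lam = 0` the fit reproduces the data exactly, and the roughness of the fit shrinks as `lam` grows. Data that already lie on a straight line are left unchanged for any `lam`, because their second differences are zero.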

Goodness Of Fit

The goodness of fit of a statistical model describes how well it fits a set of observations. Measures of goodness of fit typically summarize the discrepancy between observed values and the values expected under the model in question. Such measures can be used in statistical hypothesis testing, e.g. to test for normality of residuals, to test whether two samples are drawn from identical distributions (see Kolmogorov–Smirnov test), or whether outcome frequencies follow a specified distribution (see Pearson's chi-square test). In the analysis of variance, one of the components into which the variance is partitioned may be a lack-of-fit sum of squares.

Fit of distributions. In assessing whether a given distribution is suited to a data set, the following tests and their underlying measures of fit can be used:

* Bayesian information criterion
* Kolmogorov–Smirnov test
* Cramér–von Mises criterion
* Anderson–Darling test
* Berk-Jones tests
* Shapiro–Wilk test
* Chi-s ...

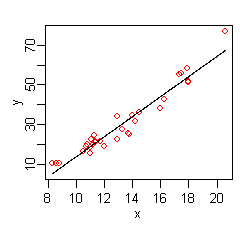

Multivariate Adaptive Regression Splines

In statistics, multivariate adaptive regression splines (MARS) is a form of regression analysis introduced by Jerome H. Friedman in 1991. It is a non-parametric regression technique and can be seen as an extension of linear models that automatically models nonlinearities and interactions between variables. The term "MARS" is trademarked and licensed to Salford Systems. To avoid trademark infringement, many open-source implementations of MARS are called "Earth".

The basics. This section introduces MARS using a few examples. We start with a set of data: a matrix of input variables x, and a vector of the observed responses y, with a response for each row in x. For example, the data could be: Here there is only one independent variable, so the x matrix is just a single column. Given these measurements, we would like to build a model which predicts the expected y for a given x. A linear model for the above data is

\widehat{y} = -37 + 5.1 x ...

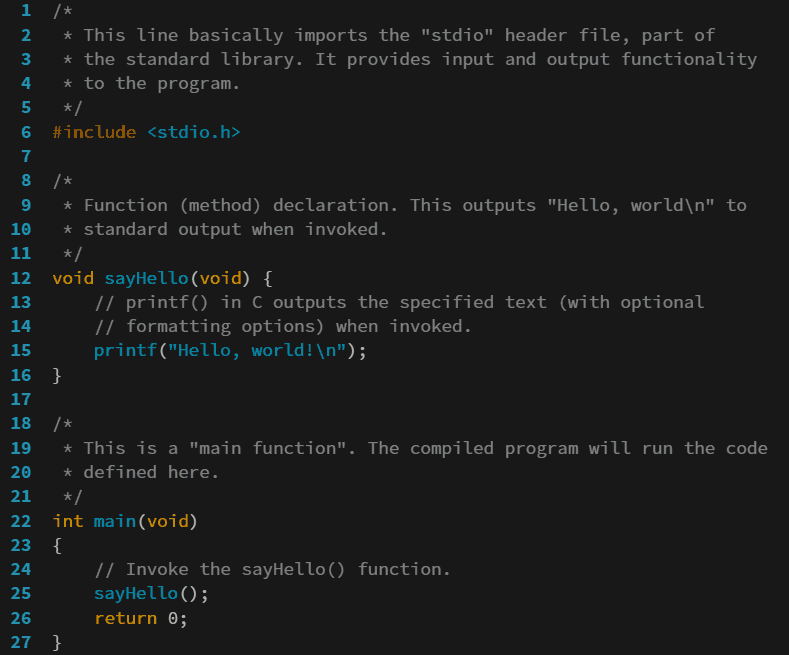

Programming Language

A programming language is a system of notation for writing computer programs. Programming languages are described in terms of their syntax (form) and semantics (meaning), usually defined by a formal language. Languages usually provide features such as a type system, variables, and mechanisms for error handling. An implementation of a programming language, namely an interpreter or a compiler, is required in order to execute programs. An interpreter directly executes the source code, while a compiler produces an executable program. Computer architecture has strongly influenced the design of programming languages, with the most common type (imperative languages, which implement operations in a specified order) developed to perform well on the popular von Neumann architecture. ...

Carl R

Carl may refer to:

* Carl, Georgia, a city in the United States
* Carl, West Virginia, an unincorporated community
* Carl (name), which includes information about the name, variations of the name, and a list of people with the name
* Carl², a TV series
* "Carl", an episode of the television series Aqua Teen Hunger Force
* An informal nickname for a student or alum of Carleton College

CARL may refer to:

* Canadian Association of Research Libraries
* Colorado Alliance of Research Libraries

See also:

* Carle (disambiguation)
* Charles
* Carle, a surname
* Karl (disambiguation)
* Karle (disambiguation)

Karle may refer to:

Places

* Karle (Svitavy District), a municipality and village in the Czech Republic
* Karli, India, a town in Maharashtra, India
** Karla Caves, a complex of Buddhist cave shrines
* Karle, Belgaum, a settlement in Belgaum ...

Least Squares

The method of least squares is a mathematical optimization technique that aims to determine the best-fit function by minimizing the sum of the squares of the differences between the observed values and the predicted values of the model. The method is widely used in areas such as regression analysis, curve fitting and data modeling. The least squares method can be categorized into linear and nonlinear forms, depending on the relationship between the model parameters and the observed data. The method was first proposed by Adrien-Marie Legendre in 1805 and further developed by Carl Friedrich Gauss.

History: Founding. The method of least squares grew out of the fields of astronomy and geodesy, as scientists and mathematicians sought to provide solutions to the challenges of navigating the Earth's oceans during the Age of Discovery. The accurate description of the behavior of celestial bodies was the key to enabling ships to sail in open seas, where sailors could no longer rely on la ...
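As a minimal sketch of the linear form of least squares described above, the following fits a straight line y ≈ a + b x by minimizing the sum of squared residuals, using the standard closed-form solution. The function names `fit_line` and `sum_of_squares` and the sample data are illustrative assumptions, not taken from the source.

```python
def fit_line(xs, ys):
    """Return (intercept, slope) minimizing sum (y_i - a - b * x_i)^2."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Centered sums of squares and cross-products.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def sum_of_squares(xs, ys, intercept, slope):
    """Sum of squared differences between observed and predicted values."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


# Hypothetical observations scattered around a straight line.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.1]
a, b = fit_line(xs, ys)
print(a, b, sum_of_squares(xs, ys, a, b))
```

Any other choice of intercept or slope gives a larger value of `sum_of_squares` on the same data, which is what "best fit" means in this setting.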

Manifold Learning

Nonlinear dimensionality reduction, also known as manifold learning, is any of various related techniques that aim to project high-dimensional data, potentially existing across non-linear manifolds which cannot be adequately captured by linear decomposition methods, onto lower-dimensional latent manifolds, with the goal of either visualizing the data in the low-dimensional space, or learning the mapping (either from the high-dimensional space to the low-dimensional embedding or vice versa) itself. The techniques described below can be understood as generalizations of linear decomposition methods used for dimensionality reduction, such as singular value decomposition and principal component analysis.

Applications of NLDR. High-dimensional data can be hard for machines to work with, requiring significant time and space for analysis. It also presents a challenge for humans, since it is hard to visualize or understand data in more than three dimensions. Reducing the dimensionality of ...

Elastic Map

Elastic maps provide a tool for nonlinear dimensionality reduction. By their construction, they are a system of elastic springs embedded in the data space. This system approximates a low-dimensional manifold. The elastic coefficients of this system allow a switch from completely unstructured k-means clustering (zero elasticity) to estimators located close to linear PCA manifolds (for high bending and low stretching moduli). With some intermediate values of the elasticity coefficients, this system effectively approximates non-linear principal manifolds. This approach is based on a mechanical analogy between principal manifolds, which pass through "the middle" of the data distribution, and elastic membranes and plates. The method was developed by A.N. Gorban, A.Y. Zinovyev and A.A. Pitenko in 1996–1998.

Energy of elastic map. Let \mathcal{S} be a data set in a finite-dimensional Euclidean space. An elastic map is represented by a set of nodes \mathbf{w}_j in the same space. Each datapo ...

Thin Plate Splines

Thin plate splines (TPS) are a spline-based technique for data interpolation and smoothing. They were introduced to geometric design by Duchon. They are an important special case of a polyharmonic spline. Robust Point Matching (RPM) is a common extension, known for short as the TPS-RPM algorithm.

Physical analogy. The name "thin plate spline" refers to a physical analogy involving the bending of a plate or thin sheet of metal. Just as the metal has rigidity, the TPS fit also resists bending, implying a penalty involving the smoothness of the fitted surface. In the physical setting, the deflection is in the z direction, orthogonal to the plane. In order to apply this idea to the problem of coordinate transformation, one interprets the lifting of the plate as a displacement of the x or y coordinates within the plane. In 2D cases, given a set of K corresponding control points (knots), the TPS warp is described by 2(K+3) parameters which include 6 global affine motion parameters and ...

Karl Longin Zeller

Karl Longin Zeller (December 28, 1924, Šiauliai, Lithuania – July 20, 2006, Tübingen) was a German mathematician and computer scientist who worked in numerical analysis and approximation theory. He is the namesake of Zeller operators. Zeller was drafted into the Wehrmacht, and lost his right arm on the Soviet front of World War II. He earned his Ph.D. from the University of Tübingen in 1950, under the supervision of Konrad Knopp and Erich Kamke, and remained at Tübingen for most of his career as a professor and as director of the computer center. He left Tübingen in 1959 for a professorship in Stuttgart but returned to Tübingen in 1960 with a personal chair in "the mathematics of supercomputer facilities", making him one of the founders of computer science in Germany. He has over 200 academic descendants. In 1993, he was given an honorary doctorate by the University of Siegen. The University of Siegen is a public research university located in Siegen, North Rhi ...

Sobolev Space

In mathematics, a Sobolev space is a vector space of functions equipped with a norm that is a combination of L^p-norms of the function together with its derivatives up to a given order. The derivatives are understood in a suitable weak sense to make the space complete, i.e. a Banach space. Intuitively, a Sobolev space is a space of functions possessing sufficiently many derivatives for some application domain, such as partial differential equations, and equipped with a norm that measures both the size and regularity of a function. Sobolev spaces are named after the Russian mathematician Sergei Sobolev. Their importance comes from the fact that weak solutions of some important partial differential equations exist in appropriate Sobolev spaces, even when there are no strong solutions in spaces of continuous functions with the derivatives understood in the classical sense.

Motivation. In this section and throughout the article \Omega is an open subset of \R^n. There are man ...
