|

Semantic Analysis (machine Learning)

In machine learning, semantic analysis of a text corpus is the task of building structures that approximate concepts from a large set of documents. It generally does not involve prior semantic understanding of the documents. Semantic analysis strategies include: * Metalanguages based on first-order logic, which can analyze the speech of humans. * Understanding the semantics of a text is symbol grounding: if language is grounded, it is equal to recognizing a machine-readable meaning. For the restricted domain of spatial analysis, a computer-based language understanding system was demonstrated. * Latent semantic analysis (LSA), a class of techniques where documents are represented as vectors in a term space. A prominent example is probabilistic latent semantic analysis (PLSA). * Latent Dirichlet allocation, which involves attributing document terms to topics. * n-grams and hidden Markov models, which work by representing the term stream as a Markov chain, in which each term is ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of Computational statistics, statistical algorithms that can learn from data and generalise to unseen data, and thus perform Task (computing), tasks without explicit Machine code, instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed Neural network (machine learning), neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

N-gram

An ''n''-gram is a sequence of ''n'' adjacent symbols in particular order. The symbols may be ''n'' adjacent letter (alphabet), letters (including punctuation marks and blanks), syllables, or rarely whole words found in a language dataset; or adjacent phonemes extracted from a speech-recording dataset, or adjacent base pairs extracted from a genome. They are collected from a text corpus or speech corpus. If Latin numerical prefixes are used, then ''n''-gram of size 1 is called a "unigram", size 2 a "bigram" (or, less commonly, a "digram") etc. If, instead of the Latin ones, the Cardinal number (linguistics), English cardinal numbers are furtherly used, then they are called "four-gram", "five-gram", etc. Similarly, using Greek numerical prefixes such as "monomer", "dimer", "trimer", "tetramer", "pentamer", etc., or English cardinal numbers, "one-mer", "two-mer", "three-mer", etc. are used in computational biology, for polymers or oligomers of a known size, called k-mer, ''k'' ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Stochastic Semantic Analysis

Stochastic (; ) is the property of being well-described by a random probability distribution. ''Stochasticity'' and ''randomness'' are technically distinct concepts: the former refers to a modeling approach, while the latter describes phenomena; in everyday conversation, however, these terms are often used interchangeably. In probability theory, the formal concept of a ''stochastic process'' is also referred to as a ''random process''. Stochasticity is used in many different fields, including image processing, signal processing, computer science, information theory, telecommunications, chemistry, ecology, neuroscience, physics, and cryptography. It is also used in finance (e.g., stochastic oscillator), due to seemingly random changes in the different markets within the financial sector and in medicine, linguistics, music, media, colour theory, botany, manufacturing and geomorphology. Etymology The word ''stochastic'' in English was originally used as an adjective with the definit ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Semantic Similarity

Semantic similarity is a metric defined over a set of documents or terms, where the idea of distance between items is based on the likeness of their meaning or semantic content as opposed to lexicographical similarity. These are mathematical tools used to estimate the strength of the semantic relationship between units of language, concepts or instances, through a numerical description obtained according to the comparison of information supporting their meaning or describing their nature. The term semantic similarity is often confused with semantic relatedness. Semantic relatedness includes any relation between two terms, while semantic similarity only includes "is a" relations. For example, "car" is similar to "bus", but is also related to "road" and "driving". Computationally, semantic similarity can be estimated by defining a topological similarity, by using ontologies to define the distance between terms/concepts. For example, a naive metric for the comparison of concepts ord ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Explicit Semantic Analysis

In natural language processing and information retrieval, explicit semantic analysis (ESA) is a Vector space model, vectoral representation of text (individual words or entire documents) that uses a document corpus as a knowledge base. Specifically, in ESA, a word is represented as a column vector in the tf*idf, tf–idf matrix of the text corpus and a document (string of words) is represented as the centroid of the vectors representing its words. Typically, the text corpus is English Wikipedia, though other corpora including the Open Directory Project have been used. ESA was designed by Evgeniy Gabrilovich and Shaul Markovitch as a means of improving document classification, text categorization and has been used by this pair of researchers to compute what they refer to as "Semantics, semantic relatedness" by means of cosine similarity between the aforementioned vectors, collectively interpreted as a space of "concepts explicitly defined and described by humans", where Wikipedia arti ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Markov Chain

In probability theory and statistics, a Markov chain or Markov process is a stochastic process describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event. Informally, this may be thought of as, "What happens next depends only on the state of affairs ''now''." A countably infinite sequence, in which the chain moves state at discrete time steps, gives a discrete-time Markov chain (DTMC). A continuous-time process is called a continuous-time Markov chain (CTMC). Markov processes are named in honor of the Russian mathematician Andrey Markov. Markov chains have many applications as statistical models of real-world processes. They provide the basis for general stochastic simulation methods known as Markov chain Monte Carlo, which are used for simulating sampling from complex probability distributions, and have found application in areas including Bayesian statistics, biology, chemistry, economics, fin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Hidden Markov Model

A hidden Markov model (HMM) is a Markov model in which the observations are dependent on a latent (or ''hidden'') Markov process (referred to as X). An HMM requires that there be an observable process Y whose outcomes depend on the outcomes of X in a known way. Since X cannot be observed directly, the goal is to learn about state of X by observing Y. By definition of being a Markov model, an HMM has an additional requirement that the outcome of Y at time t = t_0 must be "influenced" exclusively by the outcome of X at t = t_0 and that the outcomes of X and Y at t < t_0 must be conditionally independent of at given at time . Estimation of the parameters in an HMM can be performed using maximum likelihood estimation. For linear chain HMMs, the Baum–Welch algorithm can be used to estimate parameters. Hidden Markov models are known for their applications to thermodynamics, statistical mechanics, physics, chem ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Latent Dirichlet Allocation

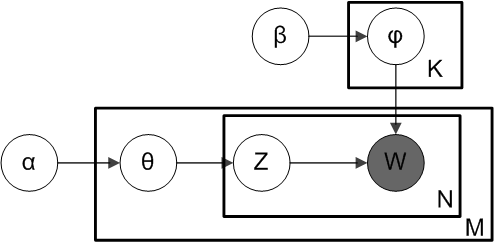

In natural language processing, latent Dirichlet allocation (LDA) is a Bayesian network (and, therefore, a generative statistical model) for modeling automatically extracted topics in textual corpora. The LDA is an example of a Bayesian topic model. In this, observations (e.g., words) are collected into documents, and each word's presence is attributable to one of the document's topics. Each document will contain a small number of topics. History In the context of population genetics, LDA was proposed by J. K. Pritchard, M. Stephens and P. Donnelly in 2000. LDA was applied in machine learning by David Blei, Andrew Ng and Michael I. Jordan in 2003. Overview Population genetics In population genetics, the model is used to detect the presence of structured genetic variation in a group of individuals. The model assumes that alleles carried by individuals under study have origin in various extant or past populations. The model and various inference algorithms allow sci ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Text Corpus

In linguistics and natural language processing, a corpus (: corpora) or text corpus is a dataset, consisting of natively digital and older, digitalized, language resources, either annotated or unannotated. Annotated, they have been used in corpus linguistics for statistical statistical hypothesis testing, hypothesis testing, checking occurrences or validating linguistic rules within a specific language territory. Overview A corpus may contain texts in a single language (''monolingual corpus'') or text data in multiple languages (''multilingual corpus''). In order to make the corpora more useful for doing linguistic research, they are often subjected to a process known as annotation. An example of annotating a corpus is part-of-speech tagging, or ''POS-tagging'', in which information about each word's part of speech (verb, noun, adjective, etc.) is added to the corpus in the form of ''tags''. Another example is indicating the Lemma (morphology), lemma (base) form of each word ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Probabilistic Latent Semantic Analysis

Probabilistic latent semantic analysis (PLSA), also known as probabilistic latent semantic indexing (PLSI, especially in information retrieval circles) is a statistical technique for the analysis of two-mode and co-occurrence data. In effect, one can derive a low-dimensional representation of the observed variables in terms of their affinity to certain hidden variables, just as in latent semantic analysis, from which PLSA evolved. Compared to standard latent semantic analysis which stems from linear algebra and downsizes the occurrence tables (usually via a singular value decomposition), probabilistic latent semantic analysis is based on a mixture decomposition derived from a latent class model. Model Considering observations in the form of co-occurrences (w,d) of words and documents, PLSA models the probability of each co-occurrence as a mixture of conditionally independent multinomial distributions: : P(w,d) = \sum_c P(c) P(d, c) P(w, c) = P(d) \sum_c P(c, d) P(w, c) with ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Feature (machine Learning)

In machine learning and pattern recognition, a feature is an individual measurable property or characteristic of a data set. Choosing informative, discriminating, and independent features is crucial to produce effective algorithms for pattern recognition, classification, and regression tasks. Features are usually numeric, but other types such as strings and graphs are used in syntactic pattern recognition, after some pre-processing step such as one-hot encoding. The concept of "features" is related to that of explanatory variables used in statistical techniques such as linear regression. Feature types In feature engineering, two types of features are commonly used: numerical and categorical. Numerical features are continuous values that can be measured on a scale. Examples of numerical features include age, height, weight, and income. Numerical features can be used in machine learning algorithms directly. Categorical features are discrete values that can be grouped into ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |