SPMD

In computing, single program, multiple data (SPMD) refers to computational models for exploiting parallelism in which multiple processors cooperate in executing a program to obtain results faster. The term SPMD was introduced in 1983 and denoted two different computational models: (1) by Michel Auguin (University of Nice Sophia-Antipolis) and François Larbey (Thomson/Sintra), a "fork-and-join" and data-parallel approach in which the parallel tasks ("single program") are split up and run simultaneously in lockstep on multiple SIMD processors with different inputs; and (2) by Frederica Darema (IBM), in which "all (processors) processes begin executing the same program... but through synchronization directives ... self-schedule themselves to execute different instructions and act on different data", enabling MIMD parallelization of a given program, and is a more general approach than data-parallel a ...
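
The second model described above (one program, rank-dependent behaviour, explicit synchronization) can be illustrated with a minimal sketch. It uses Python threads as stand-ins for processors; the names `spmd_program`, `run_spmd`, `rank`, and `size` are hypothetical and chosen only for this illustration, not taken from any SPMD system.

```python
import threading


def spmd_program(rank, size, data, partial, barrier, result):
    """The single program: every worker runs this same function."""
    # Each rank acts on different data (a strided slice of the input).
    chunk = data[rank::size]
    partial[rank] = sum(x * x for x in chunk)

    # Synchronization point: no rank proceeds until all have finished.
    barrier.wait()

    # Rank-dependent branch: only rank 0 executes the combining step.
    if rank == 0:
        result.append(sum(partial))


def run_spmd(data, size=4):
    """Launch `size` workers that all execute spmd_program."""
    partial = [0] * size
    result = []
    barrier = threading.Barrier(size)
    workers = [
        threading.Thread(
            target=spmd_program,
            args=(rank, size, data, partial, barrier, result),
        )
        for rank in range(size)
    ]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return result[0]


if __name__ == "__main__":
    print(run_spmd(list(range(10))))  # sum of squares of 0..9
```

All workers share one code path and diverge only through `rank`, which is the property the quoted definition emphasizes; real SPMD programs would typically run as separate processes rather than threads.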

Message Passing Interface

The Message Passing Interface (MPI) is a portable message-passing standard designed to function on parallel computing architectures. The MPI standard defines the syntax and semantics of library routines that are useful to a wide range of users writing portable message-passing programs in C, C++, and Fortran. There are several open-source MPI implementations, which fostered the development of a parallel software industry and encouraged the development of portable and scalable large-scale parallel applications. History: The message passing interface effort began in the summer of 1991, when a small group of researchers started discussions at a mountain retreat in Austria. Out of that discussion came a Workshop on Standards for Message Passing in a Distributed Memory Environment, held on April 29–30, 1992 in Williamsburg, Virginia. Attendees at Williamsburg discussed the basic features essential to a standard message-passing interface and established a working group to continu ...

Single Instruction, Multiple Threads

Single instruction, multiple threads (SIMT) is an execution model used in parallel computing where single instruction, multiple data (SIMD) is combined with zero-overhead multithreading, i.e. multithreading where the hardware is capable of switching between threads on a cycle-by-cycle basis. There are two models of multithreading involved. In addition to the zero-overhead multithreading mentioned, the SIMD execution hardware is virtualized to represent a multiprocessor, but it is inferior to an SPMD processor in that instructions in all "threads" are executed in lock-step in the lanes of the SIMD processor, which can only execute the same instruction in a given cycle across all lanes. The SIMT execution model has been implemented on several GPUs and is relevant for general-purpose computing on graphics processing units (GPGPU), e.g. some supercomputer ...

Single Instruction, Multiple Data

Single instruction, multiple data (SIMD) is a type of parallel processing in Flynn's taxonomy. SIMD describes computers with multiple processing elements that perform the same operation on multiple data points simultaneously. SIMD can be internal (part of the hardware design) and it can be directly accessible through an instruction set architecture (ISA), but it should not be confused with an ISA. Such machines exploit data level parallelism, but not concurrency: there are simultaneous (parallel) computations, but each unit performs exactly the same instruction at any given moment (just with different data). A simple example is adding many pairs of numbers together: all of the SIMD units perform an addition, but each one has a different pair of values to add. SIMD is particularly applicable to common tasks such as adjusting the contrast in a digital image or adjusting the volume of digital audio. Most modern Cen ...

Data Parallelism

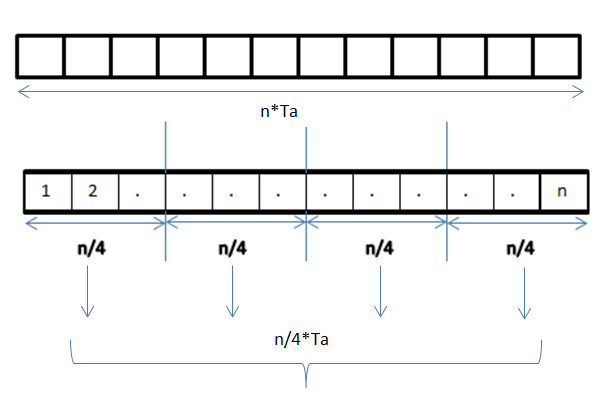

Data parallelism is parallelization across multiple processors in parallel computing environments. It focuses on distributing the data across different nodes, which operate on the data in parallel. It can be applied to regular data structures such as arrays and matrices by working on each element in parallel. It contrasts with task parallelism, another form of parallelism. A data-parallel job on an array of n elements can be divided equally among all the processors. Suppose we want to sum all the elements of the given array and a single addition takes Ta time units. With sequential execution, the process takes n×Ta time units, since it sums all the elements of the array. If we instead execute this job as a data-parallel job on 4 processors, the time reduces to (n/4)×Ta plus the merging overhead. Parallel execution therefore yields a speedup approaching 4 over sequential execution when the merging overhead is small. The Locality of reference, ...
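
The array-sum example and its timing model can be sketched as follows. This is an illustrative sketch only: the function names `parallel_sum`, `sequential_time`, and `parallel_time` are hypothetical, the merging overhead is passed in as an assumed constant, and Python threads are used to show the decomposition rather than to obtain a real speedup.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def parallel_sum(data, processors=4):
    """Split data into equal chunks, sum each chunk in a worker, then merge."""
    chunk_size = max(1, math.ceil(len(data) / processors))
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor(max_workers=processors) as pool:
        partial_sums = list(pool.map(sum, chunks))
    # Merging step: combine the per-chunk results.
    return sum(partial_sums)


def sequential_time(n, t_a):
    """Modeled sequential cost: n additions of t_a time units each."""
    return n * t_a


def parallel_time(n, t_a, processors, merge_overhead):
    """Modeled parallel cost: (n / processors) * t_a plus merging overhead."""
    return (n / processors) * t_a + merge_overhead


if __name__ == "__main__":
    print(parallel_sum(list(range(1, 101))))
    seq = sequential_time(1000, 2.0)
    par = parallel_time(1000, 2.0, 4, merge_overhead=10.0)
    print(seq / par)  # modeled speedup, below 4 because of the overhead
```

With a merging overhead of zero the modeled speedup is exactly 4; any positive overhead pushes it below 4.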

OpenMP

OpenMP is an application programming interface (API) that supports multi-platform shared-memory multiprocessing programming in C, C++, and Fortran, on many platforms, instruction-set architectures and operating systems, including Solaris, AIX, FreeBSD, HP-UX, Linux, macOS, Windows and OpenHarmony. It consists of a set of compiler directives, library routines, and environment variables that influence run-time behavior. OpenMP is managed by the nonprofit technology consortium OpenMP Architecture Review Board (or OpenMP ARB), jointly defined by a broad swath of leading computer hardware and software vendors, including Arm, AMD, IBM, Intel, Cray, HP, Fujitsu, Nvidia, NEC, Red Hat, Texas Instruments, and Oracle Corporation. OpenMP uses a portable, scalable model that gives programmers a simple and flexible interface for developing parallel applications for platforms ranging from the standard desktop computer to the supercomputer. An application built with ...

Fetch-and-add

In computer science, the fetch-and-add (FAA) CPU instruction atomically increments the contents of a memory location by a specified value. That is, fetch-and-add performs the following operation: increment the value at address x by a, where x is a memory location and a is some value, and return the original value at x, in such a way that if this operation is executed by one process in a concurrent system, no other process will ever see an intermediate result. Fetch-and-add can be used to implement concurrency control structures such as mutex locks and semaphores. Overview: The motivation for having an atomic fetch-and-add is that operations that appear in programming languages as x = x + a are not safe in a concurrent system, where multiple processes or threads are running concurrently (either in a multi-processor system, or preemptively scheduled onto some single-core systems). The reason is that such an operation is actually implemented as multiple machine instructions: (1) load in ...
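
The semantics described above (add a value, return the original, with no visible intermediate state) can be emulated in software. The sketch below is an assumption-laden illustration: Python exposes no hardware fetch-and-add, so the hypothetical `AtomicInt` class uses a lock to make the read-modify-write indivisible, and `take_tickets` and `demo` are made-up helper names.

```python
import threading


class AtomicInt:
    """Software stand-in for a memory location supporting fetch-and-add."""

    def __init__(self, value=0):
        self._value = value
        self._lock = threading.Lock()

    def fetch_and_add(self, a):
        # The lock makes load, add, and store appear as one indivisible step.
        with self._lock:
            original = self._value
            self._value = original + a
            return original

    def load(self):
        with self._lock:
            return self._value


def take_tickets(counter, count, out):
    """Draw `count` tickets; each ticket is the value returned by the FAA."""
    for _ in range(count):
        out.append(counter.fetch_and_add(1))


def demo(threads=8, per_thread=1000):
    counter = AtomicInt(0)
    results = [[] for _ in range(threads)]
    workers = [
        threading.Thread(target=take_tickets, args=(counter, per_thread, results[i]))
        for i in range(threads)
    ]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    tickets = [ticket for result in results for ticket in result]
    return counter.load(), tickets


if __name__ == "__main__":
    final, tickets = demo()
    # No increment is lost and no ticket is handed out twice.
    print(final, len(set(tickets)))
```

Because each caller receives a distinct original value, the same pattern is a common basis for ticket-style locks.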

TeraGrid

TeraGrid was an e-Science grid computing infrastructure combining resources at eleven partner sites. The project started in 2001 and operated from 2004 through 2011. The TeraGrid integrated high-performance computers, data resources and tools, and experimental facilities. Resources included more than a petaflops of computing capability and more than 30 petabytes of online and archival data storage, with rapid access and retrieval over high-performance computer network connections. Researchers could also access more than 100 discipline-specific databases. TeraGrid was coordinated through the Grid Infrastructure Group (GIG) at the University of Chicago, working in partnership with the resource provider sites in the United States. History: The US National Science Foundation (NSF) issued a solicitation asking for a "distributed terascale facility" from program director Richard L. Hilderbrandt. The TeraGrid project was launched in August 2001 with $53 million in funding to four sites: ...

Shared Memory Architecture

A shared-memory architecture (SM) is a distributed computing architecture in which the nodes share the same memory as well as the same storage ("Memory: Shared vs Distributed - UFRC", https://help.rc.ufl.edu/doc/Memory:_Shared_vs_Distributed, accessed 2024-03-13). It contrasts with shared-nothing architecture, in which each node has distinct memory and storage, and with shared-disk architecture, in which the nodes share the same storage but not the same memory. This is distinct from the use of shared memory between different programs or threads on a single node, with or without multiprocessing.

Compare-and-swap

In computer science, compare-and-swap (CAS) is an atomic instruction used in multithreading to achieve synchronization. It compares the contents of a memory location with a given (the previous) value and, only if they are the same, modifies the contents of that memory location to a new given value. This is done as a single atomic operation. The atomicity guarantees that the new value is calculated based on up-to-date information; if the value had been updated by another thread in the meantime, the write would fail. The result of the operation must indicate whether it performed the substitution; this can be done either with a simple boolean response (this variant is often called compare-and-set), or by returning the value read from the memory location (not the value written to it), thus "swapping" the read and written values. Overview: A compare-and-swap operation is an atomic version of the following pseudocode, where * denotes access through a pointer: function cas(p: poin ...
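
The boolean variant described above (compare-and-set) can be sketched in software, together with the usual retry loop built on it. This is not the truncated pseudocode from the source: the class `AtomicCell` and the helper `atomic_increment` are hypothetical names, and a lock stands in for the hardware atomicity that Python does not expose.

```python
import threading


class AtomicCell:
    """Software stand-in for a memory location supporting compare-and-set."""

    def __init__(self, value):
        self._value = value
        self._lock = threading.Lock()

    def load(self):
        with self._lock:
            return self._value

    def compare_and_set(self, expected, new):
        # Compare and conditional write happen as one indivisible step.
        with self._lock:
            if self._value != expected:
                return False  # another thread changed the value; write fails
            self._value = new
            return True


def atomic_increment(cell):
    """Retry until the CAS succeeds, so the update uses up-to-date data."""
    while True:
        old = cell.load()
        if cell.compare_and_set(old, old + 1):
            return old


def demo(threads=4, per_thread=500):
    cell = AtomicCell(0)

    def work():
        for _ in range(per_thread):
            atomic_increment(cell)

    workers = [threading.Thread(target=work) for _ in range(threads)]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return cell.load()


if __name__ == "__main__":
    print(demo())
```

A failed `compare_and_set` signals that another thread intervened between the read and the write, so the loop rereads the value and recomputes before trying again.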

Computing

Computing is any goal-oriented activity requiring, benefiting from, or creating computing machinery. It includes the study and experimentation of algorithmic processes, and the development of both hardware and software. Computing has scientific, engineering, mathematical, technological, and social aspects. Major computing disciplines include computer engineering, computer science, cybersecurity, data science, information systems, information technology, and software engineering. The term computing is also synonymous with counting and calculating. In earlier times, it was used in reference to the action performed by mechanical computing machines, and before that, to human computers. History: The history of computing is longer than the history of computing hardware and includes the history of methods intended for pen and paper (or for chalk and slate) with or without the aid of tables. ...