RL (complexity)

Randomized Logarithmic-space (RL), sometimes called RLP (Randomized Logarithmic-space Polynomial-time), is the complexity class of problems in computational complexity theory that are solvable in logarithmic space and polynomial time by probabilistic Turing machines with one-sided error. It is named in analogy with RP, which is similar but has no logarithmic space restriction. Definition: The probabilistic Turing machines in the definition of RL never accept incorrectly but are allowed to reject incorrectly less than 1/3 of the time; this is called ''one-sided error''. The constant 1/3 is arbitrary; any ''x'' with 0 < ''x'' < 1 would suffice. This error can be made 2^(−''p''(''x'')) times smaller for any polynomial ''p''(''x'') without using more than polynomial time or logarithmic space by running the algorithm repeatedly. Relation to other complexity classes: Sometimes the name RL is reserved for the class of problems solvable by logarithmic-space probabilisti ...
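
The repetition argument for one-sided error can be illustrated with a minimal sketch. The names `amplify_one_sided` and `toy_trial` are hypothetical, and `toy_trial` only stands in for a real RL machine: it never accepts a NO instance and accepts a YES instance with probability 2/3.

```python
import random

def amplify_one_sided(trial, x, rounds):
    """Accept if any of `rounds` independent runs of `trial` accepts.

    Because a one-sided test never accepts incorrectly, a single
    accepting run is conclusive; only the loop counter must be stored.
    """
    return any(trial(x) for _ in range(rounds))

def toy_trial(x, rng=random):
    # Hypothetical stand-in for one run of an RL machine:
    # a NO instance (x is False) is never accepted, and a YES
    # instance (x is True) is accepted with probability 2/3.
    return bool(x) and rng.random() < 2 / 3
```

Under these assumptions a YES instance is wrongly rejected only if every run rejects, which happens with probability (1/3)^rounds, while a NO instance is still never accepted.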

Complexity Class

In computational complexity theory, a complexity class is a set of computational problems of related resource-based complexity. The two most commonly analyzed resources are time and memory. In general, a complexity class is defined in terms of a type of computational problem, a model of computation, and a bounded resource like time or memory. In particular, most complexity classes consist of decision problems that are solvable with a Turing machine, and are differentiated by their time or space (memory) requirements. For instance, the class P is the set of decision problems solvable by a deterministic Turing machine in polynomial time. There are, however, many complexity classes defined in terms of other types of problems (e.g. counting problems and function problems) and using other models of computation (e.g. probabilistic Turing machines, interactive proof systems, Boolean circuits, and quantum computers). The study of the relationships between complexity class ...

Computational Complexity Theory

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and relating these classes to each other. A computational problem is a task solved by a computer. A computational problem is solvable by mechanical application of mathematical steps, such as an algorithm. A problem is regarded as inherently difficult if its solution requires significant resources, whatever the algorithm used. The theory formalizes this intuition by introducing mathematical models of computation to study these problems and quantifying their computational complexity, i.e., the amount of resources needed to solve them, such as time and storage. Other measures of complexity are also used, such as the amount of communication (used in communication complexity), the number of gates in a circuit (used in circuit complexity) and the number of processors (used in parallel computing). One of the roles of compu ...

Logarithmic Space

In computational complexity theory, L (also known as LSPACE or DLOGSPACE) is the complexity class containing decision problems that can be solved by a deterministic Turing machine using a logarithmic amount of writable memory space. Formally, the Turing machine has two tapes, one of which encodes the input and can only be read, whereas the other tape has logarithmic size but can be read as well as written. Logarithmic space is sufficient to hold a constant number of pointers into the input and a logarithmic number of boolean flags, and many basic logspace algorithms use the memory in this way. Complete problems and logical characterization: Every non-trivial problem in L is complete under log-space reductions, so weaker reductions are required to identify meaningful notions of L-completeness, the most common being first-order reductions. A 2004 result by Omer Reingold shows that USTCON, the problem of whether there exists a path ...
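
The "constant number of pointers into the input" pattern can be sketched with a palindrome check. This is an illustrative example chosen here, not one taken from the entry: the input string is only read, and the working memory consists of two indices, each needing about log2(n) bits for an input of length n.

```python
def is_palindrome(s: str) -> bool:
    """Check whether `s` reads the same forwards and backwards.

    The input is never modified; the only working storage is the
    two indices `i` and `j`, i.e. two pointers into the input.
    """
    i, j = 0, len(s) - 1
    while i < j:
        if s[i] != s[j]:
            return False
        i += 1
        j -= 1
    return True
```

Python integers are not literally bounded to a logarithmic number of bits, so this is a sketch of the memory discipline rather than a formal logspace machine.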

Polynomial Time

In computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to be related by a constant factor. Since an algorithm's running time may vary among different inputs of the same size, one commonly considers the worst-case time complexity, which is the maximum amount of time required for inputs of a given size. Less common, and usually specified explicitly, is the average-case complexity, which is the average of the time taken on inputs of a given size (this makes sense because there are only a finite number of possible inputs of a given size). In both cases, the time complexity is generally express ...
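
The difference between worst-case and average-case counts can be made concrete with a small sketch. It assumes linear search as the algorithm and counts one comparison as one elementary operation; the function names are hypothetical, and the "inputs of a given size" are taken to be the n possible target positions in a list of length n.

```python
def linear_search_steps(items, target):
    """Return how many comparisons linear search makes before stopping."""
    steps = 0
    for value in items:
        steps += 1  # one elementary operation: a single comparison
        if value == target:
            break
    return steps

def worst_and_average(n):
    """Return (worst-case, average-case) comparison counts for size n."""
    items = list(range(n))
    counts = [linear_search_steps(items, t) for t in items]
    return max(counts), sum(counts) / n
```

For example, `worst_and_average(4)` returns `(4, 2.5)`: the worst case scans all four elements, while the average over the four target positions is 2.5 comparisons.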

Probabilistic Turing Machine

In theoretical computer science, a probabilistic Turing machine is a non-deterministic Turing machine that chooses between the available transitions at each point according to some probability distribution. As a consequence, a probabilistic Turing machine can, unlike a deterministic Turing machine, have stochastic results; that is, on a given input and instruction state machine, it may have different run times, or it may not halt at all; furthermore, it may accept an input in one execution and reject the same input in another execution. In the case of equal probabilities for the transitions, probabilistic Turing machines can be defined as deterministic Turing machines having an additional "write" instruction where the value of the write is uniformly distributed in the Turing machine's alphabet (generally, an equal likelihood of writing a "1" or a "0" on to the tape). Another common reformulation is simply a deterministic Turing machine with an ad ...

One-sided Error

In computing, a Monte Carlo algorithm is a randomized algorithm whose output may be incorrect with a certain (typically small) probability. Two examples of such algorithms are the Karger–Stein algorithm and the Monte Carlo algorithm for minimum feedback arc set. The name refers to the grand casino in the Principality of Monaco at Monte Carlo, which is well-known around the world as an icon of gambling. The term "Monte Carlo" was first introduced in 1947 by Nicholas Metropolis. Las Vegas algorithms are a dual of Monte Carlo algorithms and never return an incorrect answer. However, they may make random choices as part of their work. As a result, the time taken might vary between runs, even with the same input. If there is a procedure for verifying whether the answer given by a Monte Carlo algorithm is correct, and the probability of a correct answer is bounded above zero, then with probability one, running the algorithm repeatedly while testing the answers will eventually give a corr ...
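
The "run repeatedly while testing the answers" idea can be sketched as follows. The Monte Carlo step here is a deliberately trivial, hypothetical one (pick a random element), and `verify` is an assumed checking procedure supplied by the caller; neither comes from the entry itself.

```python
import random

def monte_carlo_guess(items, rng):
    # One Monte Carlo attempt: a uniformly random element,
    # which may or may not be a correct answer.
    return rng.choice(items)

def repeat_until_verified(items, verify, rng, max_tries=None):
    """Repeat the Monte Carlo step until `verify` confirms an answer.

    Returns (answer, tries). With `max_tries=None` the loop runs until
    an answer is verified; otherwise it gives up and returns (None, tries).
    """
    tries = 0
    while max_tries is None or tries < max_tries:
        tries += 1
        candidate = monte_carlo_guess(items, rng)
        if verify(candidate):
            return candidate, tries
    return None, tries
```

Any answer this loop returns has passed the verifier, so only the number of tries is random, which is the Las Vegas behaviour described above.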

RP (complexity)

In computational complexity theory, randomized polynomial time (RP) is the complexity class of problems for which a probabilistic Turing machine exists with these properties:
* It always runs in polynomial time in the input size
* If the correct answer is NO, it always returns NO
* If the correct answer is YES, then it returns YES with probability at least 1/2 (otherwise, it returns NO).
In other words, the algorithm is allowed to flip a truly random coin while it is running. The only case in which the algorithm can return YES is if the actual answer is YES; therefore if the algorithm terminates and produces YES, then the correct answer is definitely YES; however, the algorithm can terminate with NO ''regardless'' of the actual answer. That is, if the algorithm returns NO, it might be wrong. Some authors call this class R, although this name is more commonly used for the class of recursive languages. If the correct answer is YES and the algorithm is run ''n'' times with the ...
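
As a hedged aside on repeated runs: assuming the ''n'' runs use independent random bits, the three properties above bound the chance that a YES instance is never recognized, since each run returns NO with probability at most 1/2.

```latex
\Pr\bigl[\text{all } n \text{ runs return NO} \;\big|\; \text{correct answer is YES}\bigr]
  \;\le\; \left(\tfrac{1}{2}\right)^{n} \;=\; 2^{-n}
```

When the correct answer is NO, every run returns NO, so repetition introduces no false YES.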

NL (complexity)

In computational complexity theory, NL (Nondeterministic Logarithmic-space) is the complexity class containing decision problems that can be solved by a nondeterministic Turing machine using a logarithmic amount of memory space. NL is a generalization of L, the class for logspace problems on a deterministic Turing machine. Since any deterministic Turing machine is also a nondeterministic Turing machine, we have that L is contained in NL. NL can be formally defined in terms of the computational resource nondeterministic space (or NSPACE) as NL = NSPACE(log ''n''). Important results in complexity theory allow us to relate this complexity class with other classes, telling us about the relative power of the resources involved. Results in the field of algorithms, on the other hand, tell us which problems can be solved with this resource. Like much of complexity theory, many important questions about NL are still open (see Unsolved problems in computer science). Occasionally NL ...

BPL (complexity)

In computational complexity theory, BPL (Bounded-error Probabilistic Logarithmic-space), sometimes called BPLP (Bounded-error Probabilistic Logarithmic-space Polynomial-time), is the complexity class of problems solvable in logarithmic space and polynomial time by probabilistic Turing machines with two-sided error. It is named in analogy with BPP, which is similar but has no logarithmic space restriction. Error model: The probabilistic Turing machines in the definition of BPL may accept or reject incorrectly less than 1/3 of the time; this is called ''two-sided error''. The constant 1/3 is arbitrary; any ''x'' with 0 ≤ ''x'' < 1/2 would suffice. This error can be made 2^(−''p''(''x'')) times smaller for any polynomial ''p''(''x'') without using more than polynomial time or logarithmic space by running the algorithm repeatedly. Related classes: Since two-sided error is more general than one-sided error, ...
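
For two-sided error, the usual way to realize the repetition is a majority vote. A minimal sketch follows; `majority_vote` and `noisy_trial` are hypothetical names, and `noisy_trial` merely stands in for one run of a BPL machine that is correct with probability 2/3 on every input.

```python
import random

def majority_vote(trial, x, rounds):
    """Run `trial` independently `rounds` times and return the majority answer.

    Only a single counter of accepting runs is kept between runs.
    """
    accepts = sum(1 for _ in range(rounds) if trial(x))
    return 2 * accepts > rounds

def noisy_trial(x, rng=random):
    # Hypothetical stand-in for one run of a BPL machine:
    # returns the correct answer bool(x) with probability 2/3,
    # and the wrong answer otherwise.
    correct = bool(x)
    return correct if rng.random() < 2 / 3 else not correct
```

Unlike the one-sided case, a single accepting run proves nothing here, so the answer has to come from the vote; the counter stays below `rounds`, so for polynomially many rounds it needs only logarithmically many bits.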

L (complexity)

In computational complexity theory, L (also known as LSPACE or DLOGSPACE) is the complexity class containing decision problems that can be solved by a deterministic Turing machine using a logarithmic amount of writable memory space. Formally, the Turing machine has two tapes, one of which encodes the input and can only be read, whereas the other tape has logarithmic size but can be read as well as written. Logarithmic space is sufficient to hold a constant number of pointers into the input and a logarithmic number of boolean flags, and many basic logspace algorithms use the memory in this way. Complete problems and logical characterization: Every non-trivial problem in L is complete under log-space reductions, so weaker reductions are required to identify meaningful notions of L-completeness, the most common being first-order reductions. A 2004 result by Omer Reingold shows that USTCON, the problem of whether there exists a pat ...

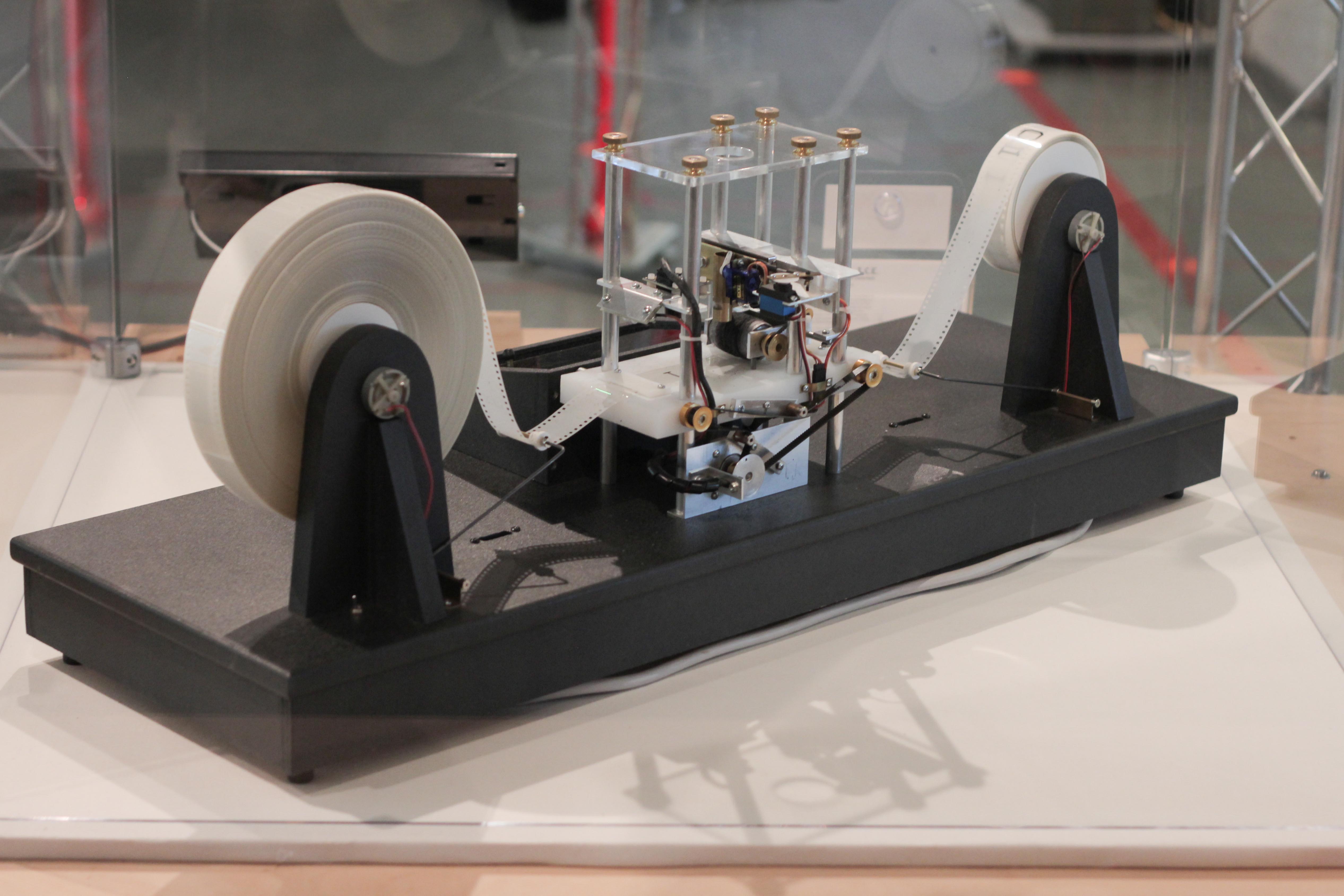

Deterministic Turing Machine

A Turing machine is a mathematical model of computation describing an abstract machine that manipulates symbols on a strip of tape according to a table of rules. Despite the model's simplicity, it is capable of implementing any computer algorithm. The machine operates on an infinite memory tape divided into discrete cells, each of which can hold a single symbol drawn from a finite set of symbols called the alphabet of the machine. It has a "head" that, at any point in the machine's operation, is positioned over one of these cells, and a "state" selected from a finite set of states. At each step of its operation, the head reads the symbol in its cell. Then, based on the symbol and the machine's own present state, the machine writes a symbol into the same cell, and moves the head one step to the left or the right, or halts the computation. The choice of which replacement symbol to write and which direction to move is based on a finite table that specifies what to do for each co ...
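
The step cycle described above (read the symbol, consult the table, write, move, possibly halt) can be sketched as a small simulator. The rule format, the function name `run_turing_machine`, the step limit, and the bit-flipping example machine `FLIP_RULES` are all assumptions made for illustration.

```python
from collections import defaultdict

def run_turing_machine(rules, tape_input, start, halt_states,
                       blank="_", max_steps=10_000):
    """Simulate a single-tape deterministic Turing machine.

    `rules` maps (state, symbol) to (new_state, symbol_to_write, move),
    where move is "L" or "R". Returns (final_state, tape_contents).
    """
    # Unvisited cells read as blank, modelling the unbounded tape.
    tape = defaultdict(lambda: blank, enumerate(tape_input))
    state, head = start, 0
    for _ in range(max_steps):
        if state in halt_states:
            break
        symbol = tape[head]                        # read the current cell
        state, write, move = rules[(state, symbol)]
        tape[head] = write                         # write into the same cell
        head += 1 if move == "R" else -1           # move one step
    cells = [tape[i] for i in range(min(tape), max(tape) + 1)] if tape else []
    return state, "".join(cells).strip(blank)

# Hypothetical example machine: flip every bit, halt at the first blank.
FLIP_RULES = {
    ("scan", "0"): ("scan", "1", "R"),
    ("scan", "1"): ("scan", "0", "R"),
    ("scan", "_"): ("done", "_", "R"),
}
```

Calling `run_turing_machine(FLIP_RULES, "1011", "scan", {"done"})` returns `("done", "0100")`. The `max_steps` cap is a practical safeguard in this sketch, since a Turing machine in general need not halt.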

Noam Nisan

Noam Nisan (Hebrew: נעם ניסן; born June 20, 1961) is an Israeli computer scientist and a professor of computer science at the Hebrew University of Jerusalem. He is known for his research in computational complexity theory and algorithmic game theory. Biography: Nisan did his undergraduate studies at the Hebrew University, graduating in 1984. He went to the University of California, Berkeley for graduate school, and received a Ph.D. in 1988 under the supervision of Richard Karp. After postdoctoral studies at the Massachusetts Institute of Technology he joined the Hebrew University faculty in 1990. Selected publications: Nisan is the author of ...