
P Value

In null-hypothesis significance testing, the *p*-value is the probability of obtaining test results at least as extreme as the result actually observed, under the assumption that the null hypothesis is correct. A very small *p*-value means that such an extreme observed outcome would be very unlikely under the null hypothesis. Reporting *p*-values of statistical tests is common practice in academic publications of many quantitative fields. Since the precise meaning of *p*-value is hard to grasp, misuse is widespread and has been a major topic in metascience.

Basic concepts: In statistics, every conjecture concerning the unknown probability distribution of a collection of random variables representing the observed data $X$ in some study is called a *statistical hypothesis*. If we state one hypothesis only and the aim of the statistical test is to see whether this hypothesis is tenable, but not to investigate other specific hypotheses, then such a test is called a null ...
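
As a rough illustration of the definition above, the sketch below computes a one-sided *p*-value for an exact binomial test. The coin-flip setup, the function name `binomial_p_value`, and the numbers are assumptions chosen for the example; none of them come from the text.

```python
from math import comb


def binomial_p_value(k: int, n: int, p0: float = 0.5) -> float:
    """One-sided p-value P(X >= k) for X ~ Binomial(n, p0) under the null."""
    # Sum the null probabilities of every outcome at least as extreme as k.
    return sum(comb(n, i) * p0**i * (1 - p0) ** (n - i) for i in range(k, n + 1))


# Hypothetical data: 9 heads in 10 flips of a coin assumed fair under the null.
p_value = binomial_p_value(9, 10)
print(f"p-value = {p_value:.4f}")
```

Only two outcomes are at least as extreme as 9 heads (9 or 10 heads), so the null probability is 11/1024, roughly 0.011. A two-sided version would also count the equally extreme outcomes in the other tail.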

P-factor

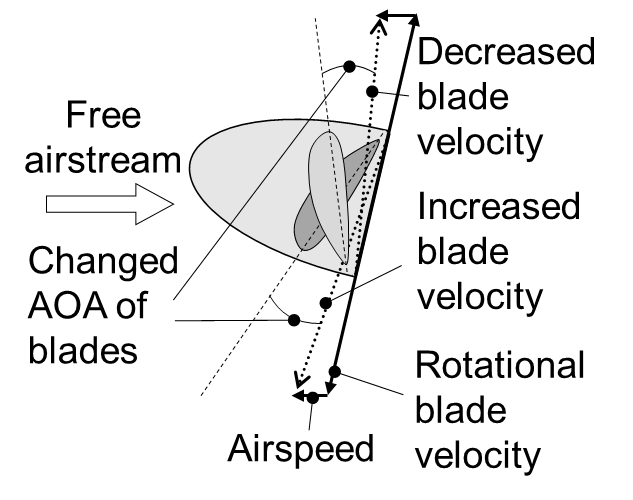

P-factor, also known as asymmetric blade effect and asymmetric disc effect, is an aerodynamic phenomenon experienced by a moving propeller, where the propeller's center of thrust moves off-center when the aircraft is at a high angle of attack. This shift in the location of the center of thrust will exert a yawing moment on the aircraft, causing it to yaw slightly to one side. A rudder input is required to counteract the yawing tendency.

Causes: When a propeller aircraft is flying at cruise speed in level flight, the propeller disc is perpendicular to the relative airflow through the propeller. Each of the propeller blades contacts the air at the same angle and speed, and thus the thrust produced is evenly distributed across the propeller. However, at lower speeds the aircraft will typically be in a nose-high attitude, with the propeller disc rotated slightly toward the horizontal. This has two effects. Firstly, propeller blades will be more forward when in the down positi ...

Fisher's Combined Probability Test

In statistics, Fisher's method, also known as Fisher's combined probability test, is a technique for data fusion or "meta-analysis" (analysis of analyses). It was developed by and named for Ronald Fisher. In its basic form, it is used to combine the results from several independence tests bearing upon the same overall hypothesis ($H_0$).

Application to independent test statistics: Fisher's method combines extreme value probabilities from each test, commonly known as "p-values", into one test statistic ($X^2$) using the formula

$$X^2_{2k} \sim -2\sum_{i=1}^{k} \log(p_i),$$

where $p_i$ is the p-value for the $i$th hypothesis test. When the p-values tend to be small, the test statistic $X^2$ will be large, which suggests that the null hypotheses are not true for every test. When all the null hypotheses are true, and the $p_i$ (or their corresponding test statistics) are independent, $X^2$ has a chi-squared distribution with 2 ...
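
The combination step can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the function name `fisher_combined` and the sample p-values are hypothetical. It relies on the standard result that the statistic is referred to a chi-squared distribution with 2k degrees of freedom for k independent tests, and on the closed-form upper tail that exists for an even number of degrees of freedom.

```python
from math import exp, factorial, log


def fisher_combined(p_values):
    """Return (X2, combined p-value) for k independent p-values."""
    k = len(p_values)
    # Test statistic: X2 = -2 * sum(log(p_i)).
    x2 = -2.0 * sum(log(p) for p in p_values)
    # Upper tail of a chi-squared variable with 2k degrees of freedom:
    # P(chi2_2k > x) = exp(-x/2) * sum_{j<k} (x/2)^j / j!
    half = x2 / 2.0
    combined = exp(-half) * sum(half**j / factorial(j) for j in range(k))
    return x2, combined


# Hypothetical p-values from three independent studies.
x2, p = fisher_combined([0.04, 0.10, 0.20])
print(f"X2 = {x2:.3f}, combined p = {p:.4f}")
```

With a single p-value the combined value reduces to that p-value, which is a quick sanity check on the tail formula.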

F-distribution

In probability theory and statistics, the *F*-distribution or F-ratio, also known as Snedecor's *F* distribution or the Fisher–Snedecor distribution (after Ronald Fisher and George W. Snedecor), is a continuous probability distribution that arises frequently as the null distribution of a test statistic, most notably in the analysis of variance (ANOVA) and other *F*-tests.

Definition: The F-distribution with $d_1$ and $d_2$ degrees of freedom is the distribution of

$$X = \frac{S_1/d_1}{S_2/d_2}$$

where $S_1$ and $S_2$ are independent random variables with chi-square distributions with respective degrees of freedom $d_1$ and $d_2$. It can be shown to follow that the probability density function (pdf) for $X$ is given by

$$\begin{aligned} f(x; d_1,d_2) &= \frac{\sqrt{\dfrac{(d_1 x)^{d_1}\, d_2^{d_2}}{(d_1 x+d_2)^{d_1+d_2}}}}{x\,\mathrm{B}\!\left(\frac{d_1}{2},\frac{d_2}{2}\right)} \\ &= \frac{1}{\mathrm{B}\!\left(\frac{d_1}{2},\frac{d_2}{2}\right)} \left(\frac{d_1}{d_2}\right)^{\frac{d_1}{2}} x^{\frac{d_1}{2}-1} \left(1+\frac{d_1}{d_2}\,x\right)^{-\frac{d_1+d_2}{2}} \end{aligned}$$

for real $x > 0$. Here $\mathrm{B}$ is the beta function. In many applications, the parameters $d_1$ and $d_2$ are positive integers, but the distribution is well-define ...
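
To make the density concrete, here is a small sketch that evaluates the standard F-distribution pdf in its two equivalent forms and checks that they agree. The helper names `beta_fn`, `f_pdf`, and `f_pdf_sqrt_form` are hypothetical, and the beta function is built from the gamma function in Python's standard library.

```python
from math import gamma, sqrt


def beta_fn(a: float, b: float) -> float:
    """Beta function expressed through the gamma function."""
    return gamma(a) * gamma(b) / gamma(a + b)


def f_pdf(x: float, d1: float, d2: float) -> float:
    """F density written as a power of x times a power of (1 + d1*x/d2)."""
    if x <= 0:
        return 0.0
    ratio = d1 / d2
    return (
        ratio ** (d1 / 2)
        * x ** (d1 / 2 - 1)
        * (1 + ratio * x) ** (-(d1 + d2) / 2)
        / beta_fn(d1 / 2, d2 / 2)
    )


def f_pdf_sqrt_form(x: float, d1: float, d2: float) -> float:
    """The same density written with the square-root numerator."""
    if x <= 0:
        return 0.0
    inner = (d1 * x) ** d1 * d2**d2 / (d1 * x + d2) ** (d1 + d2)
    return sqrt(inner) / (x * beta_fn(d1 / 2, d2 / 2))


# Both forms should give the same value at any x > 0.
print(f_pdf(0.7, 5, 3), f_pdf_sqrt_form(0.7, 5, 3))
```

For $d_1 = d_2 = 2$ the density simplifies to $(1+x)^{-2}$, so the value at $x = 1$ is 0.25.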

F-test

An *F*-test is any statistical test in which the test statistic has an *F*-distribution under the null hypothesis. It is most often used when comparing statistical models that have been fitted to a data set, in order to identify the model that best fits the population from which the data were sampled. Exact "*F*-tests" mainly arise when the models have been fitted to the data using least squares. The name was coined by George W. Snedecor, in honour of Ronald Fisher. Fisher initially developed the statistic as the variance ratio in the 1920s.

Common examples: Common examples of the use of *F*-tests include the study of the following cases:

* The hypothesis that the means of a given set of normally distributed populations, all having the same standard deviation, are equal. This is perhaps the best-known *F*-test, and plays an important role in the analysis of variance (ANOVA).
* The hypothesis that a proposed regression model fits the data well. See Lack-of-fit sum of ...

Student's T-distribution

In probability and statistics, Student's *t*-distribution (or simply the *t*-distribution) is any member of a family of continuous probability distributions that arise when estimating the mean of a normally distributed population in situations where the sample size is small and the population's standard deviation is unknown. It was developed by English statistician William Sealy Gosset under the pseudonym "Student". The *t*-distribution plays a role in a number of widely used statistical analyses, including Student's *t*-test for assessing the statistical significance of the difference between two sample means, the construction of confidence intervals for the difference between two population means, and in linear regression analysis. Student's *t*-distribution also arises in the Bayesian analysis of data from a normal family. If we take a sample of $n$ observations from a normal distribution, then the *t*-distribution with $\nu = n-1$ degrees of freedom can be de ...

T-test

A *t*-test is any statistical hypothesis test in which the test statistic follows a Student's *t*-distribution under the null hypothesis. It is most commonly applied when the test statistic would follow a normal distribution if the value of a scaling term in the test statistic were known (typically, the scaling term is unknown and therefore a nuisance parameter). When the scaling term is estimated based on the data, the test statistic, under certain conditions, follows a Student's *t*-distribution. The *t*-test's most common application is to test whether the means of two populations are different.

History: The term "*t*-statistic" is abbreviated from "hypothesis test statistic". In statistics, the t-distribution was first derived as a posterior distribution in 1876 by Helmert and Lüroth. The t-distribution also appeared in a more ...
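
The role of the estimated scaling term is easiest to see in the one-sample case, which is chosen here as the simplest instance rather than the two-population comparison mentioned above. In this sketch the function name `one_sample_t` and the data are hypothetical; the sample standard deviation stands in for the unknown population value.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(sample, mu0):
    """Return (t statistic, degrees of freedom) for H0: population mean == mu0."""
    n = len(sample)
    # The scaling term is estimated from the data: s / sqrt(n).
    standard_error = stdev(sample) / sqrt(n)
    t_stat = (mean(sample) - mu0) / standard_error
    return t_stat, n - 1


# Hypothetical measurements tested against a null mean of 2.
t_stat, dof = one_sample_t([1, 2, 3, 4, 5], 2)
print(f"t = {t_stat:.4f} with {dof} degrees of freedom")
```

Turning the statistic into a p-value requires the *t*-distribution's cumulative distribution function, which is not in Python's standard library; a statistics package would normally supply it.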

Z-test

A *Z*-test is any statistical test for which the distribution of the test statistic under the null hypothesis can be approximated by a normal distribution. *Z*-tests test the mean of a distribution. For each significance level in the confidence interval, the *Z*-test has a single critical value (for example, 1.96 for 5% two-tailed), which makes it more convenient than the Student's *t*-test, whose critical values are defined by the sample size (through the corresponding degrees of freedom). The *Z*-test and Student's *t*-test are similar in that both help determine the significance of a set of data. However, the *Z*-test is rarely used in practice because the population standard deviation is difficult to determine.

Applicability: Because of the central limit theorem, many test statistics are approximately normally distributed for large samples. Therefore, many statistical tests can be conveniently performed as approximate *Z*-tests if the sample size is large or the populat ...
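
A minimal sketch of a one-sample, two-tailed *Z*-test follows, assuming the population standard deviation is known. The function name `z_test` and the numbers are hypothetical. It also recovers the 1.96 critical value quoted above from the standard normal quantile function.

```python
from math import sqrt
from statistics import NormalDist


def z_test(sample_mean: float, mu0: float, sigma: float, n: int):
    """Return (z statistic, two-tailed p-value) with known population sigma."""
    z = (sample_mean - mu0) / (sigma / sqrt(n))
    # Two-tailed p-value from the standard normal distribution.
    p_value = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    return z, p_value


# Critical value for a 5% two-tailed test: the 97.5% standard normal quantile.
critical = NormalDist().inv_cdf(0.975)

# Hypothetical study: mean 103 from n = 100, null mean 100, known sigma 15.
z, p = z_test(103.0, 100.0, 15.0, 100)
print(f"critical = {critical:.3f}, z = {z:.2f}, p = {p:.4f}")
```

Because the critical value does not depend on the sample size, the same threshold applies to every sample, which is the convenience the text describes.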

Scalar (mathematics)

A scalar is an element of a field which is used to define a *vector space*. In linear algebra, real numbers or generally elements of a field are called scalars and relate to vectors in an associated vector space through the operation of scalar multiplication (defined in the vector space), in which a vector can be multiplied by a scalar in the defined way to produce another vector. Generally speaking, a vector space may be defined by using any field instead of real numbers (such as complex numbers). Then scalars of that vector space will be elements of the associated field (such as complex numbers). A scalar product operation (not to be confused with scalar multiplication) may be defined on a vector space, allowing two vectors to be multiplied in the defined way to produce a scalar. A vector space equipped with a scalar product is called an inner product space. A quantity described by multiple scalars, such as having both direction and magnitude, is called a ...

Test Statistic

A test statistic is a statistic (a quantity derived from the sample) used in statistical hypothesis testing (Berger, R. L.; Casella, G. (2001). *Statistical Inference*, Duxbury Press, Second Edition, p. 374). A hypothesis test is typically specified in terms of a test statistic, considered as a numerical summary of a data-set that reduces the data to one value that can be used to perform the hypothesis test. In general, a test statistic is selected or defined in such a way as to quantify, within observed data, behaviours that would distinguish the null from the alternative hypothesis, where such an alternative is prescribed, or that would characterize the null hypothesis if there is no explicitly stated alternative hypothesis. An important property of a test statistic is that its sampling distribution under the null hypothesis must be calculable, either exactly or approximately, which allows *p*-values to be calculated. A *test statistic* shares some of the same qualities o ...

Bayes Factors

The Bayes factor is a ratio of two competing statistical models represented by their marginal likelihood, and is used to quantify the support for one model over the other. The models in question can have a common set of parameters, such as a null hypothesis and an alternative, but this is not necessary; for instance, it could also be a non-linear model compared to its linear approximation. The Bayes factor can be thought of as a Bayesian analog to the likelihood-ratio test, but since it uses the (integrated) marginal likelihood instead of the maximized likelihood, both tests only coincide under simple hypotheses (e.g., two specific parameter values). Also, in contrast with null hypothesis significance testing, Bayes factors support evaluation of evidence *in favor* of a null hypothesis, rather than only allowing the null to be rejected or not rejected. Although conceptually simple, the computation of the Bayes factor can be challenging depending on the complexity of the model ...
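
One case where the marginal likelihoods have a closed form is a binomial experiment comparing a point null against a uniform prior on the success probability. The sketch below uses that setup purely as an assumed illustration: the function name `bayes_factor_01`, the uniform prior, and the data are not from the text. Under the uniform prior, the marginal likelihood of k successes in n trials is 1/(n + 1).

```python
from math import comb


def bayes_factor_01(k: int, n: int, theta0: float = 0.5) -> float:
    """Bayes factor for H0: theta == theta0 against H1: theta ~ Uniform(0, 1)."""
    # Under a point null the marginal likelihood is just the binomial pmf.
    marginal_h0 = comb(n, k) * theta0**k * (1 - theta0) ** (n - k)
    # Under the uniform prior, integrating the binomial pmf over theta
    # gives 1 / (n + 1) for every k.
    marginal_h1 = 1.0 / (n + 1)
    return marginal_h0 / marginal_h1


# Hypothetical data: 5 successes in 10 trials.
print(f"BF01 = {bayes_factor_01(5, 10):.3f}")
```

A value above 1 counts as evidence for the null relative to the alternative, which illustrates how a Bayes factor can support a null hypothesis instead of merely failing to reject it.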

Likelihood Principle

In statistics, the likelihood principle is the proposition that, given a statistical model, all the evidence in a sample relevant to model parameters is contained in the likelihood function. A likelihood function arises from a probability density function considered as a function of its distributional parameterization argument. For example, consider a model which gives the probability density function $f_X(x \mid \theta)$ of observable random variable $X$ as a function of a parameter $\theta$. Then for a specific value $x$ of $X$, the function $\mathcal{L}(\theta \mid x) = f_X(x \mid \theta)$ is a likelihood function of $\theta$: it gives a measure of how "likely" any particular value of $\theta$ is, if we know that $X$ has the value $x$. The density function may be a density with respect to counting measure, i.e. a probability mass function. Two likelihood functions are *equivalent* if one is a scalar multiple of the other. The like ...
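
A classic way to see two equivalent likelihood functions is to compare a binomial design with a negative binomial design that yield the same counts. The sketch below is an assumed illustration with hypothetical function names and data (3 successes in 12 trials). The two likelihoods differ only by a constant factor, so their ratio is the same at every value of $\theta$.

```python
from math import comb


def binomial_likelihood(theta: float, k: int = 3, n: int = 12) -> float:
    """Likelihood when n trials were fixed in advance and k successes were seen."""
    return comb(n, k) * theta**k * (1 - theta) ** (n - k)


def negative_binomial_likelihood(theta: float, k: int = 3, n: int = 12) -> float:
    """Likelihood when sampling stopped at the k-th success, reached on trial n."""
    return comb(n - 1, k - 1) * theta**k * (1 - theta) ** (n - k)


# The ratio is constant in theta, so the two likelihoods are equivalent.
ratios = [
    binomial_likelihood(t) / negative_binomial_likelihood(t)
    for t in (0.1, 0.25, 0.5, 0.9)
]
print(ratios)
```

Here the constant is the ratio of the two binomial coefficients, 220/55 = 4.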

Confidence Intervals

In frequentist statistics, a confidence interval (CI) is a range of estimates for an unknown parameter. A confidence interval is computed at a designated *confidence level*; the 95% confidence level is most common, but other levels, such as 90% or 99%, are sometimes used. The confidence level represents the long-run proportion of corresponding CIs that contain the true value of the parameter. For example, out of all intervals computed at the 95% level, 95% of them should contain the parameter's true value. Factors affecting the width of the CI include the sample size, the variability in the sample, and the confidence level. All else being the same, a larger sample produces a narrower confidence interval, greater variability in the sample produces a wider confidence interval, and a higher confidence level produces a wider confidence interval.

Definition: Let $X$ be a random sample from a probability distribution with statistical parameter $\theta$, which is a quantity to be estimated ...
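
The long-run interpretation of the confidence level can be checked with a small simulation. This is a sketch under stated assumptions: normally distributed data with a known standard deviation, a fixed random seed, and hypothetical parameter values and function name (`coverage`). It counts how often the interval for the mean contains the true mean.

```python
import random
from math import sqrt
from statistics import NormalDist, mean


def coverage(true_mean=10.0, sigma=2.0, n=25, level=0.95, trials=2000, seed=0):
    """Fraction of simulated known-sigma intervals that contain true_mean."""
    rng = random.Random(seed)
    # Normal quantile matching the requested two-sided confidence level.
    z = NormalDist().inv_cdf(0.5 + level / 2.0)
    half_width = z * sigma / sqrt(n)
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        centre = mean(sample)
        if centre - half_width <= true_mean <= centre + half_width:
            hits += 1
    return hits / trials


print(f"observed coverage: {coverage():.3f}")
```

The observed fraction should land close to the nominal level. In this setup the half-width is `z * sigma / sqrt(n)`, so raising `n` narrows the interval, while raising `sigma` or `level` widens it.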