
Patience Sort

In computer science, patience sorting is a sorting algorithm inspired by, and named after, the card game patience. A variant of the algorithm efficiently computes the length of a longest increasing subsequence in a given array.

Overview

The algorithm's name derives from a simplified variant of the patience card game. The game begins with a shuffled deck of cards. The cards are dealt one by one into a sequence of piles on the table, according to the following rules.

1. Initially, there are no piles. The first card dealt forms a new pile consisting of the single card.
2. Each subsequent card is placed on the leftmost existing pile whose top card has a value greater than or equal to the new card's value, or to the right of all of the existing piles, thus forming a new pile.
3. When there are no more cards remaining to deal, the game ends.

This card game is turned into a two-phase sorting algorithm, as follows. Given an array of elements from some totally ordered domain, consider this ...
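The dealing rules above can be sketched in Python. This is a minimal illustration, not a reference implementation: the names `deal_piles` and `patience_sort` are hypothetical, and because the excerpt is cut off before the second phase is described, the merge step here is an assumption (a k-way merge of the piles, which is the usual way the piles are combined).

```python
from bisect import bisect_left
import heapq


def deal_piles(cards):
    """Phase 1: deal cards into piles using the leftmost-pile rule."""
    piles = []  # each pile is a list, bottom card first
    tops = []   # tops[i] is the top card of piles[i]; stays strictly increasing
    for card in cards:
        # Leftmost pile whose top card is >= the new card.
        i = bisect_left(tops, card)
        if i == len(piles):
            # No such pile: start a new one to the right of all piles.
            piles.append([card])
            tops.append(card)
        else:
            piles[i].append(card)
            tops[i] = card
    return piles


def patience_sort(cards):
    """Phase 2 (assumed): merge the piles into one sorted list."""
    piles = deal_piles(cards)
    # Each pile is non-increasing from bottom to top, so reading it
    # top-down yields a non-decreasing run that heapq.merge can combine.
    return list(heapq.merge(*(reversed(pile) for pile in piles)))


deck = [3, 1, 4, 1, 5, 9, 2, 6]
print(len(deal_piles(deck)))  # number of piles
print(patience_sort(deck))
```

Because the pile tops stay in increasing order from left to right, a binary search finds the target pile, and the number of piles equals the length of a longest strictly increasing subsequence of the input.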

Sorting Algorithm

In computer science, a sorting algorithm is an algorithm that puts elements of a list into an order. The most frequently used orders are numerical order and lexicographical order, and either ascending or descending. Efficient sorting is important for optimizing the efficiency of other algorithms (such as search and merge algorithms) that require input data to be in sorted lists. Sorting is also often useful for canonicalizing data and for producing human-readable output. Formally, the output of any sorting algorithm must satisfy two conditions:

1. The output is in monotonic order (each element is no smaller/larger than the previous element, according to the required order).
2. The output is a permutation (a reordering, yet retaining all of the original elements) of the input.

For optimum efficiency, the input data should be stored in a data structure which allows random access rather than one that allows only sequential access.

History and concepts

From the beginning of c ...
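The two formal conditions can be checked mechanically. The sketch below is illustrative only: `is_valid_sort` is a hypothetical helper, it assumes ascending order, and it assumes the elements are hashable so that `collections.Counter` can compare the two multisets.

```python
from collections import Counter


def is_valid_sort(original, output):
    """Return True if `output` is a valid ascending sort of `original`."""
    # Condition 1: monotonic order (no element is smaller than its predecessor).
    monotonic = all(a <= b for a, b in zip(output, output[1:]))
    # Condition 2: permutation (same elements with the same multiplicities).
    permutation = Counter(original) == Counter(output)
    return monotonic and permutation


data = [3, 1, 2, 1]
print(is_valid_sort(data, sorted(data)))  # both conditions hold
print(is_valid_sort(data, [1, 2, 3]))     # ordered, but one element was dropped
```

Checking only the first condition is a common testing mistake: an output that silently drops or duplicates elements can still be perfectly ordered.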

Worst-case

In computer science, best, worst, and average cases of a given algorithm express what the resource usage is *at least*, *at most* and *on average*, respectively. Usually the resource being considered is running time, i.e. time complexity, but could also be memory or some other resource. Best case is the function which performs the minimum number of steps on input data of n elements. Worst case is the function which performs the maximum number of steps on input data of size n. Average case is the function which performs an average number of steps on input data of n elements. In real-time computing, the worst-case execution time is often of particular concern since it is important to know how much time might be needed *in the worst case* to guarantee that the algorithm will always finish on time. Average performance and worst-case performance are the most used in algorithm analysis. Less widely found is best-case performance, but it does have uses: for example, where the ...
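A small experiment makes the three cases concrete. Linear search is used here purely as an illustrative algorithm (it is not named in the text above), and `linear_search_steps` is a hypothetical helper that counts element comparisons as "steps".

```python
def linear_search_steps(items, target):
    """Count how many elements are examined before `target` is found."""
    steps = 0
    for item in items:
        steps += 1
        if item == target:
            break
    return steps


n = 10
data = list(range(n))

best = linear_search_steps(data, data[0])    # target is the first element
worst = linear_search_steps(data, data[-1])  # target is the last element
# Average over every possible target, each assumed equally likely.
average = sum(linear_search_steps(data, t) for t in data) / n

print(best, worst, average)
```

For this algorithm the best case is constant, the worst case grows linearly with n, and the average under a uniform choice of target is (n + 1) / 2.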

Comparison Sorts

Comparison or comparing is the act of evaluating two or more things by determining the relevant, comparable characteristics of each thing, and then determining which characteristics of each are similar to the other, which are different, and to what degree. Where characteristics are different, the differences may then be evaluated to determine which thing is best suited for a particular purpose. The description of similarities and differences found between the two things is also called a comparison. Comparison can take many distinct forms, varying by field. To compare things, they must have characteristics that are similar enough in relevant ways to merit comparison. If two things are too different to compare in a useful way, an attempt to compare them is colloquially referred to in English as "comparing apples and oranges." Comparison is widely used in society, in science and in the arts.

General usage

Comparison is a natural activity, which even animals engage in when deci ...

Process Control

Industrial process control in continuous production processes is a discipline that uses industrial control systems to achieve a production level of consistency, economy and safety which could not be achieved purely by human manual control. It is implemented widely in industries such as automotive, mining, dredging, oil refining, pulp and paper manufacturing, chemical processing and power generating plants. There is a wide range of size, type and complexity, but it enables a small number of operators to manage complex processes to a high degree of consistency. The development of large industrial process control systems was instrumental in enabling the design of large high volume and complex processes, which could not be otherwise economically or safely operated. The applications can range from controlling the temperature and level of a single process vessel, to a complete chemical processing plant with several thousand control loops.

History

Early process control breakthr ...

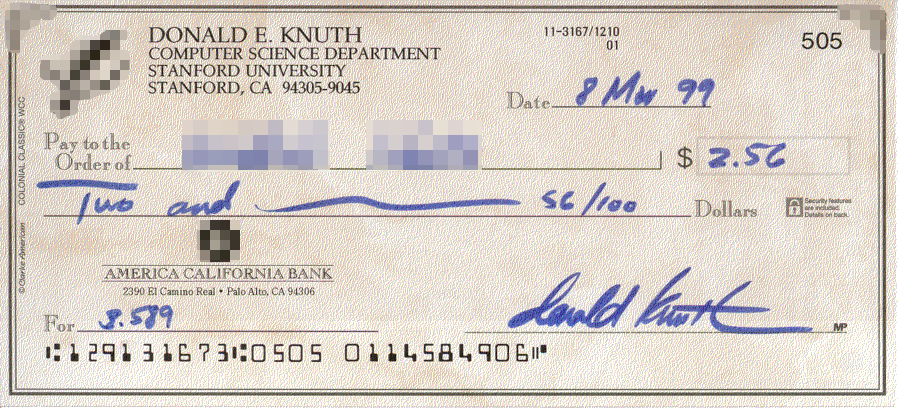

Donald Knuth

Donald Ervin Knuth (born January 10, 1938) is an American computer scientist, mathematician, and professor emeritus at Stanford University. He is the 1974 recipient of the ACM Turing Award, informally considered the Nobel Prize of computer science. Knuth has been called the "father of the analysis of algorithms". He is the author of the multi-volume work *The Art of Computer Programming* and contributed to the development of the rigorous analysis of the computational complexity of algorithms and systematized formal mathematical techniques for it. In the process, he also popularized the asymptotic notation. In addition to fundamental contributions in several branches of theoretical computer science, Knuth is the creator of the TeX computer typesetting system, the related METAFONT font definition language and rendering system, and the Computer Modern family of typefaces. As a writer and scholar, Knuth created the WEB and CWEB computer programming systems designed to ...

Robert W

The name Robert is an ancient Germanic given name, from Proto-Germanic "fame" and "bright" (*Hrōþiberhtaz*). Compare Old Dutch *Robrecht* and Old High German *Hrodebert* (a compound of *Hruod* (Old Norse: *Hróðr*) "fame, glory, honour, praise, renown" and *berht* "bright, light, shining"). It is the second most frequently used given name of ancient Germanic origin. It is also in use as a surname. Another commonly used form of the name is Rupert. After becoming widely used in Continental Europe it entered England in its Old French form *Robert*, where an Old English cognate form (*Hrēodbēorht*, *Hrodberht*, *Hrēodbēorð*, *Hrœdbœrð*, *Hrœdberð*, *Hrōðberχtŕ*) had existed before the Norman Conquest. The feminine version is Roberta. The Italian, Portuguese, and Spanish form is Roberto. Robert is also a common name in many Germanic languages, including English, German, Dutch, Norwegian, Swedish ...

Data Structure

In computer science, a data structure is a data organization, management, and storage format that is usually chosen for efficient access to data. More precisely, a data structure is a collection of data values, the relationships among them, and the functions or operations that can be applied to the data, i.e., it is an algebraic structure about data.

Usage

Data structures serve as the basis for abstract data types (ADT). The ADT defines the logical form of the data type. The data structure implements the physical form of the data type. Different types of data structures are suited to different kinds of applications, and some are highly specialized to specific tasks. For example, relational databases commonly use B-tree indexes for data retrieval, while compiler implementations usually use hash tables to look up identifiers. Data structures provide a means to manage large amounts of data efficiently for uses such a ...

Asymptotic

In analytic geometry, an asymptote of a curve is a line such that the distance between the curve and the line approaches zero as one or both of the *x* or *y* coordinates tends to infinity. In projective geometry and related contexts, an asymptote of a curve is a line which is tangent to the curve at a point at infinity. The word asymptote is derived from the Greek ἀσύμπτωτος (*asumptōtos*) which means "not falling together", from ἀ priv. + σύν "together" + πτωτ-ός "fallen". The term was introduced by Apollonius of Perga in his work on conic sections, but in contrast to its modern meaning, he used it to mean any line that does not intersect the given curve. There are three kinds of asymptotes: *horizontal*, *vertical* and *oblique*. For curves given by the graph of a function *y* = *f*(*x*), horizontal asymptotes are horizontal lines that the graph of the function approaches as *x* tends to +∞ or −∞. Vertical asymptotes are vertical lines near which the ...

Back-pointer

In computer science, a pointer is an object in many programming languages that stores a memory address. This can be that of another value located in computer memory, or in some cases, that of memory-mapped computer hardware. A pointer *references* a location in memory, and obtaining the value stored at that location is known as *dereferencing* the pointer. As an analogy, a page number in a book's index could be considered a pointer to the corresponding page; dereferencing such a pointer would be done by flipping to the page with the given page number and reading the text found on that page. The actual format and content of a pointer variable is dependent on the underlying computer architecture. Using pointers significantly improves performance for repetitive operations, like traversing iterable data structures (e.g. strings, lookup tables, control tables and tree structures). In particular, it is often much cheaper in time and space to copy and dereference poin ...

Greedy Algorithm

A greedy algorithm is any algorithm that follows the problem-solving heuristic of making the locally optimal choice at each stage. In many problems, a greedy strategy does not produce an optimal solution, but a greedy heuristic can yield locally optimal solutions that approximate a globally optimal solution in a reasonable amount of time. For example, a greedy strategy for the travelling salesman problem (which is of high computational complexity) is the following heuristic: "At each step of the journey, visit the nearest unvisited city." This heuristic does not intend to find the best solution, but it terminates in a reasonable number of steps; finding an optimal solution to such a complex problem typically requires unreasonably many steps. In mathematical optimization, greedy algorithms optimally solve combinatorial problems having the properties of matroids and give constant-factor approximations to optimization problems with the submodular structure.

Specifics

Greedy algo ...
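The nearest-unvisited-city heuristic quoted above can be sketched as follows. The function name `nearest_neighbour_tour`, the representation of cities as 2-D coordinate tuples, and the tie-breaking by lowest index are all assumptions made for illustration.

```python
import math


def nearest_neighbour_tour(points, start=0):
    """Greedy tour: from the current city, always go to the nearest unvisited one."""
    unvisited = set(range(len(points))) - {start}
    tour = [start]
    while unvisited:
        here = points[tour[-1]]
        # Locally optimal choice: the closest remaining city (ties go to the lower index).
        nxt = min(unvisited, key=lambda i: (math.dist(here, points[i]), i))
        tour.append(nxt)
        unvisited.remove(nxt)
    return tour


cities = [(0, 0), (5, 0), (1, 0), (2, 0)]
print(nearest_neighbour_tour(cities))
```

Each step looks only at the current city, so the loop finishes after a quadratic number of distance evaluations, but nothing guarantees that the resulting tour is the shortest one.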

Information Processing Letters

*Information Processing Letters* is a peer-reviewed scientific journal in the field of computer science, published by Elsevier. The aim of the journal is to enable fast dissemination of results in the field of information processing in the form of short papers. Submissions are limited to nine double-spaced pages. Both theoretical and experimental research is covered. The journal was established in 1971 and is published semi-monthly.

Van Emde Boas Tree

A van Emde Boas tree, also known as a vEB tree or van Emde Boas priority queue, is a tree data structure which implements an associative array with m-bit integer keys. It was invented by a team led by Dutch computer scientist Peter van Emde Boas in 1975. It performs all operations in O(log m) time, or equivalently in O(log log M) time, where M = 2^m is the size of the key universe (keys range from 0 to M − 1). The parameter M is not to be confused with the actual number of elements stored in the tree, by which the performance of other tree data-structures is often measured. The vEB tree has poor space efficiency. For example, for storing 32-bit integers (i.e., when m = 32), it requires M = 2^32 bits of storage. However, similar data structures with equally good time efficiency and O(n) space exist, where n is the number of stored elements.

Supported operations

A vEB supports the operations of an *ordered associative array*, which includes the usual associative array operations along with two more *order* operations, *F ...
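The 32-bit example can be worked through numerically. The sketch below assumes the standard bounds for a vEB tree over m-bit keys (a universe of M = 2^m keys, operations in O(log m) time, and storage on the order of M bits); `veb_costs` is a hypothetical helper that only does the arithmetic and does not implement the tree.

```python
import math


def veb_costs(m):
    """Return (universe size M, log2(m) recursion depth, storage in MiB) for m-bit keys."""
    universe = 2 ** m                     # M = 2^m possible keys
    depth = math.log2(m)                  # log m = log log M levels of recursion
    storage_mib = universe / 8 / 2 ** 20  # M bits converted to mebibytes
    return universe, depth, storage_mib


universe, depth, storage_mib = veb_costs(32)
print(universe, depth, storage_mib)
```

For m = 32 this gives a depth of 5 against a storage cost of 512 MiB, regardless of how few keys are actually stored, which is the trade-off the paragraph describes.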