
Oversampling And Undersampling In Data Analysis

In statistics, oversampling and undersampling in data analysis are techniques used to adjust the class distribution of a data set (i.e. the ratio between the different classes or categories represented). The terms are used in statistical sampling, in survey design methodology, and in machine learning. Oversampling and undersampling are opposite and roughly equivalent techniques. There are also more complex oversampling techniques, including the creation of artificial data points with algorithms such as the synthetic minority oversampling technique.

Motivation for oversampling and undersampling

Both oversampling and undersampling involve introducing a bias to select more samples from one class than from another, to compensate for an imbalance that is either already present in the data or likely to develop if a purely random sample were taken. Data imbalance can be of the following types:

1. Under-representation of a class in one or more important predictor variables. Suppose ...
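As an illustration of the two basic techniques described above, the following is a minimal sketch of random oversampling and random undersampling using only the Python standard library. The function name `resample`, its `mode` parameter, and the toy data are assumptions made for this example; they are not taken from the article or from any particular library.

```python
import random
from collections import Counter, defaultdict


def resample(rows, labels, mode, seed=0):
    """Balance classes by random oversampling or random undersampling.

    mode="over":  duplicate randomly chosen rows of the smaller classes
                  until every class matches the largest class.
    mode="under": keep a random subset of the larger classes so that
                  every class matches the smallest class.
    """
    if mode not in ("over", "under"):
        raise ValueError("mode must be 'over' or 'under'")
    rng = random.Random(seed)

    # Group the rows by their class label.
    by_class = defaultdict(list)
    for row, label in zip(rows, labels):
        by_class[label].append(row)

    sizes = [len(members) for members in by_class.values()]
    target = max(sizes) if mode == "over" else min(sizes)

    out_rows, out_labels = [], []
    for label, members in by_class.items():
        if mode == "over":
            # Keep all originals, then add random duplicates (with replacement).
            picked = members + rng.choices(members, k=target - len(members))
        else:
            # Draw without replacement down to the target size.
            picked = rng.sample(members, target)
        out_rows.extend(picked)
        out_labels.extend([label] * target)
    return out_rows, out_labels


# Hypothetical imbalanced data: nine rows of class "a", three of class "b".
rows = list(range(12))
labels = ["a"] * 9 + ["b"] * 3

_, over_labels = resample(rows, labels, "over")
_, under_labels = resample(rows, labels, "under")
print(Counter(over_labels))   # both classes now have 9 rows
print(Counter(under_labels))  # both classes now have 3 rows
```

Oversampling keeps every original row at the cost of exact duplicates, while undersampling discards rows of the larger class; which trade-off is acceptable depends on how much data is available.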

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006), The Oxford Dictionary of Statistical Terms, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An ex ...

Data Set

A data set (or dataset) is a collection of data. In the case of tabular data, a data set corresponds to one or more database tables, where every column of a table represents a particular variable, and each row corresponds to a given record of the data set in question. The data set lists values for each of the variables, such as the height and weight of an object, for each member of the data set. Data sets can also consist of a collection of documents or files. In the open data discipline, a data set is the unit used to measure the information released in a public open data repository. The European data.europa.eu portal aggregates more than a million data sets. Other issues (real-time data sources, non-relational data sets, etc.) increase the difficulty of reaching a consensus about it.

Properties

Several characteristics define a data set's structure and properties. These include the number and types of the attributes or variables, and various statistical measures applica ...

Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as in medicine, email filtering, speech recognition, agriculture, and computer vision, where it is difficult or unfeasible to develop conventional algorithms to perform the needed tasks (Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F., "Voronoi-Based Multi-Robot Autonomous Exploration in Unknown Environments via Deep Reinforcement Learning", IEEE Transactions on Vehicular Technology, 2020). A subset of machine learning is closely related to computational statistics, which focuses on making pred ...

Synthetic Minority Oversampling Technique

Synthetic things are composed of multiple parts, often with the implication that they are artificial. In particular, 'synthetic' may refer to:

Science
* Synthetic chemical or compound, produced by the process of chemical synthesis
* Synthetic organic compounds, synthetic chemical compounds based on carbon (organic compounds)
* Synthetic peptide
* Synthetic biology
* Synthetic elements, chemical elements that are not naturally found on Earth and therefore have to be created in experiments

Industry
* Synthetic fuel
* Synthetic oil
* Synthetic marijuana
* Synthetic diamond
* Synthetic fibers, cloth or other material made from substances other than natural (animal, plant) materials

Other
* Synthetic position, a concept in finance
* Synthetic-aperture radar, a type of radar
* Analytic–synthetic distinction, in philosophy
* Synthetic language in linguistics, inflected or agglutinative languages
* Synthetic intelligence, a term emphasizing that true intelligence expressed by computi ...

GitHub

GitHub, Inc. is an Internet hosting service for software development and version control using Git. It provides the distributed version control of Git plus access control, bug tracking, software feature requests, task management, continuous integration, and wikis for every project. Headquartered in California, it has been a subsidiary of Microsoft since 2018. It is commonly used to host open source software development projects. As of June 2022, GitHub reported having over 83 million developers and more than 200 million repositories, including at least 28 million public repositories. It is the largest source code host.

History

GitHub.com

Development of the GitHub.com platform began on October 19, 2007. The site was launched in April 2008 by Tom Preston-Werner, Chris Wanstrath, P. J. Hyett and Scott Chacon after it had been made available for a few months prior as a beta release. GitHub has an annual keynote called GitHub Universe. Org ...

Bias

Bias is a disproportionate weight in favor of or against an idea or thing, usually in a way that is closed-minded, prejudicial, or unfair. Biases can be innate or learned. People may develop biases for or against an individual, a group, or a belief. In science and engineering, a bias is a systematic error. Statistical bias results from an unfair sampling of a population, or from an estimation process that does not give accurate results on average.

Etymology

The word appears to derive from Old Provençal into Old French biais, "sideways, askance, against the grain", whence comes French biais, "a slant, a slope, an oblique". It seems to have entered English via the game of bowls, where it referred to balls made with a greater weight on one side. This expanded to the figurative use, "a one-sided tendency of the mind", and, at first especially in law, "undue propensity or prejudice".

Types of bias

Cognitive biases

A cognitive bias is a repeating or basic m ...

Stratified Sampling

In statistics, stratified sampling is a method of sampling from a population which can be partitioned into subpopulations. In statistical surveys, when subpopulations within an overall population vary, it could be advantageous to sample each subpopulation (stratum) independently. Stratification is the process of dividing members of the population into homogeneous subgroups before sampling. The strata should define a partition of the population. That is, the strata should be collectively exhaustive and mutually exclusive: every element in the population must be assigned to one and only one stratum. Then simple random sampling is applied within each stratum. The objective is to improve the precision of the sample by reducing sampling error. It can produce a weighted mean that has less variability than the arithmetic mean of a simple random sample of the population. In computational statistics, stratified sampling is a method of variance reduction when Monte Carlo metho ...
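The procedure described above (partition the population into strata, then apply simple random sampling within each stratum) can be sketched as follows. This is a minimal illustration that assumes proportional allocation, meaning the same sampling fraction is applied in every stratum. The function name `stratified_sample`, the `stratum_of` callback, and the urban/rural population are hypothetical.

```python
import random
from collections import defaultdict


def stratified_sample(population, stratum_of, fraction, seed=0):
    """Draw a simple random sample of the given fraction within each stratum."""
    rng = random.Random(seed)

    # Partition the population: every unit lands in exactly one stratum.
    strata = defaultdict(list)
    for unit in population:
        strata[stratum_of(unit)].append(unit)

    sample = []
    for members in strata.values():
        # Proportional allocation, with at least one unit per stratum.
        k = max(1, round(fraction * len(members)))
        sample.extend(rng.sample(members, k))  # sampling without replacement
    return sample


# Hypothetical population of 80 urban and 20 rural units.
population = [("urban", i) for i in range(80)] + [("rural", i) for i in range(20)]
sample = stratified_sample(population, lambda unit: unit[0], fraction=0.1)

urban = sum(1 for unit in sample if unit[0] == "urban")
rural = sum(1 for unit in sample if unit[0] == "rural")
print(urban, rural)  # 8 urban and 2 rural units
```

Unlike a simple random sample of 10 units, which could by chance contain no rural units at all, the stratified draw fixes the share of each stratum in advance.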

Data Cleansing

Data cleansing or data cleaning is the process of detecting and correcting (or removing) corrupt or inaccurate records from a record set, table, or database. It refers to identifying incomplete, incorrect, inaccurate or irrelevant parts of the data and then replacing, modifying, or deleting the dirty or coarse data. Data cleansing may be performed interactively with data wrangling tools, or as batch processing through scripting or a data quality firewall. After cleansing, a data set should be consistent with other similar data sets in the system. The inconsistencies detected or removed may have been originally caused by user entry errors, by corruption in transmission or storage, or by different data dictionary definitions of similar entities in different stores. Data cleaning differs from data validation in that validation almost invariably means data is rejected from the system at entry and is performed at the time of entry, rather than on batches of data. The actual pro ...

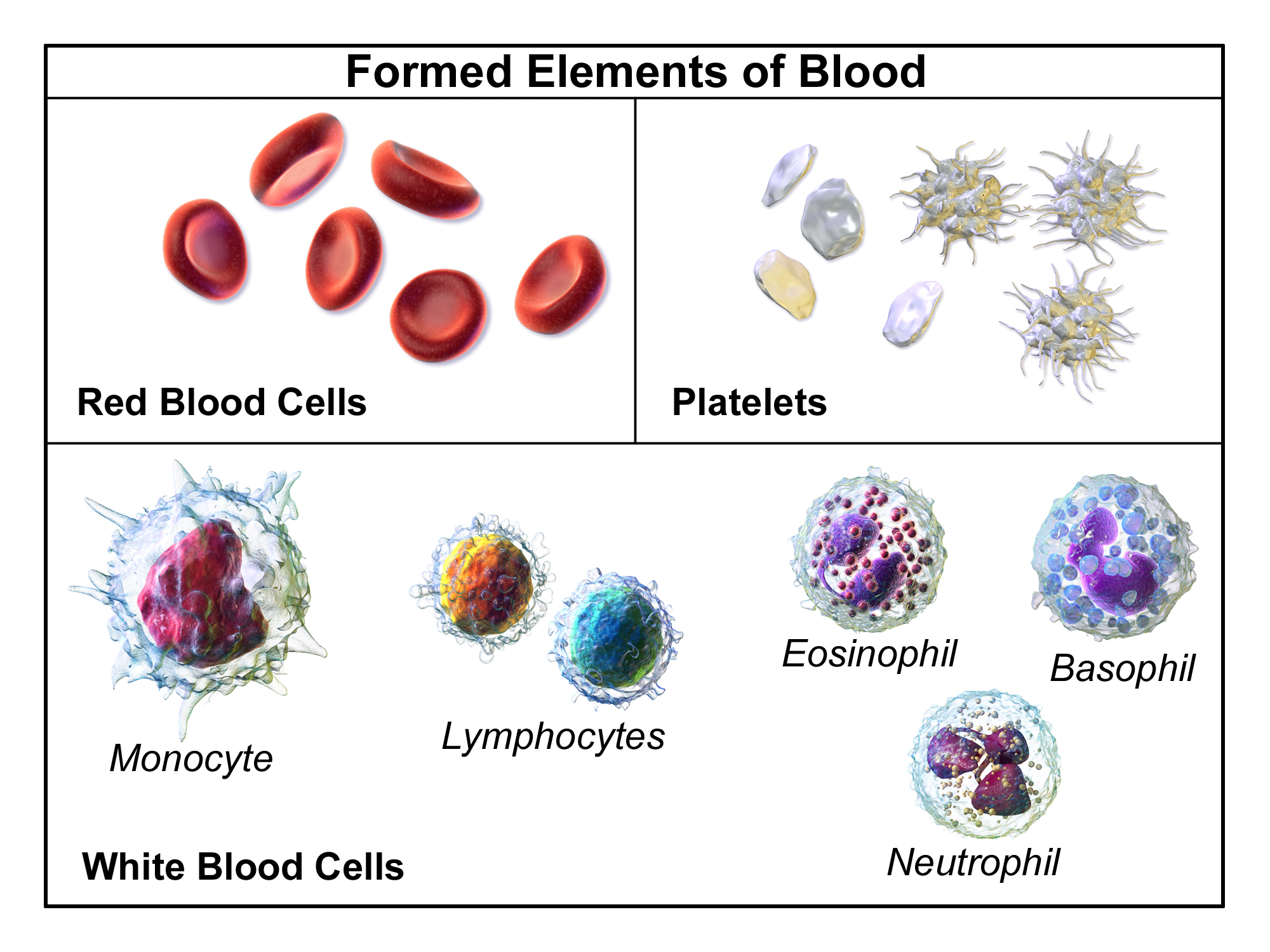

Complete Blood Count

A complete blood count (CBC), also known as a full blood count (FBC), is a set of medical laboratory tests that provide information about the cells in a person's blood. The CBC indicates the counts of white blood cells, red blood cells and platelets, the concentration of hemoglobin, and the hematocrit (the volume percentage of red blood cells). The red blood cell indices, which indicate the average size and hemoglobin content of red blood cells, are also reported, and a white blood cell differential, which counts the different types of white blood cells, may be included. The CBC is often carried out as part of a medical assessment and can be used to monitor health or diagnose diseases. The results are interpreted by comparing them to reference ranges, which vary with sex and age. Conditions like anemia and thrombocytopenia are defined by abnormal complete blood count results. The red blood cell indices can provide information about the cause of a person's anemia such as ...

Regularization (mathematics)

In mathematics, statistics, finance, and computer science, particularly in machine learning and inverse problems, regularization is a process that changes the resulting answer to be "simpler". It is often used to obtain results for ill-posed problems or to prevent overfitting. Although regularization procedures can be divided in many ways, the following delineation is particularly helpful:

* Explicit regularization is regularization whenever one explicitly adds a term to the optimization problem. These terms could be priors, penalties, or constraints. Explicit regularization is commonly employed with ill-posed optimization problems. The regularization term, or penalty, imposes a cost on the optimization function to make the optimal solution unique.
* Implicit regularization is all other forms of regularization. This includes, for example, early stopping, using a robust loss function, and discarding outliers. Implicit regularization is essentially ubiquitous in modern machine learning ...
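To make explicit regularization concrete, here is a small sketch that adds a squared penalty on the slope of a one-variable least-squares fit without an intercept (a ridge-style penalty, chosen here for illustration and not named in the text above). Minimizing sum((y - w*x)^2) + lam * w^2 gives the closed form w = sum(x*y) / (sum(x*x) + lam). The function name `ridge_slope` and the data are assumptions for this example.

```python
def ridge_slope(xs, ys, lam):
    """Slope w minimizing sum((y - w*x)**2) + lam * w**2 (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    # The penalty lam is added to the denominator, shrinking w toward zero.
    return sxy / (sxx + lam)


# Hypothetical data lying exactly on the line y = 2x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w_unpenalized = ridge_slope(xs, ys, lam=0.0)   # 28 / 14 = 2.0
w_penalized = ridge_slope(xs, ys, lam=14.0)    # 28 / 28 = 1.0
print(w_unpenalized, w_penalized)
```

With `lam > 0` the denominator is strictly positive even when every `x` is zero, which is one simple sense in which a penalty can make an otherwise ill-posed problem have a unique solution.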

Overfitting

In mathematical modeling, overfitting is "the production of an analysis that corresponds too closely or exactly to a particular set of data, and may therefore fail to fit to additional data or predict future observations reliably". An overfitted model is a mathematical model that contains more parameters than can be justified by the data. The essence of overfitting is to have unknowingly extracted some of the residual variation (i.e., the noise) as if that variation represented underlying model structure. Underfitting occurs when a mathematical model cannot adequately capture the underlying structure of the data. An underfitted model is a model where some parameters or terms that would appear in a correctly specified model are missing. Underfitting would occur, for example, when fitting a linear model to non-linear data. Such a model will tend to have poor predictive performance. The possibility of overfitting exists because the criterion used for selecting the model is no ...
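The effect can be reproduced on a toy problem. The sketch below, under assumptions chosen for illustration, fits six noisy points from the line y = 2x in two ways: with a degree-5 interpolating polynomial (six parameters, evaluated in Lagrange form) and with an ordinary least-squares line (two parameters). The data, the alternating +1/-1 noise, and all function names are hypothetical.

```python
def lagrange_eval(xs, ys, x):
    """Evaluate the polynomial that passes through every (xs[i], ys[i]) at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mse(predictions, targets):
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


# Training data: y = 2x plus alternating noise of +1 and -1.
train_x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
train_y = [2 * x + (1 if i % 2 == 0 else -1) for i, x in enumerate(train_x)]

# Test data: noise-free values of the underlying line between the training points.
test_x = [0.5, 1.5, 2.5, 3.5, 4.5]
test_y = [2 * x for x in test_x]

slope, intercept = fit_line(train_x, train_y)

interp_train = mse([lagrange_eval(train_x, train_y, x) for x in train_x], train_y)
interp_test = mse([lagrange_eval(train_x, train_y, x) for x in test_x], test_y)
line_train = mse([slope * x + intercept for x in train_x], train_y)
line_test = mse([slope * x + intercept for x in test_x], test_y)

print("interpolant: train", interp_train, "test", interp_test)
print("line:        train", line_train, "test", line_test)
```

The interpolating polynomial reproduces the training points essentially exactly, noise included, yet its error on the unseen points is larger than that of the straight line, which matches the description of noise being mistaken for model structure.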

Data Augmentation

Data augmentation in data analysis is a set of techniques used to increase the amount of data by adding slightly modified copies of already existing data or newly created synthetic data from existing data. It acts as a regularizer and helps reduce overfitting when training a machine learning model. It is closely related to oversampling in data analysis.

Synthetic oversampling techniques for traditional machine learning

Data augmentation for image classification

Introducing new synthetic images

If a dataset is very small, then a version augmented with rotation, mirroring, etc. may still not be enough for a given problem. Another solution is the sourcing of entirely new, synthetic images through various techniques, for example the use of generative adversarial networks to create new synthetic images for data augmentation. Additionally, image recognition algorithms show improvement when transferring from images rendered in virtual environments to real-world data. Data augmenta ...
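The rotation and mirroring mentioned above can be sketched without any imaging library by treating an image as a list of pixel rows. This is a minimal illustration only; the helper names `mirror`, `rotate90`, and `augment` and the 2x2 "image" are assumptions for this example.

```python
def mirror(image):
    """Flip the image left to right."""
    return [row[::-1] for row in image]


def rotate90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(column) for column in zip(*image[::-1])]


def augment(image):
    """Return the four rotations of the image and the mirror of each one."""
    variants = []
    current = image
    for _ in range(4):
        variants.append(current)
        variants.append(mirror(current))
        current = rotate90(current)
    return variants


# Hypothetical 2x2 grayscale image with four distinct pixel values.
image = [[1, 2],
         [3, 4]]

variants = augment(image)
print(len(variants))    # 8 variants from one original
print(rotate90(image))  # [[3, 1], [4, 2]]
```

Every variant carries the same label as the original image, so one labelled example becomes eight; whether such transformations preserve the label depends on the task (for instance, mirroring changes the meaning of handwritten digits or text).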