
Orthographic Rules

Morphological parsing, in natural language processing, is the process of determining the morphemes from which a given word is constructed. It must be able to distinguish between orthographic rules and morphological rules. For example, the word 'foxes' can be decomposed into 'fox' (the stem) and 'es' (a suffix indicating plurality). The generally accepted approach to morphological parsing is the use of a finite-state transducer (FST), which takes words as input and outputs their stems and modifiers. The FST is initially created through algorithmic parsing of some word source, such as a dictionary, complete with modifier markups. Another approach is an indexed lookup method, which uses a constructed radix tree. This route is not often taken because it breaks down for morphologically complex languages. With the advancement of neural networks in natural language processing, it has become less common to use FSTs for morphological analysis, especially for languages for ...
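
To make the "stem plus modifiers" output concrete, here is a minimal Python sketch of what a morphological parser might return for 'foxes'. It is not an FST: it hard-codes a hypothetical four-word lexicon and uses illustrative tags (`+N`, `+SG`, `+PL`) that do not come from the text. Unlike the decomposition above, which treats 'es' as the suffix, it assumes a common alternative analysis in which the plural suffix is '-s' and the 'e' is supplied by an English spelling (orthographic) rule after s, z, x, ch and sh. That split is one way to see why the two kinds of rule are kept apart.

```python
# Hypothetical toy lexicon: stem -> part-of-speech tag (not from the source).
LEXICON = {"fox": "N", "box": "N", "cat": "N", "dog": "N"}

# Assumed English spelling rule: an "e" is inserted between a stem ending in
# s, z, x, ch or sh and the plural "-s" (fox + s -> foxes).
SIBILANT_ENDINGS = ("s", "z", "x", "ch", "sh")


def parse(word):
    """Return every analysis of `word` as a 'stem+POS+NUMBER' string."""
    analyses = []
    # Bare stem: analysed as a singular form.
    if word in LEXICON:
        analyses.append(f"{word}+{LEXICON[word]}+SG")
    # Plural after a sibilant: strip "es" (the "e" comes from the spelling rule).
    if word.endswith("es"):
        stem = word[:-2]
        if stem in LEXICON and stem.endswith(SIBILANT_ENDINGS):
            analyses.append(f"{stem}+{LEXICON[stem]}+PL")
    # Ordinary plural: strip "s", but only where the spelling rule does not apply.
    if word.endswith("s"):
        stem = word[:-1]
        if stem in LEXICON and not stem.endswith(SIBILANT_ENDINGS):
            analyses.append(f"{stem}+{LEXICON[stem]}+PL")
    return analyses


for w in ["foxes", "cats", "fox", "foxs"]:
    print(w, "->", parse(w))
```

Here 'foxes' yields `fox+N+PL` and 'cats' yields `cat+N+PL`, while the misspelling 'foxs' gets no analysis at all, because the assumed orthographic rule demands the inserted 'e'. A real FST-based analyser would encode the lexicon and the spelling rule as transducers rather than as `if` statements.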

Natural Language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation.

Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence", which proposed what is now called the Turing test as a criterion of intelligence, t ...

Morphemes

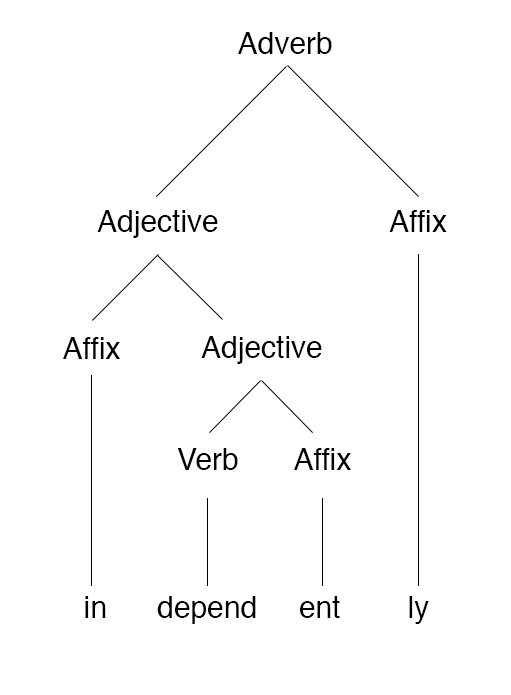

A morpheme is the smallest meaningful constituent of a linguistic expression. The field of linguistic study dedicated to morphemes is called morphology. In English, morphemes are often but not necessarily words. Morphemes that stand alone are considered roots (such as the morpheme *cat*); other morphemes, called affixes, are found only in combination with other morphemes. For example, the *-s* in *cats* indicates the concept of plurality but is always bound to another morpheme (here *cat*), which specifies what is plural. This distinction is not universal and does not apply to, for example, Latin, in which many roots cannot stand alone. For instance, the Latin root *reg-* (‘king’) must always be suffixed with a case marker: *rex* (*reg-s*), *reg-is*, *reg-i*, etc. For a language like Latin, a root can be defined as the main lexical morpheme of a word. These sample English words have the following morphological analyses:

* "Unbreakable" is composed of three m ...
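
The free/bound distinction can be sketched as a tiny segmenter in Python. The inventories below are hypothetical and chosen only to mirror the examples above: *cat* is a free root, Latin *reg-* is a bound root, and *-s*, *-is*, *-i* are bound suffixes. A bare root is accepted as a word only if it is free.

```python
# Hypothetical inventories chosen to mirror the examples above (not a real lexicon).
FREE_ROOTS = {"cat", "dog"}   # roots that can stand alone as words
BOUND_ROOTS = {"reg"}         # Latin reg- 'king': always needs a case ending
SUFFIXES = {"s", "is", "i"}   # bound affixes (English plural, Latin case endings)


def segment(word):
    """Return all (root, suffix) splits of `word`; suffix is '' for a bare root."""
    splits = []
    for cut in range(1, len(word) + 1):
        root, suffix = word[:cut], word[cut:]
        if not suffix:
            # A root with nothing attached is a word only if the root is free.
            if root in FREE_ROOTS:
                splits.append((root, ""))
        elif suffix in SUFFIXES and (root in FREE_ROOTS or root in BOUND_ROOTS):
            splits.append((root, suffix))
    return splits


for w in ["cat", "cats", "regis", "reg"]:
    print(w, "->", segment(w))
```

*cats* splits into `("cat", "s")` and *regis* into `("reg", "is")`, but a bare *reg* gets no analysis because the root is bound. The sketch cannot handle *rex* (*reg-s*), where the spelling changes (g + s is written x); that needs an orthographic rule on top of plain concatenation.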

Finite State Transducer

A finite-state transducer (FST) is a finite-state machine with two memory *tapes*, following the terminology for Turing machines: an input tape and an output tape. This contrasts with an ordinary finite-state automaton, which has a single tape. An FST is a type of finite-state automaton (FSA) that maps between two sets of symbols. An FST is more general than an FSA: an FSA defines a formal language by defining a set of accepted strings, while an FST defines relations between sets of strings. An FST reads a set of strings on the input tape and generates a set of relations on the output tape. An FST can be thought of as a translator or relater between strings in a set. In morphological parsing, for example, a string of letters is fed into the FST, which then outputs a string of morphemes.

An automaton can be said to *recognize* a string if we view the content of its tape as input. In other words, the automaton computes a function that maps ...
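
The two-tape idea can be sketched as a minimal nondeterministic FST in Python. Assumptions, none of which come from the text: every arc reads exactly one input symbol and writes a (possibly empty) output string, there are no epsilon-input arcs, and a hypothetical end-of-word marker `#` lets the machine emit a tag once the whole word has been read. The class name, the example states, and the `+N+SG`/`+N+PL` tags are illustrative.

```python
from collections import defaultdict


class FST:
    """A minimal nondeterministic finite-state transducer (illustrative sketch)."""

    def __init__(self, start, finals):
        self.start = start
        self.finals = set(finals)
        # (state, input symbol) -> list of (next state, output string)
        self.arcs = defaultdict(list)

    def add_arc(self, src, symbol, dst, output):
        self.arcs[(src, symbol)].append((dst, output))

    def transduce(self, tape):
        """Return the set of output strings the FST relates to input `tape`."""
        # A configuration pairs the current state with the output written so far.
        configs = {(self.start, "")}
        for symbol in tape:
            configs = {
                (dst, out + emitted)
                for state, out in configs
                for dst, emitted in self.arcs.get((state, symbol), [])
            }
        # Keep only outputs of paths that end in an accepting state.
        return {out for state, out in configs if state in self.finals}


# Relate the letter strings "cat#" and "cats#" to morpheme strings.
fst = FST(start=0, finals={5})
for i, ch in enumerate("cat"):
    fst.add_arc(i, ch, i + 1, ch)   # copy the stem letters unchanged
fst.add_arc(3, "#", 5, "+N+SG")     # word ends right after the stem
fst.add_arc(3, "s", 4, "")          # read the plural suffix without output...
fst.add_arc(4, "#", 5, "+N+PL")     # ...then emit the plural tag at the end

print(fst.transduce("cat#"), fst.transduce("cats#"), fst.transduce("cap#"))
```

Because `transduce` returns a set, the same code handles ambiguous inputs that relate to several outputs; an input with no accepting path, such as 'cap#', maps to the empty set.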

Radix Tree

In computer science, a radix tree (also radix trie, compact prefix tree, or compressed trie) is a data structure that represents a space-optimized trie (prefix tree) in which each node that is the only child is merged with its parent. The result is that the number of children of every internal node is at most the radix $r$ of the radix tree, where $r$ is a positive integer and a power of 2, $r = 2^x$ with $x \ge 1$. Unlike regular trees, edges can be labeled with sequences of elements as well as single elements. This makes radix trees much more efficient for small sets (especially if the strings are long) and for sets of strings that share long prefixes. Unlike regular trees (where whole keys are compared *en masse* from their beginning up to the point of inequality), the key at each node is compared chunk-of-bits by chunk-of-bits, where each chunk at that node holds $x = \log_2 r$ bits. When $r$ is 2, the radix trie is binary (i.e., compare that node's 1-bit porti ...
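
For the indexed lookup approach to morphological parsing mentioned earlier, a word list could be stored in a radix tree. The Python sketch below uses a character-based variant: edges are labeled with strings rather than fixed-size chunks of bits, a common simplification that departs from the bit-chunk description above. It supports only insertion and exact-match lookup, and the class and method names are hypothetical.

```python
class RadixNode:
    """A node whose outgoing edges are labeled with non-empty strings."""

    def __init__(self):
        self.children = {}   # edge label -> child node
        self.is_key = False  # True if a stored key ends at this node


def common_prefix_len(a, b):
    """Length of the longest common prefix of strings a and b."""
    n = 0
    while n < min(len(a), len(b)) and a[n] == b[n]:
        n += 1
    return n


class RadixTree:
    def __init__(self):
        self.root = RadixNode()

    def insert(self, key):
        node = self.root
        while key:
            for label, child in node.children.items():
                n = common_prefix_len(label, key)
                if n == 0:
                    continue  # edges leaving a node start with distinct letters
                if n < len(label):
                    # Split the edge "label" into label[:n] -> label[n:].
                    middle = RadixNode()
                    middle.children[label[n:]] = child
                    del node.children[label]
                    node.children[label[:n]] = middle
                    child = middle
                node, key = child, key[n:]
                break  # stop iterating: the dict may have just been modified
            else:
                # No edge shares a prefix with the key: add the rest as one edge.
                node.children[key] = RadixNode()
                node, key = node.children[key], ""
        node.is_key = True

    def contains(self, key):
        node = self.root
        while key:
            for label, child in node.children.items():
                if key.startswith(label):
                    node, key = child, key[len(label):]
                    break
            else:
                return False
        return node.is_key


tree = RadixTree()
for word in ["fox", "foxes", "form"]:
    tree.insert(word)

print(sorted(tree.root.children))  # the shared prefix "fo" is stored on one edge
print(tree.contains("foxes"), tree.contains("fo"), tree.contains("fork"))
```

Inserting 'form' after 'fox' splits the edge 'fox' into 'fo' followed by 'x' and 'rm', which is the node merging that makes the tree compact. 'fo' is a path through the tree but not a stored key, so `contains("fo")` is false.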

Neural Networks

A neural network is a network or circuit of biological neurons, or, in a modern sense, an artificial neural network, composed of artificial neurons or nodes. Thus, a neural network is either a biological neural network, made up of biological neurons, or an artificial neural network, used for solving artificial intelligence (AI) problems. The connections of the biological neuron are modeled in artificial neural networks as weights between nodes. A positive weight reflects an excitatory connection, while negative values mean inhibitory connections. All inputs are modified by a weight and summed. This activity is referred to as a linear combination. Finally, an activation function controls the amplitude of the output. For example, an acceptable range of output is usually between 0 and 1, or between −1 and 1. These artificial networks may be used for predictive modeling, adaptive control, and applications where they can be trained via a dataset. Self-learning resulting from e ...
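
The weighted sum followed by an activation function fits in a few lines of Python. This sketch assumes a bias term (not mentioned in the text) and offers the logistic sigmoid for the 0-to-1 output range and tanh for the −1-to-1 range; the function name and parameters are hypothetical.

```python
import math


def neuron(inputs, weights, bias=0.0, activation="sigmoid"):
    """Output of one artificial neuron: weighted sum followed by an activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Linear combination: each input is scaled by its weight, then all are summed.
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    if activation == "sigmoid":
        # Logistic function, output in (0, 1); split by sign to avoid overflow.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)
    if activation == "tanh":
        return math.tanh(z)  # output in (-1, 1)
    raise ValueError(f"unknown activation: {activation!r}")


# A positive (excitatory) weight on the first input and a negative
# (inhibitory) weight on the second cancel out here, so z = 0.
print(neuron([1.0, 2.0], [0.5, -0.25]))                     # 0.5
print(neuron([1.0, 2.0], [0.5, -0.25], activation="tanh"))  # 0.0
```

Raising an input that has a positive weight pushes the output up, and raising one with a negative weight pulls it down, which is the excitatory/inhibitory distinction described above.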

Training Data

In machine learning, a common task is the study and construction of algorithms that can learn from and make predictions on data. Such algorithms function by making data-driven predictions or decisions, through building a mathematical model from input data. The input data used to build the model are usually divided into multiple data sets. In particular, three data sets are commonly used in different stages of the creation of the model: training, validation, and test sets. The model is initially fit on a training data set, which is a set of examples used to fit the parameters (e.g. weights of connections between neurons in artificial neural networks) of the model. The model (e.g. a naive Bayes classifier) is trained on the training data set using a supervised learning method, for example using optimization methods such as gradient descent or stochastic gradient descent. In practice, the training data set often consists of pairs of an input vector (or scalar) and the corresponding ...
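
The three-way division can be sketched as a shuffled split in Python. The default fractions (70% training, 15% validation, remainder test), the function name, and the fixed seed are assumptions for illustration, not values from the text; the example data are hypothetical (input, target) pairs.

```python
import random


def split_dataset(examples, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle `examples` and split them into training, validation and test sets.

    Whatever is left after the training and validation fractions becomes the
    test set. The default fractions are illustrative, not a recommendation.
    """
    if train_frac <= 0 or val_frac < 0 or train_frac + val_frac >= 1:
        raise ValueError("need train_frac > 0, val_frac >= 0, and their sum < 1")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> reproducible split
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Hypothetical (input, target) pairs.
data = [(x, 2 * x) for x in range(100)]
train, val, test = split_dataset(data)
print(len(train), len(val), len(test))  # 70 15 15
```

Shuffling before splitting avoids putting, say, all early examples in the training set; the three sets are disjoint, so the test set stays unseen while the parameters are fit on the training set.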

Language Models

A language model is a probability distribution over sequences of words. Given any sequence of words of length $m$, a language model assigns a probability $P(w_1,\ldots,w_m)$ to the whole sequence. Language models generate probabilities by training on text corpora in one or many languages. Given that languages can be used to express an infinite variety of valid sentences (the property of digital infinity), language modeling faces the problem of assigning non-zero probabilities to linguistically valid sequences that may never be encountered in the training data. Several modeling approaches have been designed to surmount this problem, such as applying the Markov assumption or using neural architectures such as recurrent neural networks or transformers. Language models are useful for a variety of problems in computational linguistics: from initial applications in speech recognition, to ensure nonsensical (i.e. low-probability) word sequences are not predicted, to wider use in machine tra ...
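
As one instance of the Markov assumption, a bigram model replaces the exact chain rule $P(w_1,\ldots,w_m) = \prod_{i=1}^{m} P(w_i \mid w_1,\ldots,w_{i-1})$ with the approximation $\prod_{i=1}^{m} P(w_i \mid w_{i-1})$, taking $w_0$ to be a start marker. The Python sketch below estimates each factor by raw counts (maximum likelihood) from a hypothetical three-sentence corpus, and, by a common convention, also multiplies in the probability of an end marker. It deliberately uses no smoothing, so it exhibits the zero-probability problem described above.

```python
from collections import Counter


def pad(sentence):
    """Tokenize on whitespace and add assumed start/end markers."""
    return ["<s>"] + sentence.split() + ["</s>"]


def train_bigram(corpus):
    """Count contexts and bigrams in a list of sentences."""
    contexts, bigrams = Counter(), Counter()
    for sentence in corpus:
        tokens = pad(sentence)
        contexts.update(tokens[:-1])                  # every token with a successor
        bigrams.update(zip(tokens[:-1], tokens[1:]))  # adjacent token pairs
    return contexts, bigrams


def sentence_prob(sentence, contexts, bigrams):
    """P(sentence) under the bigram Markov assumption, unsmoothed MLE."""
    tokens = pad(sentence)
    p = 1.0
    for prev, word in zip(tokens[:-1], tokens[1:]):
        if contexts[prev] == 0:
            return 0.0  # context never seen in training
        p *= bigrams[(prev, word)] / contexts[prev]
    return p


corpus = ["the fox runs", "the cat runs", "the fox sleeps"]  # hypothetical
contexts, bigrams = train_bigram(corpus)
print(sentence_prob("the fox runs", contexts, bigrams))    # 1 * 2/3 * 1/2 * 1 = 1/3
print(sentence_prob("the cat sleeps", contexts, bigrams))  # 0.0: "cat sleeps" unseen
```

'the cat sleeps' is a perfectly valid sentence, yet it receives probability zero because the bigram 'cat sleeps' never occurs in the training data; smoothing methods or neural models are the usual remedies.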

Word Stem

In linguistics, a word stem is a part of a word responsible for its lexical meaning. The term is used with slightly different meanings depending on the morphology of the language in question. In Athabaskan linguistics, for example, a verb stem is a root that cannot appear on its own and that carries the tone of the word. Athabaskan verbs typically have two stems in this analysis, each preceded by prefixes. In most cases, a word stem is not modified during its declension, while in some languages it can be modified (apophony) according to certain morphological rules or peculiarities, such as sandhi. For example, in Polish: *miasto* ("city"), but *w mieście* ("in the city"). In English: "sing", "sang", "sung". Uncovering and analyzing cognation between word stems and roots within and across languages has allowed comparative philology and comparative linguistics to determine the history of languages and language families.

In one usage, a word stem is a form to which affixes can be attached. T ...
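
The contrast between attaching affixes to a stem and modifying the stem itself (apophony) shows up clearly in a deliberately naive suffix-stripping stemmer, a technique swapped in here for illustration rather than taken from the text. Stripping affixes recovers 'sing' from 'sings' and 'singing', but 'sang' and 'sung' carry no removable affix, so the sketch needs a hand-written exception table. The suffix list and the table are hypothetical.

```python
# Hypothetical suffix list, tried in order; deliberately tiny.
SUFFIXES = ("ing", "s")

# Stem changes by apophony cannot be undone by stripping affixes,
# so they are listed explicitly (hypothetical, English "sing" only).
IRREGULAR = {"sang": "sing", "sung": "sing"}


def stem(word):
    """Return a crude stem for `word`."""
    if word in IRREGULAR:
        return IRREGULAR[word]
    for suffix in SUFFIXES:
        # Keep at least three letters so short words like "is" survive intact.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


for w in ["sing", "sings", "singing", "sang", "sung"]:
    print(w, "->", stem(w))
```

Without the `IRREGULAR` table, 'sang' and 'sung' would come back unchanged, which is why analysers for languages with frequent stem alternation cannot rely on affix stripping alone.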

Modifiers

In linguistics, a modifier is an optional element in phrase structure or clause structure which *modifies* the meaning of another element in the structure. For instance, the adjective "red" acts as a modifier in the noun phrase "red ball", providing extra details about which particular ball is being referred to. Similarly, the adverb "quickly" acts as a modifier in the verb phrase "run quickly". Modification can be considered a high-level domain of the functions of language, on par with predication and reference.

Modifiers may come either before or after the modified element (the *head*), depending on the type of modifier and the rules of syntax for the language in question. A modifier placed before the head is called a premodifier; one placed after the head is called a postmodifier. For example, in *land mines*, the word *land* is a premodifier of *mines*, whereas in the phrase *mines in wartime*, the phrase *in wartime* is a postmodif ...

Grammar

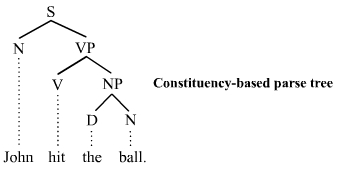

In linguistics, the grammar of a natural language is its set of structural constraints on speakers' or writers' composition of clauses, phrases, and words. The term can also refer to the study of such constraints, a field that includes domains such as phonology, morphology, and syntax, often complemented by phonetics, semantics, and pragmatics. There are currently two different approaches to the study of grammar: traditional grammar and theoretical grammar. Fluent speakers of a language variety or *lect* have effectively internalized these constraints, the vast majority of which – at least in the case of one's native language(s) – are acquired not by conscious study or instruction but by hearing other speakers. Much of this internalization occurs during early childhood; learning a language later ...

Linguistic Morphology

In linguistics, morphology is the study of words, how they are formed, and their relationship to other words in the same language. It analyzes the structure of words and parts of words such as stems, root words, prefixes, and suffixes. Morphology also looks at parts of speech, intonation and stress, and the ways context can change a word's pronunciation and meaning. Morphology differs from morphological typology, which is the classification of languages based on their use of words, and lexicology, which is the study of words and how they make up a language's vocabulary. While words, along with clitics, are generally accepted as being the smallest units of syntax, in most languages, if not all, many words can be related to other words by rules that collectively describe the grammar for that language. For example, English speakers recognize that the words *dog* and *dogs* are closely related, differentiated only by the plurality morpheme "-s", only found bound to noun phr ...