|

Neural Gas

Neural gas is an artificial neural network, inspired by the self-organizing map and introduced in 1991 by Thomas Martinetz and Klaus Schulten. The neural gas is a simple algorithm for finding optimal data representations based on feature vectors. The algorithm was coined "neural gas" because of the dynamics of the feature vectors during the adaptation process, which distribute themselves like a gas within the data space. It is applied where data compression or vector quantization is an issue, for example speech recognition, image processing or pattern recognition. As a robustly converging alternative to the k-means clustering it is also used for cluster analysis. Algorithm Suppose we want to model a probability distribution P(x) of data vectors x using a finite number of feature vectors w_i, where i = 1,\cdots,N. # For each time step t ## Sample data vector x from P(x) ## Compute the distance between x and each feature vector. Rank the distances. ## Let i_0 be the index o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Artificial Neural Network

In machine learning, a neural network (also artificial neural network or neural net, abbreviated ANN or NN) is a computational model inspired by the structure and functions of biological neural networks. A neural network consists of connected units or nodes called '' artificial neurons'', which loosely model the neurons in the brain. Artificial neuron models that mimic biological neurons more closely have also been recently investigated and shown to significantly improve performance. These are connected by ''edges'', which model the synapses in the brain. Each artificial neuron receives signals from connected neurons, then processes them and sends a signal to other connected neurons. The "signal" is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs, called the '' activation function''. The strength of the signal at each connection is determined by a ''weight'', which adjusts during the learning process. Typically, ne ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Pattern Recognition

Pattern recognition is the task of assigning a class to an observation based on patterns extracted from data. While similar, pattern recognition (PR) is not to be confused with pattern machines (PM) which may possess PR capabilities but their primary function is to distinguish and create emergent patterns. PR has applications in statistical data analysis, signal processing, image analysis, information retrieval, bioinformatics, data compression, computer graphics and machine learning. Pattern recognition has its origins in statistics and engineering; some modern approaches to pattern recognition include the use of machine learning, due to the increased availability of big data and a new abundance of processing power. Pattern recognition systems are commonly trained from labeled "training" data. When no labeled data are available, other algorithms can be used to discover previously unknown patterns. KDD and data mining have a larger focus on unsupervised methods and str ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Incremental Learning

In computer science, incremental learning is a method of machine learning in which input data is continuously used to extend the existing model's knowledge i.e. to further train the model. It represents a dynamic technique of supervised learning and unsupervised learning that can be applied when training data becomes available gradually over time or its size is out of system memory limits. Algorithms that can facilitate incremental learning are known as incremental machine learning algorithms. Many traditional machine learning algorithms inherently support incremental learning. Other algorithms can be adapted to facilitate incremental learning. Examples of incremental algorithms include decision trees (IDE4, ID5R angaenari, decision rules, artificial neural networks ( RBF networks, Learn++, Fuzzy ARTMAP, TopoART,Marko Tscherepanow, Marco Kortkamp, and Marc KammerA Hierarchical ART Network for the Stable Incremental Learning of Topological Structures and Associations from Noisy ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Hebbian Learning

Hebbian theory is a neuropsychological theory claiming that an increase in synaptic efficacy arises from a presynaptic cell's repeated and persistent stimulation of a postsynaptic cell. It is an attempt to explain synaptic plasticity, the adaptation of neurons during the learning process. Hebbian theory was introduced by Donald Hebb in his 1949 book '' The Organization of Behavior.'' The theory is also called Hebb's rule, Hebb's postulate, and cell assembly theory. Hebb states it as follows: Let us assume that the persistence or repetition of a reverberatory activity (or "trace") tends to induce lasting cellular changes that add to its stability. ... When an axon of cell ''A'' is near enough to excite a cell ''B'' and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that ''A''’s efficiency, as one of the cells firing ''B'', is increased. The theory is often summarized as "Neurons that fire togethe ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

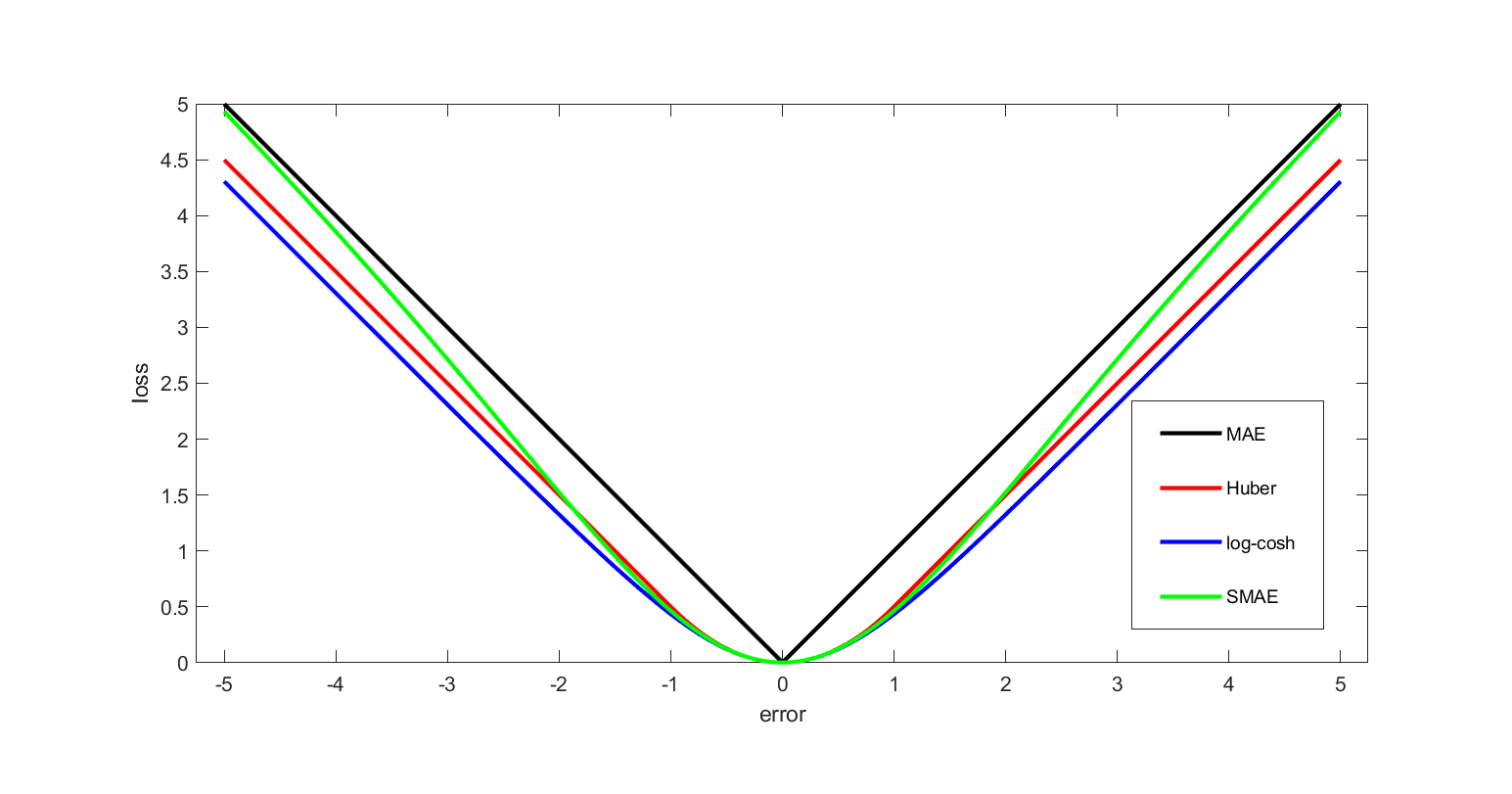

Loss Function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Pierre-Simon Laplace, Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Gradient Descent

Gradient descent is a method for unconstrained mathematical optimization. It is a first-order iterative algorithm for minimizing a differentiable multivariate function. The idea is to take repeated steps in the opposite direction of the gradient (or approximate gradient) of the function at the current point, because this is the direction of steepest descent. Conversely, stepping in the direction of the gradient will lead to a trajectory that maximizes that function; the procedure is then known as ''gradient ascent''. It is particularly useful in machine learning for minimizing the cost or loss function. Gradient descent should not be confused with local search algorithms, although both are iterative methods for optimization. Gradient descent is generally attributed to Augustin-Louis Cauchy, who first suggested it in 1847. Jacques Hadamard independently proposed a similar method in 1907. Its convergence properties for non-linear optimization problems were first studied by Has ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Feature (machine Learning)

In machine learning and pattern recognition, a feature is an individual measurable property or characteristic of a data set. Choosing informative, discriminating, and independent features is crucial to produce effective algorithms for pattern recognition, classification, and regression tasks. Features are usually numeric, but other types such as strings and graphs are used in syntactic pattern recognition, after some pre-processing step such as one-hot encoding. The concept of "features" is related to that of explanatory variables used in statistical techniques such as linear regression. Feature types In feature engineering, two types of features are commonly used: numerical and categorical. Numerical features are continuous values that can be measured on a scale. Examples of numerical features include age, height, weight, and income. Numerical features can be used in machine learning algorithms directly. Categorical features are discrete values that can be grouped into ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Probability Distribution

In probability theory and statistics, a probability distribution is a Function (mathematics), function that gives the probabilities of occurrence of possible events for an Experiment (probability theory), experiment. It is a mathematical description of a Randomness, random phenomenon in terms of its sample space and the Probability, probabilities of Event (probability theory), events (subsets of the sample space). For instance, if is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of would take the value 0.5 (1 in 2 or 1/2) for , and 0.5 for (assuming that fair coin, the coin is fair). More commonly, probability distributions are used to compare the relative occurrence of many different random values. Probability distributions can be defined in different ways and for discrete or for continuous variables. Distributions with special properties or for especially important applications are given specific names. Introduction A prob ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cluster Analysis

Cluster analysis or clustering is the data analyzing technique in which task of grouping a set of objects in such a way that objects in the same group (called a cluster) are more Similarity measure, similar (in some specific sense defined by the analyst) to each other than to those in other groups (clusters). It is a main task of exploratory data analysis, and a common technique for statistics, statistical data analysis, used in many fields, including pattern recognition, image analysis, information retrieval, bioinformatics, data compression, computer graphics and machine learning. Cluster analysis refers to a family of algorithms and tasks rather than one specific algorithm. It can be achieved by various algorithms that differ significantly in their understanding of what constitutes a cluster and how to efficiently find them. Popular notions of clusters include groups with small Distance function, distances between cluster members, dense areas of the data space, intervals or pa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

K-means Clustering

''k''-means clustering is a method of vector quantization, originally from signal processing, that aims to partition of a set, partition ''n'' observations into ''k'' clusters in which each observation belongs to the cluster (statistics), cluster with the nearest mean (cluster centers or cluster centroid), serving as a prototype of the cluster. This results in a partitioning of the data space into Voronoi cells. ''k''-means clustering minimizes within-cluster variances (squared Euclidean distances), but not regular Euclidean distances, which would be the more difficult Weber problem: the mean optimizes squared errors, whereas only the geometric median minimizes Euclidean distances. For instance, better Euclidean solutions can be found using k-medians clustering, ''k''-medians and k-medoids, ''k''-medoids. The problem is computationally difficult (NP-hardness, NP-hard); however, efficient heuristic algorithms converge quickly to a local optimum. These are usually similar to the ex ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Image Processing

An image or picture is a visual representation. An image can be two-dimensional, such as a drawing, painting, or photograph, or three-dimensional, such as a carving or sculpture. Images may be displayed through other media, including a projection on a surface, activation of electronic signals, or digital displays; they can also be reproduced through mechanical means, such as photography, printmaking, or photocopying. Images can also be animated through digital or physical processes. In the context of signal processing, an image is a distributed amplitude of color(s). In optics, the term ''image'' (or ''optical image'') refers specifically to the reproduction of an object formed by light waves coming from the object. A ''volatile image'' exists or is perceived only for a short period. This may be a reflection of an object by a mirror, a projection of a camera obscura, or a scene displayed on a cathode-ray tube. A ''fixed image'', also called a hard copy, is one that ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Self-organizing Map

A self-organizing map (SOM) or self-organizing feature map (SOFM) is an unsupervised machine learning technique used to produce a low-dimensional (typically two-dimensional) representation of a higher-dimensional data set while preserving the topological structure of the data. For example, a data set with p variables measured in n observations could be represented as clusters of observations with similar values for the variables. These clusters then could be visualized as a two-dimensional "map" such that observations in proximal clusters have more similar values than observations in distal clusters. This can make high-dimensional data easier to visualize and analyze. An SOM is a type of artificial neural network but is trained using competitive learning rather than the error-correction learning (e.g., backpropagation with gradient descent) used by other artificial neural networks. The SOM was introduced by the Finnish professor Teuvo Kohonen in the 1980s and therefore is some ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |